Growth rates


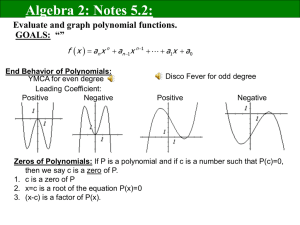

Growth Rates of Functions
3/26/12

Asymptotic Equivalence
• Def: $f(n) \sim g(n)$ iff $\lim_{n\to\infty} \frac{f(n)}{g(n)} = 1$
• For example, $n^2+1 \sim n^2$ (think: $\frac{2}{1}, \frac{5}{4}, \frac{10}{9}, \frac{17}{16}, \ldots$)
• Note that $n^2+1$ is being used to name the function f such that $f(n) = n^2+1$ for every n

An example: Stirling’s formula
$$n! \sim \left(\frac{n}{e}\right)^n \sqrt{2\pi n}$$
("Stirling's approximation")

Little-Oh: f = o(g)
• Def: $f(n) = o(g(n))$ iff $\lim_{n\to\infty} \frac{f(n)}{g(n)} = 0$
• For example, $n^2 = o(n^3)$ since $\lim_{n\to\infty} \frac{n^2}{n^3} = \lim_{n\to\infty} \frac{1}{n} = 0$

= o(∙) is “all one symbol”
• “f = o(g)” is really a strict partial order on functions
• NEVER write “o(g) = f”, etc.

Big-Oh: O(∙)
• Asymptotic order of growth: $f(n) = O(g(n))$ iff $\lim_{n\to\infty} \frac{f(n)}{g(n)} < \infty$ (when the limit exists)
• “f grows no faster than g”
• A weak order: reflexive and transitive, but not antisymmetric (for example, $n = O(2n)$ and $2n = O(n)$), so strictly speaking a preorder rather than a partial order

Growth Order
• $3n^2 + n + 2 = O(n^2)$ because $\lim_{n\to\infty} \frac{3n^2+n+2}{n^2} = 3 < \infty$
• f = o(g) implies f = O(g), because if $\lim_{n\to\infty} \frac{f(n)}{g(n)} = 0$, then $\lim_{n\to\infty} \frac{f(n)}{g(n)} < \infty$
• So, for example, $n + 1 = O(n^2)$

Big-Omega
• f = Ω(g) means g = O(f)
• “f grows at least as quickly as g”

Big-Theta: Θ(∙)
• “Same order of growth”
• $f(n) = \Theta(g(n))$ iff $f(n) = O(g(n))$ and $g(n) = O(f(n))$, or equivalently $f(n) = O(g(n))$ and $f(n) = \Omega(g(n))$
• So, for example, $3n^2 + 2 = \Theta(n^2)$

Rough Paraphrase
• f ∼ g: f and g grow to be roughly equal
• f = o(g): f grows more slowly than g
• f = O(g): f grows at most as quickly as g
• f = Ω(g): f grows at least as quickly as g
• f = Θ(g): f and g grow at the same rate

Equivalent Defn of O(∙)
• “From some point on, the value of f is at most a constant multiple of the value of g”
• $f(n) = O(g(n))$ iff $\exists\, c, n_0$ such that $\forall n \ge n_0:\ f(n) \le c \cdot g(n)$

Three Concrete Examples
• Polynomials
• Logarithmic functions
• Exponential functions

Polynomials
• A (univariate) polynomial is a function such as $f(n) = 3n^5 + 2n^2 - n + 2$ (for all natural numbers n)
• This is a polynomial of degree 5 (the largest exponent)
• Or in general $f(n) = \sum_{i=0}^{d} c_i n^i$, which has degree d when $c_d \ne 0$
• Theorem:
– If a < b, then any polynomial of degree a is o(any polynomial of degree b)
– If a ≤ b, then any polynomial of degree a is O(any polynomial of degree b)

Logarithmic Functions
• A function f is logarithmic if it is $\Theta(\log_b n)$ for some constant b > 1.
• Theorem: All logarithmic functions are Θ of each other, and are Θ(any logarithmic function of a polynomial). For example, $\log_b n = \frac{\log_c n}{\log_c b}$ and $\log(n^k) = k \log n$, so changing the base or raising n to a constant power changes only a constant factor.
• Theorem: Any logarithmic function is o(any polynomial of positive degree)

Exponential Functions
• A function is exponential if it is $\Theta(c^n)$ for some constant c > 1.
• Theorem: Any polynomial is o(any exponential)
• If 0 < c < d, then $c^n = o(d^n)$, since $\frac{c^n}{d^n} = \left(\frac{c}{d}\right)^n \to 0$.

Growth Rates and Analysis of Algorithms
• Let f(n) measure the amount of time taken by an algorithm to solve a problem of size n.
• Most practical algorithms have polynomial running times.
• E.g. sorting algorithms generally have running times that are quadratic (polynomial of degree 2) or less (for example, O(n log n)).
• Exhaustive search over an exponentially growing set of possible answers requires exponential time.

Another way to look at it
• Suppose an algorithm can solve a problem of size S in time T, and you give it twice as much time.
• If the running time is $f(n) = n^2$, so that $T = S^2$, then in time 2T you can solve a problem of size $\sqrt{2} \cdot S$, since $(\sqrt{2}\,S)^2 = 2S^2 = 2T$.
• If the running time is $f(n) = 2^n$, so that $T = 2^S$, then in time 2T you can solve a problem of size S + 1, since $2^{S+1} = 2 \cdot 2^S = 2T$.
• In general, doubling the time available to a polynomial algorithm results in a MULTIPLICATIVE increase in the size of the problem that can be solved (by a factor of $2^{1/k}$ when $f(n) = n^k$).
• But doubling the time available to an exponential algorithm results in an ADDITIVE increase in the size of the problem that can be solved.
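The doubling argument for n² and 2ⁿ running times can be checked numerically. The sketch below is a minimal illustration, not part of the original slides: the helper name `largest_solvable` and the chosen sizes (S = 1000 for n², S = 20 for 2ⁿ) are hypothetical. It assumes the cost function is nondecreasing and eventually exceeds any budget, finds the largest n whose cost fits a time budget by doubling an upper bound and then binary-searching, and compares budgets T = f(S) and 2T.

```python
def largest_solvable(cost, budget):
    """Return the largest n >= 0 with cost(n) <= budget, or -1 if even n = 0 is too costly.

    Hypothetical helper. Assumes cost is nondecreasing in n and eventually
    exceeds the budget (true for running times such as n^2 and 2^n).
    """
    if cost(0) > budget:
        return -1
    # Grow an upper bound by doubling until its cost exceeds the budget.
    hi = 1
    while cost(hi) <= budget:
        hi *= 2
    # Invariant from here on: cost(lo) <= budget < cost(hi).
    lo = hi // 2
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if cost(mid) <= budget:
            lo = mid
        else:
            hi = mid
    return lo


def quadratic(n):
    return n * n  # running time f(n) = n^2


def exponential(n):
    return 2 ** n  # running time f(n) = 2^n


if __name__ == "__main__":
    # Pick a size S, set T = f(S), then see which size fits in time 2T.
    for name, cost, size in [("n^2", quadratic, 1000), ("2^n", exponential, 20)]:
        T = cost(size)
        print(name, largest_solvable(cost, T), largest_solvable(cost, 2 * T))
    # n^2 1000 1414
    # 2^n 20 21
```

For the quadratic cost, doubling the budget from 10⁶ to 2·10⁶ raises the solvable size from 1000 to 1414, roughly √2 times larger; for the exponential cost, doubling 2²⁰ to 2²¹ raises it only from 20 to 21. Binary search is used here simply because it needs nothing beyond monotonicity of the cost.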