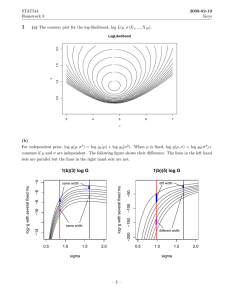

ppt

advertisement

Lecture 2: Parallel Reduction Algorithms & Their Analysis on Different Interconnection Topologies Shantanu Dutt Univ. of Illinois at Chicago An example of an SPMD message-passing parallel program 2 SPMD message-passing parallel program (contd.) node xor D, 3 1 Reduction Computations & Their Parallelization The prior max computation is a reduction computation, defined as x = f(D), where D is a data set (e.g., a vector), and x is a scalar quantity. In the max computation, f is the max function, and D the set/vector of numbers for which the max is to be computed. Reduction computations that are associative [defined as f(a,b,c) = f(f(a,b), c) = f(a, f(b,c))], can be easily parallelized using a recursive reduction approach (the final value of f(D) needs to be at some processor at the end of the parallel computation): The data set D is evenly distributed among P processors—each processor Pi has a disjoint subset Di of D/P data elements. Each processor Pi performs the computation f(Di) on its data set Di. Due to associativity, the final result = f(f(D1), f(D2), …., f(DP)). This is achieved via communication betw. the processors and f computation of successively larger f(Di) sets. A natural communication pattern is that of a binary tree. But a more general pattern is described below. Each processor then engages in (log P) rounds of message passing with some other processors. In the k’th round Pi communicates with a unique partner processor Pj = partner(Pi, k) in which it sends or receives (depending, say, on whether its id is > or < than Pj, resp.) the current f computation result it or Pj contains, resp. If Pi receives a computation result b from Pj in the k’th round, it computes a = f(a,b), where a is its current result, and participates in the (k+1)’th round of commun. If Pi has sent its data to Pj, then it does not participate in any further rounds of communication and computation; it is done with its task. At the end of the (log P) rounds of communication, the processor with the least ID (= 0) will hold f(D). Reduction Computations & Their Parallelization (contd.) Assuming (Pi, Pj), where Pj = partner(Pi, k), is a unique send-recv pair (the pairs may or may not be disjoint from each other) in round k, the # of processors holding the required partial computation results halve after each round, and hence the term recursive halving for such parallel computations. In general, there are other variations of parallel reduction computations (generally dictated by the interconnection topology) in which the # of processors will reduce by some factor other than 2 in each round of communication. The general term for such parallel computations is recursive reduction. A topology independent recursive-halving communication pattern is shown below. Note also that as the # of processors involved halve, the # of initial data sets that each “active” partial result represents/covers double (a recursive doubling of coverage of data sets by each active partial result). Total # of msgs sent is P-1 (Why?). Basic metrics of performance we will use initially: parallel time, speedup (seq. time/parallel time), efficiency (speedup/P = # of procs.), total number or size of msgs Time step 1 0 Time step 1 1 2 3 4 5 Time step 2 Time step 2 Time step 3 Time step 1 Time step 1 6 7 Reduction Computations & Their Parallelization (contd.) A variation of a reduction computation is one in which each processor needs to have the f(D) value. In this case, instead of a send from Pj to its partner in the k’th round Pi (assuming, id(Pi) < id(Pj)), Pi and Pj would exchange their respective data elements a, b, each compute f(a,b) and each engage in the (k+1)’th round of communication with different partners. Thus there is no recursive-halving of the # of processors involved in each subsequent round (the # of participating processors always remains P). However, the # of initial data sets that each active partial result covers recursively doubles in each round as in the recursive-halving computation. The “exchange” communication pattern for a reduction computation is shown below (total # of msgs sent is P(log P)). Time step 1 0 Time step 1 1 2 2 2 3 Time step 1 Time step 1 3 3 4 3 5 6 2 2 3 7 Analysis of Parallel Reduction on Different Topologies Recursive halving based reduction on a hypercube: Initial computation time = Theta(N/P); N= # of data items, P = # processors. Communication time = Theta(log P), as there are (log P) msg passing rounds, in each round all msgs are sent in parallel, each msg is a 1-hop msg., and there is no conflict among msgs Computation time during commun. rounds = Theta(log P) [1 red. oper. in each processor in each round). Note that computation and commun. are sequentialized (e.g., no overlap betw. them), and so need to take the sum of both times to obtain total time. Same comput. and commun. time for exchange commun. on a hypercube Speedup = S(P) = Seq._time/Parallel_time(P) = Theta(N)/[Theta((N/P) + Theta(2*logP))] ~ Theta(P) if N >> P Time steps 3 2 2 3 3 1 1 2 1 1 1 2 1 1 1 2 3 3 2 (a) Hypercubes of dimensions 0 to 4 (b) Msg pattern for a reduction comput. using recursive halving; processor 000 will hold the final result (c) Msg pattern for a reduction comput. using exchange communication; all processors will hold the final result Analysis of Parallel Reduction on Different Topologies (contd). Recursive reduction on a direct tree: Initial Computation time = Theta(N/P); N= # of data items, P = # processors. Communication time = Theta((log (P/2)), as there are (log ((P+1)/2)) msg passing rounds, in each round all msgs are sent in parallel, each msg is a 1hop msg., and there is no conflict among msgs; Computation time during commun. rounds = Theta(2*(log (P/2)) [2 red. opers. in the “parent” processor in each round) = Theta(2*(log P)). Again comput. and commun.not overlapped. Speedup = S(P) = Seq_time/Parallel_time(P) = Theta(N)/[Theta((N/P) + Theta(3*logP) )]~ Theta(P) if N >> P Time steps 2 1 2, 4 2 1 1 Round #, Hops 1 1, 2 1, 2 Recursive reduction in (a) a direct tree network; and (b) an indirect tree network. Analysis of Parallel Reduction on Different Topologies (contd). Recursive reduction on an indirect tree: Initial Computation time = Theta(N/P); N= # of data items, P = # processors. Communication time = 2 + 4 + 6 +…. + 2*(log P) = [(2*((log P)(log P +1)/2)) = (log P)((log P) +1), as there are (log P) msg passing rounds, in round k all msgs are sent in parallel, each msg is a (2*k)-hop msg., and there is no conflict among msgs; Computation time during commun. rounds = Theta(log P) [1 red. opers. in each receiving processor in each round) = Theta(log P) Speedup = S(P) = Seq_time/Parallel_time(P) = Theta(N)/[Theta((N/P) + Theta((log P)^2) + Theta(logP)] ~ Theta(P)) if N >> P Time steps 2 1 2, 4 2 1 1 Round #, Hops 1 1, 2 1, 2 Recursive reduction in (a) a direct tree network; and (b) an indirect tree network.