BARC Report 5/30/96 - Microsoft Research


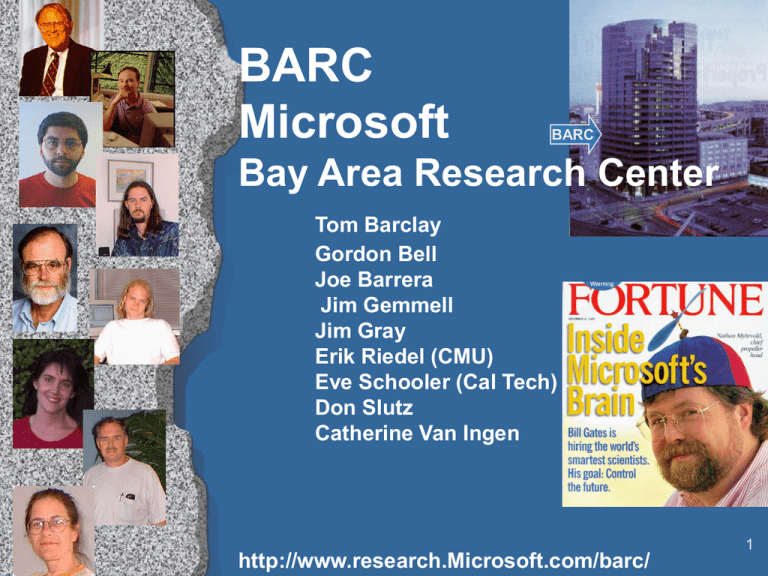

BARC

Microsoft


Bay Area Research Center

Tom Barclay

Gordon Bell

Joe Barrera

Jim Gemmell

Jim Gray

Erik Riedel (CMU)

Eve Schooler (Cal Tech)

Don Slutz

Catherine Van Ingen

http://www.research.Microsoft.com/barc/


Telepresence

• The next killer app

• Space shifting:

» Reduce travel

• Time shifting:

» Retrospective

» Offer condensations

» Just in time meetings.

• Example: ACM 97

» NetShow and Web site.

» More web visitors than attendees

• People-to-People communication


Working with NorCal

An Experiment in Presence

Is being there, then

better than being somewhere else

at some other time?

December 11, 1997


Telework = work + telepresence

“being there while being here”

• The teleworkplace is just an office with limited

» Communication, computer, and network support!

» Team interactions for work!

• Until we understand in situ collaboration, CSCW is a “rat hole”!

» Serendipitous social interaction in hallway, office, coffee place, meeting room, etc.

» Administrative support for helping, filing, sending, etc.

Telepresentations and communication

• Computing environment … being always connected and operational, administrivia, help in managing phones and messages, information (especially paper) management

• SOHOs & COMOHOs are a high-growth market


IP Multicast

• Is pruned broadcast to a multicast address

• Unreliable

• Reliable would require Ack/Nack.

• State or Nack implosion problem

[Figure: multicast tree through routers; legend: sender, receiver, not interested.]


What We Are Doing

• Scalable Reliable Multicast (SRM)

» used by WB (white board) of Mbone

» Nack suppression (backoff)

» N² message traffic to set up

• Error Correcting SRM (EC SRM)

» Do not resend lost packets.

» Send Error Correction in addition to regular packets

» (or) Send Error Correction in response to NACK

» One EC packet repairs any of k lost packets

» Improved scalability (millions of subscribers).


(n,k) encoding

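[Figure: Encode turns the k original packets (1, 2, …, k) into n packets by copying the first k and adding packets k+1, k+2, …, n. Take any k of the n packets, and Decode recovers the k original packets.]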
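The (n,k) property (any k of the n encoded packets recover the k originals) can be illustrated with polynomial interpolation over a small prime field. This is only a sketch under assumptions: the slides do not say which code EC SRM uses, and the names `encode`, `decode`, and the modulus 257 are hypothetical choices made for the example. Each "packet" here is a single symbol.

```python
P = 257  # assumed prime modulus; symbols are integers in 0..256

def _interpolate(points, x):
    """Evaluate at x the unique polynomial of degree < len(points)
    passing through the given (xi, yi) points, modulo P."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        total = (total + yi * num * pow(den, -1, P)) % P
    return total

def encode(data, n):
    """Systematic (n,k) encoding: copy the k originals, then append
    n - k extra symbols taken from the same polynomial."""
    k = len(data)
    points = list(enumerate(data))
    return list(data) + [_interpolate(points, x) for x in range(k, n)]

def decode(received, k):
    """Recover the k originals from any k received symbols.
    `received` maps packet index -> symbol."""
    points = sorted(received.items())[:k]
    return [_interpolate(points, x) for x in range(k)]

data = [10, 20, 30, 40]            # k = 4 original symbols
coded = encode(data, 7)            # n = 7 encoded symbols
survivors = {1: coded[1], 4: coded[4], 5: coded[5], 6: coded[6]}
recovered = decode(survivors, 4)   # equals data
```

The modular inverse via `pow(den, -1, P)` needs Python 3.8 or later. A production code would work on whole packets and usually over GF(2^8) rather than a prime field.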


ECSRM

• Combine suppression & erasure correction

• Assign each packet to an EC group of size k

• NACK: (group, # missing)

• NACK of (g,c) suppresses all (g, x ≤ c).

• Don’t re-send originals; send EC packets using (n,k) encoding

• Below, 1 NACK and one EC packet fix all errors.

[Figure: each receiver loses a different packet (X) out of packets 1 to 7; a single multicast EC packet lets every receiver repair its own loss.]
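The case described above, where each receiver misses a different packet of the same group and one EC packet repairs them all, can be sketched with the simplest erasure code, a single XOR parity packet. This is an assumption for illustration: the slides do not specify the code, and `make_ec_packet`, `repair`, and `nack_to_send` are hypothetical names. The NACK function models only the ideal outcome of suppression, not the backoff timers.

```python
from functools import reduce

def xor_bytes(a, b):
    """Bytewise XOR of two equal-length packets."""
    return bytes(x ^ y for x, y in zip(a, b))

def make_ec_packet(group):
    """EC packet for a group: XOR of all packets (a (k+1, k) code)."""
    return reduce(xor_bytes, group)

def repair(received, ec):
    """Fill in at most one missing packet (None) using the EC packet."""
    missing = [i for i, p in enumerate(received) if p is None]
    if not missing:
        return list(received)
    if len(missing) > 1:
        raise ValueError("one XOR packet repairs only one loss")
    present = [p for p in received if p is not None]
    fixed = list(received)
    fixed[missing[0]] = reduce(xor_bytes, present, ec)
    return fixed

def nack_to_send(group_id, missing_counts):
    """Idealized suppression: a NACK (g, c) suppresses every (g, x <= c),
    so only the largest loss count in the group is reported."""
    worst = max(missing_counts)
    return None if worst == 0 else (group_id, worst)

group = [bytes([i]) * 4 for i in range(1, 8)]   # packets 1..7
ec = make_ec_packet(group)
# Receiver r loses packet r; all receivers share the same EC packet.
views = [[None if i == r else p for i, p in enumerate(group)]
         for r in range(7)]
repaired = [repair(v, ec) for v in views]
nack = nack_to_send(0, [1] * 7)                 # a single NACK: (0, 1)
```

With one parity packet, a receiver that lost two or more packets of the group needs more EC packets, which is where a general (n,k) code comes in.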


Telepresence Prototypes

• PowerCast: multicast PowerPoint

» Streaming - pre-sends next anticipated slide

» Send slides and voice rather than talking head and voice

» Uses ECSRM for reliable multicast

» 1000’s of receivers can join and leave any time.

» No server needed; no pre-load of slides.

» Cooperating with NetShow

• FileCast: multicast file transfer.

» Erasure encodes all packets

» Receivers only need to receive as many bytes

as the length of the file

» Multicast IE to solve Midnight-Madness problem

• NT SRM: reliable IP multicast library for NT


RAGS:

RAndom SQL test Generator

• Microsoft spends a LOT of money on testing.

• Idea: test SQL by

» generating random correct queries

» executing queries against database

» comparing results with SQL 6.5, DB2, Oracle

• Being used in SQL 7.0 testing.

» 185 unique bugs found (since 2/97)

» Very productive test tool
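The generate, execute, compare loop can be sketched in a few lines. This is not RAGS itself: as a stand-in for a second database product, the sketch compares SQLite (via Python's standard `sqlite3` module) against Python's own evaluation of the same expression. The table `t`, its columns `a` and `b`, and all function names are assumptions made for the example.

```python
import random
import sqlite3

def random_expr(rng, depth=0):
    """Build a random, syntactically valid integer expression over a, b."""
    if depth >= 3 or rng.random() < 0.3:
        leaf = rng.choice(["a", "b", str(rng.randint(-9, 9))])
        return f"({leaf})"   # parenthesize so negatives never form '--'
    op = rng.choice(["+", "-", "*"])
    left = random_expr(rng, depth + 1)
    right = random_expr(rng, depth + 1)
    return f"({left} {op} {right})"

def run_differential(seed, trials=50):
    """Run random queries and return those whose results disagree."""
    rng = random.Random(seed)
    rows = [(rng.randint(-5, 5), rng.randint(-5, 5)) for _ in range(10)]
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE t (a INTEGER, b INTEGER)")
    db.executemany("INSERT INTO t VALUES (?, ?)", rows)
    mismatches = []
    for _ in range(trials):
        expr = random_expr(rng)
        sql = f"SELECT {expr} FROM t ORDER BY rowid"
        got = [r[0] for r in db.execute(sql)]
        # Reference result: the same expression evaluated by Python.
        want = [eval(expr, {"a": a, "b": b}) for a, b in rows]
        if got != want:
            mismatches.append(sql)
    db.close()
    return mismatches

failures = run_differential(seed=1)
```

Only `+`, `-`, and `*` on small integers are generated, because those behave identically in both evaluators; division and string functions would need per-engine rules before their results could be compared.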


Sample RAGS Generated Statement

This statement yields an error:

SQLState=37000, Error=8623

Internal Query Processor Error: Query processor could not produce a query plan.

SELECT TOP 3 T1.royalty , T0.price , "Apr 15 1996 10:23AM" , T0.notes

FROM titles T0, roysched T1

WHERE EXISTS (

SELECT DISTINCT TOP 9 $3.11 , "Apr 15 1996 10:23AM" , T0.advance , (

"<v3``VF;" +(( UPPER(((T2.ord_num +"22\}0G3" )+T2.ord_num ))+("{1FL6t15m" +

RTRIM( UPPER((T1.title_id +((("MlV=Cf1kA" +"GS?" )+T2.payterms )+T2.payterms

))))))+(T2.ord_num +RTRIM((LTRIM((T2.title_id +T2.stor_id ))+"2" ))))),

T0.advance , (((-(T2.qty ))/(1.0 ))+(((-(-(-1 )))+( DEGREES(T2.qty )))-(-((

-4 )-(-(T2.qty ))))))+(-(-1 ))

FROM sales T2

WHERE EXISTS (

SELECT "fQDs" , T2.ord_date , AVG ((-(7 ))/(1 )), MAX (DISTINCT

-1 ), LTRIM("0I=L601]H" ), ("jQ\" +((( MAX(T3.phone )+ MAX((RTRIM( UPPER(

T5.stor_name ))+((("<" +"9n0yN" )+ UPPER("c" ))+T3.zip ))))+T2.payterms )+

MAX("\?" )))

FROM authors T3, roysched T4, stores T5

WHERE EXISTS (

SELECT DISTINCT TOP 5 LTRIM(T6.state )

FROM stores T6

WHERE ( (-(-(5 )))>= T4.royalty ) AND (( (

( LOWER( UPPER((("9W8W>kOa" +

T6.stor_address )+"{P~" ))))!= ANY (

SELECT TOP 2 LOWER(( UPPER("B9{WIX" )+"J" ))

FROM roysched T7

WHERE ( EXISTS (

SELECT (T8.city +(T9.pub_id +((">" +T10.country )+

UPPER( LOWER(T10.city))))), T7.lorange ,

((T7.lorange )*((T7.lorange )%(-2 )))/((-5 )-(-2.0 ))

FROM publishers T8, pub_info T9, publishers T10

WHERE ( (-10 )<= POWER((T7.royalty )/(T7.lorange ),1))

AND (-1.0 BETWEEN (-9.0 ) AND (POWER(-9.0 ,0.0)) )

)

--EOQ

) AND (NOT (EXISTS (

SELECT MIN (T9.i3 )

FROM roysched T8, d2 T9, stores T10

WHERE ( (T10.city + LOWER(T10.stor_id )) BETWEEN (("QNu@WI" +T10.stor_id

)) AND ("DT" ) ) AND ("R|J|" BETWEEN ( LOWER(T10.zip )) AND (LTRIM(

UPPER(LTRIM( LOWER(("_\tk`d" +T8.title_id )))))) )

GROUP BY T9.i3, T8.royalty, T9.i3

HAVING -1.0 BETWEEN (SUM (-( SIGN(-(T8.royalty ))))) AND (COUNT(*))

)

--EOQ

) )

)

--EOQ

) AND (((("i|Uv=" +T6.stor_id )+T6.state )+T6.city ) BETWEEN ((((T6.zip +(

UPPER(("ec4L}rP^<" +((LTRIM(T6.stor_name )+"fax<" )+("5adWhS" +T6.zip ))))

+T6.city ))+"" )+"?>_0:Wi" )) AND (T6.zip ) ) ) AND (T4.lorange BETWEEN (

3 ) AND (-(8 )) ) )

)

--EOQ

GROUP BY ( LOWER(((T3.address +T5.stor_address )+REVERSE((T5.stor_id +LTRIM(

T5.stor_address )))))+ LOWER((((";z^~tO5I" +"" )+("X3FN=" +(REVERSE((RTRIM(

LTRIM((("kwU" +"wyn_S@y" )+(REVERSE(( UPPER(LTRIM("u2C[" ))+T4.title_id ))+(

RTRIM(("s" +"1X" ))+ UPPER((REVERSE(T3.address )+T5.stor_name )))))))+

"6CRtdD" ))+"j?]=k" )))+T3.phone ))), T5.city, T5.stor_address

)

--EOQ

ORDER BY 1, 6, 5

)


Reduced Statement Causes Same Error

SELECT roysched.royalty

FROM titles, roysched

WHERE EXISTS (

SELECT DISTINCT TOP 1 titles.advance

FROM sales

ORDER BY 1)

• Next steps:

» Auto-Simplify failure cases

» Compare outputs with other products

» Extend to other parts of SQL

» Patents
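One way to approach the "Auto-Simplify failure cases" step is a greedy reducer: drop one piece of the failing statement at a time and keep the smaller version whenever the failure still reproduces. The sketch below is a hypothetical illustration, not the method planned for RAGS; `simplify` and the `still_fails` predicate are assumed names, and a real predicate would rebuild the SQL text and re-run it against the server.

```python
def simplify(parts, still_fails):
    """Greedily remove parts while the failure still reproduces.

    parts:       list of statement fragments (clauses, tokens, ...)
    still_fails: callable taking a candidate list and returning True
                 if that candidate still triggers the original error
    """
    parts = list(parts)
    changed = True
    while changed:
        changed = False
        for i in range(len(parts)):
            candidate = parts[:i] + parts[i + 1:]
            if candidate and still_fails(candidate):
                parts = candidate      # keep the smaller failing case
                changed = True
                break                  # restart the scan from the front
    return parts

# Toy predicate: pretend the bug needs both DISTINCT and ORDER BY 1.
def toy_failure(candidate):
    return "DISTINCT" in candidate and "ORDER BY 1" in candidate

reduced = simplify(
    ["TOP 1", "DISTINCT", "titles.advance", "ORDER BY 1", "GROUP BY x"],
    toy_failure,
)
```

With the toy predicate, `reduced` is `["DISTINCT", "ORDER BY 1"]`. A real reducer would also have to keep each candidate syntactically valid, for example by removing whole subqueries or select-list items rather than arbitrary fragments.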


Scaleup - Big Database

• Build a 1 TB SQL Server database

» Show off Windows NT and SQL Server scalability

» Stress test the product

• Data must be

» 1 TB

» Unencumbered

» Interesting to everyone everywhere

» And not offensive to anyone anywhere

• Loaded

» 1.1 M place names from Encarta World Atlas

» 1 M Sq Km from USGS (1 meter resolution)

» 2 M Sq Km from Russian Space agency (2 m)

• Will be on web (world’s largest atlas)

• Sell images with commerce server.

• USGS CRDA: 3 TB more coming.


The System

• DEC Alpha + 8400

• 324 StorageWorks Drives (2.8 TB)

• SQL Server 7.0

• USGS 1-meter data (30% of US)

• Russian Space data

[Image: SPIN-2, 1.6 meter resolution images]


Demo

Http://t2b2c


Technical Challenge

Key idea

• Problem: Geo-Spatial Search without geo-spatial access methods (just standard SQL Server).

• Solution:

Geo-spatial search key:

Divide earth into rectangles of 1/48th degree longitude (X) by 1/96th degree latitude (Y)

Z-transform X & Y into single Z value, build B-tree on Z

Adjacent images stored next to each other

Search Method:

Latitude and Longitude => X, Y, then Z

Select on matching Z value
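The search key can be sketched as bit interleaving (a Z-order or Morton code) of the two cell indexes. The slide gives only the cell sizes, so the details here are assumptions: shifting longitude by 180 and latitude by 90 to get non-negative indexes, using 16 bits per axis, and the names `interleave_bits` and `cell_key`.

```python
import math

def interleave_bits(x, y, bits=16):
    """Z-transform: x bits go to even positions, y bits to odd positions."""
    z = 0
    for i in range(bits):
        z |= ((x >> i) & 1) << (2 * i)
        z |= ((y >> i) & 1) << (2 * i + 1)
    return z

def cell_key(lat, lon):
    """Map a latitude/longitude to the Z value of its grid cell.

    Cells are 1/48 degree of longitude (X) by 1/96 degree of latitude (Y).
    The +180 / +90 offsets are an assumption to keep indexes non-negative.
    """
    x = math.floor((lon + 180.0) * 48)   # 0 .. 17280, fits in 16 bits
    y = math.floor((lat + 90.0) * 96)    # 0 .. 17280, fits in 16 bits
    return interleave_bits(x, y)

# Two nearby points in the same cell get the same key, so an equality
# lookup on an ordinary B-tree index over Z finds the cell's image.
key_a = cell_key(37.0, -122.0)
key_b = cell_key(37.001, -121.999)
```

Because neighboring cells tend to share high-order bits of Z, rows that are close on the map are usually close in the B-tree as well, which matches the "adjacent images stored next to each other" point.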


Live on the internet in 98H1 (Tied to Sphinx Beta 2 RTM)

• New Since S-Day:

» More data: 4.8 TB USGS DOQ, .5 TB Russian

» Bigger Server: Alpha 8400, 8 proc, 8 GB RAM, 2.8 TB Disk

» Improved Application: Better UI, Uses ASP, Commerce App

• For 18 Months

• Cut images and Load Dec&Jan

• Built Commerce App for USGS & Spin-2

• Release on Internet with Sphinx B2

• Launch on Internet in Spring


NT Clusters (Wolfpack)

• Scale DOWN to PDA: WindowsCE

• Scale UP an SMP: TerraServer

• Scale OUT with a cluster of machines

• Single-system image

» Naming

» Protection/security

» Management/load balance

• Fault tolerance

»“Wolfpack”

• Hot pluggable hardware & software


Symmetric Virtual Server

Failover Example

[Figure: Browser, Server 1, and Server 2; each server hosts a Web site and a Database, with Web site files and Database files.]


Clusters & BackOffice

• Research: Instant & Transparent failover

• Making BackOffice PlugNPlay on

Wolfpack

» Automatic install & configure

• Virtual Server concept makes it easy

» simpler management concept

» simpler context/state migration

» transparent to applications

• SQL 6.5E & 7.0 Failover

• MSMQ (queues), MTS (transactions).


1.2 B tpd

• 1 B tpd ran for 24 hrs.

• Out-of-the-box software

• Off-the-shelf hardware

• AMAZING!

• Sized for 30 days

• Linear growth

• 5 micro-dollars per transaction


The Memory Hierarchy

• Measuring & Modeling Sequential IO

• Where is the bottleneck?

• How does it scale with

» SMP, RAID, new interconnects

Goals:

balanced bottlenecks

Low overhead

Scale many processors (10s)

Scale many disks (100s)

[Figure: data path components: App address space, File cache, Memory, Mem bus, PCI, Adapter, SCSI, Controller]


PAP (peak advertised performance) vs RAP (real application performance)

• Goal: RAP = PAP / 2 (the half-power point)

[Figure: advertised bandwidth along the data path: System Bus 422 MBps, PCI 133 MBps, SCSI 40 MBps, Disk 10-15 MBps; 7.2 MB/s is measured at each stage, from Disk through File System Buffers to Application Data.]


The Best Case: Temp File, NO IO

• Temp file Read / Write File System Cache

• Program uses small (in cpu cache) buffer.

• So, write/read time is bus move time (3x better than copy)

• Paradox: fastest way to move data is to write then read it.

• This hardware is limited to 150 MBps per processor

[Chart: Temp File Read/Write, in MBps: Temp read 148, Temp write 136, Memcopy() 54]


Out of the Box Disk File Performance

• One NTFS disk

• Buffered read

• NTFS does 64 KB read-ahead

» if you ask with FILE_FLAG_SEQUENTIAL_SCAN

» or if it thinks you are sequential

• NTFS does 64 KB write behind

» under same conditions

» aggregates many small IO to few big IO.
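A buffered sequential read test of this kind can be sketched as follows. The helper names are hypothetical. The sketch relies on `os.O_SEQUENTIAL`, which Python exposes only on Windows; treating it as the sequential-access hint is an assumption, and on other platforms the flag is simply omitted.

```python
import os
import tempfile
import time

def write_test_file(size_bytes):
    """Create a temporary file of the given size and return its path."""
    with tempfile.NamedTemporaryFile(delete=False) as f:
        f.write(b"x" * size_bytes)
        return f.name

def sequential_read_mbps(path, request_size):
    """Read the file front to back in fixed-size requests.

    Returns (bytes_read, throughput in MB/s)."""
    flags = os.O_RDONLY
    flags |= getattr(os, "O_SEQUENTIAL", 0)  # Windows-only hint, else 0
    flags |= getattr(os, "O_BINARY", 0)      # Windows-only, else 0
    fd = os.open(path, flags)
    total = 0
    start = time.perf_counter()
    try:
        while True:
            chunk = os.read(fd, request_size)
            if not chunk:
                break
            total += len(chunk)
    finally:
        os.close(fd)
    elapsed = time.perf_counter() - start
    return total, total / 1e6 / max(elapsed, 1e-9)

path = write_test_file(1 << 20)              # 1 MB test file
for size_kb in (2, 8, 64):
    nbytes, mbps = sequential_read_mbps(path, size_kb * 1024)
os.remove(path)
```

A file this small is served from the file system cache, so the numbers measure cache copies rather than the disk; a meaningful disk measurement needs a file much larger than memory, or unbuffered IO.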


Synchronous Buffered Read/Write

• Read throughput is GREAT!

• Write throughput is 40% of read

• WCE is fast but dangerous

• Net: default out of the box performance is good.

• 20 ms/MB ~ 2 instructions/byte!

• CPU will saturate at 50MBps

[Chart: Out of the Box Throughput: Throughput (MB/s) vs Request Size (K-Bytes), 2 to 192; series include Read, Write, and Write + WCE]

[Chart: Out of the Box Overhead: Overhead (cpu msec/MB) vs Request Size (K-Bytes), 2 to 192; series include Read, Write, and Write + WCE]


Bottleneck Analysis

• Drawn to linear scale

[Figure: Theoretical Bus Bandwidth 422 MBps = 66 MHz x 64 bits; Memory Read/Write ~150 MBps; MemCopy ~50 MBps; Disk R/W ~9 MBps]


Parallel Access To Data?

At 10 MB/s: 1.2 days to scan 1 Terabyte

1,000 x parallel (10 GB/s): 100 second SCAN of 1 Terabyte

Parallelism: divide a big problem into many smaller ones to be solved in parallel.
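The scan times follow from dividing the data size by the aggregate bandwidth. A small sketch of that arithmetic, assuming decimal units (1 TB = 10^12 bytes, 1 MB = 10^6 bytes) and perfectly even partitioning; `scan_seconds` is a hypothetical helper:

```python
TB = 10**12   # bytes, decimal terabyte (assumption)
MB = 10**6    # bytes, decimal megabyte (assumption)

def scan_seconds(size_bytes, rate_bytes_per_sec, parallelism=1):
    """Time to scan the data when it is split evenly across
    `parallelism` devices that each deliver the given rate."""
    return size_bytes / (rate_bytes_per_sec * parallelism)

serial = scan_seconds(TB, 10 * MB)            # 100000.0 seconds
serial_days = serial / 86400                  # about 1.16 days
parallel = scan_seconds(TB, 10 * MB, 1000)    # 100.0 seconds
```

The serial figure of roughly 1.16 days is what the slide rounds to 1.2 days. The 1,000-way case assumes no skew and no coordination cost.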


PAP vs RAP

• Reads are easy, writes are hard

• Async write can match WCE.

[Figure: PAP vs RAP along the data path: System bus 422 MBps vs 142 MBps; PCI 133 MBps vs 72 MBps; SCSI 40 MBps vs 31 MBps; Disks 10-15 MBps vs 9 MBps; then File System and Application Data.]


Bottleneck Analysis

• NTFS Read/Write: 9 disks, 2 SCSI buses, 1 PCI

~ 65 MBps Unbuffered read

~ 43 MBps Unbuffered write

~ 40 MBps Buffered read

~ 35 MBps Buffered write

[Figure: Adapter ~30 MBps; PCI ~70 MBps; Memory Read/Write ~150 MBps]


NT Memory Broker

• Some servers absorb memory and circumvent NT memory management.

• Complicated by

» Wolfpack failover,

» large memory support,

» interactions among servers.

• Prototype Memory Broker service

augments NT memory management:

» Separates memory needs and desires

» Dynamic expand & reclaim memory footprint

» Monitor memory usage, paging, shared buffers

» Cross-server arbitration

• Clients: SQL Server, Exchange, Oracle,..

• Working with NT-Team (Lou Perazzoli)


Public Service

• Gordon Bell

» Computer Museum

» Vanguard Group

» Edits column in CACM

• Jim Gray

» National Research Council

Computer Science and Telecommunications Board

» Presidential Advisory Committee on NGI-IT-HPPC

» Edit Journals & Conferences.

• Tom Barclay

» USGS and Russian cooperative research

