Enabling Fast Prediction for Ensemble Models


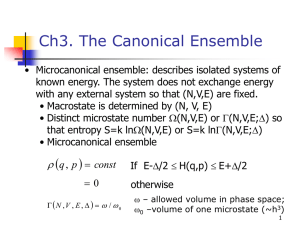

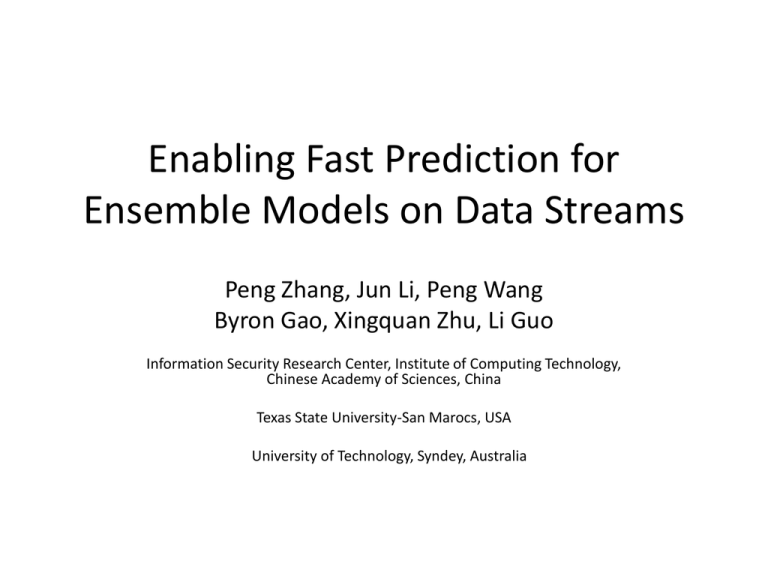

Enabling Fast Prediction for Ensemble Models on Data Streams
Peng Zhang, Jun Li, Peng Wang, Byron Gao, Xingquan Zhu, Li Guo
Information Security Research Center, Institute of Computing Technology, Chinese Academy of Sciences, China
Texas State University-San Marcos, USA
University of Technology, Sydney, Australia

Outline
• Motivation
• Solutions
• Experiments
• Related work
• Conclusion

Data Stream Classification
• Information security
  – Applications: intrusion detection, spam filtering, web page stream monitoring
  – Example 1. Web page stream monitoring
• Two requirements
  – Classify each incoming web page as accurately as possible.
  – Classify each incoming web page as fast as possible.

Ensemble Models
• Technique
  – Build models from continuous data streams in a divide-and-conquer manner
• Merits
  – Scale well, adapt quickly to new concepts, have low variance error, and are easy to parallelize
• Family
  – Ensembles of weighted classifiers, of incremental classifiers, and of both classifiers and clusters

Limitation of Ensembles
• Low prediction efficiency
  – Prediction usually takes time linear in the number of base classifiers.
  – Previous works combine only a small number of base classifiers in an ensemble.
• Example 2: Prediction time of ensemble models
  – 830 processors for 50 classifier ensembles!

Solutions
• Convert the ensemble into a spatial database
• Design a new indexing structure, the Ensemble-Tree
• Prediction becomes a search over the Ensemble-Tree
• Building and updating the ensemble become insertion and deletion
[Figure: Our solutions]

Spatial Indexing for Ensemble
• How do we convert an ensemble into a spatial database?
• Example 3: Converting an ensemble into a spatial database

Spatial Indexing for Ensemble
• Why convert an ensemble into a spatial database?
  – To trade space for time.
  – Example 4. Linear vs. sub-linear prediction: (a) linear prediction, (b) spatial database, (c) sub-linear prediction

Ensemble-Tree (E-Tree)
• Comparisons
  – Indexing object: image, map, multimedia data (traditional spatial DB) vs. decision rules which carry class label information (ensemble models)
  – (classifier_id, weight, pointer); parameters M, m; (I, classifier_id, sibling)
• E-Tree is designed for binary classification.
• All decision rules are from one class, called the target class.

Operations on E-Tree
• Search
  – Goal: predict the label of each incoming stream record x
  – Step 1: traverse the tree depth-first to find the leaf entries covering x (i.e., u entries)
  – Step 2: calculate x's class label
• Example 5. Search
  – Consider a new record x = (4, 4).
  – E-Tree achieves a 20% improvement in predicting x's class label.

Operations on E-Tree
• Insertion
  – Goal: integrate new classifiers into the ensemble
  – Step 1: insert the classifier into the table structure
  – Step 2: for each rule R in the classifier
    – search for the leaf node that contains R
    – if the leaf node is full, split the node; otherwise, add R directly
    – repeat until all nodes meet the [m, M] constraint
• Example 6. Insertion
  – We insert the five classifiers one by one, with parameters M = 3 and m = 1.

Operations on E-Tree
• Deletion
  – Goal: discard outdated classifiers from the ensemble
  – Step 1: delete the outdated classifier from the table
  – Step 2: delete all entries associated with the classifier
  – Step 3: if a leaf node then has fewer than m entries, delete it and re-insert its remaining entries into the tree
  – Step 4: repeat until all nodes meet the [m, M] constraint

Ensemble Learning with E-Trees
• The architecture
  – Online prediction: search operation
  – Sideway training and updating: insertion and deletion operations

Theoretical Analysis of E-Trees
• For each incoming record x, the ideal situation is that all target decision rules that cover x are located in a single leaf.
  (1) What is the probability that x's target decision rules are located in a single leaf node?
  (2) In the ideal case, what is the worst-case prediction time?
• The worst case is that the target decision rules are uniformly distributed across m leaf nodes.
  (3) In the worst case, what is the worst-case prediction time?

Theoretical Analysis of E-Trees (continued)
• Sublinear time

Experiments
• Data sets
  – Synthetic data
  – Real-world data
  [Figure: decision space]
• Comparison methods
  – Global E-Tree, Local E-Tree
  – Global-Ensemble, Local-Ensemble

Experiments
• Prediction time [figure]

Experiments
• Memory cost [figure]

Experiments
• Comparison of the four methods
  – LE-tree performs better than L-Ensemble with respect to prediction time.
  – GE-tree performs better than G-Ensemble with respect to prediction time.
  – LE-tree achieves the same prediction accuracy as L-Ensemble.
  – GE-tree achieves the same prediction accuracy as G-Ensemble.
• E-Trees can reduce the prediction time of ensemble models while achieving the same prediction accuracy as the ensembles.

Related Work
• Stream classification
  – Incremental learning, ensemble learning
• Stream indexing
  – Boolean expression indexing in publish/subscribe systems
• Spatial indexing
  – R-Tree, R*-Tree, R+-Tree, X-Tree, …
• Ensemble pruning
  – Concept drifting

Conclusion
• Contributions
  – Identify and address the prediction efficiency problem for ensemble models on data streams
  – Convert the ensemble model into a spatial database, and propose a new type of spatial index, the Ensemble-Tree, to achieve sub-linear prediction time
• Future work
  – For time-critical data mining tasks
  – Index SVM and other classification models

Thank you! Questions?
Source code: http://streammining.org
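A small Python sketch can illustrate two slides: the ensemble-to-spatial-database conversion (Example 3) and the linear prediction that the E-Tree is meant to replace (Example 4(a)). It rests on two assumptions made for illustration only. First, each base classifier is given as a weight plus a list of axis-aligned boxes (its target-class decision rules). Second, the ensemble predicts the target class when the classifiers whose rules cover x hold more than half of the total weight. The names `Box`, `covers`, `to_spatial_db`, and `linear_predict` are hypothetical and do not come from the authors' code.

```python
from typing import Dict, List, Sequence, Tuple

# A target-class decision rule as an axis-aligned box: (lower corner, upper corner).
Box = Tuple[Tuple[float, ...], Tuple[float, ...]]
# Hypothetical ensemble layout: classifier_id -> (weight, list of target-class rules).
Ensemble = Dict[int, Tuple[float, List[Box]]]


def covers(box: Box, x: Sequence[float]) -> bool:
    """True if point x lies inside the box (bounds inclusive)."""
    lo, hi = box
    return all(l <= v <= h for l, v, h in zip(lo, x, hi))


def to_spatial_db(ensemble: Ensemble) -> List[Tuple[Box, int]]:
    """Flatten the ensemble into a 'spatial database' of (rule box, classifier_id) entries."""
    return [(box, cid) for cid, (_, rules) in ensemble.items() for box in rules]


def linear_predict(ensemble: Ensemble, x: Sequence[float]) -> int:
    """Linear prediction: every rule of every base classifier is checked.

    A classifier votes for the target class if any of its rules covers x. The ensemble
    returns 1 (target) when those votes carry more than half of the total weight
    (an assumed voting rule), otherwise 0.
    """
    total = sum(w for w, _ in ensemble.values())
    target = sum(w for w, rules in ensemble.values() if any(covers(b, x) for b in rules))
    return 1 if target > total / 2 else 0
```

As an example, take classifier 1 with weight 0.5 and rule [0, 5] × [0, 5], classifier 2 with weight 0.3 and rules [3, 6] × [3, 6] and [8, 9] × [8, 9], and classifier 3 with weight 0.2 and rule [7, 9] × [0, 2]. For x = (4, 4), the first two classifiers cover x (0.8 > 0.5), so `linear_predict` returns 1. Every call touches all rules of all classifiers, which is the linear cost the slides attribute to ensemble prediction.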
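The three E-Tree operations (search, insertion, deletion) can be sketched in the same spirit. The hypothetical `ToyETree` below is deliberately flat: a single root over leaves that hold between m and M entries. It replaces the real node-split procedure with a naive split on the lower x-coordinate and reuses the assumed weighted-vote rule. The slides describe a depth-first traversal of a deeper, R-tree-style hierarchy, and that hierarchy is where sub-linear search comes from. This sketch only shows how the classifier table, the [m, M] constraint, and per-classifier insertion and deletion fit together. It is not the authors' implementation.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Sequence, Tuple

Box = Tuple[Tuple[float, ...], Tuple[float, ...]]  # target-class rule: (lower corner, upper corner)
Entry = Tuple[Box, int]                            # (rule box, classifier_id)


def covers(box: Box, x: Sequence[float]) -> bool:
    lo, hi = box
    return all(l <= v <= h for l, v, h in zip(lo, x, hi))


def union(a: Box, b: Box) -> Box:
    return tuple(map(min, a[0], b[0])), tuple(map(max, a[1], b[1]))


def area(box: Box) -> float:
    result = 1.0
    for l, h in zip(*box):
        result *= h - l
    return result


@dataclass
class Leaf:
    entries: List[Entry] = field(default_factory=list)

    def mbr(self) -> Box:
        """Minimum bounding box of all entries in this (non-empty) leaf."""
        box = self.entries[0][0]
        for b, _ in self.entries[1:]:
            box = union(box, b)
        return box


class ToyETree:
    """Hypothetical flat E-Tree sketch: one root over leaves holding m..M entries."""

    def __init__(self, M: int = 3, m: int = 1):
        assert 1 <= m <= (M + 1) // 2, "splits must leave at least m entries per leaf"
        self.M, self.m = M, m
        self.table: Dict[int, float] = {}  # classifier_id -> weight
        self.leaves: List[Leaf] = []

    def insert_classifier(self, cid: int, weight: float, rules: List[Box]) -> None:
        self.table[cid] = weight           # Step 1: add the classifier to the table
        for box in rules:                  # Step 2: place each of its rules in a leaf
            self._insert_entry((box, cid))

    def _insert_entry(self, entry: Entry) -> None:
        if not self.leaves:
            self.leaves.append(Leaf([entry]))
            return
        box = entry[0]
        # Choose the leaf whose bounding box grows least (R-tree-style heuristic).
        leaf = min(self.leaves, key=lambda lf: area(union(lf.mbr(), box)) - area(lf.mbr()))
        leaf.entries.append(entry)
        if len(leaf.entries) > self.M:     # overflow: naive split on lower x-coordinate
            leaf.entries.sort(key=lambda e: e[0][0][0])
            half = len(leaf.entries) // 2
            self.leaves.append(Leaf(leaf.entries[half:]))
            leaf.entries = leaf.entries[:half]

    def delete_classifier(self, cid: int) -> None:
        self.table.pop(cid, None)          # Step 1: remove the classifier from the table
        orphans: List[Entry] = []
        kept: List[Leaf] = []
        for leaf in self.leaves:
            leaf.entries = [e for e in leaf.entries if e[1] != cid]  # Step 2: drop its entries
            if len(leaf.entries) < self.m:  # Step 3: dissolve underfull leaves
                orphans.extend(leaf.entries)
            else:
                kept.append(leaf)
        self.leaves = kept
        for e in orphans:                  # ...and re-insert their remaining entries
            self._insert_entry(e)

    def predict(self, x: Sequence[float]) -> int:
        """Search: collect classifiers with a rule covering x, then take the assumed weighted vote."""
        voters = set()
        for leaf in self.leaves:
            if leaf.entries and covers(leaf.mbr(), x):  # skip leaves whose bounding box misses x
                voters.update(cid for b, cid in leaf.entries if covers(b, x))
        target = sum(self.table[c] for c in voters)
        return 1 if target > sum(self.table.values()) / 2 else 0
```

For example, take M = 3 and m = 1 (the parameters of Example 6) and insert three classifiers with four rules in total. The fourth rule overflows the single leaf, which splits into two leaves of two entries each. Deleting a classifier removes its table row and its leaf entries. Any leaf left with fewer than m entries is dissolved, and its remaining entries are re-inserted.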