L22 NL Methods 2


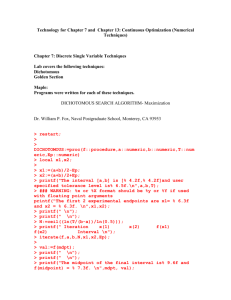

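L22 Numerical Methods part 2

• Homework
• Review
• Alternate Equal Interval
• Golden Section
• Summary
• Test 4

Problem 10.4

Given $f(\mathbf{x}) = x_1^2 + 2x_2^2 + 2x_3^2 + 2x_1x_2 + 2x_2x_3$ and $\mathbf{d} = (-3, 10, -12)$ at $\mathbf{x} = (1, 2, 3)$, determine whether $\mathbf{d}$ is a descent direction, i.e. whether $\mathbf{c}\cdot\mathbf{d} < 0$.

$$\mathbf{c} = \nabla f(\mathbf{x}^*) = \begin{bmatrix} 2x_1 + 2x_2 \\ 4x_2 + 2x_1 + 2x_3 \\ 4x_3 + 2x_2 \end{bmatrix}_{\mathbf{x}^*} = \begin{bmatrix} 2(1) + 2(2) \\ 4(2) + 2(1) + 2(3) \\ 4(3) + 2(2) \end{bmatrix} = \begin{bmatrix} 6 \\ 16 \\ 16 \end{bmatrix}$$

$$\mathbf{c}\cdot\mathbf{d} = \mathbf{c}^T\mathbf{d} = c_1d_1 + c_2d_2 + c_3d_3 = 6(-3) + 16(10) + 16(-12) = -18 + 160 - 192 = -50 < 0$$

Yes, descent direction.

Prob 10.10

Given $f(\mathbf{x}) = (x_1 + x_2)^2 + (x_2 + x_3)^2$ and $\mathbf{d} = (4, 8, 4)$ at $\mathbf{x} = (1, 1, 1)$, determine whether $\mathbf{d}$ is a descent direction, i.e. whether $\mathbf{c}\cdot\mathbf{d} < 0$.

$$\mathbf{c} = \nabla f(\mathbf{x}^*) = \begin{bmatrix} 2(x_1 + x_2) \\ 2(x_1 + x_2) + 2(x_2 + x_3) \\ 2(x_2 + x_3) \end{bmatrix}_{\mathbf{x}^*} = \begin{bmatrix} 2(1 + 1) \\ 2(1 + 1) + 2(1 + 1) \\ 2(1 + 1) \end{bmatrix} = \begin{bmatrix} 4 \\ 8 \\ 4 \end{bmatrix}$$

$$\mathbf{c}\cdot\mathbf{d} = \mathbf{c}^T\mathbf{d} = c_1d_1 + c_2d_2 + c_3d_3 = 4(4) + 8(8) + 4(4) = 16 + 64 + 16 = 96 > 0$$

No, not a descent direction.

Prob 10.19

Given $f(\mathbf{x}) = x_1^2 + 2x_2^2 + 2x_3^2 + 2x_1x_2 + 2x_2x_3$ and $\mathbf{d} = (-12, -40, -48)$ at $\mathbf{x} = (2, 4, 10)$. What is the slope of the function at the point? Find the optimum step size.

$$\mathbf{c} = \nabla f(\mathbf{x}^*) = \begin{bmatrix} 2x_1 + 2x_2 \\ 4x_2 + 2x_1 + 2x_3 \\ 4x_3 + 2x_2 \end{bmatrix}_{\mathbf{x}^*} = \begin{bmatrix} 2(2) + 2(4) \\ 4(4) + 2(2) + 2(10) \\ 4(10) + 2(4) \end{bmatrix} = \begin{bmatrix} 12 \\ 40 \\ 48 \end{bmatrix}$$

$$\mathbf{c}\cdot\mathbf{d} = 12(-12) + 40(-40) + 48(-48) = -(144 + 1600 + 2304) = -4048$$

Slope $= -4048$.

Prob 10.19 cont’d

$$\mathbf{x}^{(k+1)} = \mathbf{x}^{(k)} + \alpha\,\mathbf{d}^{(k)}: \qquad \begin{bmatrix} x_1 \\ x_2 \\ x_3 \end{bmatrix}^{(new)} = \begin{bmatrix} 2 \\ 4 \\ 10 \end{bmatrix}^{(old)} + \alpha \begin{bmatrix} -12 \\ -40 \\ -48 \end{bmatrix}$$

$$x_1 = 2 + \alpha(-12) = 2 - 12\alpha, \qquad x_2 = 4 + \alpha(-40) = 4 - 40\alpha, \qquad x_3 = 10 + \alpha(-48) = 10 - 48\alpha$$

$$f(\alpha) = (2 - 12\alpha)^2 + 2(4 - 40\alpha)^2 + 2(10 - 48\alpha)^2 + 2(2 - 12\alpha)(4 - 40\alpha) + 2(4 - 40\alpha)(10 - 48\alpha)$$

$$f'(\alpha) = -4048 + 25504\,\alpha = 0 \quad\Rightarrow\quad \alpha^* = 4048 / 25504 = 0.15872$$

Prob 10.19 cont’d

$$x_1 = 2 + 0.15872(-12) = 0.095, \qquad x_2 = 4 + 0.15872(-40) = -2.35, \qquad x_3 = 10 + 0.15872(-48) = 2.38$$

With $\alpha = 0.15872$: $x_1 = 0.095358$, $x_2 = -2.34881$, $x_3 = 2.38143$, and $f(\mathbf{x}) = 10.75031$.

Prob 10.30

Given $f(\mathbf{x}) = (x_1 - 1)^2 + (x_2 - 2)^2 + (x_3 - 3)^2 + (x_4 - 4)^2$, $\mathbf{x} = (2, 1, 4, 3)$, $\mathbf{d} = (-2, 2, -2, 2)$, find $f(\alpha)$.

$$\mathbf{x}^{(k+1)} = \mathbf{x}^{(k)} + \alpha\,\mathbf{d}^{(k)}: \qquad x_1 = 2 + \alpha(-2), \quad x_2 = 1 + \alpha(2), \quad x_3 = 4 + \alpha(-2), \quad x_4 = 3 + \alpha(2)$$

$$f(\alpha) = ((2 - 2\alpha) - 1)^2 + ((1 + 2\alpha) - 2)^2 + ((4 - 2\alpha) - 3)^2 + ((3 + 2\alpha) - 4)^2$$

$$f(\alpha) = 16\alpha^2 - 16\alpha + 4$$

The Search Problem

• Sub Problem A Which direction to head next?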
• Sub Problem B How far to go in that direction?

Search Direction… Min f(x): Let’s go downhill!

$$f(\mathbf{x}) = f(\mathbf{x}^*) + \nabla f^T(\mathbf{x}^*)(\mathbf{x} - \mathbf{x}^*) + \tfrac{1}{2}(\mathbf{x} - \mathbf{x}^*)^T \mathbf{H}(\mathbf{x}^*)(\mathbf{x} - \mathbf{x}^*) + R$$

Let $\mathbf{d} = (\mathbf{x} - \mathbf{x}^*)$; then $\mathbf{x} = \mathbf{x}^* + \mathbf{d}$, or $\mathbf{x}^{(new)} = \mathbf{x}^{(old)} + \mathbf{d}$.

$$f(\mathbf{x}^* + \mathbf{d}) = f(\mathbf{x}^*) + \nabla f^T(\mathbf{x}^*)\,\mathbf{d} + \tfrac{1}{2}\mathbf{d}^T \mathbf{H}\,\mathbf{d} + R$$

$$\Delta f \approx \nabla f^T(\mathbf{x}^*)\,\mathbf{d}$$

Descent condition: $\nabla f^T(\mathbf{x}^*)\,\mathbf{d} < 0$. Let $\mathbf{c} = \nabla f(\mathbf{x}^*)$; then $\mathbf{c}\cdot\mathbf{d} < 0$.

Step Size?

How big should we make alpha? Can we step too “far?” i.e. can our step size be chosen so big that we step over the “minimum?”

We are here. Which direction should we head?

Figure 10.2 Conceptual diagram for iterative steps of an optimization method.

Some Step Size Methods

• “Analytical”
  • Search direction = (-) gradient (i.e. line search)
  • Form line search function f(α)
  • Find f’(α) = 0
• Region Elimination (“interval reducing”)
  • Equal interval
  • Alternate equal interval
  • Golden Section

Nonunimodal functions

Unimodal if stay in locale?

Figure 10.5 Nonunimodal function $f(\alpha)$ for $0 \le \alpha \le \alpha_0$.

Monotonic Increasing Functions

Monotonic Decreasing Functions

continuous

Unimodal functions

• monotonic decreasing then monotonic increasing
• monotonic increasing then monotonic decreasing

Figure 10.4 Unimodal function $f(\alpha)$.

Analytical Step size

Given $\mathbf{d}$ and $\mathbf{x}^{(old)}$, let $\mathbf{x}^{(new)} = \mathbf{x}^{(old)} + \alpha\,\mathbf{d}^{(k)}$, i.e. $\mathbf{x}^{(k+1)} = \mathbf{x}^{(k)} + \alpha\,\mathbf{d}^{(k)}$. Then

$$f(\mathbf{x}^{(k+1)}) = f(\mathbf{x}^{(k)} + \alpha\,\mathbf{d}) = f(\alpha)$$

Slope of the line search function: $f'(\alpha) = \mathbf{c}\cdot\mathbf{d}$, with $\mathbf{c}$ the gradient at the trial point. At $f_{min}$ the slope of the line is zero: $f'(\alpha) = 0$, i.e. $\mathbf{c}\cdot\mathbf{d} = 0$.

Figure 10.3 Graph of $f(\alpha)$ versus $\alpha$.

Analytical Step Size Example

Given $f(\mathbf{x}) = (x_1 - 2)^2 + (x_2 - 1)^2$, let $\mathbf{d} = -\mathbf{c}$, and at $\mathbf{x} = (4, 4)$ find the optimal step size $\alpha^*$ and $f(\alpha^*)$!
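$$\mathbf{d} = -\mathbf{c} = -\begin{bmatrix} 2(x_1 - 2) \\ 2(x_2 - 1) \end{bmatrix} = -\begin{bmatrix} 2(4 - 2) \\ 2(4 - 1) \end{bmatrix} = \begin{bmatrix} -4 \\ -6 \end{bmatrix}$$

$$\mathbf{x}^{(k+1)} = \mathbf{x}^{(k)} + \alpha\,\mathbf{d}^{(k)}: \qquad x_1 = 4 + \alpha(-4) = 4 - 4\alpha, \qquad x_2 = 4 + \alpha(-6) = 4 - 6\alpha$$

$$f(\alpha) = (x_1 - 2)^2 + (x_2 - 1)^2 = ((4 - 4\alpha) - 2)^2 + ((4 - 6\alpha) - 1)^2 = 52\alpha^2 - 52\alpha + 13$$

$$f'(\alpha) = 2(52)\alpha - 52 = 0 \quad\Rightarrow\quad \alpha^* = 1/2$$

$$x_1 = 4 + \tfrac{1}{2}(-4) = 2, \qquad x_2 = 4 + \tfrac{1}{2}(-6) = 1, \qquad \mathbf{x}^* = \begin{bmatrix} 2 \\ 1 \end{bmatrix}$$

$$f(\mathbf{x}^*) = (x_1 - 2)^2 + (x_2 - 1)^2 = (2 - 2)^2 + (1 - 1)^2 = 0$$

The same result follows from differentiating $f(\alpha)$ with the chain rule, without expanding first:

$$f(\alpha) = ((4 - 4\alpha) - 2)^2 + ((4 - 6\alpha) - 1)^2$$

$$f'(\alpha) = 2((4 - 4\alpha) - 2)(-4) + 2((4 - 6\alpha) - 1)(-6) = 0$$

$$(2 - 4\alpha)(-4) + (3 - 6\alpha)(-6) = 0$$

$$-8 + 16\alpha - 18 + 36\alpha = -26 + 52\alpha = 0 \quad\Rightarrow\quad \alpha^* = 26/52 = 1/2$$

Alternative Analytical Step Size

$$f(\mathbf{x}^{(k+1)}) = f(\mathbf{x}^{(k)} + \alpha\,\mathbf{d}) = f(\alpha), \qquad f'(\alpha) = 0$$

$$\frac{d f(\mathbf{x}^{(k+1)})}{d\alpha} = \nabla f^T(\mathbf{x}^{(k+1)})\,\frac{d(\mathbf{x}^{(k+1)})}{d\alpha} = 0$$

Since $\mathbf{x}^{(k+1)} = \mathbf{x}^{(k)} + \alpha\,\mathbf{d}^{(k)}$,

$$\frac{d(\mathbf{x}^{(k+1)})}{d\alpha} = \mathbf{d}^{(k)}$$

$$\nabla f(\mathbf{x}^{(k+1)})\cdot\mathbf{d}^{(k)} = 0 \quad\Rightarrow\quad \mathbf{c}^{(k+1)}\cdot\mathbf{d}^{(k)} = 0$$

The new gradient must be orthogonal to $\mathbf{d}$ for $f'(\alpha) = 0$.

Applied to the example, with $x_1 = 4 - 4\alpha$ and $x_2 = 4 - 6\alpha$:

$$\mathbf{c}^{(k+1)} = \begin{bmatrix} 2(x_1 - 2) \\ 2(x_2 - 1) \end{bmatrix} = \begin{bmatrix} 2(4 - 4\alpha - 2) \\ 2(4 - 6\alpha - 1) \end{bmatrix} = \begin{bmatrix} 4 - 8\alpha \\ 6 - 12\alpha \end{bmatrix}$$

$$\mathbf{c}^{(k+1)}\cdot\mathbf{d}^{(k)} = \begin{bmatrix} 4 - 8\alpha \\ 6 - 12\alpha \end{bmatrix}^T \begin{bmatrix} -4 \\ -6 \end{bmatrix} = -4(4 - 8\alpha) - 6(6 - 12\alpha) = 0$$

$$-16 + 32\alpha - 36 + 72\alpha = -52 + 104\alpha = 0 \quad\Rightarrow\quad \alpha = 52/104 = 1/2$$

“Interval Reducing” Region elimination

• “Bounding phase”
• “Interval reduction phase”

Figure 10.6 Equal-interval search process. (a) Phase I: initial bracketing of minimum. (b) Phase II: reducing the interval of uncertainty.

$$\alpha_l = (q - 1)\,\delta, \qquad \alpha_u = (q + 1)\,\delta, \qquad I = \alpha_u - \alpha_l = 2\delta$$

2 delta!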
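The interval-reducing idea can be sketched in a few lines of Python. This is a simplified illustration, not the course's implementation: it evaluates every grid point in each pass instead of marching until the function value rises, and the function names (`equal_interval_pass`, `successive_equal_interval`) and the choice of 10 subintervals per pass are assumptions made for the example. The test function $f(x) = 2 - 4x + e^x$ is the one used in this lecture's worked examples.

```python
import math


def f(x):
    # Test function from the lecture's worked examples: f(x) = 2 - 4x + exp(x)
    return 2.0 - 4.0 * x + math.exp(x)


def equal_interval_pass(func, lower, upper, n=10):
    """One pass: sample n+1 equally spaced points and keep the two
    subintervals around the lowest sample (width 2*delta)."""
    delta = (upper - lower) / n
    xs = [lower + i * delta for i in range(n + 1)]
    fs = [func(x) for x in xs]
    i_best = min(range(n + 1), key=fs.__getitem__)
    # Clamp at the ends in case the lowest sample is an end point.
    new_lower = xs[max(i_best - 1, 0)]
    new_upper = xs[min(i_best + 1, n)]
    return new_lower, new_upper


def successive_equal_interval(func, lower, upper, passes=4, n=10):
    """Repeat the pass, recording the bracket used at the start of each pass."""
    history = []
    for _ in range(passes):
        history.append((lower, upper))
        lower, upper = equal_interval_pass(func, lower, upper, n)
    return lower, upper, history


if __name__ == "__main__":
    lo, hi, history = successive_equal_interval(f, -5.0, 5.0)
    for k, (a, b) in enumerate(history, start=1):
        print(f"pass {k}: bracket [{a:.4f}, {b:.4f}], delta = {(b - a) / 10:.4f}")
    print(f"final interval of uncertainty: [{lo:.4f}, {hi:.4f}]")
```

Each pass leaves an interval of uncertainty of width $2\delta$, so with 10 subintervals the bracket shrinks by a factor of five per pass at a cost of 11 function evaluations.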
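Successive-Equal Interval Algorithm

$$f(x) = 2 - 4x + \exp(x)$$

| Pass | x lower | x upper | delta |
|---|---|---|---|
| 1 | -5 | 5 | 1 |
| 2 | 0 | 2 | 0.2 |
| 3 | 1.2 | 1.6 | 0.04 |
| 4 | 1.36 | 1.44 | 0.008 |

Each x lower / x upper pair is the “interval” of uncertainty for that pass.

| Pass 1: x | f(x) | Pass 2: x | f(x) | Pass 3: x | f(x) | Pass 4: x | f(x) |
|---|---|---|---|---|---|---|---|
| -5.0000 | 22.0067 | 0.0000 | 3.0000 | 1.2000 | 0.5201 | 1.3600 | 0.456193 |
| -4.0000 | 18.0183 | 0.2000 | 2.4214 | 1.2400 | 0.4956 | 1.3680 | 0.455488 |
| -3.0000 | 14.0498 | 0.4000 | 1.8918 | 1.2800 | 0.4766 | 1.3760 | 0.455034 |
| -2.0000 | 10.1353 | 0.6000 | 1.4221 | 1.3200 | 0.4634 | 1.3840 | 0.454833 |
| -1.0000 | 6.3679 | 0.8000 | 1.0255 | 1.3600 | 0.4562 | 1.3920 | 0.454888 |
| 0.0000 | 3.0000 | 1.0000 | 0.7183 | 1.4000 | 0.4552 | 1.4000 | 0.455200 |
| 1.0000 | 0.7183 | 1.2000 | 0.5201 | 1.4400 | 0.4607 | 1.4080 | 0.455772 |
| 2.0000 | 1.3891 | 1.4000 | 0.4552 | 1.4800 | 0.4729 | 1.4160 | 0.456605 |
| 3.0000 | 10.0855 | 1.6000 | 0.5530 | 1.5200 | 0.4922 | 1.4240 | 0.457702 |
| 4.0000 | 40.5982 | 1.8000 | 0.8496 | 1.5600 | 0.5188 | 1.4320 | 0.459065 |
| 5.0000 | 130.4132 | 2.0000 | 1.3891 | 1.6000 | 0.5530 | 1.4400 | 0.460696 |

More on bounding phase I

Swann’s method:

$$\alpha^{(k+1)} = \alpha^{(k)} + 2^k\,\delta$$

$$\alpha^{(k+1)} = \alpha^{(k)} + 10^k\,\delta$$

Fibonacci sequence:

$$\alpha_q = \sum_{j=0}^{q} \delta\,(1.618)^j$$

Successive Alternate Equal Interval

Assume the bounding phase has found $\alpha_u$ and $\alpha_l$. The two interior points are

$$\alpha_a = \alpha_l + \tfrac{1}{3} I$$

$$\alpha_b = \alpha_l + \tfrac{2}{3} I = \alpha_u - \tfrac{1}{3} I$$

The minimum can be on either side of $\alpha_b$. We only have point values… not a line. But for sure it’s not in this region!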
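The alternate equal-interval step can be sketched as follows. This is a minimal illustration under stated assumptions: the function name `alternate_equal_interval` is made up for the example, a tie between the two interior values is handled by the `else` branch for simplicity, and the starting bracket [0.5, 2.618] with five iterations is borrowed from the course spreadsheet for $f(x) = 2 - 4x + e^x$.

```python
import math


def f(x):
    # Test function from the lecture's worked examples: f(x) = 2 - 4x + exp(x)
    return 2.0 - 4.0 * x + math.exp(x)


def alternate_equal_interval(func, lower, upper, iterations=5):
    """Shrink [lower, upper] by one third per iteration using two interior
    points at 1/3 and 2/3 of the current interval."""
    rows = []
    for _ in range(iterations):
        interval = upper - lower
        xa = lower + interval / 3.0        # same as (xu + 2*xl) / 3
        xb = lower + 2.0 * interval / 3.0  # same as (2*xu + xl) / 3
        fa, fb = func(xa), func(xb)        # two new evaluations every time
        rows.append((lower, xa, xb, upper, interval))
        if fa < fb:
            upper = xb  # a unimodal f cannot have its minimum beyond xb
        else:
            lower = xa  # a unimodal f cannot have its minimum before xa
    return lower, upper, rows


if __name__ == "__main__":
    lo, hi, rows = alternate_equal_interval(f, 0.5, 2.618)
    for k, (xl, xa, xb, xu, interval) in enumerate(rows, start=1):
        print(f"{k}: xl={xl:.7f} xa={xa:.7f} xb={xb:.7f} xu={xu:.7f} I={interval:.6f}")
    print(f"final bracket: [{lo:.7f}, {hi:.7f}]")
```

Because neither interior point of one iteration coincides with an interior point of the next, both function values have to be recomputed each time; the golden section method changes the point placement to avoid that.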
ME 482 Optimal Design, alt_eq_int.xls, RJE, Successive Alt Equal Int, 4/11/2012

Alternate Equal Interval Search for locating minimum of f(x)

x lower = 0.5, x upper = 2.618, $f(x) = 2 - 4x + \exp(x)$

| Iteration | xl | f(xl) | 1/3(xu+2xl) | f | 1/3(2xu+xl) | f | xu | f(xu) | Interval | Optimal x | Optimal f(x) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 0.5000000 | 1.6487213 | 1.2060000 | 0.5160975 | 1.9120000 | 1.1186085 | 2.6180000 | 5.2362796 | 2.118000 | 1.2060000 | 0.5160975 |
| 2 | 0.5000000 | 1.6487213 | 0.9706667 | 0.7570370 | 1.4413333 | 0.4609938 | 1.9120000 | 1.1186085 | 1.412000 | 1.4413333 | 0.4609938 |
| 3 | 0.9706667 | 0.7570370 | 1.2844444 | 0.4748826 | 1.5982222 | 0.5513460 | 1.9120000 | 1.1186085 | 0.941333 | 1.2844444 | 0.4748826 |
| 4 | 0.9706667 | 0.7570370 | 1.1798519 | 0.5344847 | 1.3890370 | 0.4548376 | 1.5982222 | 0.5513460 | 0.627556 | 1.3890370 | 0.4548376 |
| 5 | 1.1798519 | 0.5344847 | 1.3193086 | 0.4635997 | 1.4587654 | 0.4655851 | 1.5982222 | 0.5513460 | 0.418370 | 1.3193086 | 0.4635997 |

Requires two function evaluations per iteration.

Fibonacci Bounding

Figure 10.8 Initial bracketing of the minimum point in the golden section method.

Golden section

The interval $I$ is split into a part $\tau I$ and a part $(1 - \tau) I$. Requiring the same proportions after one reduction (leftside = rightside):

$$\tau\,[\tau I] = (1 - \tau)\,I$$

$$\tau\,[\tau] = (1 - \tau)$$

$$\tau^2 + \tau - 1 = 0$$

Figure 10.9 Graphic of a section partition.
$$\tau_{1,2} = \frac{-b \pm \sqrt{b^2 - 4ac}}{2a} = \frac{-1 \pm \sqrt{1^2 - 4(1)(-1)}}{2(1)} = \frac{-1 \pm \sqrt{5}}{2} = \frac{-1 \pm 2.236}{2}$$

$$\tau = 0.618, \; -1.618$$

Golden Section Example

ME 482 Optimal Design, alt_gold.xls, RJE, 4/11/2012

Golden Section Region Elimination Search for locating minimum of f(x)

x lower = 0.5, x upper = 2.618, 1-τ = 0.381966, τ = 0.618034, $f(x) = 2 - 4x + \exp(x)$

| Iteration | xl | f(xl) | xL+(1-τ) I | f | xL+ τ I | f | xu | f(xu) | Interval I | Optimal x | Optimal f(x) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 0.5 | 1.6487213 | 1.309004 | 0.4664682 | 1.808996 | 0.8683316 | 2.618 | 5.2362796 | 2.118 | 1.30900399 | 0.4664682 |
| 2 | 0.5 | 1.6487213 | 0.999992 | 0.7182921 | 1.309004 | 0.4664682 | 1.808996 | 0.8683316 | 1.308996 | 1.30900404 | 0.46646819 |
| 3 | 0.999992 | 0.7182921 | 1.309004 | 0.4664682 | 1.499984 | 0.4816814 | 1.808996 | 0.8683316 | 0.809004 | 1.30900401 | 0.4664682 |
| 4 | 0.999992 | 0.7182921 | 1.1909719 | 0.5263899 | 1.309004 | 0.4664682 | 1.499984 | 0.4816814 | 0.499992 | 1.30900403 | 0.46646819 |
| 5 | 1.1909719 | 0.5263899 | 1.309004 | 0.4664682 | 1.3819519 | 0.4548602 | 1.499984 | 0.4816814 | 0.3090121 | 1.38195187 | 0.45486022 |

Summary

• General optimization algorithms have two sub problems: search direction and step size
• The descent condition assures a correct (downhill) direction
• For line searches… in a local neighborhood… we can assume unimodal!
• Step size methods: analytical, region elimination
• Region Elimination (“interval reducing”)
  • Equal interval
  • Alternate equal interval
  • Golden Section
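The golden section method listed in the summary can be sketched as follows. It is an illustration under assumptions rather than the spreadsheet's actual formulas: the function name `golden_section` and the row layout are made up for the example, and ties between the two interior values fall into the `else` branch. The starting bracket [0.5, 2.618] and the five iterations follow the example table.

```python
import math

TAU = (math.sqrt(5.0) - 1.0) / 2.0  # positive root of tau^2 + tau - 1 = 0


def f(x):
    # Test function from the lecture's worked examples: f(x) = 2 - 4x + exp(x)
    return 2.0 - 4.0 * x + math.exp(x)


def golden_section(func, lower, upper, iterations=5):
    """Shrink [lower, upper] by the factor TAU per iteration, reusing one
    interior point (and its function value) from the previous iteration."""
    interval = upper - lower
    xa = lower + (1.0 - TAU) * interval
    xb = lower + TAU * interval
    fa, fb = func(xa), func(xb)
    rows = []
    for _ in range(iterations):
        rows.append((lower, xa, xb, upper, upper - lower))
        if fa < fb:
            # Minimum lies in [lower, xb]; the old xa becomes the new xb.
            upper = xb
            xb, fb = xa, fa
            xa = lower + (1.0 - TAU) * (upper - lower)
            fa = func(xa)
        else:
            # Minimum lies in [xa, upper]; the old xb becomes the new xa.
            lower = xa
            xa, fa = xb, fb
            xb = lower + TAU * (upper - lower)
            fb = func(xb)
    return lower, upper, rows


if __name__ == "__main__":
    lo, hi, rows = golden_section(f, 0.5, 2.618)
    for k, (xl, xa, xb, xu, interval) in enumerate(rows, start=1):
        print(f"{k}: xl={xl:.7f} xa={xa:.7f} xb={xb:.7f} xu={xu:.7f} I={interval:.7f}")
    print(f"final bracket: [{lo:.7f}, {hi:.7f}]")
```

After the first iteration only one new function evaluation is needed per reduction, because the surviving interior point already sits at the golden ratio position of the shortened interval.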