Lecture 7 -- Sorting algorithms


DCO20105 Data structures and algorithms

Lecture 7: Big-O analysis, Sorting Algorithms

Big-O analysis of different ways of multiplication

Sorting algorithms: selection sort, bubble sort, insertion sort, radix sort, partition sort, merge sort

Comparison of different sorting algorithms

-- By Rossella Lau

Rossella Lau

Lecture 7, DCO20105, Semester A,2005-6

Performance re-visit

For multiplication, we can use (at least) three different ways:

The one we learned in primary school

bFunction(n, m) in slide 9 of Lecture 6

funny(a, b) in slide 10 of Lecture 6

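/* n * m computed as n repeated additions of m (assumes n >= 0) */
int bFunction(int n, int m)
{
    if (!n) return 0;
    return bFunction(n - 1, m) + m;
}

/* a * b computed by halving a and doubling b (assumes a >= 1) */
int funny(int a, int b)
{
    if (a == 1) return b;
    return a & 1 ? funny(a >> 1, b << 1) + b    /* odd a: add b */
                 : funny(a >> 1, b << 1);
}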


Performance analysis

The traditional way

  Memory: memorizing the times table is required

  Number of operations: #(n) * #(m) multiplications (memory recalls) and #(n) additions

bFunction(n, m)

  Memory: memorizing the times table is not required

  Number of operations: n additions

funny(a, b)

  Memory: memorizing is not required for the binary system (in a computer)

  Number of operations: 2 * #b(a) shift operations and, in the worst case, #b(a) additions

#(n) is the number of digits of n; #b(a) is the number of digits of a in binary


Execution time vs memory

The traditional multiplication has the fewest operations, but it requires the most memory, at $O(\log_{10} n)$

bFunction() does not require a memorized table, but it spends a terrible amount of time getting the result: $O(n)$

funny() does not require a memorized table either, and it needs only a few more operations, at $O(\log_2 n)$

The traditional way may have fewer operations, but it is hard to say whether it really outperforms funny(), since a memory load may not be faster than a shift operation
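To see the difference between $O(n)$ and $O(\log_2 n)$ concretely, here is a minimal sketch in C that counts operations. The counters and the names bFunctionCounted and funnyCounted are hypothetical additions for illustration; the logic follows bFunction() and funny() from Lecture 6. Because funny() stops at a == 1, its exact counts are 2(#b(a) - 1) shifts and at most #b(a) - 1 additions, slightly under the estimates in the table.

```c
/* Hypothetical operation counters, added only for this illustration. */
static long additions = 0;
static long shifts = 0;

/* bFunction() from Lecture 6 with a counter: n * m as n additions of m.
   Assumes n >= 0. */
int bFunctionCounted(int n, int m)
{
    if (!n) return 0;
    additions++;                           /* one addition per call with n > 0 */
    return bFunctionCounted(n - 1, m) + m;
}

/* funny() from Lecture 6 with counters: a * b by halving a and doubling b.
   Assumes a >= 1. */
int funnyCounted(int a, int b)
{
    if (a == 1) return b;
    shifts += 2;                           /* a >> 1 and b << 1 */
    if (a & 1) {
        additions++;                       /* odd a: add the current b */
        return funnyCounted(a >> 1, b << 1) + b;
    }
    return funnyCounted(a >> 1, b << 1);
}
```

For example, with the counters reset, bFunctionCounted(13, 7) returns 91 after 13 additions, while funnyCounted(13, 7) returns 91 after 6 shifts and 2 additions (13 is 1101 in binary, so #b(13) = 4).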


Ordering of data

In order to search for a record efficiently, records are stored in the order of their key values

A key is a field, or a group of fields, of a record that uniquely identifies the record in a file

Usually, only the key values are stored in memory, and the corresponding record is loaded into memory only when it is necessary

The key values, therefore, are usually sorted in a special order to allow efficient searching
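A minimal sketch of the idea, assuming a hypothetical in-memory index of (key, file offset) pairs: the index is sorted once with the C standard library's qsort, and afterwards bsearch finds a key by binary search, so only the matching record would need to be loaded from the file. The struct IndexEntry and the functions buildIndex and findOffset are our own names for illustration.

```c
#include <stdlib.h>

/* A hypothetical index entry: the key plus the position of the full
   record in a file (the record itself stays on disk until needed). */
struct IndexEntry {
    int  key;
    long offset;
};

/* Comparison function for qsort and bsearch: orders entries by key. */
static int compareKeys(const void *a, const void *b)
{
    const struct IndexEntry *x = a, *y = b;
    return (x->key > y->key) - (x->key < y->key);
}

/* Sort the index once so that every later lookup can use binary search. */
void buildIndex(struct IndexEntry index[], size_t n)
{
    qsort(index, n, sizeof index[0], compareKeys);
}

/* Returns the file offset of the record with the given key, or -1 if absent. */
long findOffset(const struct IndexEntry index[], size_t n, int key)
{
    struct IndexEntry probe = { key, 0 };
    const struct IndexEntry *hit =
        bsearch(&probe, index, n, sizeof index[0], compareKeys);
    return hit ? hit->offset : -1L;
}
```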


Classification of sorting methods

Comparison-based methods

  Insertion sorts

  Selection sorts

  Heapsort (tree sorting): in a future lesson

  Exchange sorts

    • Bubble sort

    • Quick sort

  Merge sorts

Distribution methods: Radix sorting


Selection sort

Selection: choose the smallest element from a list and place it in the 1st position.

The process runs from the first element to the second-to-last element of the list; for each element, the “selection” is applied to the sub-list starting from the element being processed.

See Ford's textbook slides 2-9 in Chapter 3
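A minimal sketch of selection sort in C, following the description above; the name selectionSort is our own, and Ford's version may differ in detail. For each position i, the inner loop selects the smallest element of x[i..n-1], and a single swap places it at position i.

```c
/* Selection sort: for positions 0 .. n-2, select the smallest element of
   the sub-list x[i..n-1] and swap it into position i. */
void selectionSort(int x[], int n)
{
    int i, j, smallest, temp;
    for (i = 0; i < n - 1; i++) {
        smallest = i;                        /* index of the smallest so far */
        for (j = i + 1; j < n; j++)
            if (x[j] < x[smallest])
                smallest = j;
        if (smallest != i) {                 /* place it in position i */
            temp = x[i];
            x[i] = x[smallest];
            x[smallest] = temp;
        }
    }
}
```

Whatever the input order, it always makes n(n-1)/2 comparisons but at most n-1 swaps.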


Bubble sort

Pass through the array n-1 times, where n is the number of elements in the array

For each pass:

compare each element in the array with its successor

interchange the two elements if they are not in order

The algorithm:


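#define SWAP(a, b) do { int t_ = (a); (a) = (b); (b) = t_; } while (0)

void bubble(int x[], int n)
{
    int i, j;
    for (i = 0; i < n - 1; i++)              /* n-1 passes */
        for (j = 0; j < n - 1; j++)          /* compare each element with its successor */
            if (x[j] > x[j + 1])
                SWAP(x[j], x[j + 1]);        /* interchange if out of order */
}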


An example trace of bubble sort

Given data sequence:

25 57 48 37 12 92 86 33

The first pass (the array after each comparison of x[j] with x[j+1]):

25 57 48 37 12 92 86 33

25 48 57 37 12 92 86 33

25 48 37 57 12 92 86 33

25 48 37 12 57 92 86 33

25 48 37 12 57 92 86 33

25 48 37 12 57 86 92 33

25 48 37 12 57 86 33 92

Subsequent passes:

Pass2: 25 37 12 48 57 33 86 92

Pass3: 25 12 37 48 33 57 86 92

Pass4: 12 25 37 33 48 57 86 92

Pass5: 12 25 33 37 48 57 86 92

Pass6: 12 25 33 37 48 57 86 92

Pass7: 12 25 33 37 48 57 86 92


Improvement can be made

At pass i (counting from 0, as in the code), the last i elements are already in their proper positions: the first pass places the largest element at the end of the array, the second pass places the second-largest element just before it, and so on. The comparisons therefore only need to cover x[0] to x[n-i-1]

The array has already been sorted at the fifth iteration, and the sixth and seventh are redundant

Therefore, once no exchange is required in an iteration, the array is already sorted and the subsequent iterations are redundant


The improved algorithm for bubble sort

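void bubble1(int x[], int n)
{
    int i, j;
    int exchange = 1;                        /* TRUE: force the first pass */
    for (i = 0; i < n - 1 && exchange; i++) {
        exchange = 0;                        /* FALSE until an exchange happens */
        for (j = 0; j < n - i - 1; j++)      /* the last i elements are in place */
            if (x[j] > x[j + 1]) {
                exchange = 1;
                SWAP(x[j], x[j + 1]);        /* SWAP exchanges its arguments, as in bubble() */
            } /* end if */
    } /* end for i */
}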


Performance considerations of bubble sort

For the first version, each of the (n-1) passes requires (n-1) comparisons, so the total number of comparisons is $(n-1)^2 = n^2 - 2n + 1$, i.e., $O(n^2)$

For the improved version, k (< n) passes require $(n-1) + (n-2) + \cdots + (n-k)$ comparisons, a total of $(2kn - k^2 - k)/2$. However, the average k is $O(n)$, yielding an overall complexity of $O(n^2)$, and the overhead introduced (setting and checking exchange) should also be considered

It requires only a little additional space
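The formulas can be checked on the example data (n = 8) with a sketch of bubble1 that counts passes and comparisons; bubble1Counted and its two output parameters are hypothetical additions for illustration. The first version always makes $7 \times 7 = 49$ comparisons. The improved version stops after k = 6 passes (the sixth pass makes no exchange, which is how it detects that the array is sorted), giving $(2kn - k^2 - k)/2 = (96 - 36 - 6)/2 = 27$ comparisons.

```c
/* Improved bubble sort (as bubble1) with hypothetical counters for the
   number of passes and the number of comparisons x[j] > x[j+1]. */
void bubble1Counted(int x[], int n, int *passes, int *comparisons)
{
    int i, j, temp;
    int exchange = 1;                         /* force the first pass */
    *passes = 0;
    *comparisons = 0;
    for (i = 0; i < n - 1 && exchange; i++) {
        exchange = 0;
        (*passes)++;
        for (j = 0; j < n - i - 1; j++) {     /* the last i elements are in place */
            (*comparisons)++;
            if (x[j] > x[j + 1]) {
                exchange = 1;
                temp = x[j];
                x[j] = x[j + 1];
                x[j + 1] = temp;
            }
        }
    }
}
```

On input that is already sorted, the same function stops after a single pass of n-1 comparisons.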


Insertion sort

Insert each item, one by one, into its proper position in the previously sorted part of the data

It is similar to repeatedly picking up playing cards and inserting them into the proper position in a partial hand of cards


An example trace of insertion sort

25 57 48 37 12 92 86 33    (initial sequence)

25 57 48 37 12 92 86 33    insert 57

25 48 57 37 12 92 86 33    insert 48

25 37 48 57 12 92 86 33    insert 37

12 25 37 48 57 92 86 33    insert 12

12 25 37 48 57 92 86 33    insert 92

12 25 37 48 57 86 92 33    insert 86

12 25 33 37 48 57 86 92    insert 33


The algorithm of insertion sort

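void insertsort(int x[], int n)
{
    int i, k, y;
    for (k = 1; k < n; k++) {
        y = x[k];                            /* next element to insert */
        /* shift the elements of the sorted part x[0..k-1] larger than y */
        for (i = k - 1; i >= 0 && y < x[i]; i--)
            x[i + 1] = x[i];
        x[i + 1] = y;                        /* put y into the gap */
    } /* end for k */
}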


The checking of i >= 0 is time consuming. Setting a sentinel at the beginning of the array (x[0]) prevents the scan for y's position from going beyond the array:

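#include <limits.h>

/* m is n+1: the data are stored in x[1..n] and x[0] holds the sentinel */
void insertsort(int x[], int m)
{
    int i, k, y;
    x[0] = INT_MIN;                          /* sentinel: y < x[0] is never true */
    for (k = 2; k < m; k++) {
        y = x[k];
        for (i = k - 1; y < x[i]; i--)       /* stops at x[0] at the latest */
            x[i + 1] = x[i];
        x[i + 1] = y;
    } /* end for k */
}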

Lecture 7, DCO20105, Semester A,2005-6

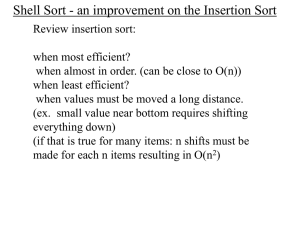

Performance analysis of insertion sort

If the original sequence is already in order, only one comparison is made on each pass ==> $O(n)$

If the original sequence is in reverse order, pass k requires k comparisons, $1 + 2 + \cdots + (n-1) = n(n-1)/2$ in total ==> $O(n^2)$

The complexity is from $O(n)$ to $O(n^2)$

It requires little additional space
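To check these two cases, here is a sketch of insertion sort with a comparison counter; insertionComparisons is a hypothetical name, and only the comparisons of y with x[i] are counted. For n = 8, a sorted input needs 7 comparisons (one per pass), while a reversed input needs 1 + 2 + ... + 7 = 28.

```c
/* Insertion sort that returns the number of key comparisons y < x[i]. */
long insertionComparisons(int x[], int n)
{
    long count = 0;
    int i, k, y;
    for (k = 1; k < n; k++) {
        y = x[k];
        for (i = k - 1; i >= 0; i--) {
            count++;                         /* one comparison of y with x[i] */
            if (!(y < x[i]))
                break;                       /* found the place for y */
            x[i + 1] = x[i];                 /* shift the larger element right */
        }
        x[i + 1] = y;
    }
    return count;
}
```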


Quick sort

It is also called partition exchange sort

In each step, the original sequence is partitioned into 3 parts:

a. all the items less than the partitioning element

b. the partitioning element in its final position

c. all the items greater than the partitioning element

The partitioning process then continues in the left and right partitions


The partitioning in each step of quicksort

Pick one of the elements as the partitioning element, p, usually the first element of the sequence

Find the proper position for p while partitioning the sequence into 3 parts:

a) employ two indexes, down and up

b) down goes from left to right to find an element greater than p

c) up goes from right to left to find an element less than or equal to p

d) the elements found by down and up are exchanged

e) repeat until down and up meet or pass each other

f) up then points to the proper position of p

g) exchange p with the element pointed to by up
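Steps a) to g) can be sketched as a stand-alone C function that performs one partitioning step and returns the final position of p; the name partition is our own. One assumption is added: down is not allowed to move past right, so the scan stays inside the array even when p is the largest element. On the example data 25 57 48 37 12 92 86 33 it produces 12 25 48 37 57 92 86 33 with p at index 1, matching the first step of the example trace.

```c
/* One partitioning step: p = x[left]; down skips elements <= p, up skips
   elements > p, and out-of-place pairs are exchanged until the indexes
   meet or cross. Returns the final position of p. */
int partition(int x[], int left, int right)
{
    int p = x[left];
    int down = left, up = right + 1, temp;
    while (down < up) {
        while (down < right && x[++down] <= p)   /* bound check keeps down in range */
            ;
        while (x[--up] > p)                       /* stops at x[left] == p at the latest */
            ;
        if (down < up) {                          /* d) exchange the pair found */
            temp = x[down];
            x[down] = x[up];
            x[up] = temp;
        }
    }
    x[left] = x[up];                              /* g) exchange p with x[up] */
    x[up] = p;
    return up;
}
```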


An example trace of quicksort

25 57 48 37 12 92 86 33    p = 25; down stops at 57, up stops at 12

25 12 48 37 57 92 86 33    57 and 12 are exchanged

25 12 48 37 57 92 86 33    down stops at 48, up stops at 12: they have passed each other

12 25 48 37 57 92 86 33    p is exchanged with the element pointed to by up

(12) 25 (48 37 57 92 86 33)

Subsequent processes:

12 25 (48 37 57 92 86 33)      p = 48

12 25 (48 37 33 92 86 57)      57 and 33 are exchanged

12 25 (48 37 33 92 86 57)      down stops at 92, up stops at 33

12 25 (33 37) 48 (92 86 57)    48 is exchanged with 33

12 25 (33 37) 48 (92 86 57)    partition (33 37) with p = 33 and (92 86 57) with p = 92

12 25 () 33 (37) 48 (57 86) 92 ()

12 25 33 37 48 (57 86) 92

12 25 33 37 48 () 57 (86) 92

12 25 33 37 48 57 86 92


The algorithm for quicksort

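void quickSort(int x[], int left, int right)
{
    int down, up, partition;
    down = left; up = right + 1; partition = x[left];
    while (down < up) {
        while (down < right && x[++down] <= partition)  /* down < right keeps down in the array */
            ;
        while (x[--up] > partition)                     /* stops at x[left] at the latest */
            ;
        if (down < up) SWAP(x[down], x[up]);            /* SWAP as in bubble() */
    }
    x[left] = x[up];
    x[up] = partition;                                  /* p is in its final position */
    if (left < up - 1)
        quickSort(x, left, up - 1);                     /* left partition */
    if (down < right)
        quickSort(x, down, right);                      /* right partition */
}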


Performance considerations of quicksort

Quicksort got its name because it quickly puts an element into its proper position, employing two indexes to speed up the partitioning process and to minimize the exchanges

Each pass reduces the comparisons by about a half (on average), so the total number of comparisons is about $O(n \log_2 n)$

It requires space for the recursion, or a stack for an iterative version, of about $O(\log_2 n)$


Merge

Merge means to combine two or more sorted sequences into another sorted sequence

For example, two sorted sequences are merged as follows (the output so far is on the right):

32 45 78 90 92 | 25 30 52 88 98 |

32 45 78 90 92 | 25 30 52 88 98 | 25

32 45 78 90 92 | 25 30 52 88 98 | 25 30

32 45 78 90 92 | 25 30 52 88 98 | 25 30 32

32 45 78 90 92 | 25 30 52 88 98 | 25 30 32 45

32 45 78 90 92 | 25 30 52 88 98 | 25 30 32 45 52

32 45 78 90 92 | 25 30 52 88 98 | 25 30 32 45 52 78

32 45 78 90 92 | 25 30 52 88 98 | 25 30 32 45 52 78 88

32 45 78 90 92 | 25 30 52 88 98 | 25 30 32 45 52 78 88 90

32 45 78 90 92 | 25 30 52 88 98 | 25 30 32 45 52 78 88 90 92

32 45 78 90 92 | 25 30 52 88 98 | 25 30 32 45 52 78 88 90 92 98
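The merging steps can be sketched in C as follows; the function name merge and its parameters are our own choices for illustration. At each step the smaller of the two front elements is copied to the output, and once one sequence is exhausted the rest of the other is copied unchanged (in the example, 98 is copied that way).

```c
/* Merge sorted arrays a[0..na-1] and b[0..nb-1] into c[0..na+nb-1]. */
void merge(const int a[], int na, const int b[], int nb, int c[])
{
    int i = 0, j = 0, k = 0;
    while (i < na && j < nb)                 /* copy the smaller front element */
        c[k++] = (a[i] <= b[j]) ? a[i++] : b[j++];
    while (i < na)                           /* b is exhausted: copy the rest of a */
        c[k++] = a[i++];
    while (j < nb)                           /* a is exhausted: copy the rest of b */
        c[k++] = b[j++];
}
```

Taking from a when the front elements are equal keeps equal keys in their original order, which is why merge sort is stable.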


Merge sort

It employs the merging technique in the following way:

1. Divide the sequence into n parts of one element each

2. Merge adjacent parts, yielding a sequence of n/2 parts

3. Merge adjacent parts again, yielding a sequence of n/4 parts

......

The process goes on until the sequence becomes 1 part
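A sketch of these steps as a bottom-up merge sort in C, assuming an int array and a temporary buffer of n elements (the O(n) additional space of merge sort). Parts of width 1, 2, 4, ... are merged in turn, so for n = 8 it makes $\log_2 8 = 3$ passes. The name mergeSort and its pass-count return value are our own.

```c
#include <stdlib.h>
#include <string.h>

/* Bottom-up merge sort: merge adjacent parts of width 1, 2, 4, ... until
   one part remains. Returns the number of passes, or -1 if out of memory. */
int mergeSort(int x[], int n)
{
    int passes = 0, width, lo, mid, hi, i, j, k;
    int *tmp;
    if (n < 2)
        return 0;
    tmp = malloc((size_t)n * sizeof *tmp);
    if (tmp == NULL)
        return -1;
    for (width = 1; width < n; width *= 2, passes++) {
        for (lo = 0; lo < n; lo += 2 * width) {
            mid = lo + width < n ? lo + width : n;          /* end of left part */
            hi = lo + 2 * width < n ? lo + 2 * width : n;   /* end of right part */
            i = lo; j = mid; k = lo;
            while (i < mid && j < hi)                        /* merge the two parts */
                tmp[k++] = x[i] <= x[j] ? x[i++] : x[j++];
            while (i < mid) tmp[k++] = x[i++];
            while (j < hi)  tmp[k++] = x[j++];
        }
        memcpy(x, tmp, (size_t)n * sizeof *x);               /* the next pass merges these parts */
    }
    free(tmp);
    return passes;
}
```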


An example of merge sort

8 parts  25 | 57 | 48 | 37 | 12 | 92 | 86 | 33

merge    25 57 | 37 48 | 12 92 | 33 86

4 parts  25 57 | 37 48 | 12 92 | 33 86

merge    25 37 48 57 | 12 33 86 92

2 parts  25 37 48 57 | 12 33 86 92

merge    12 25 33 37 48 57 86 92


Performance considerations of merge sort

There are only $\log_2 n$ passes, and each pass processes all n elements, yielding a complexity of $O(n \log n)$

It never requires more than about $n \log_2 n$ comparisons, while quicksort may require $O(n^2)$ in the worst case

However, it requires about double the assignment statements of quicksort

It also requires more additional space, about $O(n)$, than quicksort's $O(\log_2 n)$


Radix Sort

It is based on the values of the actual digits in the positional representation of the keys

Starting from the least significant digit and moving to the most significant digit:

define 10 vectors, one for each digit, and number them v0 to v9 for digits 0 to 9 respectively

scan the data sequence once and add each element to the vector for its current digit

form the new data sequence: remove the elements of each vector from the beginning, one by one, until it is empty, going from v0 to v9

After the above actions have been applied to every digit, the new data sequence is the sorted sequence!
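A minimal sketch of the steps above in C, assuming non-negative int keys. Each of the ten vectors v0 to v9 is a plain array with room for all n keys, which is where the "10 times the memory" cost comes from. Because elements are appended in scan order and removed from the front of each vector, keys with the same current digit keep the order produced by the previous pass, which is what makes starting from the least significant digit work. The name radixSort and its pass-count return value are our own.

```c
#include <stdlib.h>

/* LSD radix sort for non-negative ints with 10 vectors, one per decimal
   digit. Returns the number of passes, or -1 if out of memory. */
int radixSort(int x[], int n)
{
    int *v[10];                  /* v[d] holds the keys whose current digit is d */
    int size[10];                /* number of keys currently in v[d] */
    int d, i, k, passes = 0, maxKey = 0;
    long long place;             /* 1, 10, 100, ...: the digit being examined */

    for (i = 0; i < n; i++)
        if (x[i] > maxKey) maxKey = x[i];
    for (d = 0; d < 10; d++)
        v[d] = malloc((size_t)(n > 0 ? n : 1) * sizeof(int));
    for (d = 0; d < 10; d++)
        if (v[d] == NULL) {
            for (i = 0; i < 10; i++) free(v[i]);
            return -1;
        }

    for (place = 1; maxKey / place > 0; place *= 10, passes++) {
        for (d = 0; d < 10; d++) size[d] = 0;
        for (i = 0; i < n; i++) {            /* scan once: append x[i] to its vector */
            d = (int)(x[i] / place % 10);
            v[d][size[d]++] = x[i];
        }
        k = 0;
        for (d = 0; d < 10; d++)             /* empty v0, v1, ..., v9 in order */
            for (i = 0; i < size[d]; i++)
                x[k++] = v[d][i];
    }
    for (d = 0; d < 10; d++) free(v[d]);
    return passes;
}
```

On the example data (largest key 92) it makes two passes, one per decimal digit.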


An example of radix sort

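25 57 48 37 12 92 86 33

Pass 1 (least significant digit):

v2: 12 92
v3: 33
v5: 25
v6: 86
v7: 57 37
v8: 48

12 92 33 25 86 57 37 48

Pass 2 (tens digit):

v1: 12
v2: 25
v3: 33 37
v4: 48
v5: 57
v8: 86
v9: 92

12 25 33 37 48 57 86 92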


Performance considerations of radix sort

It does not require any comparison between data

It requires one pass per digit, i.e., about $\log_{10} m$ passes, where m is the largest key value: $O(n \log_{10} m)$, or $O(n)$ when $\log_{10} m$ is treated as a constant

It requires 10 times the memory of the numbers

It seems that radix sort has the “best” performance; however, it is not popularly used because

  It consumes a terrible amount of memory

  $\log_{10} m$ depends on the number of digits (length) of a key and may not be treated as a small constant when the key length is long


The real life sort for vector based data

Although quicksort is known to be the fastest in many cases, a library usually does not use quicksort directly as its sort method

Usually, a carefully designed library implements its sort method with both quicksort and insertion sort

Quicksort divides the data into partitions until a partition has about 8 to 16 elements; insertion sort is then applied to the partition, since such partitions are small and usually near being sorted
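A sketch of such a combination, assuming a hypothetical cutoff of 16 elements (the CUTOFF constant) and reusing the lecture's partitioning scheme. Rather than calling insertion sort on each small partition separately, this sketch leaves the small partitions unsorted and runs a single insertion sort over the whole array at the end, a variant with the same effect: every element is already inside its final small partition, so each one moves fewer than CUTOFF positions. The names quickSortCoarse and hybridSort are our own, and real libraries differ in the details.

```c
#define CUTOFF 16   /* hypothetical threshold within the 8-16 range */

/* Quicksort with the lecture's partitioning, but partitions of CUTOFF
   elements or fewer are left unsorted. */
static void quickSortCoarse(int x[], int left, int right)
{
    int down, up, p, temp;
    if (right - left + 1 <= CUTOFF)
        return;                                  /* small partition: leave it */
    p = x[left]; down = left; up = right + 1;
    while (down < up) {
        while (down < right && x[++down] <= p)
            ;
        while (x[--up] > p)
            ;
        if (down < up) { temp = x[down]; x[down] = x[up]; x[up] = temp; }
    }
    x[left] = x[up];
    x[up] = p;                                   /* p is in its final position */
    quickSortCoarse(x, left, up - 1);
    quickSortCoarse(x, up + 1, right);
}

/* Coarse quicksort, then one insertion sort over the nearly sorted array. */
void hybridSort(int x[], int n)
{
    int i, k, y;
    quickSortCoarse(x, 0, n - 1);
    for (k = 1; k < n; k++) {
        y = x[k];
        for (i = k - 1; i >= 0 && y < x[i]; i--)
            x[i + 1] = x[i];
        x[i + 1] = y;
    }
}
```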


The real life sort for non vector data

Quicksort requires a container with random access

A container such as a linked list does not support random access, so the quicksort above, which indexes x[down] and x[up], cannot be applied to it

Merge sort is preferred instead
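A minimal sketch of merge sort on a singly linked list, assuming a hypothetical struct Node with an int key. The list is split at its midpoint with a slow and a fast pointer, which needs only sequential access, and the sorted halves are merged by relinking nodes, so no random access and no extra nodes are needed. The names listMergeSort and mergeLists are ours.

```c
#include <stddef.h>

/* A hypothetical singly linked list node. */
struct Node {
    int key;
    struct Node *next;
};

/* Merge two sorted lists by relinking their nodes. */
static struct Node *mergeLists(struct Node *a, struct Node *b)
{
    struct Node head, *tail = &head;          /* dummy head simplifies appending */
    while (a != NULL && b != NULL) {
        if (a->key <= b->key) { tail->next = a; a = a->next; }
        else                  { tail->next = b; b = b->next; }
        tail = tail->next;
    }
    tail->next = (a != NULL) ? a : b;         /* append the remaining list */
    return head.next;
}

/* Split the list in half with slow/fast pointers, sort each half, merge. */
struct Node *listMergeSort(struct Node *list)
{
    struct Node *slow, *fast, *second;
    if (list == NULL || list->next == NULL)
        return list;
    slow = list;
    fast = list->next;
    while (fast != NULL && fast->next != NULL) {   /* fast moves two nodes per step */
        slow = slow->next;
        fast = fast->next->next;
    }
    second = slow->next;                      /* second half starts after slow */
    slow->next = NULL;
    return mergeLists(listMergeSort(list), listMergeSort(second));
}
```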


Sample timing of sort methods

Ford's prg15_2.cpp and d_sort.h

Timing for some sample runs: timeSort.out


Summary

Bubble sort and insertion sort have a complexity of $O(n^2)$, but insertion sort is still preferred for short data streams

Partition sort (quicksort, on average) and merge sort have a lower complexity, $O(n \log n)$

Radix sort seems to be $O(n)$, but it consumes more memory and may depend on the key length

Many times, the trade-off is space


Reference

Ford: 3.1, 4.4, 8.3, 15.1

Data Structures Using C and C++ by Yedidyah Langsam, Moshe J. Augenstein & Aaron M. Tenenbaum: Chapter 6

Example programs: Ford: prg15_2.cpp, d_sort.h

-- END --
