Speech Project Week 6

Special Research Project (4)

HDecode_live

Prof. Lin-Shan Lee, TA. Yun-Chiao Li


Part 1

Additional Information about Kaldi

Kaldi – some practices (1/2)


In 03.01:

Try changing the total number of Gaussians by modifying “totgauss”

In 04.01:

Try changing the number of leaves of the decision tree by modifying “numleaves”

Try changing the total number of Gaussians by modifying “totgauss”

Run through the scripts and observe the changes in performance and in the optimal language model weight

Kaldi – some practices (2/2)


Some tips:

You can increase “numleaves” up to around 4500

Keeping the total number of Gaussians below 20 times “numleaves” is more stable

Try to modify other parameters if you have time:

numiters: number of training iterations

realign_iters: the iterations at which the features are realigned to the HMM states
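The two rules of thumb above can be checked before you edit the scripts. This is a minimal sketch: `check_tree_params` is a hypothetical helper, not part of the Kaldi recipe, and the 4500 and 20× limits are only the guidelines from this slide, not limits that Kaldi enforces.

```bash
#!/usr/bin/env bash
# check_tree_params NUMLEAVES TOTGAUSS (hypothetical helper)
# Encodes the slide's rules of thumb:
#   numleaves up to around 4500, and totgauss < 20 * numleaves.
check_tree_params() {
  numleaves=$1
  totgauss=$2
  max_gauss=$((20 * numleaves))
  if [ "$numleaves" -gt 4500 ]; then
    echo "warn: numleaves=$numleaves is above ~4500"
    return 1
  fi
  if [ "$totgauss" -ge "$max_gauss" ]; then
    echo "warn: totgauss=$totgauss is not below $max_gauss (20 x numleaves)"
    return 1
  fi
  echo "ok: numleaves=$numleaves totgauss=$totgauss (limit $max_gauss)"
}

# Illustrative candidate settings only; $pair is split into two arguments on purpose.
for pair in "2500 15000" "4000 90000" "5000 50000"; do
  check_tree_params $pair || true
done
```

Run it on each (numleaves, totgauss) pair you plan to try; a pair that prints a warning falls outside the range this slide recommends.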


Part 2

Simple Live Recognition System (HDecode_live)

Simple Recognition System


Make sure the microphone is functional

Usage is the same as HDecode (hdecode.sh)

HDecode -> HDecode_live

Make sure HDecode, record and HCopy are in the same directory

Works on Cygwin

Uses a bigram language model

-a 0.5 (acoustic model weight)

-s 8.0 (language model weight)

-t 75.0 (beamwidth)

You can change these parameters and see what will happen
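As a rough sketch of what the two weights do, assuming the usual log-linear combination (HDecode_live's exact internals may differ), the decoder picks the word sequence W whose weighted acoustic and language model log-probabilities, given the observations O, score highest:

```latex
\hat{W} = \arg\max_{W} \Big[\, a \log p(O \mid W) + s \log P(W) \,\Big],
\qquad a = 0.5,\; s = 8.0
```

Raising s makes the decoder rely more on the bigram LM relative to the acoustics. The beam width -t 75.0 is a pruning threshold: partial hypotheses scoring more than 75.0 below the current best are dropped, so a smaller beam decodes faster but risks discarding the correct path.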

Setup


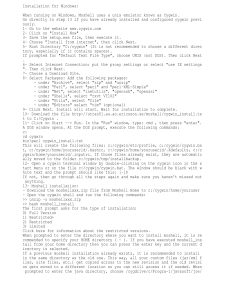

Cygwin

Cygwin is used to provide a Unix-like environment on Windows

Install Cygwin

http://cygwin.com/setup-x86.exe

(x86 version only!)

Leave all the installer options at their defaults and click Next

Download /share/HDecode_live/ to C:\cygwin\home\youraccount\HDecode_live

• There are two sets of recognition systems:

• Lecture
  • AM here is trained on Prof. Lee’s voice
  • AM / tiedlist: am.lecture.speakerdependent.mmf / tiedlist.news
  • LM: trained by yourself
  • Lexicon: lexicon.lecture

• News
  • AM here is trained on the voices of several news reporters
  • AM / tiedlist: am.news.mmf / tiedlist.news
  • LM: trained by yourself
  • Lexicon: lexicon.news

• The News system provides better performance

Acoustic Model


Training an AM with HTK is time-consuming

We’ve trained it for you

final.mmf is the speaker-dependent AM trained on Prof. Lee’s voice

Therefore, it is suited to recognizing the professor’s voice

It is the same as what we used in Kaldi


Acoustic Model Example

Here is the HMM for each phone

Here is the Gaussian mixture model for each state
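For reference, the standard form of the output density held by each HMM state: state j with M mixture components has mixture weights c_{jm}, means μ_{jm} and covariances Σ_{jm}, and o_t is the feature vector at frame t. HTK models like these typically use diagonal covariances, although the slide does not state this.

```latex
b_j(\mathbf{o}_t) = \sum_{m=1}^{M} c_{jm}\, \mathcal{N}\!\left(\mathbf{o}_t;\, \boldsymbol{\mu}_{jm}, \boldsymbol{\Sigma}_{jm}\right),
\qquad c_{jm} \ge 0, \quad \sum_{m=1}^{M} c_{jm} = 1
```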

Language model training (1/2)


Remove the first column (the utterance IDs) of material/train.text and save the result as train.lecture

Hint: vim visual block mode (Ctrl-V) + “d”

train.lecture:

OKAY [A66E] [A655][A6EC] [A6AD]

[B36F][AAF9][BDD2] [AC4F] [BCC6][A6EC] [BB79][ADB5][B342][B27A] EMPH_A

[A8BA] [B36F][AC4F] [A8E2] [ADD3] [A5D8][AABA]
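Instead of vim, the first column can also be stripped with standard command-line tools. A minimal sketch, assuming each line of material/train.text is an utterance ID, a single space, then the transcription (as in the example above); `strip_utt_ids` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# strip_utt_ids IN OUT (hypothetical helper): drop the first space-separated
# field of each line, i.e. the utterance ID, and keep the transcription.
# cut works on bytes, and Big5 never uses the space byte (0x20) inside a
# character, so the Chinese text passes through unchanged.
strip_utt_ids() {
  cut -d ' ' -f 2- "$1" > "$2"
}

# Run only if the course data is present in the current folder.
if [ -f material/train.text ]; then
  strip_utt_ids material/train.text train.lecture
fi
```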

Change encoding:

/share/tool/chencoding -f ascii -t utf8 train.lecture > train.lecture.utf8

OKAY 好 各位 早

這門課 是 數位 語音處理 EMPH_A

那 這是 兩 個 目的
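If chencoding is not available outside the course machines, the standard iconv tool can do a similar conversion. This sketch assumes the original file is Big5-encoded, which matches the example above ([A66E] becomes 好); it is an alternative, not the tool the course uses, and `to_utf8` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Assumption: the input is Big5 (e.g. the byte pair A6 6E decodes to 好).
# to_utf8 IN OUT: hypothetical helper wrapping the standard iconv tool.
to_utf8() {
  iconv -f BIG5 -t UTF-8 "$1" > "$2"
}

# Convert train.lecture only if it exists in the current folder.
if [ -f train.lecture ]; then
  to_utf8 train.lecture train.lecture.utf8
fi
```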

Language model training (2/2)


We have also prepared data for a second language model

Use the news corpus to train a language model

Copy the corpora and lexicons to your folder:

cp /share/corpus/train.* .

cp /share/corpus/lexicon.* .

/share/tool/ngram-count

-order 2 (you can set it anywhere from 1 to 3!)

-kndiscount (modified Kneser-Ney discounting)

-text train.lecture (training data; also try train.news!)

-vocab lexicon.lecture (lexicon; also try lexicon.news!)

-lm languagemodel (output language model file name)
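The options above go on a single ngram-count command line. A minimal sketch that sweeps both corpora and orders 1 to 3, assuming the train.* and lexicon.* files were copied into the current folder as shown; the helper `train_lm` and output names such as lm.news.o3 are hypothetical. When /share/tool/ngram-count is not executable, the script only prints the commands it would run.

```bash
#!/usr/bin/env bash
# Path to ngram-count as given on the slide (override with NGRAM=...).
NGRAM=${NGRAM:-/share/tool/ngram-count}

# Fall back to a dry run (print commands only) if the tool is not executable.
DRY_RUN=0
if [ ! -x "$NGRAM" ]; then
  DRY_RUN=1
fi

# train_lm CORPUS ORDER: CORPUS is "lecture" or "news"; output name is hypothetical.
train_lm() {
  lm_corpus=$1
  lm_order=$2
  set -- "$NGRAM" -order "$lm_order" -kndiscount \
    -text "train.$lm_corpus" -vocab "lexicon.$lm_corpus" \
    -lm "lm.$lm_corpus.o$lm_order"
  if [ "$DRY_RUN" -eq 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

for corpus in lecture news; do
  for order in 1 2 3; do
    train_lm "$corpus" "$order"
  done
done
```

Each resulting LM can then be set in hdecode.lecture.sh or hdecode.news.sh to compare recognition results across n-gram orders and corpora.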

Simple Recognition System


Open the Cygwin Terminal in Windows

Edit hdecode.lecture.sh or hdecode.news.sh

Change the language model to your own

Run “bash hdecode.lecture.sh” or “bash hdecode.news.sh”

Wait until “Ready…” appears in the terminal

Press “Enter” and say something

Press “Enter” again and wait for the result

Type “exit” if you want to leave

Some hints


If you have any problems training the LM:

Scripts are here: /share/scripts/