ppt

advertisement

Module 10: Virtual Memory

•

•

•

•

•

•

•

•

•

Background

Demand Paging

Performance of Demand Paging

Page Replacement

Page-Replacement Algorithms

Allocation of Frames

Thrashing

Other Considerations

Demand Segmenation

Applied Operating System Concepts

10.1

Silberschatz, Galvin, and Gagne 1999

Background

•

Virtual memory – separation of

user logical memory

from physical memory.

– Only part of the program needs to be in memory for

execution.

– Logical address space can therefore be much larger than

physical address space.

– Need to allow pages to be swapped in and out.

•

Virtual memory can be implemented via:

– Demand paging

– Demand segmentation

Applied Operating System Concepts

10.2

Silberschatz, Galvin, and Gagne 1999

Demand Paging

•

•

•

Bring a page into memory only when it is needed.

– Less I/O needed

– Less memory needed

– Faster response

– More users

Copy a page back to disk only when it is needed.

– Dirty (modified) bit

– Less I/O needed

– Lazy write

Page is needed reference to it

– invalid reference abort

– not-in-memory bring to memory

Applied Operating System Concepts

10.3

Silberschatz, Galvin, and Gagne 1999

Consistency Problem

-- Whenever data is replicated --

Invalid

Dirty

A

1. read

A

A

•

•

•

•

--- 최신정보

Applied Operating System Concepts

A

2. modified

2. modified

A

1. read

10.4

Strong / Weak consistency

Write through / Write back

Pull / Push

Overhead vs QoS

Silberschatz, Galvin, and Gagne 1999

Valid-Invalid Bit

•

“Invalid” means both:

– not-in-memory: this page has never been loaded from disk before

– not legal: it is from disk, but-disk copy (original) has been updated

eg: KAL reservation system:

one global disk (본사) – N computers / 영업점

•

Initially all entries are set to invalid

•

During address translation, if invalid bit is set “page fault”.

Applied Operating System Concepts

10.5

Silberschatz, Galvin, and Gagne 1999

Page Fault

•

•

•

•

•

Access to invalid page causes HW (MMU) trap – page fault trap

Trap handler is within OS address space page fault handler is invoked

OS

1.

2.

3.

4.

handles the page fault as follows:

Invalid reference? eg bad address, protection violation …? abort process.

Just not in memory? Then continue….

Get an empty page frame. (없으면 뺏어 올 것 - replace)

Read the page into frame from disk.

1. 이 disk I/O 가 끝나기 까지 이 프로세스는 CPU를 preempt 당함 (wait)

2. Disk read 가 끝나면 page tables entry 기록, validation bit = “valid”.

3. 다시 CPU ready queue에 process 를 insert – dispatch later

5. 이 프로세스가 CPU 에 다시 run – 위의 page fault trap이 이제야 끝난 것임

6. 아까 중단됬던 instruction을 다시 시작

Pure paging

– Never swap-in until referenced – start program with no page in memory

Locality of reference

– 실제 대부분의 프로그램에서 관찰되는 현상으로써

– 일정 시간에는 극히 일부 page 만 집중적으로 reference 하는 program behavior

– Paging system 이 성능이 좋게 되는 근거임

Applied Operating System Concepts

10.6

Silberschatz, Galvin, and Gagne 1999

실제 HW design의 어려움

When does Page Fault occur?

1.

2.

on instruction fetch

on operand fetch

– restart 후 다시해야 (instruction fetch, decode, operand-fetch)

3. 최악의 경우 한 기계어가 “여러 location을 변화”시키는 도중에 발생

– 예: “block copy” instruction:

copy count from_address

<op-code> <byte수>

source

to_address

destination

– 중간에서 page fault 가 나면?

Undo 시켜야 함 (block copy 자체를)

그러려면 관련된 값들을 관리하는 HW가 추가로 필요

Applied Operating System Concepts

10.7

Silberschatz, Galvin, and Gagne 1999

What happens if there is no free frame?

• Page replacement – 빼앗아올

(replace) page 를 결정해야

– Will not be used soon?

– Swap it out if necessary (if modified).

– Algorithm for choosing target page for replacement

– Goal – minimum number of page faults.

• Same page may be brought into memory several times during

run.

Applied Operating System Concepts

10.8

Silberschatz, Galvin, and Gagne 1999

Performance of Demand Paging

•

•

Page Fault Rate 0 p 1.0

– if p = 0 no page faults

– if p = 1, every reference is a fault

Effective Access Time (EAT)

EAT = (1 – p) x memory access

+ p ( [OS & HW page fault overhead]

+ [swap page out if needed ]

+ [swap page in]

+ [OS & HW restart overhead] )

Applied Operating System Concepts

10.9

Silberschatz, Galvin, and Gagne 1999

Page-Replacement Algorithms

• Want lowest page-fault rate.

• Evaluate algorithm by running it on a particular string of

memory references (reference string) and computing the

number of page faults on that string.

• In all our examples, the reference string is

1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5.

Applied Operating System Concepts

10.10

Silberschatz, Galvin, and Gagne 1999

First-In-First-Out (FIFO) Algorithm

•

•

Reference string:

1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5

3 frames (3 pages can be in memory at a time per process)

1

•

•

4 frames

1

4

5

2

2

1

3

3

3

2

4

(1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5)

9 page faults

More page faults?

1

1

5

4

2

2

1

5

3

3

2

4

4

3

10 page faults

(1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5)

FIFO Replacement – Belady’s Anomaly

– more frames less page faults

Applied Operating System Concepts

10.11

Silberschatz, Galvin, and Gagne 1999

Optimal Algorithm

•

•

Replace page that will not be used for longest period of time.

4 frames example

1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5

1

2

6 page faults

3

4

•

•

How do you know this?

Used for measuring how well your algorithm performs. (비교기준용)

Applied Operating System Concepts

10.12

Silberschatz, Galvin, and Gagne 1999

Least Recently Used (LRU) Algorithm

•

Reference string: 1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5

1

2

3

4

•

문제는 이것을 어떻게 implement 하느냐이다.

– 있는 그대로 구현하려면, 매 memory reference 마다

– (페이지 마다 시간을 기록해야하고) extra memory (page table) traffic

– (모든 페이지중 최소치 시간을 찾아내야 한다)

– 즉 space, time 이 너무 많이 들어감 이렇게는 불가능함

– 여러 종류의 유사 implementation 방법들도 나옴

Applied Operating System Concepts

10.13

Silberschatz, Galvin, and Gagne 1999

LRU Algorithm (구현방법들)

•

•

Counter implementation

– Every page entry has a counter;

– CPU counter is incremented at every memory reference (logical clock)

–

CPU counter = 총 memory reference 수

– When page A is accessed, copy the CPU clock into page A’s counter.

– At replacement, search page table for minimum counter

Extra memory access (counter write time) – every memory access 마다

Search (time) overhead – replacement 시

Counter (space) overhead, ….

Stack implementation – keep a stack of page numbers in a double link form:

– Page A referenced:

move page A to the top

requires 6 pointers to be changed

– No search for replacement

참조됨

Applied Operating System Concepts

10.14

Silberschatz, Galvin, and Gagne 1999

LRU Approximation Algorithms

•

•

Reference bit

– Initially -= 0

– 1 means page has been referenced

– Replace the one which is 0 (if one exists).

– We do not know the order, however.

Additional reference bit

– 8 bits for reference bit.

– 주기적으로 OS 가 이 8 비트를 shift (to high order)

– 예: 00000000 – 한번도 안사용됨

–

10101010 – 홀수 주기마다 사용됨

.

Applied Operating System Concepts

10.15

Silberschatz, Galvin, and Gagne 1999

(계 속)

•

•

Second chance (clock) algorithm

– Ref bit = 0 : never referenced

–

= 1 : yes, referenced

– Circular queue of pages (이 프로세스에 속한)

– Advance pointer until it finds reference bit 0 (never referenced)

– 포인터 이동하는 중에 reference bit 1 은 모두 0 으로 바꿈

–

(그림 10.13 중간 비트들 참조)

– 한바퀴 되돌아와서도 (second chance) 0이면 그때에는 replace 당함

– 자주 사용되는 페이지라면 second chance 가 올때 1

– 최악의 경우 모든 bit 이 1 이면 FIFO 가 됨

Enhanced Second chance

빈번사용?

I/O 유발?

– Not-Referenced, not-modified

– Referenced,

modified

Applied Operating System Concepts

10.16

-

첫번째로 replace

가장 나중 replace

Silberschatz, Galvin, and Gagne 1999

Counting Algorithms

•

•

LFU Algorithm:

– replaces page with smallest count.

MFU Algorithm:

– based on the argument that the page with the smallest

count was probably just brought in and has yet to be used.

Applied Operating System Concepts

10.17

Silberschatz, Galvin, and Gagne 1999

Allocation of Frames

•

LRU 복습

– Page fault 발생시

choose victim? (they assumed fixed allocation, not variable allocation)

Why not consider allocating one more page frame at page fault?

•

•

Allocation Problem – 매번 fault 시 마다 allocation size 다시 결정

•

참고: Each process needs minimum number of pages.

– HW 측면: IBM 370 – 6 pages to handle SS MOVE instruction:

instruction is 6 bytes, might span 2 pages.

2 pages to handle from.

2 pages to handle to.

– SW 측면:

Loop 내의 page 는 한꺼번에 allocate 되는 것이 유리함

그렇지 않으면 매 loop 마다 page fault – CPU/disk load 심한 불균형?

Two major allocation schemes.

– fixed allocation

– priority allocation

Applied Operating System Concepts

10.18

Silberschatz, Galvin, and Gagne 1999

Fixed Allocation

•

•

Equal allocation – e.g., (100 frames, 5 processes) 20 pages each.

Proportional allocation – Allocate according to the size of process.

si size of process pi

S si

m total number of frames

ai allocation for pi

si

m

S

m 64

si 10

s2 127

10

64 5

137

127

a2

64 59

137

a1

Applied Operating System Concepts

10.19

Silberschatz, Galvin, and Gagne 1999

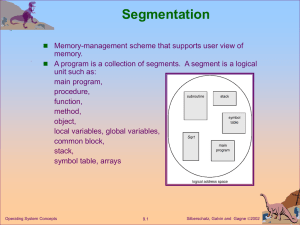

Priority Allocation

•

•

Use a proportional allocation scheme using priorities rather than size.

High priority process – give more memory – finish early

Global vs. Local Replacement

•

Global replacement – process selects a replacement frame from

the set of all frames; one process can take a frame from another.

•

Local replacement – each process selects from only its own set

of allocated frames.

Applied Operating System Concepts

10.20

Silberschatz, Galvin, and Gagne 1999

Thrashing

•

•

If a process does not have “enough” pages,

•

Thrashing a process is busy swapping pages in and out.

the page-fault rate is very high. This leads to:

– low CPU utilization.

– operating system thinks that it needs to increase the degree

of multiprogramming.

– another process added to the system (higher MPD).

CPU is idle most of the time – low throughput

A

main()

main()

{ for (I=1, 100000) { A = B + X }}

X

B

Applied Operating System Concepts

10.21

Silberschatz, Galvin, and Gagne 1999

Thrashing Diagram

•

•

Why does paging work?

Locality model

– Process migrates from one locality to another.

– Localities may overlap.

Why does thrashing occur?

( size of locality) > (total allocated memory size)

Applied Operating System Concepts

10.22

Silberschatz, Galvin, and Gagne 1999

Working-Set Model

•

working-set window a fixed number of page references

Example: 10,000 instruction

•

WS(ti ) = { pages referenced in [ ti, , ti - ] }

– if too small will not encompass entire locality.

– if too large will encompass several localities.

– if = will encompass entire program.

123123123248024802480248033666666336666666336666633666

•

•

•

•

•

WSSi = Working set size for process Pi

D = WSSi total demand frames

if D > m Thrashing (m is total number of available frames)

Policy if D > m, then suspend one of the processes.

Working set model - If a process is not allocated memory greater than its WSS

rather choose to be suspended MPD 를 결정 함

즉 (WSS 전체) or (suspended) 중 택일

Applied Operating System Concepts

10.23

Silberschatz, Galvin, and Gagne 1999

Working-Set Model

•

•

WS(ti ) = { pages referenced in [ ti, , ti - ] }

만일 페이지 P 가 ti에 WS(ti )에 속하였으면 keep in memory

안 속하였으면 out of memory

•

•

•

•

이 원칙에 따라 replace, allocate 를 결정

•

“시간” 개념

– Process (virtual) time – 이 프로세스가 run 할 동안만 운행

– Real time (wall-clock time)

따라서 working set model은 allocate/replace 를 같이 결정함

시시로 allocation size 가 달라질 수 있음

WS(ti ) 가 모두 보장되어야만 run, 아니면 suspend.

Applied Operating System Concepts

10.24

Silberschatz, Galvin, and Gagne 1999

Keeping Track of the Working Set

•

구현: 매 ref 마다 각 page 들의 최근 reference time 을 와 비교?

too expensive (space for ref-time field,

•

•

•

Example:

“1”

1.ref

Approximate with interval timer + a reference bit

–

–

–

–

•

•

•

time for 비교)

clk

= 10K,

“0”

3. reset

Timer interrupts: every 5K time units.

2. copy

Extra 2 bits for each page.

5K ref 마다 timer interrupt : (2 bit를 LEFT shift) (Right bit ZERO) ,

Reference 되면 가장 right-most bit 를 1로 set

(If one of the bits = 1) page belongs to the working set ( = 10K) .

Why is this not completely accurate?

Improvement = 10 bits and interrupt every 1000 time units.

Q: How do you decide window size?

Applied Operating System Concepts

10.25

Silberschatz, Galvin, and Gagne 1999

Page-Fault Frequency Scheme

•

Establish “acceptable” page-fault rate.

– If actual rate too low, process loses frame.

– If actual rate too high, process gains frame.

Applied Operating System Concepts

10.26

(or dec )

(or inc )

Silberschatz, Galvin, and Gagne 1999

Page Management Policy Issues

• Fetch policy

– Prefetch

– On-demand

• Replacement policy

– Recency based

– Frequency based

– …

• Allocation policy

– Equal vs unequal

– Fixed vs dynamic

Applied Operating System Concepts

10.27

Silberschatz, Galvin, and Gagne 1999

Other Considerations

•

•

•

Prepaging (prefetching, lookahead, ….)

10.7.2. Page size selection

– Internal fragmentation vs table fragmentation (table size)

– Disk transfer efficiency – seek/rotation time vs. transfer time

– I/O 횟수

– Improved Locality

Smaller page size isolate only needed info within page

Trend

– Larger page size – speed gap 이 커질수록

–

DRAM: 7%/yr, Disk: 1800 5400 rpm/50yr

– (CPU speed, memory capacity) – improves faster than disk speed.

– Page fault (relative) penalty is becoming more costly these days.

Applied Operating System Concepts

10.28

Silberschatz, Galvin, and Gagne 1999

Other Consideration (Cont.)

•

•

10.7.4. Program structure

– Locality of reference: reference 를 국지에 국한

– Data structure, program structure 가 영향을 준다

– 프로그램 언어

C ----- pointers ------- random access (non-local variable)

Java --no pointers -- locality

– Compiler, loader 도 영향을 준다

– program restructuring – 자주 쓰는 프로그램

I/O interlock

– 시나리오

–

–

–

– Solution

–

–

Pold requests disk I/O to page A, wait for I/O

CPU is given to Pnew,

Pnew generates page fault, replaces page A

disk for Pold is ready, DMA begins transfer to page A for Pold .

1. No I/O with user space page – only to kernel buffer

then to user space (yes, extra copy)

2. Lock the page reserved for disk I/O.

Applied Operating System Concepts

10.29

Silberschatz, Galvin, and Gagne 1999