Parallel Programming Models

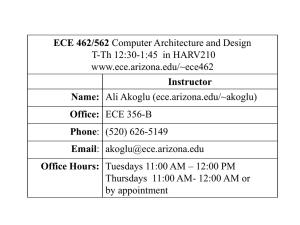

Lecture 4: Parallel Programming Models

Parallel Programming Models:

• Data parallelism / Task parallelism
• Explicit parallelism / Implicit parallelism
• Shared memory / Distributed memory
• Other programming paradigms
  • Object-oriented
  • Functional and logic

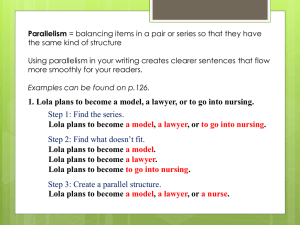

Data Parallelism

• Parallel programs that emphasize concurrent execution of the same task on different data elements (data-parallel programs).
• Most programs for scalable parallel computers are data parallel in nature.

Task Parallelism

• Parallel programs that emphasize the concurrent execution of different tasks on the same or different data.
• Used for modularity reasons.
• Parallel programs structured as a task-parallel composition of data-parallel components are common.

(Figure: data parallelism vs. task parallelism)

Explicit Parallelism

• The programmer directly specifies the activities of the multiple concurrent “threads of control” that form a parallel computation.
• Gives the programmer more control over program behavior, and hence can be used to achieve higher performance.

Implicit Parallelism

• The programmer provides a high-level specification of program behavior.
• It is then the responsibility of the compiler or library to implement this parallelism efficiently and correctly.

Shared Memory

• The programmer’s task is to specify the activities of a set of processes that communicate by reading and writing shared memory.
• Advantage: the programmer need not be concerned with data-distribution issues.
• Disadvantage: efficient implementations may be difficult on computers that lack hardware support for shared memory, and race conditions tend to arise more easily.

Distributed Memory

• Processes have only local memory and must use some other mechanism (e.g., message passing or remote procedure call) to exchange information.
• Advantage: programmers have explicit control over data distribution and communication.

Shared vs Distributed Memory

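(Figure: in the shared-memory organization, processors P are connected by a bus to a single memory; in the distributed-memory organization, each processor P has its own memory M, and the processor/memory pairs are connected by a network.)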

Parallel Programming Tools:

• Parallel Virtual Machine (PVM): distributed memory, explicit parallelism
• Message-Passing Interface (MPI): distributed memory, explicit parallelism
• PThreads: shared memory, explicit parallelism
• OpenMP: shared memory, explicit parallelism
• High-Performance Fortran (HPF): implicit parallelism
• Parallelizing compilers: implicit parallelism

Message Passing Model

• Used on distributed memory MIMD architectures
• Multiple processes execute in parallel asynchronously
• Process creation may be static or dynamic
• Processes communicate by using send and receive primitives

• Blocking send: waits until all data is received
• Non-blocking send: continues execution after placing the data in the buffer
• Blocking receive: if data is not ready, waits until it arrives
• Non-blocking receive: reserves a buffer and continues execution; in a later wait operation, if the data is ready, copies it into memory

• Synchronous message passing: sender and receiver processes are synchronized
  • Blocking send / blocking receive
• Asynchronous message passing: no synchronization between sender and receiver processes
  • Large buffers are required. Because buffer size is finite, the sender may eventually block.

Advantages of the message-passing model

• Programs are highly portable
• Provides the programmer with explicit control over the location of data in memory

Disadvantage of the message-passing model

• The programmer must pay attention to details such as the placement of memory and the ordering of communication.

Factors that influence the performance of the message-passing model

• Bandwidth
• Latency
• Ability to overlap communication with computation
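A common first-order way to reason about these factors (an assumed model, not one given in the lecture) charges each message of $m$ bytes a fixed startup latency $t_s$ plus a transfer time set by the bandwidth $B$:

$$T_{\text{comm}}(m) = t_s + \frac{m}{B}$$

With hypothetical values $t_s = 10\,\mu\text{s}$ and $B = 1\ \text{GB/s}$, an 8-byte message costs $10\,\mu\text{s} + 0.008\,\mu\text{s} \approx 10\,\mu\text{s}$ (latency-dominated), while a $10^6$-byte message costs $10\,\mu\text{s} + 1000\,\mu\text{s} = 1.01\ \text{ms}$ (bandwidth-dominated). When communication can be overlapped with computation, the time for one step falls from the sum of the two to roughly the larger of them:

$$T_{\text{step}} \approx \max(T_{\text{comp}}, T_{\text{comm}}) \quad \text{instead of} \quad T_{\text{comp}} + T_{\text{comm}}$$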

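Example: Pi calculation

$$\pi = \int_0^1 f(x)\,dx = \int_0^1 \frac{4}{1+x^2}\,dx \approx w \sum_{i=1}^{n} f(x_i)$$

where $f(x) = \frac{4}{1+x^2}$, $n = 10$, $w = 1/n$, and $x_i = w(i - 0.5)$ is the midpoint of the $i$-th interval.

(Figure: f(x) plotted over [0, 1], split into intervals of width w = 0.1 with midpoints x_i)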

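Sequential Code

```c
#include <stdio.h>

/* Integrand f(x) = 4 / (1 + x^2); x is parenthesized so that
   expressions such as f(a + b) expand correctly. */
#define f(x) (4.0 / (1.0 + (x) * (x)))

int main(void)
{
    int n, i;
    double w, x, sum, pi;

    printf("n?\n");
    if (scanf("%d", &n) != 1 || n <= 0)
        return 1;                /* reject invalid input */
    w = 1.0 / n;                 /* width of each interval */
    sum = 0.0;
    for (i = 1; i <= n; i++) {
        x = w * (i - 0.5);       /* midpoint of interval i */
        sum += f(x);
    }
    pi = w * sum;
    printf("%f\n", pi);
    return 0;
}
```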

Parallel PVM program

(Figure: a Master process and workers W0, W1, W2 and W3)

Master:
• Creates workers
• Sends initial values to workers
• Receives local “sum”s from workers
• Calculates and prints “pi”

Workers:
• Receive initial values from master
• Calculate local “sum”s
• Send local “sum”s to Master

Parallel Virtual Machine (PVM)

Data Distribution

(Figure: two plots of f(x) over [0, 1] showing how the midpoints x_i are distributed among the workers)

SPMD Parallel PVM program

(Figure: a Master process and workers W0, W1, W2 and W3)

Master:
• Creates workers
• Sends initial values to workers
• Receives “pi” from W0 and prints it

Workers:
• Receive initial values from master
• Calculate local “sum”s
• Workers other than W0:
  • Send local “sum”s to W0
• W0:
  • Receives local “sum”s from other workers
  • Calculates “pi”
  • Sends “pi” to Master

Shared Memory Model

• Used on shared memory MIMD architectures
• A program consists of many independent threads
• Concurrently executing threads all share a single, common address space
• Threads can exchange information by reading and writing memory using normal variable assignment operations

Memory Coherence Problem

• Ensuring that the latest value of a variable updated in one thread is used when that same variable is accessed in another thread.

(Figure: Thread 1 and Thread 2 both accessing a shared variable X)

• Hardware support and compiler support are required
  • Cache-coherency protocol

Distributed Shared Memory (DSM) Systems

• Implement the shared memory model on distributed memory MIMD architectures
• Concurrently executing threads all share a single, common address space
• Threads can exchange information by reading and writing memory using normal variable assignment operations
• Use a message-passing layer as the means of communicating updated values throughout the system

Synchronization operations in the Shared Memory Model

• Monitors
• Locks
• Critical sections
• Condition variables
• Semaphores
• Barriers

PThreads

In the UNIX environment, a thread:

• Exists within a process and uses the process resources
• Has its own independent flow of control
• Duplicates only the essential resources it needs to be independently schedulable
• May share the process resources with other threads
• Dies if the parent process dies
• Is "lightweight" because most of the overhead has already been accomplished through the creation of its process

PThreads

Because threads within the same process share resources:

• Changes made by one thread to shared system resources will be seen by all other threads.
• Two pointers having the same value point to the same data.
• Reading and writing the same memory locations is possible, and therefore requires explicit synchronization by the programmer.