Slides

advertisement

Data-driven Kriging models

based on FANOVA decomposition

O. Roustant, Ecole des Mines de St-Etienne,

www.emse.fr/~roustant

joint work with

T. Muehlenstädt1, L. Carraro2 and S. Kuhnt1

1

University of Dortmund - 2 Telecom St-Etienne

15th February2011

1

1

Cliques of FANOVA graph

and block additive decomposition

f(x) = cos(x1+x2+x3)

+sin(x4+x5+x6)

+tan(x3+x4)

f(x) = f1,2,3(x1,x2,x3)

+f4,5,6(x4,x5,x6)

+f3,4(x3,x4)

Z(x)

= Z1,2,3(x1,x2,x3)

Cliques:

+ Z4,5,6

(x4,x5,x{3,4}

{1,2,3},

{4,5,6},

6)

+ Z3,4(x3,x4)

k(h) = k1,2,3(h1,h2,h3)

+ k4,5,6(h4,h5,h6)

2

+ k3,4(h3, h4)

This talk presents – with many pictures - the

main ideas of the corresponding paper: we refer

to it for details.

3

Introduction

Computer experiments

• A keyword associated to the analysis of timeconsuming computer codes

x1

x2

f

xd

5

y

Metamodeling and Kriging

• Metamodeling: construct a cheap-to-evaluate

model of the simulator (itself modeling the reality)

• Kriging: basically an interpolation method based on

Gaussian processes

6

Kriging model (definition)

Y(x) = b0 + b1g1(x) + … + bkgk(x) + Z(x)

linear trend (deterministic)

+ centered stationary Gaussian process (stochastic)

Some conditional

simulations

7

Kriging model (prediction)

Conditional mean

and 95% conf. int.

Some conditional

simulations

8

Kriging model (kernel)

• Kriging model is a kernel-based method

K(x,x’) = cov(Z(x), Z(x’))

FLEXIBLE (see after…)

• When Z is stationary, K(x,x’) depends on h=x-x’

we denote k(h) = K(x,x’)

9

Kriging model (kernel)

• “Making new kernels from old” (Rasmussen and Williams, 2006)

K1 + K2

cK, with c>0

K1K2

…

10

Kriging model (common choice)

• Tensor-product structure

k(h) = k1(h1)k2(h2)…kd(hd)

with hi=xi – xi’, and ki Gaussian, Matern 5/2…

11

The main idea on an example

• Ishigami, defined on D = [-π,π]3, with A=7, B=0.1:

f(x) = sin(x1) + Asin2(x2) + B(x3)4sin(x1)

• This is a block additive decomposition

f(x) = f2(x2) + f1,3(x1,x3)

Z(x) = Z2(x2) + Z1,3(x1,x3) k(h) = k2(h2) + k1,3(h1,h3)

12

The main idea on an example

• Comparison of the two Kriging models

– Training set: 100 points from a maximin Latin hypercube

– Test set: 1000 additional points from a unif. distribution

13

The schema to be generalized

f

k = k2 + k1,3

14

Outline

Introduction

[How to choose a Kriging model for the

Ishigami function]

1. From FANOVA graphs to block additive kernels

[Generalizes 1.]

2. Estimation methodologies

[With a new sensitivity index]

3. Applications

4. Some comments

15

From FANOVA graphs

to block additive kernels

FANOVA decomposition

(Efron and Stein, 1981)

• Assume that X1, …, Xd are independent random

variables. Let f be a function defined on D1x…xDd

and dν=dν1…dvd an integration measure. Then:

f(X) = μ0 + Σμi(Xi) + Σμi,j(Xi,Xj) + Σμi,j,k(Xi,Xj,Xk) + …

where all terms are centered and orthogonal to the

others. They are given by:

μ0

:= E(f(X)),

μi(Xi) := E(f(X)|Xi) – μ0

μi,j(Xi,Xj) := E(f(X)|Xi,Xj17

) - μi(Xi) - μj(Xj) – μ0

and so on…

FANOVA decomposition

Example. Ishigami function, with uniform measure

on D = [-π,π ]3. With a=π, b=2π5/5, we have:

f(x) = sin(x1) + Asin2(x2) + B(x3)4sin(x1)

= aA + sin(x1)(1+bB) + A(sin2(x2)-a) + B[(x3)4-b]sin(x1)

μ0

μ1(x1)

μ2(x2)

μ1,3(x1,x3)

(main effect)

(main effect)

(2nd order interaction)

18

FANOVA decomposition

Example (following)

• Some terms can vanish, due to averaging, as μ3 ,

or μ1 if B = -1/b, but this depends on the integration

measure, and only happens when there exist terms

of higher order

• On the other hand, and for the same reason, we

always have:

μ2,1 = μ2,3 = 0 and, under mild conditions: μ1,3 ≠ 0

19

FANOVA decomposition

• The name “FANOVA” becomes from the relation

on variances implied by orthogonality:

var(f(X)) = Σvar(μi(Xi)) + Σvar(μi,j(Xi,j)) + …

which measures the importance of each term.

• var(μJ(XJ))/var(f(X)) is often called a Sobol indice

20

FANOVA graph

Vertices: variables

Edges: if there is at least one

interaction (at any order)

Width: prop. to variances

Here (example above):

μ1,2 = μ2,3 = 0

The graph does not depend

on the integration measure,

(under mild conditions)

21

FANOVA graph and cliques

• A complete subgraph: all edges exist

• A clique: maximal complete subgraph

Cliques:

{1,3} and {2}

22

Cliques of FANOVA graph

and block additive decomposition

f(x) = cos(x1+x2+x3)

+sin(x4+x5+x6)

+tan(x3+x4)

f(x) = f1,2,3(x1,x2,x3)

+f4,5,6(x4,x5,x6)

+f3,4(x3,x4)

Cliques:

{1,2,3}, {4,5,6}, {3,4}

23

Why cliques?

f(x) = cos(x1+x2+x3)

+sin(x4+x5+x6)

+tan(x3+x4)

{1,2,3,4,5,6}

f(x) = f1,…,6(x1,…,x6) !!!

24

Incomplete subgraphs

rough model forms

Why cliques?

f(x) = cos(x1+x2+x3)

+sin(x4+x5+x6)

+tan(x3+x4)

{1,2},{2,3},{1,3},{3,4}{4,

5,6}

f(x) = f1,2(x1,x2)

+f2,3(x2,x3)+f1,3(x1,x3)

+f4,5,6(x4,x5,x6)

+f3,4(x3,x4)

25

Non maximality

wrong model forms

Cliques of FANOVA graph

and Kriging models

Cliques:

{1,2,3}, {4,5,6}, {3,4}

f(x) = f1,2,3(x1,x2,x3)

+f4,5,6(x4,x5,x6)

+f3,4(x3,x4)

Z(x) = Z1,2,3(x1,x2,x3)

+ Z4,5,6(x4,x5,x6)

+ Z3,4(x3,x4)

k(h) = k1,2,3(h1,h2,h3)

+ k4,5,6(h4,h5,h6)

26

+ k3,4(h3, h4)

Estimation methodologies

Graph estimation

• Challenge: estimate all interactions (at any order)

involving two variables

• Two issues:

1. The computer code is time-consuming

2. Huge number of combinations for the usual Sobol

indices

28

Graph estimation

• Solutions

1. Replace the computer code by a metamodel, for

instance a Kriging model with a standard kernel

2. Fix x3, …, xd, and consider the 2nd order interaction of

the 2-dimensional function:

(x1, x2) f(x) = f1(x-2) + f2(x-1) + f12(x1,x2; x-{1,2})

Denote D12(x3,…,xd) the unnormalized Sobol indice, and

define:

D12 = E(D12(x3,…,xd))

Then D12 > 0 iif (1,2) is an edge of the FANOVA graph

29

Graph estimation

• Comments:

– The new sensitivity index is computed by averaging 2nd

order Sobol indices, and thus numerically tractable

– In practice “D12 > 0” is replaced by “D12 > δ”

Different thresholds give different FANOVA graphs

30

Kriging model estimation

• Assume that there are L cliques of size d1,…,dL.

The total number of parameters to be estimated is:

ntrend + (d1+1) + … + (dL + 1)

(trend, “ranges” and variance parameters)

• MLE is used, 3 numerical procedures tested

31

Kriging model estimation

• Isotropic kernels are useful for high dimensions

• Example: suppose that C1={1,2,3}, C2={4,5,6},

C3={3,4}, and x7, …, x16 have a smaller influence

1st solution: C4={x7}, …, C13={x16}

N = ntrend + 4 + 4 + 3 + 10*2 = ntrend + 31

2nd solution: C4 = {x7, …, x16}, with an isotropic kernel

N = ntrend + 4 + 4 + 3 + 2 = ntrend + 13

32

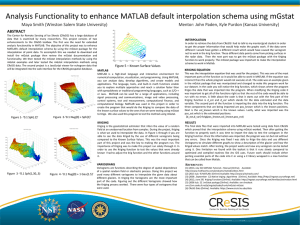

Applications

a 6D analytical case

• f(x) = cos(- 0.8 - 1.1x1 + 1.1x2 + x3)

+ sin(- 0.5 + 0.9x4 + x5 – 1.1x6)

+ (0.5 + 0.35x3 - 0.6x4)2

• Domain: [-1,1]6

• Integration measure: uniform

• Training set: 100 points from a maximin LHD

• Test set: 1000 points drawn from a unif. dist.

34

a 6D analytical case

Estimated graph

Usual Sensitivity Analysis

(from R package sensitivity)

35

a 6D analytical case

36

a 16D analytical case

• Consider the same function, but assume that it is

in a 16D space (with 10 more inactive variables)

• Including all the inactive variables in one clique

is improving the prediction

37

A 6D case study

• Piston slap data set (Fang et al., 2006)

– Unwanted noise of engine, simulated using a finite

elements method

• Training set: 100 points

• Test set: 12 points

• Leave-one-out is also considered

38

A 6D case study

39

A 6D case study

Leave-one-out RMSE: 0.0864 (standard Kriging), 0.0371 (modified Kriging)

40

Some comments

Some comments

• Main strengths

– Adapting the kernel to the data in a flexible manner

– A substantial improvement may be expected in prediction

• Depending on the function complexity

• Some drawbacks

– Dependence on the first initial metamodel

– Sometimes a large nb of parameters to be estimated

• May decrease the prediction power

42

THANKS A LOT FOR

ATTENDING!

43