2 KNN Algorithm—CF


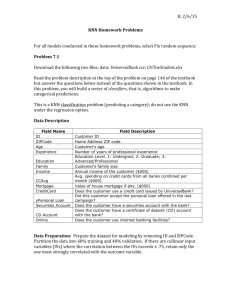

The Summary of My Work in Graduate Grade One

Reporter: Yuanshuai Sun

E-mail: sunyuan_2008@yahoo.cn

Content

1. Recommender System
2. KNN Algorithm—CF
3. Matrix Factorization
4. MF on Hadoop
5. Thesis Framework

1 Recommender System

A recommender system recommends items that you may be interested in but have not tried yet. For example, if you have bought a book about machine learning, the system would give you a recommendation list that includes books about data mining, pattern recognition, and even programming.

But how does the system get the recommendation list?

Purchased book: Machine Learning. Recommended list:

1. Nuclear Pattern Recognition Method and Its Application
2. Introduction to Robotics
3. Data Mining
4. Beauty of Programming
5. Artificial Intelligence

There are many ways to get the list. Recommender systems are usually classified into the following categories, based on how recommendations are made:

1. Content-based recommendations: the user is recommended items similar to the ones the user preferred in the past.
2. Collaborative recommendations: the user is recommended items that people with similar tastes and preferences liked in the past.
3. Hybrid approaches: these methods combine collaborative and content-based methods, which helps to avoid certain limitations of each.

[Figure: collaborative recommendation. The most similar user (top 1) is found through co-rated items; an item that this user likes but the target user has not bought is recommended to the target user.]

The ways to combine collaborative and content-based methods into a hybrid recommender system can be classified as follows:

1. implementing collaborative and content-based methods separately and combining their predictions,
2. incorporating some content-based characteristics into a collaborative approach,
3. incorporating some collaborative characteristics into a content-based approach,
4. constructing a general unifying model that incorporates both content-based and collaborative characteristics.

2 KNN Algorithm—CF

KDD CUP 2011 website: http://kddcup.yahoo.com/index.php

The task is recommending music items based on the Yahoo! Music Dataset. The dataset is split into two subsets:

- Train data: in the file trainIdx2.txt
- Test data: in the file testIdx2.txt

In each subset, user rating data is grouped by user. The first line for a user is formatted as:

<UserId>|<#UserRatings>\n

Each of the next <#UserRatings> lines describes a single rating by <UserId>. Rating line format:

<ItemId>\t<Score>\n

The scores are integers between 0 and 100, and are withheld from the test set. All user IDs and item IDs are consecutive integers, both starting at zero.

KNN is the algorithm I used when I took part in KDD CUP 2011 with my advisor, Mrs. Lin. KNN is a collaborative recommendation method.

[Figure: the most similar user (top 1) is found through co-rated items; that user's favorite song that the target user has not seen is recommended to the target user.]

The ratings form a user-item matrix in which "?" marks a missing rating:

| user \ item | 1 | 2 | 3 | 4 |
|---|---|---|---|---|
| 1 | $r_{11}$ | ? | $r_{13}$ | $r_{14}$ |
| 2 | $r_{21}$ | $r_{22}$ | ? | $r_{24}$ |
| 3 | $r_{31}$ | $r_{32}$ | $r_{33}$ | ? |

Each user is therefore a vector: user1 $= (r_{11}, ?, r_{13}, r_{14})$, user2 $= (r_{21}, r_{22}, ?, r_{24})$, user3 $= (r_{31}, r_{32}, r_{33}, ?)$.

The similarity between two users x and y is measured in two ways:

1. Cosine distance
2. Pearson correlation coefficient

In their standard forms,

$$\mathrm{sim}(x, y) = \frac{\sum_{s \in S_{xy}} r_{x,s}\, r_{y,s}}{\sqrt{\sum_{s \in S_{xy}} r_{x,s}^2}\,\sqrt{\sum_{s \in S_{xy}} r_{y,s}^2}} \quad \text{(cosine)}$$

$$\mathrm{sim}(x, y) = \frac{\sum_{s \in S_{xy}} (r_{x,s} - \bar r_x)(r_{y,s} - \bar r_y)}{\sqrt{\sum_{s \in S_{xy}} (r_{x,s} - \bar r_x)^2}\,\sqrt{\sum_{s \in S_{xy}} (r_{y,s} - \bar r_y)^2}} \quad \text{(Pearson)}$$

where $S_{xy}$ is the set of all items co-rated by both users x and y, and $\bar r_x$ is the mean rating of user x.

Two modified versions are used, both defined over a split of the co-rated set into $S^1_{xy}$ and $S^2_{xy}$:

1. Cosine distance with a correction term UC. [Equation not recoverable. Surviving fragments: sums of $(r_{x,s} - \bar r_x)(r_{y,s} - \bar r_y)$ over $s \in S_{xy}$ and over $s \in S^1_{xy}$, and a factor of the form $(100 - r)(100 - r)$.]
2. Pearson correlation coefficient with a correction term UP. [Equation not recoverable. Surviving fragments: sums of $r_{x,s}\, r_{y,s}$ over $s \in S_{xy}$ and over $s \in S^1_{xy}$, and a term $10000\,|S^2_{xy}|$.]

The dataset also provides taxonomy files:

- trackData.txt: track information, formatted as <TrackId>|<AlbumId>|<ArtistId>|<Optional GenreId_1>|...|<Optional GenreId_k>\n
- albumData.txt: album information, formatted as <AlbumId>|<ArtistId>|<Optional GenreId_1>|...|<Optional GenreId_k>\n
- artistData.txt: artist listing, formatted as <ArtistId>\n
- genreData.txt: genre listing, formatted as <GenreId>\n

[Figure: taxonomy graph whose nodes (a to m) are grouped into Track, Album, Artist and Genre.]

1. The distance between a parent node p and a child node c is

$$wt(c, p) = \left(\beta + (1 - \beta)\,\frac{\bar E}{E(p)}\right) \left(\frac{d(p) + 1}{d(p)}\right)^{\alpha} \big[IC(c) - IC(p)\big]\, T(c, p)$$

2. The similarity between $c_1$ and $c_2$ is computed from these distances [formula not recoverable], where IC is the information entropy ("comentropy").

3 Matrix Factorization

Example with three users (u1 to u3) and three items (i1 to i3), where "?" marks an unknown rating:

| | i1 | i2 | i3 |
|---|---|---|---|
| u1 | 1 | 3 | ? |
| u2 | 2 | ? | ? |
| u3 | ? | 1 | 3 |

With a user feature matrix $X = (x_{if})$ and an item feature matrix $Y = (y_{jf})$ of two features each, every rating is an inner product:

- x11*y11 + x12*y12 = 1
- x11*y21 + x12*y22 = 3
- x11*y31 + x12*y32 = ?
- x21*y11 + x22*y12 = 2
- x21*y21 + x22*y22 = ?
- x21*y31 + x22*y32 = ?
- x31*y11 + x32*y12 = ?
- x31*y21 + x32*y22 = 1
- x31*y31 + x32*y32 = 3

Matrix factorization (abbr. MF), just as the name suggests, decomposes a big matrix into a product of several small matrices. Mathematically, we assume a target matrix $R_{m \times n}$ and factor matrices $U_{m \times k}$ and $V_{n \times k}$, where $k \ll \min(m, n)$, so that

$$R \approx K(U, V^{T})$$

Kernel Function

The kernel function decides how to compute the prediction matrix $\tilde R$; that is, it is a function with the feature matrices U and V as its arguments. We can express it as follows:

$$\tilde r_{i,j} = a + c \cdot K(u_i, v_j)$$

For the kernel $K: \mathbb{R}^k \times \mathbb{R}^k \to \mathbb{R}$ one can use one of the following well-known kernels:

- linear: $K_l(u_i, v_j) = \langle u_i, v_j \rangle$
- polynomial: $K_p(u_i, v_j) = (1 + \langle u_i, v_j \rangle)^d$
- RBF: $K_r(u_i, v_j) = \exp\left(-\dfrac{\lVert u_i - v_j \rVert^2}{2\sigma^2}\right)$
- logistic: $K_s(u_i, v_j) = \phi_s(b_{i,j} + \langle u_i, v_j \rangle)$ with $\phi_s(x) := \dfrac{1}{1 + e^{-x}}$

We quantify the quality of the approximation $\tilde r_{i,j}$ with the Euclidean distance, so we get the objective function

$$\arg\min_{U,V} f = \sum_{(i,j) \in R} (r_{i,j} - \tilde r_{i,j})^2$$

where $\tilde r_{i,j} = u_i \cdot v_j = \sum_{k=1}^{K} u_{ik}\, v_{jk}$, i.e. $\tilde r_{i,j}$ is the predicted value. [A second, log-based objective shown on the slide is not recoverable.]

With regularization the objective becomes

$$\arg\min_{U,V} f = \sum_{(i,j) \in R} (r_{ij} - u_i \cdot v_j)^2 + \lambda_u \sum_i \lVert u_i \rVert^2 + \lambda_v \sum_j \lVert v_j \rVert^2$$

1. Alternating Descent Method

This method only works when the loss function is based on the Euclidean distance. Fixing V and setting the gradient with respect to $U_i$ to zero,

$$\frac{\partial f}{\partial U_i} = -2 \sum_j \big[(r_{ij} - U_i \cdot V_j)\, V_j\big] + 2 \lambda_u U_i = 0$$

so, treating $U_i$ and $V_j$ as column vectors and summing over the items j rated by user i, we get

$$U_i = \Big(\sum_j V_j V_j^{T} + \lambda_u I\Big)^{-1} \sum_j r_{ij}\, V_j$$

The same applies to $V_j$.

2. Gradient Descent Method

The update rule of U is defined as follows:

$$U_i' = U_i - \eta\, \frac{\partial f}{\partial U_i}, \quad \text{where} \quad \frac{\partial f}{\partial U_i} = -2 \sum_j \big[(r_{ij} - U_i \cdot V_j)\, V_j\big] + 2 \lambda_u U_i$$

and $\eta$ is the step size. The same applies to $V_j$.

[Figure: Stochastic Gradient Algorithm and Gradient Algorithm.]

Online Algorithm

Reference: "Online-Updating Regularized Kernel Matrix Factorization Models for Large-Scale Recommender Systems".

4 MF on Hadoop

Loss Function

$$\arg\min_{U,V} f = \sum_{(i,j) \in R} (r_{ij} - u_i \cdot v_j)^2$$

We update the factor V to reduce the objective function f with conventional gradient descent, as follows (the constant factor 2 of the gradient is absorbed into the step size $\eta_{ij}$):

$$V_{ij} \leftarrow V_{ij} + \eta_{ij} \big[(R^{T} U)_{ij} - (V U^{T} U)_{ij}\big]$$

Here we set $\eta_{ij} = \dfrac{V_{ij}}{(V U^{T} U)_{ij}}$, so the update becomes

$$V_{ij} \leftarrow V_{ij}\, \frac{(R^{T} U)_{ij}}{(V U^{T} U)_{ij}}$$

The same applies to the factor matrix U.

[Figure: A is split into row blocks $a_1, a_2, \dots, a_m$ and B into column blocks $b_1^{T}, b_2^{T}, \dots, b_n^{T}$; the product consists of the blocks R_1_1, R_1_2, …, R_m_n.]

[Figure: products of pieces of the left matrix and the right matrix are added up and combined into the result.]

[Figure: A is split into column blocks $a_1, a_2, \dots, a_s$ and B into row blocks $b_1^{T}, b_2^{T}, \dots, b_s^{T}$; the partial products are R_1_1, R_2_2, …, R_s_s.]

In general, with block matrices

$$A = \begin{pmatrix} A_{11} & \cdots & A_{1S} \\ \vdots & & \vdots \\ A_{M1} & \cdots & A_{MS} \end{pmatrix}, \quad B = \begin{pmatrix} B_{11} & \cdots & B_{1N} \\ \vdots & & \vdots \\ B_{S1} & \cdots & B_{SN} \end{pmatrix}, \quad AB = \begin{pmatrix} C_{11} & \cdots & C_{1N} \\ \vdots & & \vdots \\ C_{M1} & \cdots & C_{MN} \end{pmatrix}$$

where $C_{ij} = \sum_{k=1}^{S} A_{ik} B_{kj}$ $(i = 1, \dots, M;\ j = 1, \dots, N)$.

5 Thesis Framework

Recommendation System

1. Introduction to recommendation systems
2. My work on KNN
3. Matrix factorization in recommendation systems
4. Incremental MF updating using Hadoop
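
The user-grouped rating format described in section 2 (a `<UserId>|<#UserRatings>` header followed by that many `<ItemId>\t<Score>` lines) can be read with a few lines of Python. This is only a sketch: the function name `parse_ratings` and the sample IDs and scores are made up for illustration, and real files may carry extra fields that this reader simply ignores.

```python
from typing import Dict, Iterable


def parse_ratings(lines: Iterable[str]) -> Dict[int, Dict[int, int]]:
    """Return {user_id: {item_id: score}} from user-grouped rating lines."""
    ratings: Dict[int, Dict[int, int]] = {}
    it = iter(lines)
    for header in it:
        header = header.strip()
        if not header:
            continue  # tolerate blank lines between users
        user_str, count_str = header.split("|")
        user, count = int(user_str), int(count_str)
        user_ratings = ratings.setdefault(user, {})
        # The header says how many rating lines belong to this user.
        for _ in range(count):
            # Keep only the first two tab-separated fields (item, score).
            item_str, score_str = next(it).rstrip("\n").split("\t")[:2]
            user_ratings[int(item_str)] = int(score_str)
    return ratings


# Hypothetical sample: user 0 rated two items, user 1 rated one.
sample = ["0|2\n", "10\t90\n", "11\t0\n", "1|1\n", "10\t50\n"]
parsed = parse_ratings(sample)
```

To read a real file, pass an open file handle instead of the list, for example `parse_ratings(open("trainIdx2.txt"))`.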
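
The user-based KNN idea from section 2 (find the most similar user through co-rated items, then recommend that user's favorite item the target has not rated) can be sketched with the standard cosine and Pearson similarities. This is an illustration under assumptions, not the competition code: the function names, the toy ratings, and the choice to take each user's mean over all of that user's ratings are assumptions, and the UC/UP corrections from the slides are not included.

```python
import math
from typing import Dict, Hashable, Optional

Ratings = Dict[int, float]  # item id -> score


def cosine_sim(x: Ratings, y: Ratings) -> float:
    """Cosine similarity over the items rated by both users."""
    common = x.keys() & y.keys()
    num = sum(x[s] * y[s] for s in common)
    den = (math.sqrt(sum(x[s] ** 2 for s in common))
           * math.sqrt(sum(y[s] ** 2 for s in common)))
    return num / den if den else 0.0


def pearson_sim(x: Ratings, y: Ratings) -> float:
    """Pearson-style similarity over co-rated items (user means over all ratings)."""
    common = x.keys() & y.keys()
    if not common:
        return 0.0
    mean_x = sum(x.values()) / len(x)
    mean_y = sum(y.values()) / len(y)
    num = sum((x[s] - mean_x) * (y[s] - mean_y) for s in common)
    den = (math.sqrt(sum((x[s] - mean_x) ** 2 for s in common))
           * math.sqrt(sum((y[s] - mean_y) ** 2 for s in common)))
    return num / den if den else 0.0


def recommend_top1(target: Hashable, users: Dict[Hashable, Ratings],
                   sim=pearson_sim) -> Optional[int]:
    """Recommend the nearest neighbor's best-rated item unseen by the target."""
    others = {u: r for u, r in users.items() if u != target}
    if not others:
        return None
    nearest = max(others, key=lambda u: sim(users[target], others[u]))
    unseen = {s: v for s, v in others[nearest].items() if s not in users[target]}
    return max(unseen, key=unseen.get) if unseen else None


# Toy data: u2 agrees with u1 on items 1-3, u3 disagrees.
users = {
    "u1": {1: 90, 2: 80, 3: 10},
    "u2": {1: 85, 2: 70, 3: 20, 4: 95},
    "u3": {1: 10, 2: 20, 3: 90, 5: 80},
}
best = recommend_top1("u1", users)  # item 4, taken from u2
```

Using only the single nearest neighbor mirrors the "Top 1" figure; a fuller KNN would average over the k most similar users.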
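
The kernel-based prediction from section 3, a plus c times K(u_i, v_j), is easy to try out numerically. The sketch below implements the four kernels named there (linear, polynomial, RBF, logistic); the function names and the default values for d, sigma, b, a and c are assumptions chosen only for illustration.

```python
import math
from typing import Callable, Sequence

Vector = Sequence[float]


def dot(u: Vector, v: Vector) -> float:
    return sum(a * b for a, b in zip(u, v))


def k_linear(u: Vector, v: Vector) -> float:
    return dot(u, v)


def k_polynomial(u: Vector, v: Vector, d: int = 2) -> float:
    return (1 + dot(u, v)) ** d


def k_rbf(u: Vector, v: Vector, sigma: float = 1.0) -> float:
    sq_dist = sum((a - b) ** 2 for a, b in zip(u, v))
    return math.exp(-sq_dist / (2 * sigma ** 2))


def k_logistic(u: Vector, v: Vector, b: float = 0.0) -> float:
    # Logistic function applied to the bias plus the inner product.
    return 1.0 / (1.0 + math.exp(-(b + dot(u, v))))


def predict(u: Vector, v: Vector,
            kernel: Callable[[Vector, Vector], float] = k_linear,
            a: float = 0.0, c: float = 1.0) -> float:
    """Predicted rating: a + c * K(u, v)."""
    return a + c * kernel(u, v)


u_i, v_j = [1.0, 2.0], [3.0, 4.0]
linear_prediction = predict(u_i, v_j)                     # 1*3 + 2*4
scaled_logistic = predict(u_i, v_j, k_logistic, c=100.0)  # stays within (0, 100)
```

Because the logistic kernel is bounded between 0 and 1, choosing a = 0 and c = 100 keeps predictions inside the 0 to 100 rating range of the dataset.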
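
The stochastic gradient method from section 3 can be sketched on the small 3-by-3 example, whose known ratings are (u1, i1) = 1, (u1, i2) = 3, (u2, i1) = 2, (u3, i2) = 1 and (u3, i3) = 3. This is a minimal pure-Python illustration with the linear kernel and two features; the hyperparameters (`lr`, `reg`, `epochs`, `seed`) and function names are assumptions, and the factor 2 of the gradient is folded into the learning rate.

```python
import math
import random
from typing import List, Tuple

Rating = Tuple[int, int, float]  # (user index, item index, score)


def dot(a: List[float], b: List[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def sgd_mf(ratings: List[Rating], n_users: int, n_items: int, k: int = 2,
           lr: float = 0.05, reg: float = 0.01, epochs: int = 2000,
           seed: int = 0):
    """Fit user factors U and item factors V by stochastic gradient descent."""
    rng = random.Random(seed)
    U = [[rng.uniform(-0.1, 0.1) for _ in range(k)] for _ in range(n_users)]
    V = [[rng.uniform(-0.1, 0.1) for _ in range(k)] for _ in range(n_items)]
    samples = list(ratings)
    for _ in range(epochs):
        rng.shuffle(samples)  # visit the known ratings in random order
        for i, j, r in samples:
            err = r - dot(U[i], V[j])
            for f in range(k):
                u_if, v_jf = U[i][f], V[j][f]
                # Step against the gradient of the regularized squared error.
                U[i][f] += lr * (err * v_jf - reg * u_if)
                V[j][f] += lr * (err * u_if - reg * v_jf)
    return U, V


def rmse(ratings: List[Rating], U, V) -> float:
    return math.sqrt(sum((r - dot(U[i], V[j])) ** 2 for i, j, r in ratings)
                     / len(ratings))


known = [(0, 0, 1.0), (0, 1, 3.0), (1, 0, 2.0), (2, 1, 1.0), (2, 2, 3.0)]
U, V = sgd_mf(known, n_users=3, n_items=3)
train_error = rmse(known, U, V)
guess_u1_i3 = dot(U[0], V[2])  # fills one of the "?" cells
```

With only five known ratings the factors fit the training data closely, so the filled-in "?" values are illustrative rather than meaningful predictions.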
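
The block formula from section 4, where each block C_ij is the sum over k of A_ik times B_kj, can be checked on a small example. The sketch below is plain Python without Hadoop; the helper names and the cut positions are assumptions. In a distributed setting each block product could be computed by a separate task and the partial results summed afterwards, which is the reading assumed here.

```python
from typing import List

Matrix = List[List[int]]


def matmul(A: Matrix, B: Matrix) -> Matrix:
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]


def matadd(X: Matrix, Y: Matrix) -> Matrix:
    return [[x + y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]


def split_blocks(M: Matrix, row_cuts: List[int], col_cuts: List[int]):
    """Split M into a grid of blocks; cuts are boundaries such as [0, 2, 3]."""
    return [[[row[c0:c1] for row in M[r0:r1]]
             for c0, c1 in zip(col_cuts, col_cuts[1:])]
            for r0, r1 in zip(row_cuts, row_cuts[1:])]


def block_matmul(A_blocks, B_blocks):
    """C_ij = sum over k of A_ik * B_kj, computed block by block."""
    S = len(B_blocks)
    C = []
    for A_row in A_blocks:
        C_row = []
        for j in range(len(B_blocks[0])):
            acc = matmul(A_row[0], B_blocks[0][j])
            for k in range(1, S):
                acc = matadd(acc, matmul(A_row[k], B_blocks[k][j]))
            C_row.append(acc)
        C.append(C_row)
    return C


def join_blocks(blocks) -> Matrix:
    """Stitch a grid of blocks back into one matrix."""
    out = []
    for block_row in blocks:
        for r in range(len(block_row[0])):
            out.append([x for blk in block_row for x in blk[r]])
    return out


A = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
B = [[1, 0], [0, 1], [2, 3]]
# The column cuts of A must match the row cuts of B.
A_blocks = split_blocks(A, row_cuts=[0, 2, 3], col_cuts=[0, 1, 3])
B_blocks = split_blocks(B, row_cuts=[0, 1, 3], col_cuts=[0, 1, 2])
C = join_blocks(block_matmul(A_blocks, B_blocks))
```

The only constraint on the partition is that the column cuts of A equal the row cuts of B, so that every product A_ik B_kj is well defined.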