Correctness criteria and proof techniques

advertisement

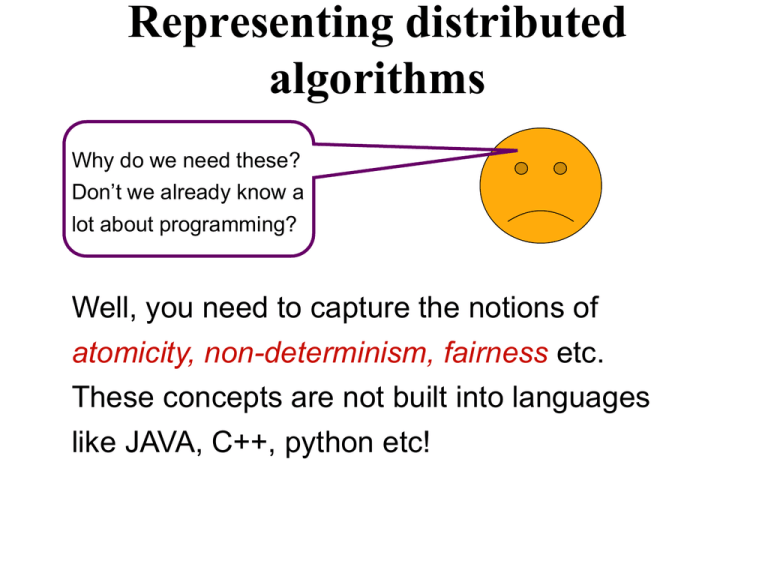

Representing distributed algorithms

Why do we need these? Don't we already know a lot about programming?

Well, you need to capture the notions of atomicity, non-determinism, fairness, etc. These concepts are not built into languages like Java, C++, Python, etc.!

Syntax & semantics: guarded actions

<guard G> → <action A>

is equivalent to

if G then A

(Borrowed from E.W. Dijkstra: A Discipline of Programming)

Syntax & semantics: guarded actions

• Sequential actions: S0; S1; S2; … ; Sn

• Alternative constructs: if … fi

• Repetitive constructs: do … od

The specification is useful for representing abstract algorithms, not executable code.

Syntax & semantics

Alternative construct

if    G1 → S1
[]    G2 → S2
      …
[]    Gn → Sn
fi

When no guard is true, skip (do nothing). When multiple guards are true, the choice of the action to be executed is completely arbitrary.
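Here is a minimal Python sketch of the alternative construct. It assumes guards and actions are Python functions over a mutable state, and the names `if_fi` and `guarded_actions` are hypothetical. The arbitrary choice is modelled with `random.choice`. Following the slide, the construct skips when no guard holds.

```python
import random

def if_fi(state, guarded_actions, rng=random):
    """Alternative construct (a sketch).

    guarded_actions is a list of (guard, action) pairs: guards read `state`,
    actions update it in place. One enabled action is picked arbitrarily;
    if no guard holds, nothing happens (skip)."""
    enabled = [action for guard, action in guarded_actions if guard(state)]
    if enabled:
        rng.choice(enabled)(state)
    return state

# m := max(x, y):   if x >= y -> m := x [] y >= x -> m := y fi
maximum = [
    (lambda s: s["x"] >= s["y"], lambda s: s.update(m=s["x"])),
    (lambda s: s["y"] >= s["x"], lambda s: s.update(m=s["y"])),
]
print(if_fi({"x": 3, "y": 5}, maximum)["m"])  # 5
```

When x = y, both guards hold and either action may run. Both give the same result, which is the point of writing the program this way.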

Syntax & semantics

Repetitive construct

do    G1 → S1
[]    G2 → S2
      …
[]    Gn → Sn
od

Keep executing the actions until all guards are false, at which point the program terminates. When multiple guards are true, the choice of the action is arbitrary.
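The repetitive construct can be sketched the same way, again with hypothetical names. The example is a classic non-deterministic loop: swap any adjacent pair that is out of order. Whatever choices are made, the loop stops only when every guard is false, that is, when the list is sorted. `max_steps` is an assumed safety bound so that a non-terminating program cannot hang the demo.

```python
import random

def do_od(state, guarded_actions, rng=random, max_steps=10_000):
    """Repetitive construct (a sketch): repeatedly run an arbitrarily chosen
    enabled action; terminate as soon as every guard is false."""
    for _ in range(max_steps):
        enabled = [action for guard, action in guarded_actions if guard(state)]
        if not enabled:
            return state  # all guards false: the loop terminates
        rng.choice(enabled)(state)
    raise RuntimeError("no termination within max_steps")

def swap(i):
    """Action that exchanges q[i] and q[i + 1]."""
    def act(q):
        q[i], q[i + 1] = q[i + 1], q[i]
    return act

# do q0 > q1 -> swap(0) [] q1 > q2 -> swap(1) [] q2 > q3 -> swap(2) od
sorter = [(lambda q, i=i: q[i] > q[i + 1], swap(i)) for i in range(3)]
print(do_od([4, 1, 3, 2], sorter, random.Random(0)))  # [1, 2, 3, 4]
```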

Example: graph coloring

(Figure: a graph of four processes, each labeled with its current color.)

There are four processes and two colors, 0 and 1. The system has to reach a configuration in which no two neighboring processes have the same color.

{program for process i}
c[i] = color of process i
do ∃j ∈ neighbor(i): c[j] = c[i] → c[i] := 1 - c[i] od

Will the above computation terminate?
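Whether the loop terminates depends on how the processes are scheduled. The sketch below assumes a 4-cycle topology; the original figure is not recoverable, so this graph is hypothetical. It models simultaneous moves by letting every moving process read the same old colors.

```python
def flip_conflicted(colors, neighbors, movers):
    """Every process in `movers` evaluates its guard on the old colors and,
    if some neighbor has the same color, flips its own color."""
    new = list(colors)
    for i in movers:
        if any(colors[j] == colors[i] for j in neighbors[i]):
            new[i] = 1 - colors[i]
    return new

# Hypothetical topology: a 4-cycle p0 - p1 - p2 - p3 - p0.
ring = {0: [1, 3], 1: [0, 2], 2: [1, 3], 3: [2, 0]}

state = [0, 0, 0, 0]
for _ in range(4):                    # all four processes move together
    state = flip_conflicted(state, ring, movers=range(4))
    print(state)                      # alternates [1, 1, 1, 1] / [0, 0, 0, 0]

state = [0, 0, 0, 0]
for i in (0, 2):                      # one process at a time instead
    state = flip_conflicted(state, ring, movers=[i])
print(state)                          # [1, 0, 1, 0]: a proper coloring
```

Under the simultaneous schedule the system oscillates forever. A different schedule from the same start reaches a proper coloring.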

Consider another example

program   uncertain;
define    x : integer;
initially x = 0
do    x < 4 → x := x + 1
[]    x = 3 → x := 0
od

Question. Will the program terminate?

(Our goal here is to understand fairness.)
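To see how the answer hinges on the scheduler, here is a small simulation of program `uncertain`. The function `run_uncertain` and the 100-step budget are assumptions for illustration. A scheduler is any function that picks one action name from the enabled ones.

```python
def run_uncertain(choose, max_steps=100):
    """Run program `uncertain` (x starts at 0) under scheduler `choose`.
    Returns the final x if the loop terminates within max_steps, else None."""
    x = 0
    for _ in range(max_steps):
        enabled = []
        if x < 4:
            enabled.append("inc")     # x < 4 -> x := x + 1
        if x == 3:
            enabled.append("reset")   # x = 3 -> x := 0
        if not enabled:
            return x                  # all guards false: terminated
        x = x + 1 if choose(enabled) == "inc" else 0
    return None                       # still running after max_steps

print(run_uncertain(lambda acts: acts[0]))   # 4: always prefer "inc"
print(run_uncertain(lambda acts: acts[-1]))  # None: "reset" whenever x = 3
```

The second schedule still executes both actions infinitely often. The loop can therefore run forever without any action being starved.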

The adversary

A distributed computation can be viewed as a game between the system and an adversary. The adversary may come up with feasible schedules to challenge the system (and cause “bad things”). A correct algorithm must be able to prevent those bad things from happening.

Non-determinism

{Program for a token server; it has a single token}
repeat
    if (req1 ∧ token) then give the token to client1
    else if (req2 ∧ token) then give the token to client2
    else if (req3 ∧ token) then give the token to client3
forever

Now, assume that all three requests are sent simultaneously. Client 2 or 3 may never get the token! The outcome could have been different if the server had made a non-deterministic choice.

(Figure: a token server with clients 1, 2 and 3.)
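The small simulation below contrasts the fixed-priority chain with a non-deterministic choice. For simplicity it assumes that all three clients request in every round and that the token comes back before the next round. `priority_server` and `nondeterministic_server` are hypothetical names.

```python
import random
from collections import Counter

CLIENTS = (1, 2, 3)

def priority_server(rounds):
    """The if-then-else chain: client 1 is always tested first."""
    grants = Counter()
    for _ in range(rounds):
        requests = set(CLIENTS)        # assumption: everyone keeps requesting
        for c in CLIENTS:
            if c in requests:          # the first matching branch wins
                grants[c] += 1
                break
    return grants

def nondeterministic_server(rounds, rng):
    """Same server, but any pending request may be served."""
    grants = Counter()
    for _ in range(rounds):
        grants[rng.choice(CLIENTS)] += 1
    return grants

print(priority_server(300))                            # Counter({1: 300})
print(nondeterministic_server(300, random.Random(1)))  # all three served
```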

Examples of non-determinism

If multiple processes are ready to execute actions, then which one executes its action first is non-deterministic.

Message propagation delays are arbitrary, and the order of message reception is non-deterministic.

Determinism caters to a specific order and is a special case of non-determinism.

Atomicity (or granularity)

Atomic = all or nothing

Atomic actions = indivisible actions

do    red message → x := 0   {red action}
[]    blue message → x := 7  {blue action}
od

Regardless of how non-determinism is handled, we would expect that the value of x will be an arbitrary sequence of 0's and 7's. Right or wrong?

Atomicity (continued)

do    red message → x := 0   {red action}
[]    blue message → x := 7  {blue action}
od

Let x be a 3-bit integer x2 x1 x0, so x := 7 means (x2 := 1, x1 := 1, x0 := 1), and x := 0 means (x2 := 0, x1 := 0, x0 := 0).

If the assignment is not atomic, then many interleavings are possible, leading to any value of x between 0 and 7.

So, the answer depends on the atomicity of the assignment.
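The claim can be checked by brute force. The sketch assumes each process writes the bits in the order x0, x1, x2. It enumerates all C(6, 3) = 20 interleavings of the six single-bit writes and collects the final values of x.

```python
from itertools import combinations

BITS = 3

def final_values():
    """All final values of x when x := 0 (red) and x := 7 (blue) each run
    once as separate per-bit writes, over every interleaving."""
    outcomes = set()
    steps = 2 * BITS
    for red_slots in combinations(range(steps), BITS):  # where red writes go
        x = [None] * BITS          # initial value irrelevant: every bit is written
        red_i = blue_i = 0
        for slot in range(steps):
            if slot in red_slots:
                x[red_i] = 0       # red writes bit red_i := 0
                red_i += 1
            else:
                x[blue_i] = 1      # blue writes bit blue_i := 1
                blue_i += 1
        outcomes.add(sum(bit << k for k, bit in enumerate(x)))
    return outcomes

print(sorted(final_values()))      # [0, 1, 2, 3, 4, 5, 6, 7]
```

With atomic assignments, only the two serial orders exist, and the final value is always 0 or 7.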

Atomicity (continued)

Does hardware guarantee any form of atomicity? Yes! (examples?)

Transactions are atomic by definition (in spite of process failures). Also, critical section code is atomic.

We will assume that G → A is an “atomic operation.” Does it make a difference if it is not so? Consider two processes sharing the variables x and y:

{first process}    if x ≠ y → x := y fi
{second process}   if x ≠ y → y := x fi
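Here is a sketch of the two processes above, called P and Q here (hypothetical names), with x = 1 and y = 2 initially. In `atomic`, each guarded action is one indivisible step. In `split`, a process first tests its guard and reads the other variable, and writes only in a later step. This finer granularity is an assumption for illustration.

```python
from itertools import permutations

def atomic(order, x, y):
    """Each guarded action runs as one indivisible step."""
    for p in order:
        if x != y:
            if p == "P":
                x = y          # P: if x != y -> x := y fi
            else:
                y = x          # Q: if x != y -> y := x fi
    return x, y

def split(order, x, y):
    """Each process takes two steps: (1) test the guard and read the other
    variable, (2) write. Steps of the other process may fall in between."""
    pending = {}
    for p in order:
        if p not in pending:                       # step 1
            pending[p] = (x != y, y if p == "P" else x)
        else:                                      # step 2
            guard, value = pending[p]
            if guard:
                if p == "P":
                    x = value
                else:
                    y = value
    return x, y

print({atomic(o, 1, 2) for o in permutations("PQ")})    # x == y in every outcome
print({split(o, 1, 2) for o in permutations("PPQQ")})   # also contains (2, 1)
```

With atomic guarded actions, the two values always agree afterwards. With the split version, consider the interleaving in which both processes test their guards before either writes. The values are swapped to (2, 1), and the guards are still true.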

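Atomicity (continued)

{Program for P}
define b : boolean
initially b = true
do    b → send msg m to Q
[]    ¬empty(R, P) → receive msg; b := false
od

(Figure: process P, with local variable b, receives messages from R and sends messages to Q.)

Suppose it takes 15 seconds to send the message. After 5 seconds, P receives a message from R. Will it stop sending the remainder of the message?

NO. The guarded action b → send msg m to Q is atomic, so the second action can be scheduled only after the entire message has been sent.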

Fairness

Fairness defines the choices or restrictions on the scheduling of actions. No such restriction implies an unfair scheduler. For fair schedulers, the following types of fairness have received attention:

– Unconditional fairness

– Weak fairness

– Strong fairness

Scheduler / demon / adversary

Fairness

program test
define x : integer  {initial value unknown}
do    true  → x := 0
[]    x = 0 → x := 1
[]    x = 1 → x := 2
od

An unfair scheduler may never schedule the second (or the third) action. So, x may always be equal to zero.

An unconditionally fair scheduler will eventually give every statement a chance to execute without checking its eligibility. (Example: the process scheduler in a multiprogrammed OS.)

Weak fairness

• A scheduler is weakly fair when it eventually executes every guarded action whose guard becomes true and remains true thereafter.

program test
define x : integer  {initial value unknown}
do    true  → x := 0
[]    x = 0 → x := 1
[]    x = 1 → x := 2
od

• A weakly fair scheduler will eventually execute the second action, but may never execute the third action. Why?
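One way to see the answer is to write down a concrete schedule. The sketch below uses hypothetical names. Starting from x = 5, it runs x := 1 whenever x = 0 and x := 0 otherwise. The first two actions run forever, so the schedule is weakly fair. Yet the third guard is falsified right after it becomes true, so it never remains true.

```python
GUARDS = [lambda x: True, lambda x: x == 0, lambda x: x == 1]
EFFECTS = [lambda x: 0, lambda x: 1, lambda x: 2]

def run(scheduler, x, steps):
    """Run program `test`; scheduler(x, enabled) returns an action index.
    Returns how often each action was executed and how often it was enabled."""
    executed, enabled_count = [0, 0, 0], [0, 0, 0]
    for _ in range(steps):
        enabled = [a for a in range(3) if GUARDS[a](x)]
        for a in enabled:
            enabled_count[a] += 1
        a = scheduler(x, enabled)
        executed[a] += 1
        x = EFFECTS[a](x)
    return executed, enabled_count

def weakly_fair_adversary(x, enabled):
    # Run x := 1 whenever x = 0, otherwise x := 0 (which is always enabled).
    return 1 if x == 0 else 0

executed, enabled_count = run(weakly_fair_adversary, x=5, steps=100)
print(executed)       # [50, 50, 0]: the third action never runs...
print(enabled_count)  # [100, 50, 49]: ...although its guard held 49 times
```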

Strong fairness

program test
define x : integer  {initial value unknown}
do    true  → x := 0
[]    x = 0 → x := 1
[]    x = 1 → x := 2
od

A scheduler is strongly fair when it eventually executes every guarded action whose guard is true infinitely often.

The third statement will be executed under a strongly fair scheduler. Why?

Study more examples to reinforce these concepts.
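For contrast, here is a sketch of one strongly fair scheduler: among the enabled actions, it always picks the one executed least recently. With a finite set of actions, this policy should not pass over an action that is enabled infinitely often, because every competitor it loses to moves behind it in the order. The policy and the names are assumptions, not from the slides.

```python
GUARDS = [lambda x: True, lambda x: x == 0, lambda x: x == 1]
EFFECTS = [lambda x: 0, lambda x: 1, lambda x: 2]

def least_recently_executed(x, steps):
    """Run program `test`, always choosing the enabled action whose last
    execution is oldest (never executed counts as oldest).
    Returns the value of x after each step."""
    last_run = [-1, -1, -1]
    trace = []
    for t in range(steps):
        enabled = [a for a in range(3) if GUARDS[a](x)]
        a = min(enabled, key=lambda i: last_run[i])
        last_run[a] = t
        x = EFFECTS[a](x)
        trace.append(x)
    return trace

print(least_recently_executed(x=5, steps=6))   # [0, 1, 2, 0, 1, 2]
```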

Program correctness

The state-transition model

A global state S ∈ s0 × s1 × … × sm  {sk = local state of process k}

S0 → S1 → S2 → …  (each arrow is a state transition caused by an action)

Each state transition is caused by an action of an eligible process.

(Figure: a state-transition graph over states A to L, starting from an initial state.)

We reason using interleaving semantics, and assume that concurrent actions are serialized in an arbitrary order.

A sample computation (or behavior) is ABGHIFL.

Correctness criteria

• Safety properties: bad things never happen

• Liveness properties: good things eventually happen

Testing vs. Proof

Testing: Apply inputs and observe whether the outputs satisfy the specifications. Exhaustive (foolproof) testing can be painfully slow, even for small systems. Most testing is partial.

Proof: Has a mathematical foundation, and gives a complete guarantee. Sometimes it is not scalable.

Testing vs. Proof

To test this program, you have to test all possible interleavings. With n processes p0, p1, …, pn-1, and m steps per process, the number of interleavings is

$$\frac{(nm)!}{(m!)^{n}}$$

The state explosion problem

(Figure: four processes p0, p1, p2, p3, each executing step1, step2, step3.)
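The formula can be sanity-checked by brute force. An interleaving is a distinct ordering of the multiset that contains m copies of each process label, because each process's own steps keep their program order.

```python
from itertools import permutations
from math import factorial

def interleavings_formula(n, m):
    """(n*m)! / (m!)^n: ways to merge n sequences of m steps each."""
    return factorial(n * m) // factorial(m) ** n

def interleavings_brute(n, m):
    """Count distinct orderings of m copies of each of n process labels."""
    steps = [p for p in range(n) for _ in range(m)]
    return len(set(permutations(steps)))

print(interleavings_formula(4, 3))   # 369600 for four processes with 3 steps each
print(interleavings_brute(2, 3), interleavings_formula(2, 3))  # 20 20
```

Even four processes with three steps each give 369,600 interleavings, which illustrates the state explosion problem.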

Example: Mutual Exclusion

Process 0
do true →
    Entry protocol
    Critical section
    Exit protocol
od

Process 1
do true →
    Entry protocol
    Critical section
    Exit protocol
od

Safety properties

(1) There is no deadlock.

(2) At most one process is in its critical section.

Liveness property

A process trying to enter the CS must eventually succeed. (This is also called the progress property.)

Exercise

program mutex 1
{two-process mutual exclusion algorithm: shared memory model}
define busy : shared boolean  {initially busy = false}

{process 0}
do true →
    do busy → skip od;
    busy := true;
    critical section;
    busy := false;
    {remaining code}
od

{process 1}
do true →
    do busy → skip od;
    busy := true;
    critical section;
    busy := false;
    {remaining code}
od

Does this mutual exclusion protocol satisfy the liveness and safety properties?
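One way to answer the question is a tiny explicit-state model checker. The sketch encodes each process by a program counter, using a hypothetical encoding: `wait` for the busy-waiting loop, `set` before busy := true, `cs` inside the critical section, and `exit` before busy := false. It then searches all interleavings breadth-first.

```python
from collections import deque

WAIT, SET, CS, EXIT = "wait", "set", "cs", "exit"   # hypothetical encoding

def step(state, p):
    """One atomic step of process p. state = ((pc0, pc1), busy)."""
    pcs, busy = list(state[0]), state[1]
    if pcs[p] == WAIT:
        if not busy:              # `do busy -> skip od` exits once busy is false
            pcs[p] = SET
    elif pcs[p] == SET:
        busy = True               # busy := true
        pcs[p] = CS
    elif pcs[p] == CS:
        pcs[p] = EXIT             # leave the critical section
    else:
        busy = False              # busy := false, then loop back
        pcs[p] = WAIT
    return tuple(pcs), busy

def find_safety_violation():
    """Breadth-first search over all interleavings; return the shortest
    trace that puts both processes in the critical section, or None."""
    start = ((WAIT, WAIT), False)
    parent = {start: None}
    queue = deque([start])
    while queue:
        state = queue.popleft()
        if state[0] == (CS, CS):
            trace = []
            while state is not None:
                trace.append(state)
                state = parent[state]
            return trace[::-1]
        for p in (0, 1):
            nxt = step(state, p)
            if nxt not in parent:
                parent[nxt] = state
                queue.append(nxt)
    return None

for s in find_safety_violation():
    print(s)
```

The search reports a four-step trace. Both processes see busy = false before either sets it, so both enter the critical section and safety fails.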

Safety invariants

Invariant means: something meaningful should always hold.

Example: Total number of processes in the CS ≤ 1 (mutual exclusion problem).

Another safety property is partial correctness. It implies that “if the program terminates, then the postcondition will hold.”

Consider the following:

do G0 → S0 [] G1 → S1 [] … [] Gk → Sk od

Safety invariant: ¬(G0 ∨ G1 ∨ … ∨ Gk) ⇒ postcondition

It does not say whether the program will terminate (termination is a liveness property).

Total correctness = partial correctness + termination.

Exercise

Starting from the given initial state, devise an algorithm to color the nodes of the graph using the colors 0 and 1, so that no two adjacent nodes have the same color.

(Figure: a graph of processes p0, p1, p2, p3; initially two nodes have color 0 and two have color 1.)

program colorme  {for process Pi}
define color c ∈ {0, 1}
Initially colors are arbitrary
do ∃j ∈ neighbor(i) : (c[i] = c[j]) → c[i] := 1 - c[i] od

Is the program partially correct? YES (why?)

Does it terminate? NO (why?)

Liveness properties

Eventuality is tricky. There is no need to guarantee when the desired thing will happen, as long as it happens.

Some examples:

The message will eventually reach the receiver.

The process will eventually enter its critical section.

The faulty process will eventually be diagnosed.

Fairness (an action will eventually be scheduled).

The program will eventually terminate.

The criminal will eventually be caught.

Absence of liveness cannot be determined from a finite prefix of the computation, since the good thing may still happen later.

Proving safety

define  c1, c2 : channel;  {init c1 = c2 = null}
        r, t : integer;    {init r = 5, t = 5}

{program for T}
1   do  t > 0 → send msg along c1; t := t - 1
2   []  ¬empty(c2) → rcv msg from c2; t := t + 1
    od

{program for R}
3   do  ¬empty(c1) → rcv msg from c1; r := r + 1
4   []  r > 0 → send msg along c2; r := r - 1
    od

We want to prove the safety property P:

P ≡ n1 + n2 ≤ 10

where n1 = # of messages in c1 and n2 = # of messages in c2.

(Figure: transmitter T, with variable t, sends messages to receiver R, with variable r, along channel c1; R sends messages back to T along c2.)

Proving safety

n1, n2 = # of messages in c1 and c2, respectively.

We will establish the following invariant:

I ≡ (t ≥ 0) ∧ (r ≥ 0) ∧ (n1 + t + n2 + r = 10)

I ⇒ P, because t ≥ 0 and r ≥ 0 give n1 + n2 = 10 - t - r ≤ 10. Check if I holds after every action.


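(Figure: a sample state with t = 4 and r = 1.)

Use the method of induction: show that I holds initially, and that it holds after each action. For example, action 1 decrements t and increments n1, so the sum n1 + t + n2 + r is unchanged, and its guard t > 0 ensures t ≥ 0 afterwards.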
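The induction can also be exercised by simulation. The sketch below uses hypothetical names and models each channel only by its message count. It checks I, and hence P, after every randomly chosen action. A simulation only samples computations, so it complements the proof rather than replacing it.

```python
import random

def check_invariant(steps=10_000, seed=0):
    """Randomly interleave actions 1-4 and check I after every step."""
    rng = random.Random(seed)
    t, r, n1, n2 = 5, 5, 0, 0                     # initial state

    def invariant():
        return t >= 0 and r >= 0 and n1 + t + n2 + r == 10

    assert invariant()                            # base case
    for _ in range(steps):
        enabled = [a for a, g in ((1, t > 0), (2, n2 > 0),
                                  (3, n1 > 0), (4, r > 0)) if g]
        action = rng.choice(enabled)
        if action == 1:                           # T sends along c1
            t, n1 = t - 1, n1 + 1
        elif action == 2:                         # T receives from c2
            n2, t = n2 - 1, t + 1
        elif action == 3:                         # R receives from c1
            n1, r = n1 - 1, r + 1
        else:                                     # R sends along c2
            r, n2 = r - 1, n2 + 1
        assert invariant(), (t, r, n1, n2)        # inductive step
        assert n1 + n2 <= 10                      # P follows from I
    return True

print(check_invariant())   # True
```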

Proving liveness

Global state:   S1 → S2 → S3 → S4 → …
                ↓f   ↓f   ↓f   ↓f
                w1   w2   w3   w4

• w1, w2, w3, w4 ∈ WF

• WF is a well-founded set: its elements are ordered by », and every nonempty subset has a minimal element, so there is no infinite descending chain w1 » w2 » w3 » w4 » …

f is called a variant function.

If every step decreases f, i.e., f(si) » f(si+1) » f(si+2) » …, then, because WF has no infinite descending chain, the computation will definitely terminate!

Example?
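As a possible example (not from the slides), consider the subtraction form of Euclid's algorithm: do x > y → x := x - y [] y > x → y := y - x od, for positive x and y. The variant function f = x + y maps states into the natural numbers, which are well-founded, and every action strictly decreases it. The sketch asserts this at each step.

```python
def gcd_with_variant(x, y):
    """do x > y -> x := x - y [] y > x -> y := y - x od, for x, y > 0,
    checking that the variant f = x + y strictly decreases in the naturals."""
    assert x > 0 and y > 0
    f = x + y
    while x != y:                 # some guard is true
        if x > y:
            x -= y
        else:
            y -= x
        assert 0 <= x + y < f     # f decreases and stays in WF
        f = x + y
    return x                      # all guards false: x = y = gcd

print(gcd_with_variant(12, 18))   # 6
```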

Proof of liveness: an example

Clock phase synchronization

(Figure: clocks 0, 1, 2, 3, …, n-1.)

System of n clocks ticking at the same rate.

Each clock is 3-valued, i.e., it ticks as 0, 1, 2, 0, 1, 2, …

A failure may arbitrarily alter the clock phases.

The clocks need to return to the same phase.

Proof of liveness: an example

Clock phase synchronization

{Program for each clock}
{c[k] = phase of clock k, initially arbitrary; ∀k: c[k] ∈ {0, 1, 2}}
do    ∃j ∈ N(i) :: c[j] = c[i] + 1 mod 3 → c[i] := c[i] + 2 mod 3
[]    ∀j ∈ N(i) :: c[j] ≠ c[i] + 1 mod 3 → c[i] := c[i] + 1 mod 3
od

Show that eventually all clocks will return to the same phase (convergence), and continue to be in the same phase (closure).

Proof of convergence

(Figure: clocks 0 to n-1 with sample phases, and arrows drawn between neighboring clocks.)

Understand the game of arrows.

Let D = d[0] + d[1] + d[2] + … + d[n-1], where

d[i] = 0      if no arrow points towards clock i;
     = i + 1  if a ← points towards clock i;
     = n - i  if a → points towards clock i;
     = 1      if both → and ← point towards clock i.

By definition, D ≥ 0. Also, as long as some arrow remains, D decreases after every step in the system. Since D is a non-negative integer, it cannot decrease forever, so the number of arrows must reduce to 0.
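The argument can be checked exhaustively for small n. The sketch rests on three assumptions:

1. The clocks form a line, so N(i) = {i-1, i+1} within range.
2. All clocks take a step simultaneously.
3. An arrow points towards clock i from a neighbor whose phase is c[i] + 1 mod 3, i.e., one phase ahead.

Under these assumptions, it checks every initial state. D should strictly decrease until it reaches 0, all phases should then be equal, and they should stay equal (closure).

```python
from itertools import product

def sync_step(c):
    """One simultaneous step of every clock on the line 0 - 1 - ... - (n-1):
    a clock with a neighbor one phase ahead adds 2, otherwise adds 1 (mod 3)."""
    n = len(c)
    new = []
    for i in range(n):
        nbrs = [j for j in (i - 1, i + 1) if 0 <= j < n]
        ahead = any(c[j] == (c[i] + 1) % 3 for j in nbrs)
        new.append((c[i] + (2 if ahead else 1)) % 3)
    return new

def D(c):
    """Sum of d[i], with arrows assumed to point from a neighbor one phase ahead."""
    n = len(c)
    total = 0
    for i in range(n):
        from_right = i + 1 < n and c[i + 1] == (c[i] + 1) % 3  # "<-" towards i
        from_left = i > 0 and c[i - 1] == (c[i] + 1) % 3       # "->" towards i
        if from_right and from_left:
            total += 1
        elif from_right:
            total += i + 1
        elif from_left:
            total += n - i
    return total

def check(n):
    """Check convergence and closure from every initial state of n clocks."""
    for start in product(range(3), repeat=n):
        c = list(start)
        while D(c) > 0:
            nxt = sync_step(c)
            assert D(nxt) < D(c)             # D strictly decreases
            c = nxt
        assert len(set(c)) == 1              # no arrows left: same phase
        assert len(set(sync_step(c))) == 1   # closure: they stay together
    return True

print(all(check(n) for n in range(2, 7)))    # True
```

For example, the phases 0, 1, 2, 0 on four clocks give D = 1 + 2 + 3 = 6. Every clock lags its right neighbor, so three ← arrows are present.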