

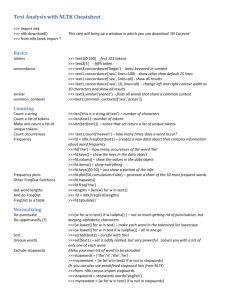

Document Classification using the Natural Language Toolkit
Ben Healey
http://benhealey.info
@BenHealey
Source: IStockPhoto

http://upload.wikimedia.org/wikipedia/commons/b/b6/FileStack_retouched.jpg
The Need for Automation

http://upload.wikimedia.org/wikipedia/commons/d/d6/Cat_loves_sweets.jpg
Take ur pick!
Class:
Features:
- #Words
- % ALLCAPS
- Unigrams
- Sender
- And so on.

Diagram: The Development Set → Classification Algo. → Trained Classifier (Model); New Document (Class Unknown) → Document Features → Trained Classifier (Model) → Classified Document.

Relevant NLTK Modules
• Feature Extraction
  – from nltk.corpus import words, stopwords
  – from nltk.stem import PorterStemmer
  – from nltk.tokenize import WordPunctTokenizer
  – from nltk.collocations import BigramCollocationFinder
  – from nltk.metrics import BigramAssocMeasures
  – See http://text-processing.com/demo/ for examples
• Machine Learning Algos and Tools
  – from nltk.classify import NaiveBayesClassifier
  – from nltk.classify import DecisionTreeClassifier
  – from nltk.classify import MaxentClassifier
  – from nltk.classify import WekaClassifier
  – from nltk.classify.util import accuracy

NaiveBayesClassifier
Bayes' rule gives

$$P(\text{label} \mid \text{features}) = \frac{P(\text{label}) \cdot P(\text{features} \mid \text{label})}{P(\text{features})}$$

and, with the "naive" assumption that the features $f_1, \dots, f_n$ are conditionally independent given the label,

$$P(\text{label} \mid \text{features}) = \frac{P(\text{label}) \cdot P(f_1 \mid \text{label}) \cdots P(f_n \mid \text{label})}{P(\text{features})}$$

http://61.153.44.88/nltk/0.9.5/api/nltk.classify.naivebayes-module.html

http://www.educationnews.org/commentaries/opinions_on_education/91117.html
517,431 Emails
Source: IStockPhoto

Prep: Extract and Load
• Sample* of 20,581 plaintext files
• import MySQLdb, os, random, string
• MySQL via the MySQLdb (Python DB-API) interface
• File, string manipulation
• Key fields separated out
  – To, From, CC, Subject, Body
* Folders for 7 users with a large number of emails. So not representative!
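A minimal sketch of the "key fields separated out" step, plus the #To, #CCd, #Words, %digits and %CAPS counts listed on the next slide, assuming single-part plaintext messages like the Enron maildir files. It swaps Python's standard `email` package in for the hand-rolled file and string manipulation described above. The helper `parse_email`, every record key other than `msg_subject`, `msg_body` and `num_words_in_body` (which the deck's feature code uses), and the exact definitions of %digits and %CAPS are illustrative assumptions, not the author's code.

```python
from email import message_from_string
from email.utils import getaddresses


def parse_email(raw_text):
    """Hypothetical helper: split a raw email into key fields plus simple counts.

    Assumes a single-part plaintext message (get_payload() returns a str).
    """
    msg = message_from_string(raw_text)
    # getaddresses() parses comma-separated and "Name <addr>" address lists
    to_addrs = [addr for _, addr in getaddresses(msg.get_all('To', []))]
    cc_addrs = [addr for _, addr in getaddresses(msg.get_all('Cc', []))]
    body = msg.get_payload()

    # Assumed definitions: %digits over non-whitespace characters,
    # %CAPS over alphabetic characters only
    chars = [c for c in body if not c.isspace()]
    letters = [c for c in chars if c.isalpha()]
    pct_digits = (100.0 * sum(c.isdigit() for c in chars) / len(chars)
                  if chars else 0.0)
    pct_caps = (100.0 * sum(c.isupper() for c in letters) / len(letters)
                if letters else 0.0)

    return {
        'msg_from': msg.get('From', ''),
        'msg_to': to_addrs,
        'msg_cc': cc_addrs,
        'msg_subject': msg.get('Subject', ''),
        'msg_body': body,
        'num_to': len(to_addrs),
        'num_cc': len(cc_addrs),
        'num_words_in_body': len(body.split()),
        'pct_digits': pct_digits,
        'pct_caps': pct_caps,
    }


sample = (
    "From: a@example.com\n"
    "To: b@example.com, c@example.com\n"
    "Cc: d@example.com\n"
    "Subject: Hi\n"
    "\n"
    "CALL ME at 555 1234\n"
)
record = parse_email(sample)
print(record['num_to'], record['num_cc'], record['num_words_in_body'], record['pct_caps'])
```

Leaving address parsing to `email.utils.getaddresses` avoids splitting recipient lists on commas by hand; multipart messages would need something like `msg.walk()`, which this sketch does not handle.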

Prep: Extract and Load
• Allocation of random number
• Some feature extraction
  – #To, #CCd, #Words, %digits, %CAPS
• Note: more cleaning could be done
• Code at benhealey.info

    From: james.steffes@enron.com
    To: louise.kitchen@enron.com
    Subject: Re: Agenda for FERC Meeting RE: EOL

    Louise -
    We had decided that not having Mark in the room gave us the ability to wiggle if questions on CFTC vs. FERC regulation arose. As you can imagine, FERC is starting to grapple with the issue that financial trades in energy commodities is regulated under the CEA, not the Federal Power Act or the Natural Gas Act.
    Thanks,
    Jim

    From: pete.davis@enron.com
    To: pete.davis@enron.com
    Subject: Start Date: 1/11/02; HourAhead hour: 5;

    Start Date: 1/11/02; HourAhead hour: 5; No ancillary schedules awarded. No variances detected.
    LOG MESSAGES:
    PARSING FILE -->> O:\Portland\WestDesk\California Scheduling\ISO Final Schedules\2002011105.txt

Class[es] assigned for 1,000 randomly selected messages (a message can carry more than one class, so the counts total 1,132):

| Class | Messages |
|---|---|
| External Relations | 45 |
| Social/Personal | 68 |
| Human Resources | 134 |
| Other/Unclear | 141 |
| Admin/Planning | 158 |
| Info Tech | 167 |
| Regulatory/Accounting | 172 |
| Deals, Trading, Modelling | 247 |

Prep: Show us ur Features
• NLTK toolset
  – from nltk.corpus import words, stopwords
  – from nltk.stem import PorterStemmer
  – from nltk.tokenize import WordPunctTokenizer
  – from nltk.collocations import BigramCollocationFinder
  – from nltk.metrics import BigramAssocMeasures
• Custom code
  – def extract_features(record,stemmer,stopset,tokenizer): …
• Code at benhealey.info

Prep: Show us ur Features
• Features in boolean or nominal form

    # Nominal feature: bucket the body's word count
    if record['num_words_in_body'] <= 20:
        features['message_length'] = 'Very Short'
    elif record['num_words_in_body'] <= 80:
        features['message_length'] = 'Short'
    elif record['num_words_in_body'] <= 300:
        features['message_length'] = 'Medium'
    else:
        features['message_length'] = 'Long'

Prep: Show us ur Features
• Features in boolean or nominal form

    # Boolean unigram features from subject + body
    text = record['msg_subject'] + " " + record['msg_body']
    tokens = tokenizer.tokenize(text)
    # Lower-case before the stopword check (NLTK's stopword lists are lower-case),
    # then stem and drop one-character tokens
    words = [stemmer.stem(x.lower()) for x in tokens
             if x.lower() not in stopset and len(x) > 1]
    for word in words:
        features[word] = True

Sit. Say. Heel.

    import random
    from nltk.classify import NaiveBayesClassifier
    from nltk.classify.util import accuracy

    # dev_set: list of (features, label) pairs; subject: the class being modelled
    random.shuffle(dev_set)
    cutoff = len(dev_set) * 2 // 3   # integer index: 2/3 train, 1/3 test
    train_set = dev_set[:cutoff]
    test_set = dev_set[cutoff:]
    classifier = NaiveBayesClassifier.train(train_set)
    print('accuracy for >', subject, ':', accuracy(classifier, test_set))
    classifier.show_most_informative_features(10)

Most Important Features

Performance: ‘IT’ Model
IMPORTANT: These are ‘cheat’ scores!

| Decile | 9 | 8 | 7 | 6 | 5 | 4 | 3 | 2 | 1 | 0 |
|---|---|---|---|---|---|---|---|---|---|---|
| Mean Prob. | 1.0000 | 0.7364 | 0.0000 | 0.0000 | 0.0000 | 0.0000 | 0.0000 | 0.0000 | 0.0000 | 0.0000 |
| % Social | 1 | 11 | 11 | 8 | 7 | 6 | 7 | 8 | 4 | 5 |
| % IT | 95 | 49 | 2 | 2 | 4 | 4 | 6 | 2 | 2 | 2 |
| % Legal | 2 | 5 | 13 | 11 | 13 | 17 | 16 | 37 | 42 | 16 |
| % Deal | 3 | 13 | 25 | 38 | 41 | 32 | 25 | 21 | 21 | 28 |

Columns that cannot be aligned to deciles (values in original order): % PR 3 4 1 5 4 6 7 15; % HR 7 10 14 11 16 16 18 13 29; % Other and % Admin 1 17 13 30 21 13 16 13 18 16 17 19 28 10 16 12 14 11 14.

Performance: ‘Deal’ Model
IMPORTANT: These are ‘cheat’ scores!

| Decile | 9 | 8 | 7 | 6 | 5 | 4 | 3 | 2 | 1 | 0 |
|---|---|---|---|---|---|---|---|---|---|---|
| Mean Prob. | 1.0000 | 1.0000 | 0.9971 | 0.1680 | 0.0000 | 0.0000 | 0.0000 | 0.0000 | 0.0000 | 0.0000 |
| % HR | 2 | 1 | 3 | 9 | 19 | 21 | 21 | 22 | 3 | 33 |
| % IT | 5 | 4 | 2 | 11 | 9 | 14 | 13 | 18 | 77 | 14 |
| % Legal | 14 | 3 | 17 | 17 | 23 | 18 | 28 | 24 | 13 | 15 |

Columns that cannot be aligned to deciles (values in original order): % PR 2 1 2 3 4 5 5 7 16; % Social 2 3 15 11 8 9 6 4 10; % Other and % Admin 11 6 3 4 18 4 35 17 22 19 17 32 13 25 9 26 2 5 11 20; % Deal 79 93 58 9 4 2 3.

Performance: ‘Social’ Model
IMPORTANT: These are ‘cheat’ scores!

| Decile | 9 | 8 | 7 | 6 | 5 | 4 | 3 | 2 | 1 | 0 |
|---|---|---|---|---|---|---|---|---|---|---|
| Mean Prob. | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 0.7382 | 0.0001 |
| % Social | 9 | 15 | 5 | 2 | 11 | 7 | 15 | 3 | 1 | - |
| % HR | 6 | 17 | 6 | 21 | 32 | 20 | 10 | 15 | 7 | - |
| % IT | 13 | 2 | 3 | 11 | 10 | 13 | 7 | 14 | 4 | 89 |
| % Legal | 40 | 21 | 30 | 24 | 5 | 10 | 14 | 14 | 13 | 1 |
| % Deal | 24 | 21 | 18 | 22 | 10 | 24 | 49 | 24 | 45 | 10 |

Columns that cannot be aligned to deciles (values in original order): % PR 1 7 2 22 10 1 2 -; % Other and % Admin 9 11 18 16 24 20 15 25 9 22 13 22 5 9 22 24 25 9 1 -.

Don’t get burned.
• Biased samples
• Accuracy and rare events
• Features and prior knowledge
• Good modelling is iterative!
  – Resampling and robustness
  – Learning cycles
http://www.ugo.com/movies/mustafa-in-austin-powers

Resources
• NLTK:
  – www.nltk.org/
  – http://www.nltk.org/book
• Enron email datasets:
  – http://www.cs.umass.edu/~ronb/enron_dataset.html
• Free online Machine Learning course from Stanford
  – http://ml-class.com/ (starts in October)
• StreamHacker blog by Jacob Perkins
  – http://streamhacker.com
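Two of the "Don't get burned" points, accuracy on rare events and resampling for robustness, can be illustrated without NLTK. This sketch repeats the 2/3 train, 1/3 test split from the "Sit. Say. Heel." slide several times (repeated random sub-sampling, one simple resampling scheme) and scores a baseline that always predicts the majority class. The helpers `repeated_holdout`, `train_majority` and `predict_majority`, and the synthetic data with 5 rare "IT" messages in 100, are assumptions for illustration. Accuracy stays high on every split even though the baseline never finds a single rare-class message.

```python
import random
import statistics
from collections import Counter


def repeated_holdout(dev_set, train_fn, predict_fn, rare_label, n_repeats=10, seed=0):
    """Repeat a random 2/3 train, 1/3 test split of dev_set.

    dev_set is a list of (features, label) pairs, the shape NLTK classifiers
    train on. Returns per-run accuracies and per-run recall for rare_label
    (runs whose test split contains no rare_label messages are skipped).
    """
    rng = random.Random(seed)
    accuracies, recalls = [], []
    for _ in range(n_repeats):
        shuffled = list(dev_set)
        rng.shuffle(shuffled)
        cutoff = len(shuffled) * 2 // 3  # same 2/3 : 1/3 split as the deck
        train_set, test_set = shuffled[:cutoff], shuffled[cutoff:]
        model = train_fn(train_set)
        pairs = [(predict_fn(model, feats), label) for feats, label in test_set]
        accuracies.append(sum(pred == label for pred, label in pairs) / len(pairs))
        rare_preds = [pred for pred, label in pairs if label == rare_label]
        if rare_preds:
            recalls.append(sum(pred == rare_label for pred in rare_preds) / len(rare_preds))
    return accuracies, recalls


def train_majority(train_set):
    """Hypothetical baseline 'model': the most common label in train_set."""
    return Counter(label for _, label in train_set).most_common(1)[0][0]


def predict_majority(model, features):
    """Ignore the features and always predict the stored majority label."""
    return model


# Synthetic data (an assumption, not the Enron sample): 5 'IT' messages in 100
dev_set = [({}, 'IT')] * 5 + [({}, 'Other')] * 95
accs, recalls = repeated_holdout(dev_set, train_majority, predict_majority, 'IT')
print(f"accuracy: mean {statistics.mean(accs):.3f}, stdev {statistics.stdev(accs):.3f}")
print(f"recall for 'IT' on each split that contained IT messages: {recalls}")
```

With NLTK, `train_fn` could be `NaiveBayesClassifier.train` and `predict_fn` could be `lambda clf, feats: clf.classify(feats)`. Reporting the spread of scores across splits, and recall on the rare class, gives a more honest picture than a single accuracy figure.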