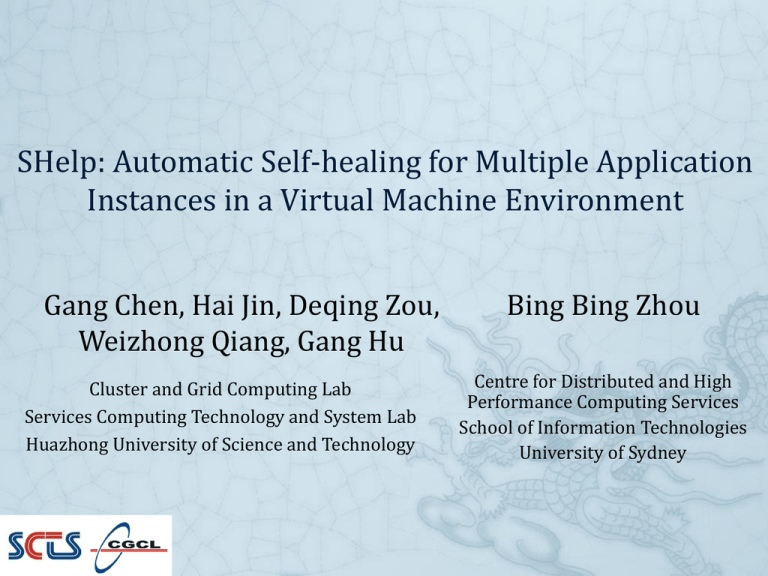

SHelp: Automatic Self-healing for Multiple Application Instances in a Virtual Machine Environment

Gang Chen, Hai Jin, Deqing Zou, Weizhong Qiang, Gang Hu

Cluster and Grid Computing Lab

Services Computing Technology and System Lab

Huazhong University of Science and Technology

Bing Bing Zhou

Centre for Distributed and High Performance Computing Services

School of Information Technologies

University of Sydney

Introduction

Many applications need high availability

Server downtime is very costly (1 hr = $84,000~$108,000)

But there are still numerous security vulnerabilities

Fixing all bugs in testing is impossible

Virtualization technology brings new challenges

There are more application instances on a single machine

How to guarantee high availability?

Current Approaches & limitations

Rx: change the execution environment

Micro-reboot: software components are fail-stop and individually recoverable

Failure-oblivious computing: manufacture values for “out of bounds reads”; discard “out of bounds writes”

STEM: emulate a function, and potentially others within a larger scope, to return error values

Limitations

Deterministic bugs are still there

Require program redesign

Narrow suitability: only a small number of applications or memory bugs

……

ASSURE better addresses these problems [ASPLOS’09]

SHelp can be considered an extension of ASSURE to a virtualized computing environment

ASSURE Overview

ASPLOS’09

Bypass the “faulty” functions

Rescue points: locations in the existing application code used to handle programmer-anticipated failures

Error virtualization: force a heuristic-based error return in a function

Quick recovery for future faults: take a checkpoint once the appropriate rescue point is called

Figure: Walk stack. Execution Graph: input, foo(), bar(), bad(). Create rescue-graph. Rescue Graph: input, foo(), bar(), other().

int bad(char* buf)
{
    char rbuf[10];
    int i = 0;

    if(buf == NULL)
        return -1;
    /* No bounds check on rbuf: a long enough input writes past the 10-byte buffer. */
    while(i < strlen(buf))
    {
        rbuf[i++] = *buf++;
    }
    return 0;
}
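The idea of error virtualization at a rescue point can be sketched in a few lines of C. This is only an illustration under stated assumptions, not ASSURE's mechanism: `setjmp`/`longjmp` stands in for the checkpoint and rollback, and `on_fault_detected`, `bad_with_sensor`, and the explicit length check are hypothetical stand-ins for a real fault sensor. The function names `bar` and `bad` follow the execution graph above.

```c
#include <assert.h>
#include <setjmp.h>
#include <stddef.h>
#include <string.h>

/* Stands in for the checkpoint taken when the rescue point is entered. */
static jmp_buf rescue_checkpoint;

/* Hypothetical fault sensor hook: "rolls back" to the rescue point. */
static void on_fault_detected(void)
{
    longjmp(rescue_checkpoint, 1);
}

/* Variant of bad() in which a sensor flags the overflow before it happens. */
static int bad_with_sensor(const char *buf)
{
    char rbuf[10];
    size_t n;

    assert(buf != NULL);
    n = strlen(buf);
    if (n >= sizeof rbuf)
        on_fault_detected();          /* does not return */
    memcpy(rbuf, buf, n + 1);
    return 0;
}

/* Rescue point: bar() already returns -1 for failures the programmer anticipated. */
int bar(const char *input)
{
    if (input == NULL)
        return -1;                    /* anticipated failure */
    if (setjmp(rescue_checkpoint) != 0)
        return -1;                    /* error virtualization: forced error return */
    return bad_with_sensor(input);
}
```

Callers of `bar` see an ordinary error return in both the anticipated and the unanticipated case, which is why existing error-handling code can absorb the fault.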

ASSURE Limitations

A potential problem when the appropriate rescue point is in the main procedure of an application

Two cases

High overhead for frequent checkpointing

No rescue point is appropriate

Rescue point B can survive faults

Figure: source excerpts from main.c, protocol.c, and util.c, annotated with Candidate rescue point A and Candidate rescue point B

-- main.c --
int main()
...
    if (!fork()) { /* this is the child process */
        while(1)
        {
            ...
            if(serveconnection(new_fd)==-1) break;
            ...

-- protocol.c --
int serveconnection(int sockfd)
...
    char tempdata[8192], *ptr, *ptr2, *host_ptr1, *host_ptr2;
    char filename[255];
    ...
    while(!strstr(tempdata, "\r\n\r\n") && !strstr(tempdata, "\n\n"))
    {
        if((numbytes=recv(sockfd, tempdata+numbytes, 4096-numbytes, 0))==-1)
            return -1;
    }
    for(loop=0; loop<4096 && tempdata[loop]!='\n' && tempdata[loop]!='\r'; loop++)
        tempstring[loop] = tempdata[loop];
    ...
    ptr = strtok(tempstring, " ");
    ...
    Log("Connection from %s, request = \"GET %s\"", inet_ntoa(sa.sin_addr), ptr);
    ...
    strcat(filename, ptr);          /* Buffer Overflow A */
    ...

-- util.c --
void Log(char *format, ...)
...
    char temp[200], temp2[200], logfilename[255];
    ...
    va_start(ap, format);
    // format it all into temp
    vsprintf(temp, format, ap);     /* Buffer Overflow B */
    ...

Buffer Overflow A: memory region filename, size 255 bytes. Steps: 1. Define, 2. Create, 3. Assignment, 4. Copy

Buffer Overflow B: memory region temp, size 200 bytes. Steps: 1) Define, 2) Assignment, 3) Use, 4) Create, 5) Copy

SHelp Main Idea

“Weighted” rescue point

Assign weight values to rescue points

When an appropriate rescue point is chosen, its associated weight value is incremented

Once a fault is detected, first select the rescue point with the largest weight value to test

Error handling information sharing in VMs

A two-level storage hierarchy for rescue point management

A global rescue point database in Dom0

A rescue point cache in each DomU

Weight values are updated between Dom0 and DomUs for error handling information sharing

The cumulative effect of added weight values in Dom0 provides a useful guideline for diagnosing serious bugs
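A minimal sketch of the two-level hierarchy follows, assuming both levels can be modeled as small in-memory tables. The types and function names (`rp_store`, `rp_lookup`, `rp_record_success`, `rp_sync_cache`) are hypothetical and are not taken from SHelp; a real deployment would cross the Dom0/DomU boundary rather than share memory, and the cache here has no eviction.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define MAX_RP 16

/* One rescue point entry: function name plus its weight value. */
struct rescue_point {
    char name[32];
    unsigned weight;
};

/* Used for both the global database (Dom0) and a per-DomU cache. */
struct rp_store {
    struct rescue_point entries[MAX_RP];
    size_t count;
};

struct rescue_point *rp_find(struct rp_store *s, const char *name)
{
    for (size_t i = 0; i < s->count; i++)
        if (strcmp(s->entries[i].name, name) == 0)
            return &s->entries[i];
    return NULL;
}

struct rescue_point *rp_add(struct rp_store *s, const char *name, unsigned weight)
{
    struct rescue_point *rp;

    assert(s->count < MAX_RP);                        /* no eviction in this sketch */
    assert(strlen(name) < sizeof s->entries[0].name);
    rp = &s->entries[s->count++];
    strcpy(rp->name, name);
    rp->weight = weight;
    return rp;
}

/* Look in the DomU cache first; on a miss, copy the entry from the Dom0 database. */
struct rescue_point *rp_lookup(struct rp_store *cache, struct rp_store *db,
                               const char *name)
{
    struct rescue_point *rp = rp_find(cache, name);

    if (rp != NULL)
        return rp;
    rp = rp_find(db, name);
    if (rp == NULL)
        return NULL;
    return rp_add(cache, rp->name, rp->weight);
}

/* A rescue point was chosen as appropriate: update the database immediately. */
void rp_record_success(struct rp_store *db, const char *name)
{
    struct rescue_point *rp = rp_find(db, name);

    if (rp == NULL)
        rp = rp_add(db, name, 0);
    rp->weight++;
}

/* Periodic refresh: copy the current global weights into a DomU cache. */
void rp_sync_cache(struct rp_store *cache, struct rp_store *db)
{
    for (size_t i = 0; i < cache->count; i++) {
        struct rescue_point *g = rp_find(db, cache->entries[i].name);
        if (g != NULL)
            cache->entries[i].weight = g->weight;
    }
}
```

Because every DomU refreshes from the same database, a weight earned by one application instance becomes visible to the others after their next sync.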

SHelp Architecture

Sensors for detecting software faults

Recovery and Test component for choosing the appropriate rescue point

Figure: SHelp architecture. Labels: Dom0, Rescue Point Database, Management, Report, Programmers; DomU (repeated), Application 1 ... Application n, Sensors, Rescue Point Cache, Control Unit, Checkpoint & Rollback, Recovery & Test; VMM (Xen); Hardware.

SHelp Procedure

Determine candidate rescue points

Prioritize candidate rescue points and test them one by one

First test the rescue point with the largest weight value

Increment the corresponding weight values

Quick recovery for the same stack smashing bug
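The prioritize-and-test steps above can be sketched as a sort followed by a loop. This is a sketch under assumptions: `choose_rescue_point`, the `candidate` struct, and the callback type are hypothetical names, and `stub_survival_test` is an illustrative placeholder for the real survival test, which would roll back, instrument the candidate, and replay the logged inputs.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>
#include <string.h>

struct candidate {
    const char *function;   /* candidate rescue point */
    unsigned weight;        /* times it was chosen as the appropriate rescue point */
};

/* Hypothetical survival test: nonzero if forcing an error return at this
 * rescue point lets the application survive the fault. */
typedef int (*survival_test_fn)(const struct candidate *c);

/* qsort comparator: largest weight first. */
static int by_weight_desc(const void *a, const void *b)
{
    const struct candidate *x = a, *y = b;
    return (x->weight < y->weight) - (x->weight > y->weight);
}

/* Returns the appropriate rescue point, or NULL if no candidate survives. */
struct candidate *choose_rescue_point(struct candidate *cands, size_t n,
                                      survival_test_fn survives)
{
    assert(survives != NULL);
    if (n == 0)
        return NULL;
    qsort(cands, n, sizeof cands[0], by_weight_desc);
    for (size_t i = 0; i < n; i++) {
        if (survives(&cands[i])) {
            cands[i].weight++;          /* increment the corresponding weight */
            return &cands[i];
        }
    }
    return NULL;
}

/* Illustrative stub only: pretend that just "serveconnection" survives. */
int stub_survival_test(const struct candidate *c)
{
    return strcmp(c->function, "serveconnection") == 0;
}
```

Testing in descending weight order means a rescue point that has worked before is tried first, so repeated faults tend to need fewer survival tests.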

Figure: SHelp procedure, steps ① to ⑥. Labels: Program execution, checkpoint, Fault detected, Rollback to previous checkpoints, Log Inputs, Candidate Rescue Points Matched, Rescue Point Cache, Rescue Point Database (Dom0), Update, Select and Instrument, Survival Test, Bug-Rescue List, Appropriate Rescue Point, Update Weight Value, Report Module, Send Bug Report, Programmers.

Implementation Details

Updating the Rescue Point Cache

At the application level -> LRU

At the trace level of applications -> LFUM

Consider the globally maximum weight value w_max and the local hit rate h(i) for trace i

TraceFlag(i) is computed from k, w_max, and h(i)

Updating Weight Values of Rescue Points

Real-time updating for RP database

Periodical updating for RP cache

Bug-Rescue List

The stack is corrupted in a stack smashing bug

Getting the trace requires replaying the program -> high overhead

Record the appropriate rescue point related to the fault

Choose it to probabilistically survive faults
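A Bug-Rescue List can be pictured as a small lookup table from a fault to the rescue point that survived it earlier. The sketch below is an assumption-laden illustration: the key (application name plus a fault label), the struct layout, and the function names `brl_lookup` and `brl_record` are all hypothetical, since the slides do not say how a fault is identified.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define BRL_MAX 32

/* The list stores the pointers it is given; callers keep the strings alive. */
struct bug_rescue_entry {
    const char *app;           /* application name */
    const char *fault;         /* hypothetical fault label, e.g. "stack-smashing" */
    const char *rescue_point;  /* rescue point that survived this fault before */
};

struct bug_rescue_list {
    struct bug_rescue_entry entries[BRL_MAX];
    size_t count;
};

/* Returns the recorded rescue point, or NULL if this fault has not been seen. */
const char *brl_lookup(const struct bug_rescue_list *l,
                       const char *app, const char *fault)
{
    for (size_t i = 0; i < l->count; i++)
        if (strcmp(l->entries[i].app, app) == 0 &&
            strcmp(l->entries[i].fault, fault) == 0)
            return l->entries[i].rescue_point;
    return NULL;
}

/* Record (or overwrite) the appropriate rescue point for a fault. */
void brl_record(struct bug_rescue_list *l, const char *app,
                const char *fault, const char *rescue_point)
{
    for (size_t i = 0; i < l->count; i++) {
        if (strcmp(l->entries[i].app, app) == 0 &&
            strcmp(l->entries[i].fault, fault) == 0) {
            l->entries[i].rescue_point = rescue_point;
            return;
        }
    }
    assert(l->count < BRL_MAX);
    l->entries[l->count].app = app;
    l->entries[l->count].fault = fault;
    l->entries[l->count].rescue_point = rescue_point;
    l->count++;
}
```

On a repeated stack smashing fault, a hit in this table would let recovery skip the stack walk and the replay, at the price that the recorded rescue point is only likely, not guaranteed, to survive the new fault.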

Experimental Setup

Implementation

Platform

Linux 2.6.18.8 kernel with BLCR and TCPCP checkpoint support

Xen 3.2.0 and Dyninst 6.0

Intel Xeon E6550, 4MB L2 cache, 1GB memory

100Mbps Ethernet connection

Applications

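| Application | Version | Bug | Depth |
|---|---|---|---|
| Apache | 2.0.49 | Off-by-one | 2 |
| Apache | 2.0.50 | Heap overflow | 2 |
| Apache | 2.0.59 | NULL dereference | 3 |
| Light-HTTPd | 0.1 | Stack smashing | 2 |
| Light-HTTPd-dbz | 0.1 | Divide-by-zero | 2 |
| ATP-HTTPd | 0.4b | Stack smashing | 1 |
| Null-HTTPd | 0.5.0 | Heap overflow | 1 |
| Null-HTTPd-df | 0.5.0 | Double free | 3 |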

Comparison between ASSURE and SHelp

Web server application Light-HTTPd

Select the function serveconnection as the appropriate rescue point

Throughput is only about 60 KB/s in ASSURE

Figure: throughput (MB/s) over elapsed time (sec) for ASSURE and SHelp

SHelp Recovery Performance

Figure: recovery time (s) per web server application, broken down into Analysis, Instrument, and Test, for the First-1 and First-2 cases (Apache 2.0.49, Apache 2.0.50, Apache 2.0.59, Light-HTTPd, Light-HTTPd-dbz, ATP-HTTPd, Null-HTTPd, Null-HTTPd-df)

First-1: new faults occur

First-2: same faults occur again in the local VM or in other VMs

Benefits of the Bug-Rescue List

Subsequent: with Bug-Rescue List

Figure: recovery time (s), broken down into Analysis, Instrument, and Test, for the First-1, First-2, and Subsequent cases on the web server applications Light-HTTPd and ATP-HTTPd

Checkpoint/Rollback Overhead Analysis

Lightweight checkpoint and roll-back

Modified BLCR with TCPCP tool support

Figure: checkpoint and rollback time (ms) per web server application (Apache 2.0.49, Apache 2.0.50, Apache 2.0.59, Light HTTPd, ATP HTTPd, Null HTTPd)

Conclusions and Future Work

“Weighted” rescue points and two-level storage hierarchy for rescue point management make the system perform more effectively and efficiently.

Future Work

Integrate the COW mechanism in BLCR

Evaluate the effectiveness of our system for more complex server and client applications

Thank you!

Questions?