SCI PowerPoint Template

advertisement

Introduction to Python

Session 2: Beginning Numerical Python and Visualization

Jeremy Chen

This Session’s Agenda

•

•

•

•

•

NumPy Arrays

Random Number Generation

Numerical Linear Algebra

Visualization

An OLS Example

2

新加坡国立大学商学院

Preliminaries

• By now you should have a Python

distribution…

– Run IPython or Spyder

– If not, start downloading one and work with

IDLE

3

新加坡国立大学商学院

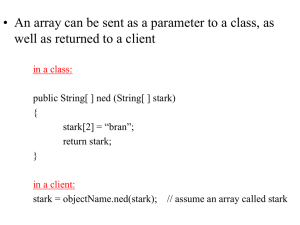

NumPy Arrays

• The ndarray is a typed N-dimensional

array object:

>>> import numpy as np

>>> A = np.ndarray((2,3))

# Kinda the same as np.empty((2,3)); Uninitialized data

array([[ 8.48798326e-314,

2.12199579e-314,

0.00000000e+000],

[ 0.00000000e+000,

1.05290307e-253,

1.47310613e-319]])

>>> A[0,0] = 2; A[0,1] = 3; A[1,1] = 4; A[1,2] = 5; A

array([[ 2., 3., 0.],

[ 0., 4., 5.]])

>>> 10 * A + 1

# Simple vector operations

array([[ 21., 31.,

1.],

[ 1., 41., 51.]])

>>> np.exp(A)

# And various other vector operations

array([[

7.3890561 ,

20.08553692,

1.

],

[

1.

,

54.59815003, 148.4131591 ]])

>>> [A.shape, A.ndim]

# And don’t forget ndarrays have a "shape" and "dimension"

[(2, 3), 2]

>>> A.dtype

# ... and a data type.

dtype('float64')

4

新加坡国立大学商学院

NumPy Arrays: Creating

• Common ways of creating a ndarray

>>> np.array(np.arange(5))

# arange is like range but returns a ndarray

array([0, 1, 2, 3, 4])

>>> np.array(np.arange(5)).dtype

# numpy selects an appropriate datatype

dtype('int32')

>>> np.reshape([3,2,1,3,1,2,6,7], (2,4))

array([[3, 2, 1, 3],

[1, 2, 6, 7]])

>>> np.ones((2,3,4))

array([[[ 1., 1., 1., 1.],

[ 1., 1., 1., 1.],

[ 1., 1., 1., 1.]],

[[ 1., 1., 1., 1.],

[ 1., 1., 1., 1.],

[ 1., 1., 1., 1.]]])

>>> np.zeros((5))

array([ 0., 0., 0., 0., 0.])

5

新加坡国立大学商学院

NumPy Arrays: Creating

• An amusing way to create a ndarray

>>> M = np.mat('[1,2,3;4,5,6]'); M

# Like MATLAB

matrix([[1, 2, 3],

[4, 5, 6]])

>>> A = np.array(M); A

# ... not really necessary

array([[1, 2, 3],

[4, 5, 6]])

>>> # Here's the obligatory Matrix Multiplication digression

>>> M * M.T

# Matrix multiplication (M.T is the transpose of M)

matrix([[14, 32],

[32, 77]])

>>> A * A.T

# Not matrix multiplication

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

ValueError: operands could not be broadcast together with shapes (2,3) (3,2)

>>> A.dot(A.T)

# Matrix multiplication for 2 dimensional ndarrays

array([[14, 32],

[32, 77]])

>>> np.dot(A,A.T) # ... same here. (Dot product for 1 dimensional ndarrays)

array([[14, 32],

[32, 77]])

新加坡国立大学商学院

6

NumPy Arrays: Accessing

• Simple slicing creates views (not copies)

>>> A = np.reshape(np.arange(2*4)+1, (2,4)); A

array([[1, 2, 3, 4],

[5, 6, 7, 8]])

>>> B = A[:,1:3]; B

array([[2, 3],

[6, 7]])

>>> B[0,0] = 100; B; A

array([[100,

3],

[ 6,

7]])

array([[ 1, 100,

3,

4],

[ 5,

6,

7,

8]])

• “Fancy Indexing” creates copies

>>> C = A[:, [1,3]]; C

array([[100,

4],

[ 6,

8]])

>>> C[0,0] = 200; C; A

array([[200,

4],

[ 6,

8]])

array([[ 1, 100,

3,

[ 5,

6,

7,

新加坡国立大学商学院

# Using lists/arrays for indexing

4],

8]])

7

NumPy Arrays: A Little More

• Some standard operations

>>> A = np.reshape(np.arange(2*4)+1, (2,4)); A

array([[1, 2, 3, 4],

[5, 6, 7, 8]])

>>> A.sum()

36

>>> A.sum(axis=0)

array([ 6, 8, 10, 12])

>>> A.sum(axis=1)

array([10, 26])

>>> A.cumsum(axis=1)

array([[ 1, 3, 6, 10],

[ 5, 11, 18, 26]])

>>> (A.mean(axis=1), A.std(axis=1), A.var(axis=1), A.min(axis=1), A.argmax(axis=1))

(array([ 2.5,

6.5]), array([ 1.11803399,

1.11803399]), array([ 1.25,

1.25]), array([1, 5]), array([3, 3]))

>>> arr = np.array([1,2,4,9,1,4]); arr.sort(); arr

# Sorting

array([1, 1, 2, 4, 4, 9])

>>> np.unique(arr)

# Keep only unique entries

array([1, 2, 4, 9])

>>> np.unique(np.array(['A', 'B', 'CC', 'c', 'A', 'CC']))

# Applies to strings too

array(['A', 'B', 'CC', 'c'],

dtype='|S2')

新加坡国立大学商学院

8

Random Number Generation

• The Random Seed

>>> np.random.seed(10)

• Various Distributions

>>> np.random.SOME_DISTRIBUTION(params, size=(dimensions))

>>> # rand ~ Uniform; randn ~ Normal; exponential; beta;

>>> # gamma; binomial; randint(=>Discrete) ... even triangular

9

新加坡国立大学商学院

Numerical Linear Algebra

• Matrix Decompositions

>>> A = np.ones((5,5)) + np.eye(5)

>>> L = np.linalg.cholesky(A)

# Cholesky decomposition.

>>> np.allclose(np.dot(L,L.T), A)

# Check...

True

>>> Q, R = np.linalg.qr(A)

# QR decomposition

>>> np.allclose(np.dot(Q,R), A)

True

>>> # Eigenvalue decomposition (Use eigh when symmetric/Hermetian)

>>> e, V = np.linalg.eig(A)

>>> np.allclose(np.dot(A,V), np.dot(V,np.diag(e)))# V may not be unitary

True

>>> # Singular Value Decomposition

>>> U, s, V = np.linalg.svd(A, full_matrices = True)

>>> np.allclose(np.dot(np.dot(U, np.diag(s)),V), A)

True

10

新加坡国立大学商学院

Numerical Linear Algebra

• Solving Linear Systems

>>> np.linalg.solve(A, np.ones((5,1))).T

array([[ 0.16666667,

0.16666667,

0.16666667,

0.16666667,

0.16666667]])

-0.16666667,

-0.16666667,

-0.16666667,

0.83333333,

-0.16666667,

-0.16666667],

-0.16666667],

-0.16666667],

-0.16666667],

0.83333333]])

>>> np.linalg.inv(A)

array([[ 0.83333333,

[-0.16666667,

[-0.16666667,

[-0.16666667,

[-0.16666667,

-0.16666667,

0.83333333,

-0.16666667,

-0.16666667,

-0.16666667,

-0.16666667,

-0.16666667,

0.83333333,

-0.16666667,

-0.16666667,

# Solve Linear System

# Matrix Inverse

• Other Stuff

>>> np.linalg.norm(A)

6.324555320336759

>>> np.linalg.det(A)

5.9999999999999982

>>> np.trace(A)

10

>>> np.linalg.matrix_rank(A)

5

新加坡国立大学商学院

# Matrix norm

# Determinant (should be 6...)

# Matrix trace

# Matrix rank

11

Visualization (By Example)

• By now you should have a Python

distribution.

• Run IPython or Spyder

In [1]: %pylab

Using matplotlib backend: module://IPython.kernel.zmq.pylab.backend_inline

Populating the interactive namespace from numpy and matplotlib

In [2]: plot( arange(20) )

Out[2]: [<matplotlib.lines.Line2D at 0x8918850>]

12

新加坡国立大学商学院

Visualization (By Example)

• A Standard Brownian Motion

In [3]:

...:

...:

Out[3]:

dx = 0.01

# Hit CTRL-Enter for multi-line input

walk = (np.random.randn(1000) * np.sqrt(dx)).cumsum()

plot(np.arange(0,1000)*dx, walk - walk[0])

[<matplotlib.lines.Line2D at 0xd31f770>]

13

新加坡国立大学商学院

Visualization (By Example)

• A 2D Intensity Plot

In [4]:

...:

...:

...:

...:

...:

...:

Out[4]:

xy_extent = 20

xy_range = np.arange(-xy_extent,xy_extent,0.1)

X_m, Y_m = np.meshgrid(xy_range, xy_range)

f = ((X_m-5) - 2 * (Y_m+5)) ** 2 - (1.5*(X_m+3) + 1 * (Y_m-5)) ** 2

imshow(f, cmap = matplotlib.cm.bone, \

extent=[-xy_extent, xy_extent, -xy_extent, xy_extent])

colorbar()

<matplotlib.colorbar.Colorbar instance at 0x0C3AFE90>

14

新加坡国立大学商学院

Visualization (By Example)

• A 2D Contour Plot

In [5]:

...:

...:

...:

...:

...:

Out[5]:

xy_extent = 20

xy_range = np.arange(-xy_extent,xy_extent,0.1)

X_m, Y_m = np.meshgrid(xy_range, xy_range)

f = ((X_m-5) - 2 * (Y_m+5)) ** 2 - (1.5*(X_m+3) + 1 * (Y_m-5)) ** 2

contourf(X_m, Y_m, f, 20)

colorbar()

<matplotlib.colorbar.Colorbar instance at 0x10C12DA0>

15

新加坡国立大学商学院

Visualization (By Example)

Our Objective…

16

新加坡国立大学商学院

Visualization (By Example)

• Some data for plotting

In [6]:

...:

...:

...:

...:

XY = np.arange(1,1+100)/100.0

XZ = np.arange(1,1+1000)/1000.0

Y = np.random.randn(100)

Z = np.random.randn(1000)

Z.sort()

• Setting up the figure and subplots

In [7]:

...:

...:

...:

...:

...:

n_row = 2

n_col = 2

fig1 = plt.figure(figsize=(12,8))

axes = []; # Collect axis references in a list

for k in range(n_row * n_col):

axes.append( fig1.add_subplot(n_row ,n_col , k+1) )

17

新加坡国立大学商学院

Visualization (By Example)

• The simple line plot

In [8]:

...:

...:

...:

...:

...:

...:

Out[8]:

axes[0].plot( XZ - 0.5, np.cos(1.5 * (XZ - 0.5) * \

2 * 3.14159), color='k')

axes[0].set_xlim([-0.6, 0.6])

axes[0].set_title(r'$\cos\ 2\pi x$')

axes[0].set_xlabel('x')

axes[0].set_ylabel('y')

fig1

18

新加坡国立大学商学院

Visualization (By Example)

• The histogram of normal samples

In [9]: axes[1].hist( Z, bins=20, color='b')

...: axes[1].set_title('Some Histogram of 1000 samples from $N(0,1)$')

...: fig1

Out[9]:

19

新加坡国立大学商学院

Visualization (By Example)

• The scatter plots (with legend)

In [10]:

...:

...:

...:

...:

...:

Out[10]:

axes[2].scatter( XY[10:60], Y[:50] + XY[10:60]*0.05, marker='o', \

c=(0.5, 0.5, 0.5), label='Population 1')

axes[2].scatter( XY[40:90], Y[50:] + XY[40:90]*0.05, marker='x', \

c='#FF00A0', label='Population 2')

axes[2].legend(loc = 'best')

fig1

20

新加坡国立大学商学院

Visualization (By Example)

• The big mess of normal CDFs

In [11]:

...:

...:

...:

...:

...:

...:

...:

...:

...:

Out[11]:

axes[3].set_title(r'A Colourful Mess of $N(\mu_k, 1)$ CDFs')

col = ['r', 'g', 'b', 'c', 'm', 'y', 'k'] # w for white

line = ['solid', 'dashed', 'dashdot', 'dotted']

linestyle = [(c,l) for c in col for l in line]

for k in range(40):

ls_idx = k % len(linestyle)

axes[3].plot( Z+(k-20)*0.5, XZ, color = linestyle[ls_idx][0], \

linestyle=linestyle[ls_idx][1])

axes[3].text(-10, 0.45, 'Nothing to See Here.', fontsize=20)

fig1

21

新加坡国立大学商学院

Visualization (By Example)

22

新加坡国立大学商学院

Example: Ordinary Least Squares

• The standard linear model

– 𝑦𝑘 = 𝛽𝑇 𝑥𝑘 + 𝜀𝑘 where 𝜀𝑘 ~𝑁(0, 𝜎 2 )

– The Maximum Likelihood Estimator is the Least Squares

𝑇

2

Solution: 𝛽∗ = arg min 𝑁

𝑘=1 𝑦𝑘 − 𝛽 𝑥𝑘

𝛽

In [12]:

...:

...:

...:

...:

...:

...:

...:

...:

...:

...:

...:

import math

import numpy as np

import matplotlib.pyplot as plt

import scipy.stats

# Load data

(Choose between data sets with...

#

100, 200, 500, 1000, 5000, and 10000 samples)

data = np.load('OLS_data_500.npz')

X = data['X']

Y = data['Y']

true_beta = data['true_beta']

# Yeah... These are provided

true_error_std = data['true_error_std']

data.close()

23

新加坡国立大学商学院

Example: Ordinary Least Squares

In [13]: if X.shape[0] != Y.shape[0]:

# Always good to check for errors

...:

raise ValueError("Data dimension mismatch.")

...: num_pred = X.shape[1]

...: num_samples = X.shape[0]

In [14]:

...:

...:

...:

...:

...:

...:

...:

...:

...:

...:

...:

...:

# Descriptive Statistics

plt_side = math.ceil(math.sqrt(num_pred + 1))

fig = plt.figure(figsize = (12,12));

for k in range(num_pred + 1):

ax = fig.add_subplot(plt_side, plt_side, k+1)

if k == 0:

ax.hist(Y, bins=20)

ax.set_title( "Dependent Variable ($\\mu={mean:.3}$, $\\sigma={std:.3}$)".format( \

mean = np.mean(Y), std = np.std(Y)) )

else:

ax.hist(X[:, k-1], bins=20)

ax.set_title( "Predictor {pred} ($\\mu={mean:.3}$, $\\sigma={std:.3}$)".format( \

pred = k, mean = np.mean(X[:, k-1]), std = np.std(X[:, k-1])) )

24

新加坡国立大学商学院

Example: Ordinary Least Squares

25

新加坡国立大学商学院

Example: Ordinary Least Squares

In [15]: # OLS

...: XtX = np.dot(X.T, X)

...: XtY = np.dot(X.T, Y)

...: beta_est = np.linalg.solve(XtX, XtY)

...: s_sq = np.var(Y - np.dot(X, beta_est))

...:

...: print "True Betas", true_beta.T

...: print "Estimated Betas", beta_est.T

...: print

...:

...: print "True Error Variance: ", true_error_std ** 2

...: print "Estimated Error Variance: ", s_sq

True Betas [[ 1. 1. 1. 1. 1. 0.]]

Estimated Betas [[ 1.2389584

True Error Variance: 4

Estimated Error Variance:

0.98711812

0.90258976

0.86002269

0.99770273

0.01079312]]

3.85523406528

26

新加坡国立大学商学院

Example: Ordinary Least Squares

In [16]:

...:

...:

...:

...:

...:

...:

...:

...:

# Hypothesis Testing

standard_errors = np.reshape(np.sqrt(np.diag( \

s_sq * np.linalg.solve(XtX, np.eye(num_pred)) )), (num_pred,1))

print "Standard Errors: ", standard_errors.T

t_statistics = beta_est / standard_errors

print "t-Statistics: ", t_statistics.T

df = num_samples - num_pred

p_values = 2 * scipy.stats.t.cdf(-abs(t_statistics), df).T

print "p-values (2-sided): ", p_values

Standard Errors: [[ 0.26819354 0.05023788 0.16891535 0.09578419 0.09744182 0.06468454]]

t-Statistics: [[ 4.61964296 19.6488801

5.34344442

8.97875377 10.23895845 0.16685784]]

p-values (2-sided): [[ 4.91038992e-06 6.11554987e-64 1.39498275e-07 5.75446104e-18 1.92610049e-22 8.67550186e-01]]

So let’s do it again!

27

新加坡国立大学商学院

Example: Ordinary Least Squares

In [15]: # OLS Again

...: X = X[:,:-1]; num_pred = X.shape[1]; num_samples = X.shape[0]

...: XtX = np.dot(X.T, X)

...: XtY = np.dot(X.T, Y)

...: beta_est = np.linalg.solve(XtX, XtY)

...: s_sq = np.var(Y - np.dot(X, beta_est))

...: print "Estimated Betas", beta_est.T

...: standard_errors = np.reshape(np.sqrt(np.diag( \

...:

s_sq * np.linalg.solve(XtX, np.eye(num_pred)) )), (num_pred,1))

...: print "Standard Errors: ", standard_errors.T

...: t_statistics = beta_est / standard_errors

...: print "t-Statistics: ", t_statistics.T

...: df = num_samples - num_pred

...: p_values = 2 * scipy.stats.t.cdf(-abs(t_statistics), df).T

...: print "p-values (2-sided): ", p_values

Estimated Betas [[ 1.25027031 0.98744205 0.90124032 0.86084415 0.9975555 ]]

Standard Errors: [[ 0.25949091 0.05020176 0.16872633 0.09566025 0.09744054]]

t-Statistics: [[ 4.81816615 19.6694721

5.34143267

8.99897405 10.23758223]]

p-values (2-sided): [[ 1.92950811e-06 4.54116320e-64 1.40853414e-07 4.88551777e-18 1.93166121e-22]]

28

新加坡国立大学商学院

☺

29

新加坡国立大学商学院