Tensor Network Renormalization (TNR)


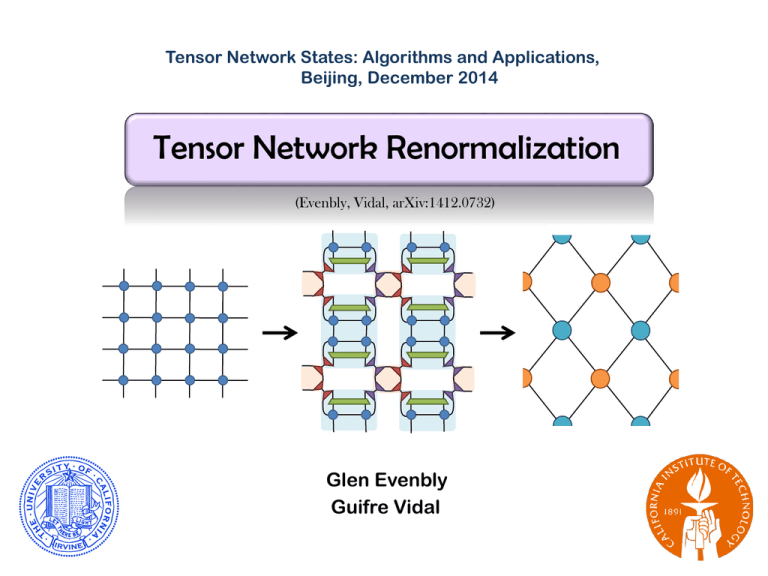

Tensor Network States: Algorithms and Applications, Beijing, December 2014

Tensor Network Renormalization (Evenbly, Vidal, arXiv:1412.0732)
Glen Evenbly, Guifre Vidal

Tensor Renormalization Methods

What is the usefulness of renormalization (or coarse-graining) in many-body physics?
• Start: description in terms of very many microscopic degrees of freedom
• Iterative RG transformations: each transformation removes short-range (high-energy) degrees of freedom
• End: description in terms of a few effective (low-energy) degrees of freedom
• The effective theory should contain only universal information (i.e. no microscopic details remaining)

Previous methods based upon tensor renormalization can be very powerful and useful, but they don't give you this! Previous tensor RG methods do not address all short-ranged degrees of freedom at each RG step. Some (unwanted) short-ranged detail always remains in the coarse-grained system.

Consequences:
• they do not give a proper RG flow (i.e. they give wrong RG fixed points)
• accumulation of short-ranged degrees of freedom can cause computational breakdown (at or near criticality)

Tensor Network Renormalization (TNR) (Evenbly, Vidal, arXiv:1412.0732)

A method of coarse-graining tensor networks that addresses all short-ranged degrees of freedom at each RG step.

Consequences:
• gives a proper RG flow (i.e. correct RG fixed points)
• gives a sustainable RG transformation (even at or near criticality)

Outline: Tensor Network Renormalization
• Introduction: Tensor networks and methods based upon tensor renormalization
• Comparison: Tensor Renormalization Group (TRG) vs Tensor Network Renormalization (TNR)
• Discussion: Failure of previous tensor RG methods to address all short-ranged degrees of freedom
• Reformulation: A different prescription for implementing tensor RG methods
• Resolution: How to build a tensor RG scheme that addresses all short-ranged degrees of freedom

Tensor Renormalization Methods

Can express the exact ground state of a quantum system (in terms of a Euclidean path integral) as a tensor network.
• 1D quantum system with Hamiltonian $H$
• Ground state $|\psi_{GS}\rangle$ from $\lim_{\beta \to \infty} e^{-\beta H}$
[Figure: two-dimensional tensor network; horizontal axis: 1D lattice in space; vertical axis: evolution in imaginary time.]

There are many different approaches to evaluate a network of this form (e.g. Monte Carlo, transfer matrix methods, tensor RG…).

Tensor RG: evaluate the expectation value through a sequence of controlled (quasi-exact) coarse-graining transformations of the network.

Expectation value $= \langle \psi_{GS} | o | \psi_{GS} \rangle$

Sequence of coarse-graining transformations applied to the tensor network: $A^{(0)}, A^{(1)}, A^{(2)}, A^{(3)}, \ldots$

The network could represent:
• Euclidean path integral of a 1D quantum system
• Partition function of a 2D classical statistical system
and can encode the expectation value of a local observable, a two-point correlator, etc.

RG flow in the space of tensors, for a network of width $N$ ($\log N$ steps, ending in a scalar $C$):
$A^{(0)} \to A^{(1)} \to A^{(2)} \to \cdots \to A^{(S)} \to C$

Tensor RG approaches borrow from earlier ideas (e.g. Kadanoff spin blocking).

First tensor RG approach:
• Tensor Renormalization Group (TRG) (Levin, Nave, 2006), based upon the truncated singular value decomposition (SVD): $A \approx (U\sqrt{S})(\sqrt{S}V)$, where the indices of $A$ have dimension $\chi$ and the new index has dimension $\chi' \le \chi^2$. This incurs a truncation error that is related to the size of the discarded singular values.

[Figure: Tensor Renormalization Group (TRG) (Levin, Nave, 2006). Initial network $A^{(0)}$ → truncated SVD → contract → coarser network $A^{(0.5)}$ → truncated SVD → contract.]

Many improvements and generalizations:
• Second Renormalization Group (SRG) (Xie, Jiang, Weng, Xiang, 2008)
• Tensor Entanglement Filtering Renormalization (TEFR) (Gu, Wen, 2009)
• Higher Order Tensor Renormalization Group (HOTRG) (Xie, Chen, Qin, Zhu, Yang, Xiang, 2012)
• + many more…

These give improvement in accuracy (e.g. by taking more of the local environment into account when truncating) or allow application to higher-dimensional systems, etc. But, as stated above, they do not address all short-ranged degrees of freedom at each RG step, so they give neither a proper RG flow nor a sustainable RG flow (at or near criticality). TNR gives both.

Comparison: TRG vs TNR

RG flow in the space of tensors: $A^{(0)} \to A^{(1)} \to A^{(2)} \to \cdots \to A^{(s)} \to \cdots$

Consider the 2D classical Ising ferromagnet at temperature $T$. Encode the partition function (at temperature $T$) as a tensor network built from $A_T^{(0)}$.

Phases:
• $T < T_c$: ordered phase
• $T = T_c$: critical point (correlations at all length scales)
• $T > T_c$: disordered phase

Proper RG flow:
• $A^{(0)}_{T<T_c} \to A^{(1)} \to A^{(2)} \to A^{(3)} \to \cdots \to A_{\text{order}}$ ($T = 0$)
• $A^{(0)}_{T=T_c} \to A^{(1)} \to A^{(2)} \to A^{(3)} \to \cdots \to A_{\text{crit}}$ ($T = T_c$)
• $A^{(0)}_{T>T_c} \to A^{(1)} \to A^{(2)} \to A^{(3)} \to \cdots \to A_{\text{disorder}}$ ($T = \infty$)

Proper RG flow: 2D classical Ising

Numerical results, Tensor Renormalization Group (TRG), disordered phase:
[Figure: $|A^{(1)}|, |A^{(2)}|, |A^{(3)}|, |A^{(4)}|$ at $T = 1.1\,T_c$ and $T = 2.0\,T_c$.]
Both temperatures should converge to the same (trivial) fixed point, but don't!
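As a minimal sketch of the two ingredients named above (encoding the 2D classical Ising partition function as a four-index tensor, and the truncated SVD used by TRG), the following NumPy code may help. The function names `ising_tensor` and `truncated_split`, the index ordering, the coupling $J = 1$ and the inverse temperature `beta` are assumptions made for illustration; they are not taken from the slides.

```python
import numpy as np

def ising_tensor(beta):
    """Four-index tensor A[i, j, k, l] for the 2D Ising model (J = 1, assumed).

    One tensor sits on each spin; each index carries one bond to a neighbour.
    """
    # Boltzmann weight of a single bond: M[s, s'] = exp(beta * s * s')
    M = np.array([[np.exp(beta), np.exp(-beta)],
                  [np.exp(-beta), np.exp(beta)]])
    # Split each bond weight symmetrically: M = W @ W.T
    vals, vecs = np.linalg.eigh(M)
    W = vecs @ np.diag(np.sqrt(vals))
    # Sum over the spin s sitting at the vertex
    return np.einsum('si,sj,sk,sl->ijkl', W, W, W, W)

def truncated_split(A, chi_new):
    """Split A into two 3-index tensors via truncated SVD (TRG-style).

    Returns (U sqrt(S), sqrt(S) V, truncation error), keeping at most
    chi_new singular values.
    """
    chi = A.shape[0]
    mat = A.reshape(chi * chi, chi * chi)        # group (i, j) against (k, l)
    U, S, Vh = np.linalg.svd(mat, full_matrices=False)
    keep = min(chi_new, len(S))
    err = np.sqrt(np.sum(S[keep:] ** 2))         # norm of the discarded part
    left = (U[:, :keep] * np.sqrt(S[:keep])).reshape(chi, chi, keep)
    right = (np.sqrt(S[:keep])[:, None] * Vh[:keep]).reshape(keep, chi, chi)
    return left, right, err

beta_c = 0.5 * np.log(1.0 + np.sqrt(2.0))        # critical point of the square lattice
A0 = ising_tensor(beta_c)
left, right, err = truncated_split(A0, chi_new=2)
```

A full TRG step would then contract four such three-index tensors around a plaquette to form the coarse-grained tensor; that contraction is omitted here to keep the sketch short.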
Numerical results, Tensor Network Renormalization (TNR):
[Figure: $|A^{(1)}|, |A^{(2)}|, |A^{(3)}|, |A^{(4)}|$ at $T = 1.1\,T_c$ and $T = 2.0\,T_c$.]
Both temperatures converge to the same fixed point (containing only information on the universal properties of the phase).

Proper RG flow: 2D classical Ising

Numerical results for 2D classical Ising, Tensor Network Renormalization (TNR):
• Converges to one of three RG fixed points, consistent with a proper RG flow
[Figure: $|A^{(1)}|, |A^{(2)}|, |A^{(3)}|, |A^{(4)}|$ for three temperatures.]
• sub-critical, $T = 0.9\,T_c$: ordered ($Z_2$) fixed point
• critical, $T = T_c$: critical (scale-invariant) fixed point
• super-critical, $T = 1.1\,T_c$: disordered (trivial) fixed point

Sustainable RG flow: 2D classical Ising

RG flow in the space of tensors: $A^{(0)} \to A^{(1)} \to A^{(2)} \to \cdots \to A^{(s)} \to \cdots$

What is a sustainable RG flow?

Key step of TRG: truncated singular value decomposition (SVD), $A \approx (U\sqrt{S})(\sqrt{S}V)$, where the indices of $A$ have dimension $\chi$ and the new index has dimension $\chi' \le \chi^2$.

Let $\chi^{(s)}$ be the number of singular values (or bond dimension) needed to maintain a fixed truncation error $\varepsilon$ at RG step $s$. The RG is sustainable if $\chi^{(s)}$ is upper bounded by a constant.

Is TRG sustainable?

Does TRG give a sustainable RG flow?
[Figure: spectrum of $A^{(s)}$ under RG flow at criticality, panels $s = 0, 1, 2, 6$; logarithmic axes.]

| RG step $s$ | 0 | 1 | 2 | 6 |
|---|---|---|---|---|
| TRG bond dimension $\chi$ required to maintain fixed truncation error (~$10^{-3}$) | ~10 | ~20 | ~40 | >100 |
| TRG computational cost, $O(\chi^6)$ | $1 \times 10^6$ | $6 \times 10^7$ | $4 \times 10^9$ | $> 10^{12}$ |

Cost of TRG scales exponentially with RG iteration!
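The sustainability criterion can be made concrete with a short sketch: given a singular value spectrum, find the smallest bond dimension that keeps the truncation error below a target, then evaluate the $\chi^6$ cost estimate. The helper name `required_bond_dimension`, the use of a relative Frobenius-norm error, and the power-law test spectrum are assumptions for illustration; the slides do not specify how the truncation error is normalized.

```python
import numpy as np

def required_bond_dimension(singular_values, eps):
    """Smallest number of singular values to keep so that the relative
    truncation error (assumed: discarded 2-norm / total 2-norm) is <= eps."""
    s = np.sort(np.asarray(singular_values, dtype=float))[::-1]
    sq = s ** 2
    total = np.sum(sq)
    # tail[k] = weight discarded when the k largest values are kept
    tail = np.concatenate([np.cumsum(sq[::-1])[::-1], [0.0]])
    rel_err = np.sqrt(tail / total)
    # index of the first k that meets the tolerance (k = len(s) always does)
    return int(np.argmax(rel_err <= eps))

# Hypothetical slowly decaying spectrum, standing in for a critical system
spectrum = 1.0 / np.arange(1, 101) ** 2
chi_needed = required_bond_dimension(spectrum, eps=1e-3)

# Leading-order TRG cost estimate chi**6 for the bond dimensions quoted above
costs = [chi ** 6 for chi in (10, 20, 40)]
# costs == [1_000_000, 64_000_000, 4_096_000_000], i.e. about 1e6, 6e7, 4e9
```

If the required bond dimension keeps growing with the RG step, as in the TRG row of the table, the $\chi^6$ estimate grows with it; a sustainable scheme is one where this number stays bounded.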
[Figure: spectrum of $A^{(s)}$ under RG flow at criticality, TRG vs TNR, panels $s = 0, 1, 2, 6$; logarithmic axes.]

| RG step $s$ | 0 | 1 | 2 | 6 |
|---|---|---|---|---|
| TRG bond dimension $\chi$ required to maintain fixed truncation error (~$10^{-3}$) | ~10 | ~20 | ~40 | >100 |
| TNR bond dimension $\chi$ (sustainable) | ~10 | ~10 | ~10 | ~10 |
| TRG computational cost, $O(\chi^6)$ | $1 \times 10^6$ | $6 \times 10^7$ | $4 \times 10^9$ | $> 10^{12}$ |
| TNR computational cost, $O(k\chi^6)$ | $5 \times 10^7$ | $5 \times 10^7$ | $5 \times 10^7$ | $5 \times 10^7$ |

Tensor Renormalization Methods

Previous RG methods for contracting tensor networks:
• do not address all short-ranged degrees of freedom
• do not give a proper RG flow (wrong RG fixed points)
• unsustainable RG flow (at or near criticality)

vs Tensor Network Renormalization (TNR):
• can address all short-ranged degrees of freedom
• gives a proper RG flow (correct RG fixed points)
• can give a sustainable RG flow

Analogous to: Tree tensor network (TTN) vs Multi-scale entanglement renormalization ansatz (MERA)

Can we see how TRG fails to address all short-ranged degrees of freedom? Consider the fixed points of TRG.

Discussion: Fixed points of TRG

Imagine $A$ is a special tensor such that each index can be decomposed as a product of smaller indices,
$A_{ijkl} = A_{(i_1 i_2)(j_1 j_2)(k_1 k_2)(l_1 l_2)}$,
such that certain pairs of indices are perfectly correlated:
$A_{(i_1 i_2)(j_1 j_2)(k_1 k_2)(l_1 l_2)} = \delta_{i_1 j_1}\, \delta_{j_2 k_2}\, \delta_{k_1 l_1}\, \delta_{l_2 i_2}$

These are called corner double line (CDL) tensors. A partition function built from CDL tensors represents a state with short-ranged correlations. CDL tensors are fixed points of TRG.

[Figure: CDL network → singular value decomposition → contraction → new CDL tensor.]
Some short-ranged correlations always remain under TRG!

[Figure: short-range correlated network → TRG → short-range correlated network.]
TRG removes some short-ranged correlations, but others are artificially promoted to the next length scale.
• Coarse-grained networks always retain some dependence on the microscopic (short-ranged) details
• Accumulation of short-ranged correlations causes computational breakdown at or near criticality

Is there some way to 'fix' tensor renormalization such that all short-ranged correlations are addressed?

Reformulation of Tensor RG

Change in formalism: from an RG scheme based on SVD decompositions to an RG scheme based on insertion of projectors into the network.
• SVD scheme: truncated singular value decomposition, $A \approx (U\sqrt{S})(\sqrt{S}V)$, an approximate decomposition into a pair of 3-index tensors. The indices of $A$ have dimension $\chi$ and the new index has dimension $\chi' \le \chi^2$.
• Projector scheme: $\tilde{A} = W W^\dagger A$, where $W W^\dagger$ is a projector of rank $\chi'$.

Want to choose $W$ so as to minimize the error $\varepsilon = \|A - \tilde{A}\|$. Set $W = U$: then $W W^\dagger A = U U^\dagger A = U S V$, the truncated SVD.

If the isometry $W$ is optimised to act as an approximate resolution of the identity, then the two schemes are equivalent! HOTRG can also be done with insertion of projectors.
[Figure: network with projectors $W W^\dagger$ and $Q Q^\dagger$ inserted.]

[Figure: TRG (truncated SVD, contract) next to the equivalent scheme (insert projectors $w w^\dagger$, contract).]
The projector form can reduce the cost of TRG: $O(\chi^6) \to O(\chi^5)$.

Insertion of projectors can mimic a matrix decomposition (e.g. SVD), $A \approx w w^\dagger A$, but can also do things that cannot be done using a matrix decomposition:
• $A \approx u u^\dagger A$, a projector that is decomposed as a product of four-index isometries $u$, with dimension $\chi' \le \chi$
• Restrict to the case $\chi' = \chi$, such that $u$ is a unitary. Then $u u^\dagger = I$ is an exact resolution of the identity, and $A = u u^\dagger A$.

The Tensor Network Renormalization (TNR) approach follows from composition of these insertions.

Resolution: Tensor Network Renormalization

Starting from $A^{(s)}$:
• Insert exact resolutions of the identity, $u^\dagger u = I$ (disentanglers)
• Insert approximate resolutions of the identity, $w^\dagger w \approx I$ (isometries)
• Contract
• Singular value decomposition
• Contract, giving $A^{(s+1)}$

If the disentanglers $u$ are removed, then the TNR approach becomes equivalent to TRG. I will not discuss here the algorithm required to optimize the disentanglers $u$ and isometries $w$.

Does TNR address all short-ranged degrees of freedom? What is the effect of disentangling?
[Figure: short-range correlated network → TRG → short-range correlated network; short-range correlated network → TNR → trivial (product) state.]
TNR can address all short-range degrees of freedom!
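The reformulation above, in which inserting the projector $W W^\dagger$ with $W = U$ reproduces the truncated SVD while a unitary $u$ gives an exact resolution of the identity, can be checked numerically. This is a minimal sketch on a random matrix standing in for the tensor $A$ reshaped as a matrix; the sizes, the seed and the variable names are assumptions for illustration only.

```python
import numpy as np

rng = np.random.default_rng(0)
A = rng.standard_normal((16, 16))   # stand-in for A with indices grouped in pairs
k = 6                               # retained dimension chi'

# Truncated SVD: A ~ U_k S_k V_k
U, S, Vh = np.linalg.svd(A, full_matrices=False)
A_svd = (U[:, :k] * S[:k]) @ Vh[:k]

# Projector insertion with the isometry W = U_k (W^dagger W = identity on k dims)
W = U[:, :k]
A_proj = W @ (W.conj().T @ A)

# Both routes give the same approximation, with the same truncation error
same_result = np.allclose(A_svd, A_proj)
error = np.linalg.norm(A - A_proj)           # equals sqrt(sum of discarded S**2)

# A unitary u (here from a QR factorization) inserts an exact identity
u, _ = np.linalg.qr(rng.standard_normal((16, 16)))
exact = np.allclose(u @ (u.conj().T @ A), A)
```

The point of the comparison is that the isometry costs accuracy (the discarded singular values) while the unitary costs nothing; in TNR the unitaries are then free to be optimized as disentanglers, an optimization this sketch does not attempt.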
Key step of the TNR algorithm: insert unitary disentanglers, $u^\dagger u = I$.
[Figure: short-range correlated network → TNR → trivial (product) state.]

An earlier attempt at resolving the problem of accumulation of short-ranged degrees of freedom: Tensor Entanglement Filtering Renormalization (TEFR) (Gu, Wen, 2009). TEFR can transform the network of CDL tensors to a trivial network.
[Figure: short-range correlated network → TEFR → trivial (product) state; short-range correlated network → TNR → trivial (product) state.]

Removing correlations from the CDL fixed point is necessary, but not sufficient, to generate a proper RG flow.

More difficult case: can short-ranged correlations still be removed when correlations at many length scales are present?
• TEFR? No, it does not appear so… Tensor entanglement filtering renormalization only removes correlations from CDL tensors (i.e. systems far from criticality).
• TNR? Yes… Tensor network renormalization can remove short-ranged correlations near or at criticality, and potentially in higher dimension D.

Benchmark numerics

2D classical Ising model on a lattice of size 212 × 212.
[Figure, left panel: error in free energy $\delta f$ (logarithmic axis, $10^{-3}$ to $10^{-8}$) vs temperature $T$ (2 to 2.4), with $T_c$ marked; curves: $\chi = 4$ TRG, $\chi = 8$ TRG, $\chi = 4$ TNR, $\chi = 8$ TNR.]
[Figure, right panel: spontaneous magnetization $M$ (0 to 0.9) vs temperature $T$ (2 to 2.4), with $T_c$ marked; curves: exact, $\chi = 4$ TNR.]

Proper RG flow: 2D classical Ising

[Figure: $|A^{(1)}|, |A^{(2)}|, |A^{(3)}|, |A^{(4)}|$ at $T = 1.1\,T_c$ and $T = 2.0\,T_c$, numerical results for TRG (disordered phase) and for TNR; annotation: CDL tensor fixed points.]

More difficult!
[Figure: $|A^{(1)}|$ through $|A^{(12)}|$ at $T = 1.002\,T_c$, TNR bond dimension $\chi = 4$.]

Critical point:
[Figure: $|A^{(1)}|$ through $|A^{(12)}|$ at $T = T_c$, TNR bond dimension $\chi = 4$.]

Summary

We have introduced an RG-based method for contracting tensor networks: Tensor Network Renormalization (TNR).

Key idea: a proper arrangement of isometric $w$ and unitary $u$ tensors addresses all short-ranged degrees of freedom at each RG step.

Key features of TNR:
• Addresses all short-ranged degrees of freedom
• Proper RG flow (gives correct RG fixed points)
• Sustainable RG flow (can iterate without increase in cost)

Direct applications to the study of 2D classical and 1D quantum many-body systems, and to the contraction of PEPS.

In 2D quantum (or 3D classical) systems the accumulation of short-ranged degrees of freedom in HOTRG is much worse (due to the entanglement area law), but a higher-dimensional generalization of TNR could still generate a sustainable RG flow.
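To illustrate why CDL tensors cause short-ranged degrees of freedom to accumulate under an SVD-based scheme, the following sketch builds a CDL tensor from Kronecker deltas and inspects the singular values across one diagonal splitting. The function name `cdl_tensor` and the small index dimension `d` are assumptions for illustration; the index pattern follows the CDL definition given in the talk.

```python
import numpy as np

def cdl_tensor(d):
    """CDL tensor A[i, j, k, l] with each index a pair, e.g. i = (i1, i2):
    A = delta(i1, j1) * delta(j2, k2) * delta(k1, l1) * delta(l2, i2)."""
    eye = np.eye(d)
    # einsum letters: a=i1, b=j1, c=j2, d=k2, e=k1, f=l1, g=l2, h=i2
    # output ordering is (i1, i2, j1, j2, k1, k2, l1, l2)
    A8 = np.einsum('ab,cd,ef,gh->ahbcedfg', eye, eye, eye, eye)
    return A8.reshape(d * d, d * d, d * d, d * d)

d = 2
A = cdl_tensor(d)
chi = d * d

# Singular values across the splitting (i, j) | (k, l), as in a TRG step
S = np.linalg.svd(A.reshape(chi * chi, chi * chi), compute_uv=False)
nonzero = int(np.sum(S > 1e-12))
# Here there are d**2 nonzero singular values, all equal to d: a flat spectrum.
```

Because the spectrum is flat, none of the `d**2` retained values can be truncated without an order-one error, so the corner correlations are carried over into the coarse-grained tensor. Removing them requires something beyond a matrix decomposition, which is the role the disentanglers play in TNR.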