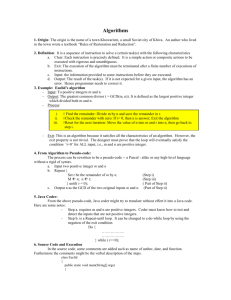

Asymptotic Analysis of Algorithms

Nattee Niparnan

Recall

How do we measure an algorithm?

How do we compare two algorithms?

Definition of Asymptotic Notation

Today's Topic

Finding the asymptotic bound of an algorithm

Interesting Topics of Upper Bound

Rule of thumb!

We neglect:

Lower-order terms in a sum, e.g. $n^3 + n^2 = O(n^3)$

Constant factors, e.g. $3n^3 = O(n^3)$

Remember that we write $=$ instead of the more correct $\in$, e.g. $3n^3 \in O(n^3)$

Why Discard Constant?

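From the definition, $f(n) = O(g(n))$ if $f(n) \le c \cdot g(n)$ for some constant $c > 0$ and all $n \ge n_0$

We can use any constant

E.g. $3n = O(n)$, because $3n \le c \cdot n$

When we let $c \ge 3$, the condition is satisfied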

Why Discard Lower Order Term?

Consider

$f(n) = n^3 + n^2$

$g(n) = n^3$

If $f(n) = O(g(n))$, then for some $c$ and $n_0$,

$c \cdot g(n) - f(n) \ge 0$ for all $n \ge n_0$

Definitely, just use any $c > 1$

Why Discard Lower Order Term?

Try $c = 1.1$

Is $1.1 \cdot g(n) - f(n) = 0.1n^3 - n^2 > 0$?

It is when $0.1n > 1$, i.e., $n > 10$:

$0.1n^3 - n^2 > 0$

$0.1n^3 > n^2$

$0.1n^3 / n^2 > 1$

$0.1n > 1$
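The inequality can also be checked numerically. The following is a minimal sketch in Python; the helper name `bound_holds` and the tested range are assumptions made for illustration, and exact fractions are used so that rounding does not affect the comparison.

```python
from fractions import Fraction

def f(n):
    return n**3 + n**2

def g(n):
    return n**3

def bound_holds(c, n0, n_max):
    """Return True if c*g(n) >= f(n) for every integer n in [n0, n_max]."""
    return all(c * g(n) >= f(n) for n in range(n0, n_max + 1))

c = Fraction(11, 10)               # c = 1.1, kept exact
print(bound_holds(c, 10, 1000))    # the bound holds from n0 = 10 onward
print(bound_holds(c, 9, 1000))     # it fails if we start at n = 9
```

A finite check like this is not a proof, but it agrees with the algebra: the bound starts to hold at $n = 10$.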

Lower Order only?

In fact, only the dominant term counts

Which term is the dominant one?

The one that grows faster

Why?

Eventually, what matters is the ratio $g^*(n)/f^*(n)$, where $g^*(n)$ is the dominant term and $f^*(n)$ is the nondominant term

If $g^*(n)$ grows faster, $g^*(n)/f^*(n)$ eventually exceeds any constant

I.e., $\lim_{n \to \infty} g^*(n)/f^*(n) = \infty$

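What dominates what?

The left side dominates the right side:

| Dominant | Dominated |
|---|---|
| $n^a$ | $n^b$ ($a > b$) |
| $n \log n$ | $n$ |
| $n^2 \log n$ | $n \log^2 n$ |
| $c^n$ ($c > 1$) | $n^c$ |
| $\log n$ | $1$ |
| $n$ | $\log n$ |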

Putting into Practice

What is the asymptotic class of

$0.5n^3 + n^4 - 5(n-3)(n-5) + n^3 \log_8 n + 25 + n^{1.5}$

$(n-5)(n^2+3) + \log(n^{20})$

$20n^5 + 58n^4 + 15n^{3.2} \cdot 3n^2$

Putting into Practice

What is the asymptotic class of

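$0.5n^3 + n^4 - 5(n-3)(n-5) + n^3 \log_8 n + 25 + n^{1.5}$

$O(n^4)$

$(n-5)(n^2+3) + \log(n^{20})$

$O(n^3)$

$20n^5 + 58n^4 + 15n^{3.2} \cdot 3n^2$

$O(n^{5.2})$, since $15n^{3.2} \cdot 3n^2 = 45n^{5.2}$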
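One informal way to sanity-check such an answer is to divide the expression by the claimed dominant term and watch the ratio settle to a constant. The sketch below does this for the second expression, $(n-5)(n^2+3) + \log(n^{20})$; the function names are hypothetical, and the natural logarithm is assumed since the base only changes a constant factor.

```python
import math

def expr(n):
    # (n - 5)(n^2 + 3) + log(n^20), using the natural logarithm
    return (n - 5) * (n**2 + 3) + math.log(n**20)

def ratio_to_cubic(n):
    """Ratio of the expression to n^3, the claimed dominant term."""
    return expr(n) / n**3

for n in (10, 1000, 10**6):
    print(n, ratio_to_cubic(n))   # the ratio approaches 1 as n grows
```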

Asymptotic Notation from Program Flow

Sequence

Conditions

Loops

Recursive Call

Sequence

Block A takes $f(n)$

Block B takes $g(n)$

Running A and then B takes $f(n) + g(n) = O(\max(f(n), g(n)))$

Example

Block A: $O(n)$

Block B: $O(n^2)$

Total: $O(n^2)$

Example

Block A: $\Theta(n)$

Block B: $O(n^2)$

Total: $O(n^2)$

Example

Block A: $\Theta(n)$

Block B: $\Theta(n^2)$

Total: $\Theta(n^2)$

Example

Block A: $O(n^2)$

Block B: $\Theta(n^2)$

Total: $\Theta(n^2)$

Condition

Block A takes $f(n)$

Block B takes $g(n)$

Only one of the two blocks runs, so the condition takes $O(\max(f(n), g(n)))$

Loops

    for (i = 1; i <= n; i++) {
        P(i)
    }

Let P(i) take time $t_i$. The loop takes

$\sum_{i=1}^{n} t_i$

Example

    for (i = 1; i <= n; i++) {
        sum += i;
    }

sum += i takes $\Theta(1)$, so the loop takes

$\sum_{i=1}^{n} \Theta(1) = \Theta(n)$

Why don't we use $\max(t_i)$?

Because the number of terms is not constant

    for (i = 1; i <= n; i++) {
        sum += i;
    }

$\Theta(n)$

    for (i = 1; i <= 100000; i++) {
        sum += i;
    }

$\Theta(1)$, with a big constant

Example

    for (j = 1; j <= n; j++) {
        for (i = 1; i <= n; i++) {
            sum += i;
        }
    }

sum += i takes $\Theta(1)$

$\sum_{j=1}^{n} \sum_{i=1}^{n} \Theta(1)$

$= \sum_{j=1}^{n} \Theta(n)$

$= \Theta(n) + \Theta(n) + \dots + \Theta(n)$

$= \Theta(n^2)$

Example

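    for (j = 1; j <= n; j++) {
        for (i = 1; i <= j; i++) {
            sum += i;
        }
    }

sum += i takes $\Theta(1)$

$\sum_{j=1}^{n} \sum_{i=1}^{j} \Theta(1)$

$= \sum_{j=1}^{n} \Theta(j)$

$= \sum_{j=1}^{n} cj$

$= c \sum_{j=1}^{n} j$

$= c \cdot \frac{n(n+1)}{2}$

$= \Theta(n^2)$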
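The closed form can be confirmed by counting how many times the innermost statement runs. This is a small sketch in Python rather than the slide's C-style pseudocode; the function name `count_triangular` is an assumption for illustration.

```python
def count_triangular(n):
    """Count executions of the inner statement when i runs from 1 to j
    and j runs from 1 to n."""
    ops = 0
    for j in range(1, n + 1):
        for i in range(1, j + 1):
            ops += 1
    return ops

for n in (10, 100, 1000):
    # the count matches n(n+1)/2, which is Theta(n^2)
    print(n, count_triangular(n), n * (n + 1) // 2)
```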

Example : Another way

    for (j = 1; j <= n; j++) {
        for (i = 1; i <= j; i++) {
            sum += i;
        }
    }

sum += i takes $\Theta(1)$

$\sum_{j=1}^{n} \sum_{i=1}^{j} \Theta(1)$

$= \sum_{j=1}^{n} \Theta(j)$

$= \sum_{j=1}^{n} O(n)$, since $j \le n$

$= O(n^2)$

Example

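    for (j = 2; j <= m - 1; j++) {
        for (i = 3; i <= j; i++) {
            sum += i;
        }
    }

sum += i takes $\Theta(1)$

$\sum_{j=2}^{m-1} \sum_{i=3}^{j} \Theta(1)$

$= \sum_{j=2}^{m-1} \Theta(j - 2)$

$= \Theta\left( \sum_{j=2}^{m-1} j \right)$

$= \Theta\left( \frac{(m-1)m}{2} - 1 \right)$

$= \Theta\left( \frac{m^2}{2} - \frac{m}{2} - 1 \right)$

$= \Theta(m^2)$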

Example : While loops

    while (n > 0) {
        n = n - 1;
    }

$\Theta(n)$

Example : While loops

    while (n > 0) {
        n = n - 10;
    }

$\Theta(n/10) = \Theta(n)$

Example : While loops

    while (n > 0) {
        n = n / 2;
    }

$\Theta(\log n)$
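To see the logarithm appear, the halving loop can be run with a step counter. The sketch below assumes `n` is a non-negative integer and that `/` in the pseudocode means integer division; the name `halving_steps` is hypothetical.

```python
def halving_steps(n):
    """Count iterations of: while n > 0: n = n // 2."""
    steps = 0
    while n > 0:
        n = n // 2      # integer division, assumed from the pseudocode
        steps += 1
    return steps

for n in (1, 8, 1000, 10**6):
    # the count equals the number of bits of n, i.e. floor(log2 n) + 1
    print(n, halving_steps(n), n.bit_length())
```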

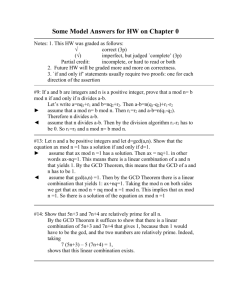

Example : Euclid’s GCD

    function gcd(a, b) {
        while (b > 0) {
            tmp = b
            b = a mod b
            a = tmp
        }
        return a
    }

Example : Euclid’s GCD

    function gcd(a, b) {
        while (b > 0) {      // until the modding one is zero
            tmp = b          // compute mod and swap
            b = a mod b
            a = tmp
        }
        return a
    }

How many iterations?

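Example : Euclid’s GCD

If $a > b$, then $a \bmod b < a / 2$

Case 1: $b > a / 2$

Only one copy of $b$ fits into $a$, so $a \bmod b = a - b < a / 2$

Case 2: $b \le a / 2$

We can always put another $b$ into $a$ until the remainder is smaller than $b$, so $a \bmod b < b \le a / 2$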
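The two cases can be checked exhaustively for small inputs. This is only a sketch: the helper names and the tested range are assumptions, and the comparison is done in integers to avoid rounding.

```python
def remainder_is_small(a, b):
    """True if a mod b < a / 2, written as 2*(a mod b) < a to stay in integers."""
    return 2 * (a % b) < a

def check_all(limit):
    """Check the claim for every pair with limit >= a > b >= 1."""
    return all(
        remainder_is_small(a, b)
        for a in range(2, limit + 1)
        for b in range(1, a)
    )

print(check_all(300))   # no counterexample in the tested range
```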

Example : Euclid’s GCD

    function gcd(a, b) {
        while (b > 0) {
            tmp = b
            b = a mod b
            a = tmp
        }
        return a
    }

$O(\log n)$ iterations

After every two iterations, a is reduced to less than half of its previous value
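A runnable version with an iteration counter makes the logarithmic bound visible. The sketch below is a Python rendering of the pseudocode; the name `gcd_steps` and the loose bound of $2 \cdot \text{bits} + 2$ iterations used in the check are assumptions for illustration, derived from the halving argument rather than stated on the slide.

```python
def gcd_steps(a, b):
    """Euclid's algorithm; returns (gcd, number of loop iterations)."""
    steps = 0
    while b > 0:
        a, b = b, a % b     # compute mod and swap
        steps += 1
    return a, steps

def within_log_bound(limit):
    """Check steps <= 2 * bit_length(max(a, b)) + 2 for all 1 <= a, b <= limit."""
    return all(
        gcd_steps(a, b)[1] <= 2 * max(a, b).bit_length() + 2
        for a in range(1, limit + 1)
        for b in range(1, limit + 1)
    )

print(gcd_steps(48, 18))       # (6, 3)
print(within_log_bound(200))
```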

Theorem

If $\sum_i a_i < 1$ and

$T(n) = \sum_i T(a_i n) + O(n)$

then $T(n) = O(n)$

E.g. $T(n) = T(0.7n) + T(0.2n) + T(0.01n) + 3n = O(n)$

Recursion

    try(n) {
        if (n <= 0) return 0;
        for (j = 1; j <= n; j++)
            sum += j;
        try(n * 0.7)
        try(n * 0.2)
    }

Recursion

    try(n) {
        if (n <= 0) return 0;          // terminating: Θ(1)
        for (j = 1; j <= n; j++)       // process: Θ(n)
            sum += j;
        try(n * 0.7)                   // recursion: T(0.7n) + T(0.2n)
        try(n * 0.2)
    }

$T(n) = T(0.7n) + T(0.2n) + O(n)$

Guessing and proof by induction

$T(n) = T(0.7n) + T(0.2n) + O(n)$

Guess: $T(n) = O(n)$, i.e., $T(n) \le cn$

Proof:

Basis: obvious

Induction:

Assume $T(i) \le ci$ for all $i < n$, and let the $O(n)$ term be at most $dn$

$T(n) \le 0.7cn + 0.2cn + dn$

$= 0.9cn + dn$

$\le cn$ whenever $c \ge 10d$

So $T(n) = O(n)$
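The linear bound can be observed by evaluating the recurrence directly. This sketch makes several assumptions that are not on the slide: the $O(n)$ term is taken to be exactly $n$, subproblem sizes are rounded down to integers, and $T(n) = 0$ for $n \le 0$. Under those assumptions the induction gives $T(n) \le 10n$.

```python
from functools import lru_cache

@lru_cache(maxsize=None)
def T(n):
    """T(n) = T(floor(0.7n)) + T(floor(0.2n)) + n, with T(n) = 0 for n <= 0."""
    if n <= 0:
        return 0
    # integer arithmetic avoids floating-point rounding in 0.7 * n
    return n + T(7 * n // 10) + T(2 * n // 10)

for n in (10, 100, 1000, 10000):
    print(n, T(n), T(n) / n)    # the ratio T(n)/n stays below 10
```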

Using Recursion Tree

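$T(n) = 2T(n/2) + n$

Level 0: one node of cost $n$, total $n$

Level 1: two nodes of cost $n/2$, total $2 \cdot n/2 = n$

Level 2: four nodes of cost $n/4$, total $4 \cdot n/4 = n$

The tree has $\lg n$ levels, each with total cost $n$

$T(n) = O(n \lg n)$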
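The per-level totals of the recursion tree can be listed programmatically. The sketch assumes $n$ is a power of two and that a leaf of size 1 costs 1; the function name `level_sums` is hypothetical.

```python
def level_sums(n):
    """Total cost at each level of the recursion tree of T(n) = 2T(n/2) + n,
    for n a power of two, down to subproblems of size 1."""
    sums = []
    size, count = n, 1
    while size >= 1:
        sums.append(count * size)   # count nodes, each costing size
        size //= 2
        count *= 2
    return sums

print(level_sums(8))            # [8, 8, 8, 8]
print(sum(level_sums(1024)))    # 1024 * 11 = 11264
```

Every level sums to $n$ and there are $\lg n + 1$ levels under these assumptions, which matches the $O(n \lg n)$ bound.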

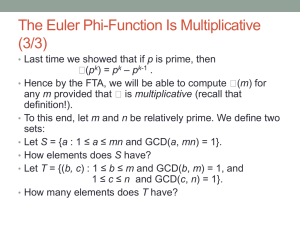

Master Method

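$T(n) = aT(n/b) + f(n)$, $a \ge 1$, $b > 1$

Let $c = \log_b a$

| Condition on $f(n)$ | Result |
|---|---|
| $f(n) = O(n^{c-\varepsilon})$ | $T(n) = \Theta(n^c)$ |
| $f(n) = \Theta(n^c)$ | $T(n) = \Theta(n^c \log n)$ |
| $f(n) = \Omega(n^{c+\varepsilon})$ | $T(n) = \Theta(f(n))$ |

Here $\varepsilon > 0$ is a constant; the third case also requires $a f(n/b) \le d f(n)$ for some constant $d < 1$ and all sufficiently large $n$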
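For the common special case $f(n) = n^k$, choosing a case reduces to comparing $k$ with $c = \log_b a$. The sketch below covers only that special case (for a polynomial $f$ the extra condition of the third case holds automatically); the function name and the return convention are assumptions for illustration, not a general implementation of the method.

```python
import math

def master_polynomial(a, b, k):
    """Classify T(n) = a*T(n/b) + n**k by comparing k with c = log_b(a).

    Returns (case, exponent): the solution is Theta(n**exponent) in cases 1
    and 3, and Theta(n**exponent * log n) in case 2.
    """
    c = math.log(a, b)
    if math.isclose(k, c, abs_tol=1e-9):   # tolerate floating-point error
        return 2, c
    if k < c:
        return 1, c
    return 3, k

print(master_polynomial(9, 3, 1))   # case 1: Theta(n^2)
print(master_polynomial(1, 3, 0))   # case 2: Theta(log n)
print(master_polynomial(3, 4, 1))   # case 3: Theta(n)
```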

Master Method : Example

$T(n) = 9T(n/3) + n$

$a = 9$, $b = 3$, $c = \log_3 9 = 2$, $n^c = n^2$

$f(n) = n = O(n^{2 - 0.1})$

$T(n) = \Theta(n^c) = \Theta(n^2)$

Master Method : Example

$T(n) = T(n/3) + 1$

$a = 1$, $b = 3$, $c = \log_3 1 = 0$, $n^c = 1$

$f(n) = 1 = \Theta(n^c) = \Theta(1)$

$T(n) = \Theta(n^c \log n) = \Theta(\log n)$

Master Method : Example

$T(n) = 3T(n/4) + n \log n$

$a = 3$, $b = 4$, $c = \log_4 3 < 0.793$, $n^c < n^{0.793}$

$f(n) = n \log n = \Omega(n^{0.793})$

$a f(n/b) = 3 \left( (n/4) \log (n/4) \right) \le (3/4) n \log n = d f(n)$, with $d = 3/4 < 1$

$T(n) = \Theta(f(n)) = \Theta(n \log n)$
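The inequality $a f(n/b) \le d f(n)$ used in this example can be spot-checked numerically. This is a sketch only: the function name, the natural logarithm, and the tested range are assumptions, and a finite check does not replace the algebraic argument.

```python
import math

def regularity_holds(n, d=0.75):
    """Check 3 * f(n/4) <= d * f(n) for f(n) = n log n."""
    lhs = 3 * (n / 4) * math.log(n / 4)
    rhs = d * n * math.log(n)
    return lhs <= rhs

print(all(regularity_holds(n) for n in range(4, 5000)))
```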

Conclusion

Finding an asymptotic bound is, in fact, very simple

Use the rules of thumb

Discard nondominant terms

Discard constants

For recursive algorithms

Write a recurrence relation

Use the master method

Guess and prove by induction

Draw a recursion tree