Shrinking the Cone of Uncertainty with Continuous Assessment


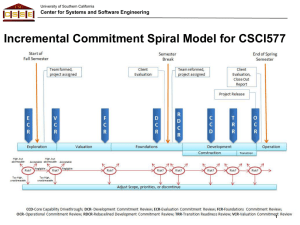

University of Southern California
Center for Systems and Software Engineering
Pongtip Aroonvatanaporn
CSCI 510, Fall 2011
October 5, 2011
(C) USC-CSSE

Outline
• Introduction
  – Motivation
  – Problems
• Related Works
• Proposed Methodologies
• Conclusion
• Tool Demo

Motivation
• The Cone of Uncertainty
• Exists until the product is delivered, or even after
• The wider the cone, the more difficult it is to ensure accurate estimates and timely delivery
• Focus on uncertainties of team aspects from product design onwards
  – COCOMO II space
  – Many factors before that (requirements volatility, technology, etc.)

Motivation
• Key principles of ICSM
  – Stakeholder satisficing
  – Incremental and evolutionary growth of system definition and stakeholder commitment
  – Iterative system development and definition
  – Concurrent system definition and development
  – Risk management
• COCOMO II
  – The COCOMO II space is in the development cycle
  – Influences on estimates and schedules [Construx, 2006]
    • Human factors: 14x
    • Capability factors: 3.5x
    • Experience factors: 3.0x

Motivation
• Standish CHAOS Summary 2009
• Surveyed 9,000 projects
  – Delivered with full capability within budget and schedule: 32%
  – Cancelled: 24%
  – Over budget, over schedule, or undelivered: 44%
• 68% project failure rate (24% + 44%)

Terms and Definitions
• Inexperienced
  – Inexperienced in general
  – Experienced, but in a new domain
  – Experienced, but using new technology
• Continuous Assessment
  – Assessments take place over periods of time
  – Done in parallel with the process, instead of only at the end
  – Widely used in education
  – Used in software process measurement [Jarvinen, 2000]

The Problem
• Experienced teams can produce better estimates
  – Use “yesterday’s weather”
  – Past projects of comparable sizes
  – Past data on the team’s productivity
  – Knowledge of accumulated problems and solutions
• Inexperienced teams do not have this luxury
• No tools or data to monitor the project’s progression within the cone of uncertainty

Problems of Inexperience
• Imprecise project scoping
  – Overestimation vs. underestimation
• Project estimates often not revisited
  – Insufficient data to perform predictions
  – Project’s uncertainties not adjusted
• Manual assessments are tedious
  – Complex and discouraging
• Limitations in software cost estimation
  – Models cannot fully compensate for lack of knowledge and understanding of the project
• Overstating the team’s capabilities
  – Unrealistic values that do not reflect the project situation
  – Teams and projects misrepresented (business vs. technical)

Imprecise Project Scoping
• Based on CSCI577 data, projects either significantly overestimate or underestimate effort
  – Possibly due to:
    • Unfamiliarity with COCOMO
    • Team inexperience
• Teams end up with inaccurate project scoping
  – Promise too much

Overestimation
• Estimate is too high to achieve within available resources
• Need to reduce the scope of the project
  – Re-negotiate requirements with the client
  – Throw away some critical core capabilities
    • Lose the expected benefits
    • Often fail to meet client needs and satisfaction

Underestimation
• Estimate is lower than actual
• The project appears achievable with fewer resources
  – Clients may ask for more capabilities
  – Teams may end up promising more
• As the project progresses, the team may realize that the project is not achievable
  – If the team tries to deliver what was promised, quality suffers
  – If the team delivers less than what was promised, clients suffer

Project Estimates Not Revisited
• At the beginning, teams do not always have the necessary data
  – No “yesterday’s weather”
  – High number of uncertainties
• Initial estimates are not accurate
• If estimates are readjusted, there is no problem
• In reality, estimates are left untouched

Estimates in ICSM
• Estimates are “supposedly” adjusted at each milestone review
  – Reviewed by the team
  – Reviewed by stakeholders
• Adjustments require assessments in order to become more accurate
• Without assessments, adjustments are made without direction

Manual Assessments are Tedious
• Complex process
• Time consuming
• Require an experienced facilitator/assessor to perform effectively
• Often done by conducting various surveys, analyzing the data, and determining weak/strong points
  – Repeated as necessary
• Discouraging to the teams

Size Reporting
• How to accurately report progress?
  – By the developer’s status report?
  – By the project manager’s take?
• Reporting by size is most accurate
  – Counting logical lines of code is difficult
  – Even with tool support, reporting accurately is a labor-intensive task

Limitations in Software Cost Estimation
• Little compensation for lack of information and understanding of the software to be developed
• The “Cone of Uncertainty”
  – There is a wide range of products and costs that the project can result in
  – Not 100% certain until the product is delivered
• Designs and specifications are prone to change
  – Especially in agile environments

Overstating Team’s Capabilities
• Unrealistic values
  – COCOMO II parameters
  – Do not reflect the project’s situation
• Business vs. technical
  – Clients: want the highest value
  – Sales: want to sell products
  – Project managers: want the best team
  – Programmers: want the least work
• Is it really feasible?
  – Provide the evidence
  – From where?
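To make the overestimation/underestimation distinction from the Imprecise Project Scoping slides concrete, the sketch below computes the signed relative error of an estimate against actual effort; its absolute value is the magnitude of relative error (MRE) commonly used to judge estimation accuracy. This is a minimal Python illustration only: the function names, the 25% tolerance band, and the sample numbers are hypothetical and are not taken from the CSCI577 data.

```python
def relative_error(estimate: float, actual: float) -> float:
    """Signed relative error: positive means the estimate exceeded the actual effort."""
    if actual <= 0:
        raise ValueError("actual effort must be positive")
    return (estimate - actual) / actual


def classify_estimate(estimate: float, actual: float, tolerance: float = 0.25) -> str:
    """Label an estimate as over, under, or within a tolerance band.

    The 25% default tolerance is a hypothetical choice for illustration.
    """
    error = relative_error(estimate, actual)
    if abs(error) <= tolerance:  # abs(error) is the magnitude of relative error (MRE)
        return "within tolerance"
    return "overestimate" if error > 0 else "underestimate"


if __name__ == "__main__":
    # Hypothetical (estimate, actual) effort pairs in hours, not real project data.
    samples = [(3000, 1500), (900, 1400), (1200, 1100)]
    for est, act in samples:
        mre = abs(relative_error(est, act))
        print(est, act, classify_estimate(est, act), f"MRE={mre:.2f}")
```

Keeping the sign separate from the magnitude lets the same measure report both how far off an estimate was and in which direction the scope was misjudged.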
Competing Project Proposals
• Project proposals are written to win
• Proposals often overstate capabilities and overcommit the team
  – Put the best, most highly capable people on the project
  – Have highly experienced teams
  – Keep costs low
  – Any capabilities are possible
• This may only be true at the time of writing
  – What about when the project really begins?

Ultimate Problem
• Developers would rather spend time developing than
  – Documenting
  – Assessing
  – Adjusting
• These activities are not as valuable to developers as to other stakeholders
• In the end, nothing is done to improve

The Goal
• Develop a framework to address the issues above
• Help unprecedented projects track project progression
• Reduce the uncertainties in estimation
  – Achieve eventual convergence of estimate and actual
• Must be quick and easy to use

Benefits
• Improved project planning and management
  – Resources and goals
  – Ensure the accuracy of estimates
  – Determine/confirm project scope
• Improved product quality control
  – Certainty about the amount of work required
  – Better timeline
  – Better work distribution
• Actual project progress tracking
  – Better understanding of project status
  – Actual progress reports
• Help manage realistic schedules and deliveries

Outline
• Introduction
• Related Works
  – Assessment
  – Sizing
  – Management
• Proposed Methodologies
• Conclusion
• Tool Demo

IBM Self-Check [Kroll, 2008]
• A survey-based assessment/retrospective
• Method to overcome common assessment pitfalls
  – Bloated metrics, evil scorecards, lessons forgotten, forcing process, inconsistent sharing
• Reflections by the team, for the team
• The team chooses a set of core practices to focus the assessment on
  – Discussions triggered by inconsistent answers between team members
  – Develop actions to resolve issues

Software Sizing and Estimation
• Agile techniques
  – Story points and velocity [Cohn, 2006]
  – Planning Poker [Grenning, 2002]
• Treatments for uncertainty
  – PERT Sizing [Putnam, 1979]
  – Wideband Delphi Technique [Boehm, 1981]
  – COCOMO-U [Yang, 2006]
• Require a high level of expertise and experience

Project Tracking and Assessment
• PERT Network Chart [Wiest, 1977]
  – Identify critical paths
  – Nodes updated to show progress
  – Grows quickly
  – Becomes unusable when large, especially in smaller agile environments
• GQM [Basili, 1995] (Goal/Objective, Question/Answer, Metric/Measurement)
  – Captures progress at the conceptual, operational, and quantitative levels
  – Aligns with the organization/team
  – Only useful when used correctly
• Burn Charts [Cockburn, 2004]
  – Effective in tracking progress
  – Not good at responding to major changes
• Architecture Review Board [Maranzano, 2005]
  – Reviews to validate the feasibility of the architecture and design
  – Increases the likelihood of project success
  – Adopted by the software engineering course
  – Stabilize the team, reduce knowledge gaps, evaluate risks

Outline
• Introduction
• Related Works
• Proposed Methodologies
  – Project Tracking Framework
  – Team Assessment Framework
• Conclusion
• Tool Demo

Project Tracking Framework [Aroonvatanaporn, 2010]
• Integrating the Unified Code Count tool and the COCOMO II model
  – Quickly determine effort based on actual progress
  – Extend to use Earned Value for percent complete

$$PM_{NS} = A \times \mathrm{Size}^{E} \times \prod_{i=1}^{n} EM_i, \qquad E = 0.91 + 0.01 \times \sum_{j=1}^{5} SF_j$$

• Size adjusted with REVL (requirements evolution and volatility)
• Hypotheses: H1

Size Counting
• COCOMO uses size to determine effort
• Use of the Unified Code Count tool
• Allows quick collection of SLOC data
  – Then fed to the COCOMO model to calculate equivalent effort
• Collected at every build
  – Depends on iteration length

Project Tracking Results [Aroonvatanaporn, 2010]
[Figure: two charts of initial estimate, adjusted estimate, and accumulated effort, annotated ~18% and ~50%]

Team Assessment Framework
• Similar to the approach of IBM Self-Check
• Uses a survey-based approach to identify inconsistencies and knowledge gaps among team members
  – Inconsistencies/uncertainties in answers
  – Use conflicting questions to validate consistency
• Two sources for question development

Question Development
[Figure: team’s assessment performed by each team member → strengths, weaknesses, issues, etc. → survey questions]
• Team members assess and evaluate their own team
  – Operational concept engineering
  – Requirements gathering
  – Business case analysis
  – Architecture development
  – Planning and control
  – Personnel capability
  – Collaboration
• ICSM
• These questions focus on resolving team issues and reducing knowledge gaps

Adjusting the COCOMO II Estimates
• Answering a series of questions is more effective than providing metrics [Krebs, 2008]
• Framework to help adjust COCOMO II estimates to reflect reality
• Questions developed to focus on
  – Team stabilization and reducing knowledge gaps
  – Each question relates to COCOMO II scale factors and cost drivers
• Two approaches to determine the relationship between questions and COCOMO II parameters
  – Finding correlation
  – Expert advice

Example Scenario
• COCOMO II ratings for ACAP, PCAP, APEX, PLEX, LTEX
  – HI, LO, NOM, HI, NOM
  – HI, NOM, NOM + 50%, HI, NOM + 50%
• Survey: “Have sufficiently talented and experienced programmers and systems engineering managers been identified?”
  – Answers: 10, 4, 9
  – Average: 7.7; Deviation: 3.2
• Discussion
  – Where do we lack experience?
  – How can we improve?

Outline
• Introduction
• Related Works
• Proposed Methodologies
• Conclusion
  – Past 577 data
  – Summary
• Tool Demo

COCOMO II Estimation Range
• Team-provided range vs. COCOMO II built-in calculation
  – Data from Team 1, Fall 2010 – Spring 2011 semesters
[Figure: estimated effort (hours) per phase (Valuation, Foundations, Rebaseline Foundations, Development Iteration 1, Development Iteration 2), showing the team’s pessimistic and optimistic estimates, the most likely estimate, and the COCOMO II pessimistic and optimistic estimates]

CSCI577 Estimation Errors
• Data from Fall 2009 – Spring 2010 semesters
• Fall 2010 – Spring 2011 data will be collected after this semester ends
[Figure: estimated effort per phase (Valuation, Foundations, RDC/Development, IOC1, IOC2) vs. actual effort, in hours, per team, for NDI/NCS teams and Architected Agile teams]

Conclusion
• This research focuses on improving team performance and project outcomes
  – Tracking project progress
  – Synchronization and stabilization of the team
  – Improving project estimates
• Framework to shrink the cone of uncertainty
  – Less uncertainty in estimates
  – Less uncertainty within the team
  – Better project scoping
• The tool support for the framework will be used to validate and refine the assessment framework

Outline
• Introduction
• Related Works
• Proposed Methodologies
• Conclusion
• Tool Demo
  – What is the tool?
  – What does it support?
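The effort equation on the Project Tracking Framework slide can be sketched in Python as follows. A = 2.94 and B = 0.91 are the published COCOMO II.2000 calibration constants; Size is in KSLOC and is inflated by REVL as (1 + REVL/100) × Size. The `percent_complete` function is a hypothetical simplification of the earned-value idea (effort "earned" by the code counted so far, for example SLOC reported at each build, divided by the effort estimated for the target size). It is an illustrative sketch under those assumptions, not the framework's actual implementation.

```python
import math
from typing import Sequence

A = 2.94  # COCOMO II.2000 multiplicative constant
B = 0.91  # COCOMO II.2000 exponent base (the 0.91 in the slide's equation)


def scale_exponent(scale_factors: Sequence[float]) -> float:
    """E = B + 0.01 * (sum of the five scale-factor values)."""
    if len(scale_factors) != 5:
        raise ValueError("COCOMO II uses exactly five scale factors")
    return B + 0.01 * sum(scale_factors)


def effort_pm(ksloc: float, scale_factors: Sequence[float],
              effort_multipliers: Sequence[float], revl: float = 0.0) -> float:
    """Effort in person-months; size is inflated by REVL (a percentage)."""
    size = (1 + revl / 100.0) * ksloc
    return A * size ** scale_exponent(scale_factors) * math.prod(effort_multipliers)


def percent_complete(counted_ksloc: float, target_ksloc: float,
                     scale_factors: Sequence[float],
                     effort_multipliers: Sequence[float]) -> float:
    """Hypothetical earned-value style progress: effort equivalent of the code
    counted so far, as a percentage of the effort for the target size."""
    earned = effort_pm(counted_ksloc, scale_factors, effort_multipliers)
    planned = effort_pm(target_ksloc, scale_factors, effort_multipliers)
    return 100.0 * earned / planned


if __name__ == "__main__":
    sf = [2.0, 2.0, 2.0, 2.0, 1.0]  # hypothetical scale-factor values; sum 9.0 gives E = 1.0
    em = [1.0]                      # hypothetical: every cost driver Nominal, product 1.0
    print(round(effort_pm(10, sf, em), 2))
    print(round(effort_pm(10, sf, em, revl=20), 2))
    print(round(percent_complete(4, 10, sf, em), 1))
```

With the exponent at exactly 1.0 the example is linear (10 KSLOC gives 29.4 person-months, 35.28 with 20% REVL, and 4 of 10 KSLOC counts as 40% complete); with E above 1.0, effort grows faster than size, so percent complete by effort lags percent complete by SLOC.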
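The Example Scenario slide reports an average of 7.7 and a deviation of 3.2 for one survey question. Those figures are reproduced by the answers 10, 4, and 9 if "deviation" is taken to be the sample standard deviation. The minimal Python sketch below shows how a tool might flag such disagreement to trigger the team discussion the slide describes; the function names and the 2.0 threshold are hypothetical choices, not part of the published framework.

```python
import statistics
from typing import Dict, List


def summarize(answers: List[float]) -> Dict[str, float]:
    """Mean and sample standard deviation of one question's answers."""
    return {
        "average": statistics.mean(answers),
        "deviation": statistics.stdev(answers),  # sample (n - 1) standard deviation
    }


def questions_to_discuss(survey: Dict[str, List[float]],
                         threshold: float = 2.0) -> List[str]:
    """Return questions whose answers disagree enough to warrant discussion.

    The threshold is a hypothetical cut-off for illustration.
    """
    flagged = []
    for question, answers in survey.items():
        if len(answers) >= 2 and summarize(answers)["deviation"] > threshold:
            flagged.append(question)
    return flagged


if __name__ == "__main__":
    survey = {
        "Have sufficiently talented and experienced programmers and "
        "systems engineering managers been identified?": [10, 4, 9],
        "Hypothetical question with consistent answers": [7, 8, 7],
    }
    for question, answers in survey.items():
        s = summarize(answers)
        print(f"{s['average']:.1f} {s['deviation']:.1f}  {question}")
    print(questions_to_discuss(survey))
```

A high average with a high deviation, as in the slide's example, suggests the team is not in agreement about its own capability, which is exactly the kind of knowledge gap the assessment is meant to surface before the COCOMO II ratings are adjusted.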
Tool Support for Framework
• Developed with IBM Jazz
  – Provides team management
  – Provides user management
  – Supports highly collaborative environments
• Potential
  – Extensions to Rational Team Concert
  – Support for other project management tools

Tool Support
• The tool will be used throughout the project life cycle
• Used for:
  – Tracking project progress
  – Project estimation
  – Team assessment
• Frequency
  – Start after prototyping begins
  – Done every two weeks?

Different Project Types
• Architected Agile
  – Track through development of source code
• NDI/NCS
  – Utilize Application Points

Tool
[Figure: screenshots of the tool]

Publications
Aroonvatanaporn, P., Sinthop, C., and Boehm, B. “Reducing Estimation Uncertainty with Continuous Assessment: Tracking the ‘Cone of Uncertainty’.” In Proceedings of the IEEE/ACM International Conference on Automated Software Engineering, pp. 337-340. New York, NY, 2010.

References
Basili, V.R. “Applying the goal/question/metric paradigm in the experience factory.” In Software Quality Assurance and Measurement: Worldwide Perspective, pp. 21-44. International Thomson Computer Press, 1995.
Boehm, B. Software Engineering Economics. Prentice-Hall, 1981.
Boehm, B. and Lane, J. “Using the incremental commitment model to integrate systems acquisition, systems engineering, and software engineering.” CrossTalk, pp. 4-9, October 2007.
Boehm, B., Abts, C., Brown, A.W., Chulani, S., Horowitz, E., Madachy, R., Reifer, D.J., and Steece, B. Software Cost Estimation with COCOMO II. Prentice-Hall, 2000.
Boehm, B., Port, D., Huang, L., and Brown, W. “Using the Spiral Model and MBASE to Generate New Acquisition Process Models: SAIV, CAIV, and SCQAIV.” CrossTalk, pp. 20-25, January 2002.
Boehm, B. et al. “Early Identification of SE-Related Program Risks.” Technical Task Order TO001, September 2009.
Cockburn, A. “Earned-value and Burn Charts (Burn Up and Burn Down).” Crystal Clear, Addison-Wesley, 2004.
Cohn, M. Agile Estimating and Planning. Prentice-Hall, 2006.
Construx Software Builders, Inc. “10 Most Important Ideas in Software Development.” http://www.scribd.com/doc/2385168/10Most-Important-Ideas-in-Software-Development
Grenning, J. Planning Poker, 2002. http://www.objectmentor.com/resources/article/PlanningPoker.zip
IBM Rational Jazz. http://www.jazz.net
Jarvinen, J. Measurement based continuous assessment of software engineering process. PhD thesis, University of Oulu, 2000.
Koolmanojwong, S. The Incremental Commitment Spiral Model Process Patterns for Rapid-Fielding Projects. PhD thesis, University of Southern California, 2010.
Krebs, W., Kroll, P., and Richard, E. “Un-assessment – reflections by the team, for the team.” Agile 2008 Conference, 2008.
Kroll, P. and Krebs, W. “Introducing IBM Rational Self Check for Software Teams.” 2008. http://www.ibm.com/developerworks/rational/library/edge/08/may08/kroll_krebs
Maranzano, J.F., Rozsypal, S.A., Zimmerman, G.H., Warnken, P.E., and Weiss, D.M. “Architecture Reviews: Practice and Experience.” IEEE Software, 22: 34-43, March-April 2005.
Putnam, L. and Fitzsimmons, A. “Estimating Software Costs.” Datamation, 1979.
Standish Group. CHAOS Summary 2009. http://standishgroup.com
Unified Code Count. http://sunset.usc.edu/research/CODECOUNT/
USC Software Engineering I Class Website. http://greenbay.usc.edu/
Wiest, J.D. and Levy, F.K. A Management Guide to PERT/CPM. Prentice-Hall, Englewood Cliffs, 1977.
Yang, D., Wan, Y., Tang, Z., Wu, S., He, M., and Li, M. “COCOMO-U: An Extension of COCOMO II for Cost Estimation with Uncertainty.” Software Process Change, pp. 132-141, 2006.