UIP 101 New Principal Boot Camp PowerPoint slides


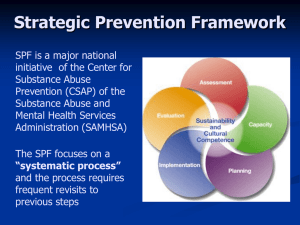

Denver Public Schools
Unified Improvement Planning 101
June 17, 2013

UIP 101 Road Map
Overview of the UIP Process
Structure and Components of the UIP Template
Developing a UIP
Data Narrative
School Performance Frameworks and the UIP
Data Analysis: Review Past Performance/Describe Trends/Performance Challenges/Root Causes
Action Plans: Target Setting/Action Planning/Progress Monitoring
Leadership Considerations & Resources
School Type & Title I
UIP Timeline & DPS/CDE Resources

Intent, Power, Possibility and Purpose of the UIP

School Improvement Historically at DPS:
• Prior to the UIP process, DPS developed School Improvement Plans (SIPs).
• In 2008, Colorado introduced the Unified Improvement Plan (UIP) to streamline state and federal accountability requirements.
• The UIP was established by the Education Accountability Act of 2009 (SB 09-163).
• Colorado is entering its 4th year (2013-14) of requiring UIPs for all schools throughout the state.

Planning Requirements met by the UIP
State Accountability (SB09-163)
School Level
Performance, Improvement, Priority Improvement, Turnaround
Student Graduation and Completion Plan
Focus Schools
Title IA Schoolwide Program Plan
Title IA Targeted Assistance Program Plan
Title I (priority improvement or turnaround)
Title IIA 2141c (priority improvement or turnaround)
Title III Improvement (AMAO)
Tiered Intervention Grant (TIG) (Priority Schools)
School Improvement Grant (SIG)/Implementation Support Partnership (ISP)
Other grants reference the UIP
Student Graduation and Completion
ESEA Program Plan
Competitive Grants
District Level
Distinction, Performance, Improvement, Priority Improvement, Turnaround
Targeted District Improvement Grant (TDIP)
Implementation Support Partnership (ISP)
Other grants reference the UIP

Intent, Power, Possibility and Purpose of the UIP
Theory of Action: Continuous Improvement
FOCUS

How Has the UIP Process Focused Your School Improvement Efforts?
Alex Magaña, Principal, Grant Beacon Middle School
“The UIP reminds us to do what we expect from our teachers…analyze the data, set goals, develop action steps and measure the results. Here at GBMS we align our UIP to the professional development, SGO's and data team process. Although we have set up our plan there are times we have not met our goals. This allows us take a step back and talk about why we did not meet the goal. For example, we were going to incorporate small group instruction in our reading classes but did not provide enough PD to support the goal.”

Intended Session Outcomes
Participants will understand:
• The district and state SPF.
• The purpose, power and possibility of the UIP.
• The accountability measures associated with the UIP.
• How to develop each component of the UIP template.
• How local, state and federal accountability connect to the UIP process.
• The state and district tools and resources available to assist school leaders in engaging in UIP work.

Purpose of the UIP
• Provide a framework for performance management.
• Support school and district use of performance data to improve system effectiveness and student learning.
• Shift from planning as an event to continuous improvement.
• Meet state and federal accountability requirements.
• Give external stakeholders a way to learn about how schools and districts are making improvements.
• Serve as a 2-year strategic plan.

Structure and Components of the UIP Template
Major Sections:
I. Summary Information about the School (pre-populated template)
II. Improvement Plan Information
III. Narrative on Data Analysis and Root Cause Identification
IV. Action Plan(s)
V. Appendices (addenda forms)

State SPF: Pre-Populated UIP Template

Unified Improvement Planning Processes
[Diagram. Steps: Gather and Organize Data; Review Performance Summary; Describe Notable Trends; Prioritize Performance Challenges; Identify Root Causes; Set Performance Targets; Identify Major Improvement Strategies; Identify Interim Measures; Identify Implementation Benchmarks. Phases: Data Analysis (Data Narrative); Target Setting; Action Planning; Progress Monitoring.]

Data Narrative as a Repository
Data Narrative: A Repository
School A has ….

| TCAP | Reading | Writing | Math | Science |
|------|---------|---------|------|---------|
| 2007 | 38 | 28 | 18 | 16 |
| 2008 | 41 | 29 | 19 | 15 |
| 2009 | 45 | 30 | 40 | 14 |
| 2010 | 41 | 31 | 40 | 17 |
| 2011 | 38 | 29 | 39 | 20 |

[Diagram: UIP Data and Information. Description of School & Data Analysis Process; Review Current Performance; Trend Analysis: Worksheets 1 & 2; Action Plans; Target Setting/Action Planning Forms.]

Data Narrative Purpose:
The purpose of the data narrative is to describe the process and results of the analysis of the data for school improvement. It serves as a repository for everything you do in the UIP process.

Elements to Include in the Data Narrative:
• Description of the School Setting and Process for Data Analysis
• Review Current Performance
• State & Federal Accountability Expectations
• Progress Towards Last Year’s Targets
• Trend Analysis
• Priority Performance Challenges
• Root Cause Analysis
Throughout the school year, capture the following in the data narrative:
• Progress Monitoring (Ongoing)

UIP 101 Road Map
√ Overview of the UIP Process
√ Structure and Components of the UIP Template
Developing a UIP
√ Data Narrative
School Performance Frameworks and the UIP
Data Analysis: Review Past Performance/Describe Trends/Performance Challenges/Root Causes
Action Plans: Target Setting/Action Planning/Progress Monitoring
Leadership Considerations & Resources
School Type & Title I
UIP Timeline & DPS/CDE Resources

Step 2. Review Current Performance
DPS School Performance Framework (SPF)

What is the DPS SPF?
A comprehensive annual review of school performance.
• Provides a body of evidence related to student growth and achievement and overall organizational strength using a variety of measures
• Is the basis of mandatory school accreditation ratings
• Aligns district goals, state requirements, and federal mandates
• Provides information for teacher and principal compensation systems
• Made public for the Denver community and is a factor in enrollment decisions

5 Possible Ratings
Based on the percentage of overall points earned, schools receive one of five possible SPF ratings:

| Rating | Percentage of overall points |
|--------|------------------------------|
| DISTINGUISHED | 80% - 100% |
| MEETS EXPECTATIONS | 51% - 79% |
| ACCREDITED ON WATCH | 40% - 50% |
| ACCREDITED ON PRIORITY WATCH | 34% - 39% |
| ACCREDITED ON PROBATION | UP TO 33% |

Consider this: What about schools that combine grade levels? (i.e., elementary and middle schools in a K-8th grade format, and middle and high schools in a 6th-12th grade format)

2012 DPS SPF Overall Rating Categories
[Chart: share of elementary (ES), middle (MS) and high (HS) schools in each rating category. Values in slide order: 8% ES, 22% MS, 8% HS; 53% ES, 35% MS, 33% HS; 20% ES, 22% MS, 38% HS; 8% ES, 9% MS, 4% HS; 11% ES, 13% MS, 17% HS.]
*Alternative Schools excluded

Indicators
>> Academic Growth
>> Academic Status
>> Post-Secondary Readiness Growth *
>> Post-Secondary Readiness Status *
>> Student Engagement & Satisfaction
>> Enrollment
>> Parent Satisfaction
Consider this: Should all areas be measured equally? What weight would you assign to each category?
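The five rating bands on the “5 Possible Ratings” slide amount to a simple lookup on the percentage of points earned. The sketch below is only an illustration: the function name `spf_rating` is hypothetical, and because the slide does not say how a fractional percentage that falls between bands (for example 50.5%) is handled, the code assumes each band's lower bound is the cutoff.

```python
# Lower bound of each band (percent of overall points) and its rating,
# copied from the "5 Possible Ratings" slide.
RATING_BANDS = [
    (80.0, "Distinguished"),
    (51.0, "Meets Expectations"),
    (40.0, "Accredited on Watch"),
    (34.0, "Accredited on Priority Watch"),
    (0.0, "Accredited on Probation"),
]


def spf_rating(points_earned, points_possible):
    """Return the DPS SPF rating for a share of overall points earned.

    Assumption (not stated in the deck): a percentage is placed in the
    highest band whose lower bound it reaches, without rounding first.
    """
    if points_possible <= 0:
        raise ValueError("points_possible must be positive")
    percent = 100.0 * points_earned / points_possible
    for lower_bound, rating in RATING_BANDS:
        if percent >= lower_bound:
            return rating
    return RATING_BANDS[-1][1]  # negative input falls in the lowest band


if __name__ == "__main__":
    print(spf_rating(45, 100))
```

A school earning 45 of 100 possible points would land in the 40% - 50% band under this reading.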
DPS 2012 SPF Indicator Weights
[Stacked bar chart: 2012 indicator weights for Elementary, Middle, High and Alternative schools. Series: Growth, Status, PSR (Post-Secondary Readiness) Growth, PSR Status, Student Engagement, School Demand, Parent Engagement.]

DPS Sample Stoplight Scorecard: Indicators

Indicator Total Points Come From the Measures
1) ACADEMIC GROWTH: 10 Measures (Alt SPF = 2)
2) ACADEMIC STATUS: 7 Measures (Alt SPF = 1)
3) POST-SECONDARY READINESS GROWTH *: 9 Measures
4) POST-SECONDARY READINESS STATUS *: 11 Measures
5) STUDENT ENGAGEMENT & SATISFACTION: 3 Measures
6) ENROLLMENT: 3 Measures
7) PARENT SATISFACTION: 2 Measures
Alt SPF = 7; Alt SPF PSR = 7
Consider this: What indicators do the Elementary/Middle/High School grades have in common?

Adding up the Points
[Flow: Data Collection & Aggregation > Apply SPF Rubrics > Measure Points & Stoplight > Sum and Apply Cut-Offs > Indicator Total Stoplight > Sum and Apply Cut-Offs > Overall Total Accreditation Rating. Stoplight legend: Exceeds Standard, Meets Standard, Approaching Standard, Does Not Meet Standard.]

DPS SPF: Based on 2-Years of Data
Example: TCAP Median Growth Percentile
2011: MGP=40 (Approaching)
2012: MGP=60 (Meets)
2012 Measure Rating: Approaching

Computation Process: Based on 2-Years of Data

Cutpoints
1.1 a-c Median Growth Percentile: Was the school's CSAP median growth percentile at or above 50?

| Points | Rating | Criterion |
|--------|--------|-----------|
| 0 | Does not meet standard | The median growth percentile was less than 35. |
| 2 | Approaching standard | The median growth percentile was at or above 35 and less than 50. |
| 4 | Meets standard | The median growth percentile was at or above 50 and less than 65. |
| 6 | Exceeds standard | The median growth percentile was 65 or higher. |

Two-year rubric

| Year 1 (rows) / Year 2 (columns) | 0. Does not meet | 2. Approaching | 4. Meets | 6. Exceeds |
|----------------------------------|------------------|----------------|----------|------------|
| 0. Does not meet | 0. Does not meet | 0. Does not meet | 2. Approaching | 2. Approaching |
| 2. Approaching | 0. Does not meet | 2. Approaching | 2. Approaching | 4. Meets |
| 4. Meets | 2. Approaching | 2. Approaching | 4. Meets | 4. Meets |
| 6. Exceeds | 2. Approaching | 4. Meets | 4. Meets | 6. Exceeds |

DPS Sample Detail Scorecard: Measures
Consider this: What does Measure 1.2 mean by “Similar School”?

DPS Similar Schools Clusters
The DPS SPF provides information on how each school performs relative to similar schools in the district.
The School Characteristics Indicator is a weighted calculation: FRL (40%) + ELL (20%) + SpEd (20%) + Mobility* (20%)
• Schools are then rank-ordered by Ed Level and compared with 10 schools that are closest to them.
– Clusters are custom for each school.
*Mobility is defined as the total number of students who entered or left the school after 10/1 divided by the number of students in the school as of 10/1.

Similar Schools Calculation: How it Works

| School Name | % FRL | % ELL | % SpEd | % Mobility | School Characteristics Indicator |
|-------------|-------|-------|--------|------------|----------------------------------|
| School A | 30.35% | 16.47% | 7.06% | 13.00% | 19.45 |
| School B | 37.82% | 14.26% | 6.34% | 20.00% | 23.25 |
| School C | 34.03% | 36.39% | 5.76% | 7.00% | 23.44 |
| School D | 49.19% | 10.93% | 9.92% | 14.00% | 26.65 |
| School E | 51.92% | 21.39% | 5.12% | 4.75% | 27.02 |
| School F | 47.92% | 17.25% | 12.14% | 12.00% | 27.45 |
| School G | 52.22% | 17.21% | 8.32% | 10.83% | 28.16 |
| School H | 50.86% | 17.08% | 15.55% | 12.00% | 29.27 |
| School I | 57.02% | 24.47% | 13.83% | 9.00% | 32.27 |
| School J | 56.98% | 20.16% | 8.91% | 18.99% | 32.40 |
| School K | 62.50% | 13.78% | 13.78% | 14.00% | 33.31 |
| School L | 60.57% | 26.49% | 7.19% | 15.00% | 33.97 |

School F’s Cluster
FRL(40%) + ELL(20%) + SpEd(20%) + Mobility(20%) = School Characteristics Indicator

DPS Sample Detail Scorecard: Measures
Consider this: What is a Median Growth Percentile? What is Catch Up and Keep Up?

Growth Percentiles
• In order to receive a growth percentile, students need a valid English CSAP/TCAP score over two consecutive years with a typical grade level progression (e.g., third grade to fourth grade).
• Each student receives a growth percentile indicating how much growth they achieved in the current year compared to other students who earned similar scores in prior years.
A growth percentile of 50 is considered “typical” growth.
• Every student’s growth percentile is then rank-ordered and the middle score, or median, for the population is identified. This is the median growth percentile (MGP).
• For accountability purposes (i.e., inclusion in the SPF), students need to have been enrolled in the same school since October 1 of the same school year.

Catch-Up & Keep-Up Growth
• Includes all students who took TCAP for two consecutive years.
• Different from the state’s Catch-Up and Keep-Up.
▫ DPS Catch-up: the percentage of students transitioning from a lower to a higher performance level from one year to the next.
▫ DPS Keep-up: the percentage of students who stayed in the proficient and advanced categories or moved from proficient to advanced.
▫ The state’s measure is the progress needed to be proficient in 3 years or by 10th grade.
• This measure is limited to TCAP Reading, Math, and Writing.
Excellent training on MGPs, Keep Up and Catch Up can be found at the CDE website: http://www.cde.state.co.us/media/training/SPF_Online_Tutorial/player.html

DPS Sample Detail Scorecard: Measures
Consider this: What measures look at your English Language Learners? Proficiency Gaps?

DPS SPF – Language of Assessment

Language of Assessment – Performance over time
[Chart: CELA Proficiency Compared to TCAP Proficiency – Reading/Lectura. Percent proficient or better (%P+) on 3rd grade TCAP Lectura and on 3rd, 4th and 5th grade TCAP Reading (English), by 2nd grade CELA level (CELA 1, CELA 2, CELA 3).]
Note: ELLs who were in 2nd grade in either 2006-07, 2007-08, or 2008-09 and took CELA were combined into one cohort and tracked forward to 3rd, 4th and 5th grades. Students opted out of ELA services by their parents were not included in the population.
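The calculations described in this part of the deck (the School Characteristics Indicator, the median growth percentile, the MGP cutpoints, and the two-year rubric) can be worked through in a few lines of Python. This is a sketch under stated assumptions, not DPS's implementation: all function names are hypothetical, the two-year lookup is read off the flattened rubric matrix on the slide, and the median of an even-sized group is taken as the average of the two middle values, which the deck does not specify.

```python
from statistics import median


def school_characteristics_indicator(frl, ell, sped, mobility):
    """Weighted sum from the slide: FRL 40%, ELL 20%, SpEd 20%, Mobility 20%.

    Inputs are percentages, e.g. 47.92 for 47.92%.
    """
    return round(0.40 * frl + 0.20 * ell + 0.20 * sped + 0.20 * mobility, 2)


def median_growth_percentile(student_growth_percentiles):
    """Middle value of the rank-ordered student growth percentiles (MGP)."""
    return median(student_growth_percentiles)


def rubric_points(mgp):
    """One-year rubric points for measure 1.1 (cutpoints 35, 50, 65)."""
    if mgp < 35:
        return 0  # Does not meet standard
    if mgp < 50:
        return 2  # Approaching standard
    if mgp < 65:
        return 4  # Meets standard
    return 6      # Exceeds standard


# Two-year rubric as read from the slide; the matrix is symmetric, so the
# order of the two years does not change the result.
TWO_YEAR_RUBRIC = {
    (0, 0): 0, (0, 2): 0, (0, 4): 2, (0, 6): 2,
    (2, 0): 0, (2, 2): 2, (2, 4): 2, (2, 6): 4,
    (4, 0): 2, (4, 2): 2, (4, 4): 4, (4, 6): 4,
    (6, 0): 2, (6, 2): 4, (6, 4): 4, (6, 6): 6,
}

LABELS = {0: "Does not meet", 2: "Approaching", 4: "Meets", 6: "Exceeds"}


def two_year_points(year1_mgp, year2_mgp):
    """Combine two years of MGPs into one measure rating (in points)."""
    return TWO_YEAR_RUBRIC[(rubric_points(year1_mgp), rubric_points(year2_mgp))]


if __name__ == "__main__":
    # School F from the Similar Schools table.
    print(school_characteristics_indicator(47.92, 17.25, 12.14, 12.00))
    # The deck's example: MGP of 40 in 2011 and 60 in 2012.
    print(LABELS[two_year_points(40, 60)])
```

Run against the deck's own numbers, the sketch reproduces School F's indicator and the “Approaching” rating from the two-year example.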
Language of Assessment – Performance over time
CELA Proficiency Compared to TCAP Reading Growth (MGPs)
Students who scored at CELA Levels 1, 2, or 3 and took TCAP in English showed lower than typical (50th percentile) growth in 4th grade.

| Grade 2 Year | CELA Level 1: Grade 4 TCAP Reading MGP | N | CELA Level 2: Grade 4 TCAP Reading MGP | N | CELA Level 3: Grade 4 TCAP Reading MGP | N | Total: Grade 4 TCAP Reading MGP | N |
|------|------|-----|------|-----|------|-------|------|-------|
| 2007 | 33.5 | 116 | 40.0 | 248 | 47.0 | 445 | 42.0 | 809 |
| 2008 | 36.5 | 110 | 52.0 | 257 | 52.0 | 491 | 49.0 | 858 |
| 2009 | 25.0 | 79 | 42.0 | 259 | 45.0 | 380 | 42.0 | 718 |
| 2010 | 39.5 | 56 | 42.0 | 232 | 52.0 | 385 | 48.0 | 673 |
| Total | 32.0 | 361 | 43.0 | 996 | 49.0 | 1,701 | 45.0 | 3,058 |

Data display the 4th grade MGPs for students who scored at CELA Levels 1, 2, or 3 in 2nd grade and took TCAP Reading (English test) in 3rd grade. The population includes 2nd graders in either 2007, 2008, 2009, or 2010 with growth scores in 4th grade (2009, 2010, 2011, or 2012).

Language of Assessment – Dispelling the SPF Myth

Accountability Measures
• ELLs who take the TCAP Lectura in 3rd Grade, rather than the TCAP Reading, are more likely to have a positive impact on SPF scores.
– TCAP Lectura scores count toward both DPS and state SPF Status measures as much as TCAP Reading scores.
– Students who take the TCAP Lectura in 3rd Grade are removed from DPS and state SPF growth measures in 4th Grade (i.e., they do not count against a school).

TCAP State Testing Requirements
• Students qualify for the Lectura/Escritura and/or the Oral Translation accommodation if:
– The student has been enrolled in a Colorado Public School for less than three years in first grade or later (kindergarten is not counted in the three years).
– The student has been receiving native language instruction within the last 9 months.
• Decisions regarding the language of assessment should be made based on a body of evidence and in accordance with what is best for the student.

State School Performance Framework (SPF)

What is the State SPF and DPF?
Colorado Educational Accountability Act of 2009 (SB09-163)
• Colorado Dept. of Education annually evaluates districts and schools based on student performance outcomes.
• All districts receive a District Performance Framework (DPF). This determines their accreditation rating.
• All schools receive a School Performance Framework (SPF). This determines their school plan types.
• Provides a common framework through which to understand performance and focus improvement efforts
– A statewide comparison that highlights performance strengths and areas for improvement.

State SPF: 4 Possible Plan Types, 5 District Designations

Plan Types
• Performance Plan
• Improvement Plan
• Priority Improvement Plan
• Turnaround Plan

District Accreditation Designations
• Accredited with Distinction
• Accredited
• Accredited with Improvement Plan
• Accredited with Priority Improvement Plan (DPS 2012 Accreditation Rating)
• Accredited with Turnaround Plan

Consider this: How many times can a school or district be on Priority Improvement or Turnaround?

State SPF: Accountability Clock
• Once a school is rated as either turnaround or priority improvement (red or orange) on CDE’s SPF, it enters a 5-year clock.
• Schools may not implement a Priority Improvement or Turnaround Plan for longer than five consecutive years before facing action directed by the State Board: i.e., the district is required to restructure or close the school.
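The accountability clock is a count of consecutive years on a Priority Improvement or Turnaround plan, capped at five. The sketch below shows one way to track it. The function names are hypothetical, and the reset rule is an assumption: the slide only says “five consecutive years”, so the code treats any year on a Performance or Improvement plan as ending the run.

```python
from typing import Sequence

CLOCK_PLAN_TYPES = {"Priority Improvement", "Turnaround"}
MAX_CONSECUTIVE_YEARS = 5  # from the Accountability Clock slide


def consecutive_years_on_clock(plan_types_by_year: Sequence[str]) -> int:
    """Count the most recent unbroken run of clock years.

    plan_types_by_year is ordered oldest to newest.
    Assumption: a single year off the clock resets the count.
    """
    count = 0
    for plan_type in reversed(plan_types_by_year):
        if plan_type not in CLOCK_PLAN_TYPES:
            break
        count += 1
    return count


def years_remaining(plan_types_by_year: Sequence[str]) -> int:
    """Years left before the five-consecutive-year limit is reached."""
    used = consecutive_years_on_clock(plan_types_by_year)
    return max(0, MAX_CONSECUTIVE_YEARS - used)


if __name__ == "__main__":
    history = ["Improvement", "Priority Improvement", "Turnaround"]
    print(consecutive_years_on_clock(history), years_remaining(history))
```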
2012 State SPF: DPS Schools' Plan Types
[Chart: share of schools by state plan type (Performance, Improvement, Priority Improvement, Turnaround) for DPS and for Colorado. Values shown: DPS 58%, 25%, 8%, 9%; Colorado 71%, 20%, 7%, 2%.]
*Alternative Schools excluded

2012 CDE Performance Indicators

Achievement: Percent proficient and advanced
• Reading (CSAP, Lectura, and CSAPA)
• Writing (CSAP, Escritura, and CSAPA)
• Math (CSAP and CSAPA)
• Science (CSAP and CSAPA)

Growth: Normative and Criterion-Referenced Growth
• CSAP Reading, Writing and Math
• CELApro
• Median Student Growth Percentiles
• Adequate Median Student Growth Percentiles

Growth Gaps: Median Student Growth Percentiles and Median Adequate Growth Percentiles for disaggregated groups:
• Poverty
• Race/Ethnicity
• Disabilities
• English Language Learners
• Below proficient

Postsecondary and Workforce Readiness
• Colorado ACT
• Graduation Rate (overall and for disaggregated groups)
• Dropout Rate

State SPF: Performance Indicator Weights

State SPF: 1 year vs. 3 year data
• CDE provides two different versions of the School Performance Framework Reports:
▫ One Year SPF: the most recent year of data
▫ Three Year SPF: the most recent three years of data
• Only one report counts for official accountability purposes:
▫ The one covering a higher number of the performance indicators, or
▫ The one under which the school received a higher total number of points.

CDE vs. DPS SPF

CDE vs. DPS SPF Key Differences
1) The DPS framework includes metrics not collected by the state;
2) It evaluates growth using a broader definition, including measures that evaluate the growth of advanced students; and,
3) It rates each metric based on two consecutive years of performance, as compared to CDE’s approach of using either one or three years of data.

CDE vs. DPS SPF Key Differences
• Rating differences: Several schools receive lower ratings on the district’s framework than on the state’s framework.
• Cut Points – “The Standard”: For the district, these cuts are based largely on the distribution of total points earned by all district schools. Since these cuts are informed by the district’s distribution, the placement of these cuts would invariably differ from cuts set by CDE using information from the distribution of all schools in the state.

DPS & CDE SPF Ratings Crosswalk

| DPS SPF Rating | CDE SPF Rating |
|----------------|----------------|
| Distinguished | Performance |
| Meets Expectations | Performance |
| Accredited on Watch | Improvement |
| Accredited on Priority Watch | Priority Improvement |
| Accredited on Probation | Turnaround |

Looking at the 2011-12 SPF:
• DPS’ SPF rating was higher than the state’s 10% of the time.
• The state’s SPF rating was higher than DPS’ 28% of the time.

DPS SPF vs. State SPF

| DPS SPF Indicators | CDE SPF Indicators |
|--------------------|--------------------|
| Academic Achievement (Status) | Academic Achievement |
| Academic Growth | Academic Growth |
| Academic Growth Gaps | Academic Growth Gaps |
| Postsecondary Readiness (Status) | Postsecondary & Workforce Readiness |
| Postsecondary Readiness (Growth) | n/a |
| Student Engagement & Satisfaction | n/a |
| Re-Enrollment | n/a |
| Parent Engagement | n/a |

DPS SPF Locations
• http://spf.dpsk12.org
• ARE Homepage: http://testing.dpsk12.org/ > School Accountability > School Performance Framework
• DPS Homepage: http://www.dpsk12.org/ > About DPS > School Performance Framework

CDE Website
http://www.schoolview.org
• District: Denver County 1
• School: (Select)
• School Report: Improvement Plan; 2012 1 year SPF; 2012 3 year SPF

Principal Portal
https://principal.dpsk12.org

Lunch Break
Lunch: 1 hour

UIP 101 Road Map
√ Overview of the UIP Process
√ Structure and Components of the UIP Template
Developing a UIP
√ Data Narrative
√ School Performance Frameworks and the UIP
Data Analysis: Review Past Performance/Describe Trends/Performance Challenges/Root Causes
Action Plans: Target Setting/Action Planning/Progress Monitoring
Leadership Considerations & Resources
School Type & Title I
UIP Timeline & DPS/CDE Resources

Data Analysis (Worksheets #1 and #2)
[Diagram labels: Review Performance; Consider Prior Year’s Performance; Describe Notable Trends; Prioritization of Performance Challenges; Initial Root Cause Analysis; Root Cause Analysis.]

Review Prior Year’s Performance (Worksheet #1)
List targets set for last year.
Denote the following:
• Whether the target was met or not;
• How close the school/district was to meeting the target; and
• To what degree current performance supports continuing with current major improvement strategies and action steps.

Prior Year’s Performance: DPS Example

UIP 101 Road Map
√ Overview of the UIP Process
√ Structure and Components of the UIP Template
√ New Updates to the UIP Process
Developing a UIP
√ Data Narrative
√ School Performance Frameworks and the UIP
Data Analysis: √ Review Past Performance/Describe Trends/Performance Challenges/Root Causes
Action Plans: Target Setting/Action Planning/Progress Monitoring
Leadership Considerations & Resources
School Type & Title I
UIP Timeline & DPS/CDE Resources

Data Locations

| Indicator | Measure | Location |
|-----------|---------|----------|
| Academic Achievement (Status) | TCAP, CoAlt, CELA & ACCESS, DRA/EDL2, GPAs, MAP, STAR, Assessment Frameworks | Principal Portal - SPF Drill Down; School Folders (CoAlt) |
| Academic Growth | Median Student Growth Percentile | Principal Portal - SPF Drill Down - School level by Content Area - School level by grade |
| Academic Growth Gaps | Median Student Growth Percentile | CDE (SchoolView.org) - Sub-groups |
| Student Engagement | Attendance; School Satisfaction Survey; Student Perception Survey | Principal Portal; School Folders; ARE Website |

UIP Data Locations

| Indicator | Measure | Location |
|-----------|---------|----------|
| Post Secondary and Work Force Readiness | Graduation Rate; Dropout Rate; College Remediation Rate | Principal Portal |
| | Disaggregated Graduation Rate | CDE (SchoolView.org) - School Performance – Data Center |
| | Mean ACT Composite | Principal Portal (2013) |
| | Advanced Placement | School Folders |

Data Websites
• Principal Portal http://principal.dpsk12.org/
• School Folders https://are.dpsk12.org/assessapps/
• CDE http://www.schoolview.org/
• SchoolNet https://schoolnet.dpsk12.org/

Step 2: Identify Trends IL 1
• Include all performance indicator areas.
• Identify indicators* where the school did not at least meet state and federal expectations.
• Include at least three years of data (ideally 5 years).
• Consider data beyond that included in the school performance framework (grade-level, sub-group, local data).
• Include positive and negative performance patterns.
• Include information about what makes the trend notable.
* Indicators listed on pre-populated UIP template include: status, growth, growth gaps and postsecondary/workforce readiness

Writing Trend Statements:
1. Identify the measure/metrics.
2. Describe for which students (grade level and disaggregated group).
3. Describe the time period.
4. Describe the trend (e.g., increasing, decreasing, flat).
5. Identify for which performance indicator the trend applies.
6. Determine if the trend is notable and describe why.

Trends Could be:
• Stable
• Increasing
• Decreasing
• Increasing then decreasing
• Decreasing then increasing
• Flat then increasing
• Flat then decreasing
What other patterns could staff see in three years of data?

What makes a trend notable?
• In comparison to what…
• Criterion-based: How did we compare to a specific expectation?
▫ Minimum state expectations
▫ Median adequate growth percentiles
• Normative: How did we compare to others?
▫ District or state trends for the same metric over the same time period.
▫ For disaggregated groups, to the school over-all
▫ By standard to the content area over-all

Examples of Notable Trends
• The percent of 4th grade students who scored proficient or advanced on math TCAP/CSAP declined from 70% to 55% to 48% between 2009 and 2011, dropping well below the minimum state expectation of 71%.
• The median growth percentile of English Language learners in writing increased from 28 to 35 to 45 between 2009 and 2011, meeting the minimum expectation of 45 and exceeding the district trend over the same time period.
• The dropout rate has remained relatively stable (15, 14, 16) and much higher than the state average between 2009 and 2011.

Data Analysis: DPS Example
Trends: What did they get right? What can be improved?

Data Analysis: DPS Example w/ Table & Graph

Disaggregating Data IL 2
Review Current Performance by Sub-Group
• Status
  • Principal Portal - TCAP
• Growth
  • Schoolview.org - CDE Growth Summary
• Academic Growth Gaps
  • Schoolview.org - CDE Growth Gaps Report
Is there a difference among groups?
• More than 10% difference?
Yes: Analyze more data to understand differences.
Example data to review:
• CELA Trajectory
• Continuously Enrolled
• Frameworks
• District SPF Measures
• CEL 1&2
• Follow steps for Prioritizing Performance Challenges.
No: STOP!
• Step back and look for patterns in performance.
• Denote that performance challenge spans across sub-groups.
• Follow steps for Prioritizing Performance Challenges.

Analyzing Trends: Keep in Mind…
• Be patient and hang out in uncertainty
• Don’t try to explain the data
• Observe what the data actually shows
• No “Because”

How to Describe Trends
1. Start with a performance focus and relevant data report(s).
2. Make predictions about performance.
3. Interact with data (at least 3 years).
4. Look for things that pop out, with a focus on patterns over time (at least three years).
5. Capture a list of observations about the data in Data Analysis worksheet (positive or negative).
6. Write trend statements.
7. Identify which trends are significant (narrow) and which require additional analysis.

Make Notes for Data Narrative
Guiding Questions
• In which performance indicators did school performance not at least meet state expectations?
• What data did the planning team review?
• Describe the process in which your team engaged to analyze the school’s data.
• What were the results of the analysis (which trends were identified as significant)?
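The trend patterns listed under “Trends Could be” and the “More than 10% difference?” check for sub-groups can be expressed as a small classifier over three years of data. This is an illustrative sketch only: the function names are hypothetical, the 2-point tolerance for calling a year-to-year change “flat” is an assumption the deck does not give, and the 10% check is read here as 10 percentage points.

```python
# Year-over-year direction pairs mapped to trend labels. The first seven
# labels come from the "Trends Could be" slide; the last two are other
# patterns that three years of data can show.
PATTERNS = {
    ("flat", "flat"): "Stable",
    ("up", "up"): "Increasing",
    ("down", "down"): "Decreasing",
    ("up", "down"): "Increasing then decreasing",
    ("down", "up"): "Decreasing then increasing",
    ("flat", "up"): "Flat then increasing",
    ("flat", "down"): "Flat then decreasing",
    ("up", "flat"): "Increasing then flat",
    ("down", "flat"): "Decreasing then flat",
}


def describe_trend(values, flat_tolerance=2.0):
    """Classify three consecutive yearly values into a trend label.

    flat_tolerance is an assumed threshold: a change of this size or
    smaller (in the metric's own units) counts as flat.
    """
    if len(values) != 3:
        raise ValueError("expected exactly three yearly values")

    def direction(delta):
        if abs(delta) <= flat_tolerance:
            return "flat"
        return "up" if delta > 0 else "down"

    first = direction(values[1] - values[0])
    second = direction(values[2] - values[1])
    return PATTERNS[(first, second)]


def subgroup_gap_exceeds(group_value, comparison_value, threshold=10.0):
    """True when two groups differ by more than the threshold (points)."""
    return abs(group_value - comparison_value) > threshold


if __name__ == "__main__":
    print(describe_trend([70, 55, 48]))   # 4th grade math example
    print(describe_trend([15, 14, 16]))   # dropout rate example
```

Applied to the deck's examples, the 4th grade math series (70, 55, 48) is classified as decreasing and the dropout series (15, 14, 16) as stable. The label alone does not make a trend notable; that still depends on the comparison to an expectation or to other groups.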
UIP 101 Road Map
√ Overview of the UIP Process
√ Structure and Components of the UIP Template
Developing a UIP
√ Data Narrative
√ School Performance Frameworks and the UIP
Data Analysis: √ Review Past Performance/√ Describe Trends/Performance Challenges/Root Causes
Action Plans: Target Setting/Action Planning/Progress Monitoring
Leadership Considerations & Resources
School Type & Title I
UIP Timeline & DPS/CDE Resources

Priority Performance Challenges

| Priority performance challenges are… | Priority performance challenges are NOT… |
|--------------------------------------|------------------------------------------|
| Specific statements (notable trend statements) about performance | What caused or why we have the performance challenge |
| Strategic focus for the improvement efforts | Action steps that need to be taken |
| About the students | About the adults |
| | Concerns about budget, staffing, curriculum, or instruction |

Priority Performance Challenges Examples
• The percent of fifth grade students scoring proficient or better in mathematics has declined from 45% three years ago, to 38% two years ago, to 33% in the most recent school year.
• For the past three years, English language learners (making up 60% of the student population) have had median growth percentiles below 30 in all content areas.
• Math achievement across all grade-levels and all disaggregated groups over three years is persistently less than 30% proficient or advanced.

Priority Performance Challenges Non-Examples
• To review student work and align proficiency levels to the Reading Continuum and Co. Content Standards
• Provide staff training in explicit instruction and adequate programming designed for intervention needs.
• Implement interventions for English Language Learners in mathematics.
• Budgetary support for para-professionals to support students with special needs in regular classrooms.
• No differentiation in mathematics instruction when student learning needs are varied.

Prioritizing Performance Challenges
1. Clarify indicator areas where performance challenges must be identified (where school performance did not at least meet state/federal expectations).
2. Start with one indicator area, consider all negative trends.
3. Focus the list (combining similar trends) (i.e., If you have declining performance…in ELLs, SPED
4. Do a reality check (preliminary prioritization by dot voting on the tree map).
5. Achieve consensus about top priorities (consider using the REAL criteria, see the UIP Handbook).
6. Record on Data Analysis Worksheet.

Capture in Data Narrative
Guiding Questions
1. In which performance indicators did school performance not at least meet state expectations?
2. Who was involved in identifying trends and prioritizing performance challenges?
3. What data did the planning team review?
4. In what process did the planning team engage to analyze the school’s data?
5. What were the results of the analysis (which trends were identified as significant)?
6. How were performance challenges prioritized?
7. What were identified as priority performance challenges for the 2011-12 school year?

UIP 101 Road Map
√ Overview of the UIP Process
√ Structure and Components of the UIP Template
Developing a UIP
√ Data Narrative
√ School Performance Frameworks and the UIP
Data Analysis: √ Review Past Performance/√ Describe Trends/√ Performance Challenges/Root Causes
Action Plans: Target Setting/Action Planning/Progress Monitoring
Leadership Considerations & Resources
School Type & Title I
UIP Timeline & DPS/CDE Resources

What is a Root Cause? IL 1
• Root causes are statements that describe the deepest underlying cause, or causes, of performance challenges.
• They are the causes that, if dissolved, would result in elimination, or substantial reduction, of the performance challenge(s).
• Root causes describe WHY the performance challenges exist.
• Things we can change and need to change.
• The focus of our major improvement strategies.
• About adult action.
Focus of Root Cause Analysis
• Root cause analysis is always focused on student performance.
• It answers the question: What adult actions explain the student performance that we see?
• Root cause analysis can focus on positive or negative trends.
• In this case the focus is on “challenges”.

Steps in Root Cause Analysis
1. Focus on a performance challenge (or closely related performance challenges).
2. Consider External Review results (or categories).
3. Generate explanations (brainstorm).
4. Categorize/classify explanations.
5. Narrow (eliminate explanations over which you have no control) and prioritize.
6. Deepen thinking to get to a “root” cause.
7. Validate with other data.

Root Cause Activity
Priority Performance Challenge: Little Johnny, who is in the fifth grade, habitually gets up late in the morning for school. As a result Johnny is late for his 1st class period 10 days in a row.
• Work with your table partners to determine the potential root causes for Johnny’s behavior. (7 minutes)

From Priority Performance Challenge to Root Cause

| Level | Priority Performance Challenge | Root Cause |
|-------|--------------------------------|------------|
| Systemic | There has been an overall decline in academic achievement in reading, writing and math from 2007-2011 for all grades (K-8). | The RTI process has not been implemented with fidelity. |
| Programmatic | There has been a decline in achievement in reading, math and writing for 4th grade from 2007-2011. | Instruction has not been differentiated to meet the needs of the 4th grade student. |
| Incident or Procedural | 4th graders did not demonstrate the multiplication concept on the last Everyday Math Exam. | The teachers did not teach multiplication concepts in the last EDM unit. |

Verify Root Causes (example)
Priority Performance Challenge: The % proficient/adv students in reading has been substantially above state expectations in 3rd grade but substantially below and stable (54%, 56%, 52%) in 4th and 5th for the past three years.

| Possible Root Causes | Questions to Explore | Data Sources | Validation |
|----------------------|----------------------|--------------|------------|
| K-3 is using new teaching strategies, 4-5 are not. | What strategies are primary vs. intermediate teachers using? | Curriculum materials and instructional plans for each grade. | K-3 strategies are different from 4-5. |
| Less time is given to direct reading instruction in 4-5. | How much time is devoted to reading in primary v. intermediate grades? | Daily schedule in each grade level. | No evidence that less time is devoted to reading in 4-5. |

Capture in Data Narrative
Guiding Questions
• What data and evidence were used to determine root causes?
• What process was used to identify these? (e.g., 5 Whys?)
• What process and data were used to verify these? (e.g., reviewed curriculum, teacher observations, interim assessments)

UIP 101 Road Map
√ Overview of the UIP Process
√ Structure and Components of the UIP Template
√ New Updates to the UIP Process
Developing a UIP
√ Data Narrative
√ School Performance Frameworks and the UIP
√ Data Analysis: √ Review Past Performance/√ Describe Trends/√ Performance Challenges/√ Root Causes
Action Plans: Target Setting/Action Planning/Progress Monitoring
Leadership Considerations & Resources
School Type & Title I
UIP Timeline & DPS/CDE Resources

Setting Targets SL 1
(Action Planning Tools, p. 9)
1. Focus on a priority performance challenge.
2. Review state or local expectations.
3. Determine YOUR timeframe* (max 5 years).
4. Determine progress needed in next two years.
5. Describe annual targets for two years.
*See accountability clock slide above.

Minimum State Expectations
• The value for which a rating of “meets” would be assigned for the state metric in each measure.
• Review state expectations on the first two pages of your pre-populated UIP template.
Example:
• Postsecondary and Workforce Readiness:
  • Graduation rate should be at or above 80%

DPS District Accountability
DPS’ goal is to close or significantly reduce the academic achievement and postsecondary readiness gaps between DPS and the state by 2015.
DPS’ SPF is used to evaluate school performance as schools pursue the district’s goal. School-specific targets have been established that represent each school’s share of the district’s goal annually through 2015. Schools should strive to achieve these targets at minimum; however, some schools will need to set higher targets. (See School Folders for your school’s targets.)

Example of School UIP Targets in School Folders

Setting Academic Achievement Targets
Practice Activity:
1. Review your school’s performance for the 2012-2013 school year. Find one area where you are not meeting district or state expectations.
2. How long would it take for your school to meet CDE expectations? (at most 5 years)
3. How much progress can you make in the next two years?
This is the process you will use to set your Annual Targets for the next two years. Don’t forget to consider your accountability clock if you are orange or red on the state SPF.

Interim Measures
Once annual performance targets are set for the next two years, schools must identify interim measures, or what they will measure during the year, to determine if progress is being made towards each of the annual performance targets. Interim measures should be based on local performance data that will be available at least twice during the school year. Across all interim measures, data should be available that would allow schools to monitor progress at least quarterly.

Interim Measures
• Examples of Interim Measures:
▫ District-level Assessments: Benchmarks/Interims, STAR, SRI
▫ School-level Assessments: End of Unit Assessments, DIBELS, NWEA MAP
• Measures, metrics, and availability should be specified in the School Goals Form.
• Remember that the Interim Measures need to align with Priority Performance Challenges. Disaggregated groups should be included as appropriate.
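
The target-setting practice activity above (choose a timeframe of at most 5 years, then decide how much progress to make in the next two) comes down to simple arithmetic. The sketch below is one possible way to work it through, not a DPS or CDE method: it assumes progress is spread evenly across the timeframe, the function name `annual_targets` is made up for illustration, and the 68% starting value is hypothetical. Only the 80% graduation-rate expectation and the 5-year maximum come from the slides.

```python
def annual_targets(current, expectation, years_to_meet, horizon=2):
    """Return evenly spaced annual targets for the next `horizon` years.

    Assumption: progress is spread evenly across the chosen timeframe.
    The slides do not prescribe this; a school may plan uneven growth.
    """
    # The slides cap the timeframe for meeting expectations at 5 years.
    if not 1 <= years_to_meet <= 5:
        raise ValueError("timeframe must be between 1 and 5 years")
    step = (expectation - current) / years_to_meet
    # Never set more annual targets than there are years in the timeframe.
    years = min(horizon, years_to_meet)
    return [round(current + step * year, 1) for year in range(1, years + 1)]


# Hypothetical school: 68% graduation rate today, 80% state expectation,
# four-year timeframe. Only the 80% figure comes from the slides.
targets = annual_targets(current=68.0, expectation=80.0, years_to_meet=4)
print(targets)  # [71.0, 74.0]
```

A school on the accountability clock would pass a shorter `years_to_meet`, which raises both annual targets.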
Examples of Interim Measures
• The percentage of all students scoring Proficient/Advanced on the DPS Writing Interim assessment will increase by a minimum of 10 percentage points from the Fall administration to the Spring administration.
• The median SRI score for 6th grade students will increase by 50 Lexile points for each of the three administrations during the 2012-2013 school year.

UIP 101 Road Map
√ Overview of the UIP Process
√ Structure and Components of the UIP Template
Developing a UIP
√ Data Narrative
√ School Performance Frameworks and the UIP
√ Data Analysis: √ Review Past Performance / √ Describe Trends / √ Performance Challenges / √ Root Causes
Action Plans: √ Target Setting / Action Planning / Progress Monitoring
Leadership Considerations & Resources
School Type & Title I
UIP Timeline & DPS/CDE Resources

Major Improvement Strategies
• Respond to root causes of the performance problems the school/district is attempting to remedy.
• Action steps are smaller activities that fit within larger major improvement strategies.
• Improvement Strategies and Action Steps must be associated with resources, people, and time.

Describe your Desired Future
• If root causes are eliminated . . .
• What will these different groups be doing differently?
▫ Students
▫ Staff members
▫ Leadership team
▫ Parents / Community
• Examples of Major Improvement Strategy
▫ Teachers use data about learning formatively every day to refocus instruction on their students’ needs.
▫ Staff members consistently implement identified practices in effective literacy instruction.

Action Steps
1. Timeline
• Should include the 2013-2014 and 2014-2015 school years.
• Should include specific months.
2. Key Personnel
• Consider who is leading each step and their capacity.
3. Resources
• Include the amount of money, time, and source.
• Consider resources other than money.

Implementation Benchmarks
• Directly correspond to the action steps.
• Are something that a school/district leadership team could review periodically.
• Should help teams adjust their plans, which is critical to a cycle of continuous improvement.

Implementation Benchmarks
• Implementation Benchmarks are . . .
▫ how schools will know major improvement strategies are being implemented;
▫ measures of the fidelity with which action steps are implemented; and
▫ what will be monitored.
• Implementation Benchmarks are NOT:
▫ Performance measures (assessment results).

Implementation Benchmarks / Interim Measures Activity
• STAND UP if Example = Interim Measure
• SIT DOWN if Example = Implementation Benchmark

Practice
ELL students increased their performance on the Reading Interim assessment in Round 2 by 5%.

Implementation Benchmarks or Interim Measures?
Students increased STAR performance.
• STAND UP if Example = Interim Measure
• SIT DOWN if Example = Implementation Benchmark

Implementation Benchmarks or Interim Measures?
Classroom walkthroughs weekly.
• STAND UP if Example = Interim Measure
• SIT DOWN if Example = Implementation Benchmark

Implementation Benchmarks or Interim Measures?
Third grade students’ progress in reading will be benchmarked three times through AIMSweb.
• STAND UP if Example = Interim Measure
• SIT DOWN if Example = Implementation Benchmark

Implementation Benchmarks or Interim Measures?
Input of classroom teachers will be gathered during the last week of October.
• STAND UP if Example = Interim Measure
• SIT DOWN if Example = Implementation Benchmark

Implementation Benchmarks or Interim Measures?
High school English students will demonstrate mastery using teacher-developed writing rubrics.
• STAND UP if Example = Interim Measure
• SIT DOWN if Example = Implementation Benchmark

Implementation Benchmarks or Interim Measures?
Staff will participate in three Strategy Labs. Teacher leaders and administration will gather evidence and give feedback on the strategies being implemented in classrooms.
Teachers will keep reflection journals on their implementation of the strategies.
• STAND UP if Example = Interim Measure
• SIT DOWN if Example = Implementation Benchmark

UIP 101 Road Map
√ Overview of the UIP Process
√ Structure and Components of the UIP Template
Developing a UIP
√ Data Narrative
√ School Performance Frameworks and the UIP
√ Data Analysis: √ Review Past Performance / √ Describe Trends / √ Performance Challenges / √ Root Causes
Action Plans: √ Target Setting / √ Action Planning / Progress Monitoring
Leadership Considerations & Resources
School Type & Title I
UIP Timeline & DPS/CDE Resources

Progress Monitoring
• Consider:
▫ What performance data will be used to monitor progress towards annual targets? How will you check throughout the year that your strategies and actions are having an impact on student outcomes?
▫ What process data will be used to monitor progress towards annual targets? How will you check throughout the year that your strategies and actions are having an impact on adult actions?

UIP 101 Road Map
√ Overview of the UIP Process
√ Structure and Components of the UIP Template
Developing a UIP
√ Data Narrative
√ School Performance Frameworks and the UIP
√ Data Analysis: √ Review Past Performance / √ Describe Trends / √ Performance Challenges / √ Root Causes
√ Action Plans: √ Target Setting / √ Action Planning / √ Progress Monitoring
Leadership Considerations & Resources
School Type & Title I
UIP Timeline & DPS/CDE Resources

Importance of School Type
• See CDE’s Quality Criteria (in the back of the UIP handbook) to determine what you should include based on school/plan type.
• School plan types are based on CDE’s SPF (and in a few minor cases DPS’ SPF) and will be officially released in Dec. 2013.
• ARE will communicate your preliminary CDE SPF rating with your IS/DAP/SIP only if you are a priority improvement or turnaround school.
Title I Requirements
• All Title I DPS schools follow Schoolwide plan requirements.
• Additional Title I requirements for:
▫ State SPF Priority Improvement Schools
▫ State SPF Turnaround Schools
▫ Focus Schools
▫ DPS Turnaround (TIG)
• ARE will communicate to you your status and the appropriate Title addenda forms to attach to your UIP in the fall.

Timelines/Deadlines/Resources

Websites
• DPS UIP: http://testing.dpsk12.org/accountability/UIP/UIP.htm
▫ Contains: Training Materials & Tools; Timeline; Templates/Addenda Forms; UIP Upload Tool
• CDE UIP: http://www.cde.state.co.us/uip/index.asp
▫ Contains: Training Materials & Tools; Templates/Addenda Forms
• DPS SPF: http://communications.dpsk12.org/initiatives/school-performance-framework/
▫ Contains: Principal Portal: Drill-Down Tool; Current and previous year’s SPF; Additional reports on TCAP and ACCESS
• CDE SPF: http://www.schoolview.org/performance.asp
▫ Contains: School CDE SPF Results; Resources; CDE SPF Rubric
• DPS Federal: http://fedprograms.dpsk12.org/
▫ Contains: Title I Status

District & Network Contacts
• UIP & SPF (District Contact):
▫ Brandi Van Horn (ARE), brandi_vanhorn@dpsk12.org, 720-423-3640
▫ Katherine Beck (ARE), katherine_beck@dpsk12.org, 720-423-3734
• Federal Programs (Title I) (District Contact):
▫ Veronica Bradsby (Federal Programs), veronica_bradsby@dpsk12.org, 720-423-8157
• Assessments (District Contact):
▫ Assessment Coordinators (ARE), http://testing.dpsk12.org/secure/sal_resources/SAL%20Role%20and%20Assessment%20Information.pdf
• Network Contact:
▫ Instructional Superintendents (IS)
▫ School Improvement Partner (SIP)
▫ Instructional Support Partner (ISP)
▫ Data Assessment Partner (DAP)

Data Websites
• Principal Portal: http://principal.dpsk12.org/
• School Folders: https://secure2.dpsk12.org/schoolfolders/
• CDE: http://www.schoolview.org/
• SchoolNet: https://schoolnet.dpsk12.org/