Building a psychometric portfolio: Evidence for reliability

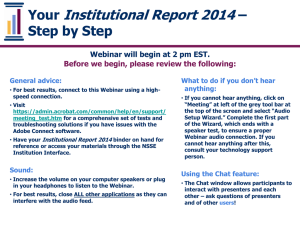

NSSE’s Psychometric Portfolio: Evidence for Reliability, Validity, and Other Quality Indicators

Thank you for joining us. The Webinar will begin at 3:00pm (EST). Sound tests will begin around 2:55pm (EST).

Some general advice before we begin:

Additional materials: You may want to open an additional tab or window for easy access to the NSSE Psychometric Portfolio at nsse.iub.edu/links/psychometric_portfolio

Sound: Turn up your computer speakers or plug in your headphones to listen to the Webinar. For best results, close all other applications – they may interfere with the audio feed.

What to do if you can’t hear anything:
• Click on “Meeting” in the left side of the dark grey tool bar at the top of the screen and select “Audio Setup Wizard.” Complete the first part of the Wizard, which ends with a speaker test, to ensure you are properly connected for webinar audio. If you still can’t hear anything, consult your technology support person.
• The Webinar will be recorded. If you can’t fix the problem, you will be able to access the recorded session on the NSSE Web site, where it will be posted a few days after the live session.

Using the Chat feature: The Chat window will be available throughout the presentation for participants to interact with presenters and each other. Please use chat to pose questions, suggest a resource, etc.

NSSE’s Psychometric Portfolio: Evidence for Reliability, Validity, and Other Quality Indicators
NSSE Webinar
Tuesday, August 31, 2010
Bob Gonyea, NSSE Associate Director
Angie Miller, Research Analyst

Overview
1. Introduction
2. NSSE Psychometric Portfolio
3. The Framework
   A. Reliability
   B. Validity
   C. Other Quality Indicators
4. Summary of Results
5. Discussion with you

Introduction
• Psychometric testing?
• What is a psychometric portfolio?
• Who is this for?

NSSE’s Psychometric Framework
• A framework for organizing and presenting studies about the quality of NSSE
• Consists of three areas of analysis, each containing multiple approaches
  1. Reliability
  2. Validity
  3. Other Quality Indicators

Report Template
Each brief report in the portfolio contains:
• Purpose of the analysis and research question(s)
• Data description
• Methods of analysis
• Results
• Summary
• References and additional resources

Report Template
View the portfolio at: nsse.iub.edu/links/psychometric_portfolio
- Take a brief tour of the portfolio -

Reliability
Reliability refers to the consistency of results
• Are results similar across different forms of the instrument or across time periods of data collection?
• Reliable instruments/scales imply that data/results are reproducible
• Strongly related to error: large amounts of error can lead to unreliable measurements
• Reliability measurements can be calculated with data from single or multiple survey administrations

Reliability
Brief working definitions of reliability:
• Internal Consistency
  • “Item homogeneity, or the degree to which the items on a test jointly measure the same construct” (Henson, 2001)
• Temporal Stability
  • Also called test-retest reliability
  • “How constant scores remain from one occasion to another” (DeVellis, 2003)
• Equivalence
  • Also called inter-method or parallel forms reliability
  • “The reproducibility between different versions of an instrument” (Cook & Beckman, 2006)

Reliability
Example questions of NSSE reliability:
• How well do the items within the NSSE benchmarks intercorrelate?
• How stable are institutional benchmark scores over time?
• Does the NSSE survey produce similar results when administered to the same person at different times?
• Are students able to reliably estimate the number of papers they write?
• Do different versions of NSSE questions produce similar results? Specifically, how often is “often”?
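
To make the internal consistency definition above concrete: one widely used statistic for it is Cronbach's alpha, which compares the sum of the individual item variances with the variance of respondents' total scores. The slides do not say which statistic the portfolio reports, so the following is only an illustrative sketch. The function name `cronbach_alpha` and the `toy_responses` data are hypothetical and are not NSSE data.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of item scores."""
    n_items = len(responses[0])
    if n_items < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two respondents")
    # Sample variance of each item (column) across respondents.
    item_variances = [
        variance([row[i] for row in responses]) for i in range(n_items)
    ]
    # Sample variance of each respondent's total score across all items.
    total_variance = variance([sum(row) for row in responses])
    return (n_items / (n_items - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical data (an assumption for illustration only):
# four respondents answering three items on a 1-4 scale.
toy_responses = [
    [1, 2, 1],
    [2, 2, 3],
    [3, 4, 3],
    [4, 3, 4],
]
alpha = cronbach_alpha(toy_responses)
print(round(alpha, 3))  # 0.875
```

Values closer to 1 indicate that the items vary together, which is what "jointly measure the same construct" implies. The sketch assumes complete data (no missing responses) and at least some variation in total scores.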

Reliability
Results are presented
• At the student-level and/or institution-level
  • Student-level Temporal Stability
  • Institution-level Temporal Stability
• For various subgroups
  • Internal Consistency
• From original studies or previously written papers
  • Equivalence: How often is often
• From multiple years of testing
  • Reliability Framework

Summary of Reliability Results
• Internal Consistency: 3 of 5 NSSE benchmarks are generally reliable across various subpopulations
• Internal Consistency: Enriching Educational Experiences and Active & Collaborative Learning are less reliable than other benchmarks
• Temporal Stability: Benchmarks are highly reliable from year to year at the institution level
• Temporal Stability: Students’ responses are relatively stable between periods of a few weeks
• Equivalence: Students reliably respond to vague quantifiers such as “sometimes” or “often”

Validity
Validity refers to “the degree to which evidence and theory support the interpretations of test scores entailed by the proposed uses of tests” (Messick, 1989).
• Validity is a property of the inference, not the instrument
• Involves empirical and theoretical support for the interpretation of the construct
• Hypothesis driven (evidence is collected)

Validity
Relied on literature
• No consensus on a framework, but the literature guided us to include different dimensions
  • American Educational Research Association, American Psychological Association, & National Council on Measurement in Education (1999); Messick (1989); Cook & Beckman (2006); Borden & Young (2008).
• Evolving concept
• Not a fixed characteristic (depends on use, population, and sample)

Validity
Brief working definitions of validity:
• Response process (cognitive interviews, focus groups)
  • Reviewing the actions and thought processes of test takers or observers
• Content validity (theory, expert reviews)
  • The extent to which a measure represents all facets of a given construct
• Construct validity (factor analyses)
  • The extent to which a construct actually measures what the theory says it does
• Concurrent validity (relations to other variables)
  • The extent to which a construct correlates with other measures of the same construct that are measured at the same time

Validity
Brief working definitions of validity:
• Known groups validity
  • The extent to which the construct measures the differences and similarities in various groups similar to expected/known (literature) differences or similarities
• Predictive validity
  • The extent to which a score on a scale or test predicts scores on some criterion measure
• Consequential validity
  • Investigating both positive/negative and intended/unintended consequences of inferences to properly evaluate the validity of the construct/assessment

Validity
Example questions of NSSE validity:
• Do BCSSE scales predict NSSE benchmarks?
• Is there a relationship between student engagement and selected measures of student success?
• Do students interpret the survey questions in the same way the authors intended?
• Do students’ responses differ according to group membership in a predictable way?
• Do institutions appropriately use the survey data and results?

Summary of Validity Results
• Response Process: Overall, cognitive interviews and focus groups found survey questions to be clearly worded and understandable.
• Content Validity: NSSE conceptual framework summarizes the history of student engagement, NSSE purpose, philosophy, and development of the survey
• Construct Validity: Deep learning scale shows good factor solution; benchmarks don’t show construct structure. They were developed as additive measures, but improving factor structure is an important consideration for future survey revisions
• Concurrent Validity: BCSSE scales are highly related to NSSE benchmarks

Summary of Validity Results
• Predictive Validity: Student engagement has a positive effect on first-year persistence and cumulative credits taken. Small effects are also seen for GPA
• Known Groups Validity: The NSSE benchmarks are able to detect differences between groups in a predictable way.
• Consequential Validity: Institutional uses of NSSE data coincide with the intended purposes of the NSSE instrument.

Other Quality Indicators
“Other Quality Indicators” includes procedures, standards, and other evaluations implemented by NSSE to reduce error and bias, and to increase the precision and rigor of the data.
• Assesses NSSE’s adherence to the best practices in survey design, including sampling, survey administration, and reporting
• Related to both reliability and validity

Other Quality Indicators
Brief working definitions for Other Quality Indicators:
• Institution participation
  • Need to look for self-selection bias with those institutions that participate
• Item bias
  • Need to explore if items are functioning differently for different groups of people (DeVellis, 2003)
• Measurement error
  • Verify that procedures, policies, and administrative processes are appropriate and do not introduce error into the data (NCES, 2002)
• Data quality issues
  • Need to look for impact of item non-response and missing data

Other Quality Indicators
Brief working definitions for Other Quality Indicators:
• Mode analysis
  • Need to examine if mode of completion (paper vs. web) impacts responses (Carini et al., 2003)
• Non-response error
  • Need to examine if responders differ from non-responders (Groves et al., 1992)
• Sampling error
  • Need to determine acceptable rates of sampling error for participating institutions (Dillman, 2007)
• Social desirability bias
  • Need to examine if respondents are answering untruthfully in order to provide socially appropriate responses (DeVellis, 2003)

Other Quality Indicators
Example questions:
• Do NSSE policies and practices adhere to NCES recommended standards and guidelines?
• Are institutions that participate in NSSE different from other baccalaureate-granting colleges and universities?
• Are responses to the NSSE questionnaire influenced by a tendency to respond in a socially desirable manner?

Summary of Other Quality Indicators Results
• Institution participation: Institutions participating in NSSE are generally similar to other institutions
• Measurement error: NSSE has stringent policies and procedures for data collection and reporting
• Mode analysis: No strong evidence for mode bias
• Non-response error: Some evidence for non-response issues, such as high rate of drop-off for certain items. However, there is also evidence that neither high school engagement nor attitudes toward engagement influence response to NSSE
• Sampling error: Institutions participating in NSSE generally have an adequate number of respondents

Conclusion
1. This is responsible survey research.
2. This portfolio is about transparency, so we value feedback.
3. NSSE strives for continuous improvement.
4. We are very excited about the possibilities this presents (e.g., NSSE 2.0).

Bob Gonyea, rgonyea@indiana.edu
Angie Miller, anglmill@indiana.edu

Discussion
• Questions?

Register Now!
NSSE Users Workshop
Oct. 7-8, 2010
Registration Deadline: Oct. 1, 2010

Fall 2010 NSSE Users Workshop - Dillard University, New Orleans
Want to learn more about working with your 2010 NSSE results or your NSSE data from past years as part of your assessment efforts? Join us in New Orleans for the Fall 2010 NSSE Users Workshop, co-hosted by NSSE and Dillard University. The workshop will take place on Thursday and Friday, October 7-8, 2010, on the Dillard campus. Online registration and workshop details are available now at: nsse.iub.edu/links/fall_workshop