Chapter 9 - Evaluation


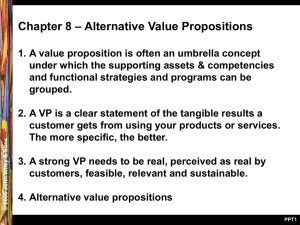

Chapter 7 - Evaluation
HCI: Developing Effective Organizational Information Systems
Dov Te’eni, Jane Carey, Ping Zhang
Copyright 2006 John Wiley and Sons, Inc.

Road Map
[Figure: Road Map of the book, arranging chapters 1-14 (from Introduction through Changing Needs of IT Development & Use) under Context, Foundation, and Application; this chapter is 7, Evaluation.]

Learning Objectives
- Explain what evaluation is and why it is important.
- Understand the different types of HCI concerns and their rationales.
- Understand the relationships of HCI concerns with various evaluations.
- Understand usability, usability engineering, and universal usability.
- Understand different evaluation methods and techniques.
- Select appropriate evaluation methods for a particular evaluation need.
- Carry out effective and efficient evaluations.
- Critique reports of studies done by others.
- Understand the reasons for setting up industry standards.

Evaluation
Evaluation: the determination of the significance, worth, condition, or value by careful appraisal and study.

HCI Methodology and Evaluation
[Figure: the HCI development methodology. Project Selection & Planning (project selection, project planning), Analysis (requirements determination, context analysis, user needs test, user analysis, task analysis), Design (alternative selection, interface specification, metaphor, media, dialogue, and presentation design), and Implementation (coding), with formative evaluation between phases and summative evaluation at the end; HCI principles & guidelines and evaluation metrics are also shown.]

What to evaluate?
Four levels of HCI concerns
- Physical: the system fits our physical strengths and limitations and does not cause harm to our health. Sample measure items: legible; audible; safe to use.
- Cognitive: the system fits our cognitive strengths and limitations and functions as the cognitive extension of our brain. Sample measure items: fewer errors and easy recovery; easy to use; easy to remember how to use; easy to learn.
- Affective: the system satisfies our aesthetic and affective needs and is attractive for its own sake. Sample measure items: aesthetically pleasing; engaging; trustworthy; satisfying; enjoyable; entertaining; fun.
- Usefulness: using the system would provide rewarding consequences. Sample measure items: supports the individual's tasks; can do some tasks that would not be possible without the system; extends one's capability; rewarding.

Why evaluate?
The goal of evaluation is to provide feedback in software development, thus supporting an iterative development process (Gould and Lewis 1985).

When to evaluate
- Formative evaluation: conducted during the development of a product in order to form or influence design decisions.
- Summative evaluation: conducted after the product is finished to ensure that it possesses certain qualities, meets certain standards, or satisfies certain requirements set by the sponsors or other agencies.
- Use and impact evaluation: conducted during the actual use of the product by real users in a real context.
- Longitudinal evaluation: the repeated observation or examination of a set of subjects over time with respect to one or more evaluation variables.

[Figure 7.1 Evaluation as the Center of Systems Development: evaluation sits at the center of task analysis/functional analysis, requirements specification, conceptual design/formal design, prototyping, and implementation.]
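To make the longitudinal case concrete, here is a minimal sketch of how repeated observations of the same subjects might be summarized. It assumes a single evaluation variable (task time in seconds) recorded for each participant at several sessions; the participant IDs, numbers, and function names are all hypothetical.

```python
from statistics import mean

# Hypothetical longitudinal data: the same participants observed at
# repeated sessions, with one evaluation variable (task time in seconds).
observations = {
    "P1": [120.0, 95.0, 80.0],
    "P2": [150.0, 130.0, 110.0],
    "P3": [100.0, 98.0, 97.0],
}


def change_per_participant(data):
    """Last-minus-first value per participant (negative means faster over time)."""
    return {pid: series[-1] - series[0] for pid, series in data.items() if len(series) >= 2}


def mean_by_session(data):
    """Average the variable across participants at each session index."""
    n_sessions = min(len(series) for series in data.values())
    return [mean(series[i] for series in data.values()) for i in range(n_sessions)]


if __name__ == "__main__":
    print(change_per_participant(observations))
    print(mean_by_session(observations))
```

Looking at each participant's own trajectory, rather than only the session averages, is what distinguishes a longitudinal design from repeated one-off tests with different people.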
Issues in Evaluation
Evaluation plan:
- Stage of design (early, middle, late)
- Novelty of product (well defined versus exploratory)
- Number of expected users
- Criticality of the interface (e.g., a life-critical medical system versus museum-exhibit support)
- Costs of the product and finances allocated for testing
- Time available
- Experience of the design and evaluation team

Usability and Usability Engineering
Usability: the extent to which a product can be used by specified users to achieve specified goals with effectiveness, efficiency, and satisfaction in a specified context of use.

[Figure 7.2 System Acceptability and Usability]

Table 7.4 Nielsen’s Definitions
- Usefulness: whether the system can be used to achieve some desired goal.
- Utility: whether the functionality of the system can, in principle, do what is needed.
- Usability: how well users can use that functionality.
- Learnability: the system should be easy to learn so that the user can rapidly start getting some work done with the system.
- Efficiency: the system should be efficient to use, so that once the user has learned the system, a high level of productivity is possible.
- Memorability: the system should be easy to remember, so that the casual user is able to return to the system after some period of not having used it, without having to learn everything all over again.
- Errors: the system should have a low error rate, so that users make few errors during the use of the system, and so that if they do make errors they can easily recover from them. Further, catastrophic errors must not occur.
- Satisfaction: the system should be pleasant to use, so that users are subjectively satisfied when using it; they like it.
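The usability definition given earlier names three measurable components: effectiveness, efficiency, and satisfaction. A minimal sketch of turning test sessions into those three numbers follows. It assumes hypothetical session records and three common operationalizations that are assumptions here, not the chapter's prescribed metrics: effectiveness as task completion rate, efficiency as mean time on successfully completed tasks, and satisfaction as the mean rating on an assumed 1-5 scale.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Session:
    """One participant's attempt at one task (hypothetical record format)."""
    participant: str
    completed: bool
    seconds: float
    satisfaction: int  # assumed scale: 1 (very dissatisfied) to 5 (very satisfied)


def usability_summary(sessions):
    """Compute completion rate, mean time on completed tasks, and mean rating."""
    completed = [s for s in sessions if s.completed]
    effectiveness = len(completed) / len(sessions)
    efficiency = mean(s.seconds for s in completed) if completed else float("nan")
    satisfaction = mean(s.satisfaction for s in sessions)
    return {
        "effectiveness": effectiveness,
        "mean_time_s": efficiency,
        "satisfaction": satisfaction,
    }


sessions = [
    Session("P1", True, 62.0, 4),
    Session("P2", True, 85.0, 3),
    Session("P3", False, 180.0, 2),
    Session("P4", True, 70.0, 5),
]

if __name__ == "__main__":
    print(usability_summary(sessions))
```

Time is averaged only over completed attempts so that an abandoned task does not make the system look faster or slower than it is for successful use; other choices are equally defensible if stated in the evaluation plan.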
Usability Engineering
Usability engineering: a process through which usability characteristics are specified, quantitatively and early in the development process, and measured throughout the process.

Evaluation Methods
- Field strategies (settings under conditions as natural as possible): field studies, such as ethnography and interaction analysis, and contextual inquiry; field experiments, such as beta testing of products and studies of technological change.
- Respondent strategies (settings are muted or made moot): judgment studies, such as usability inspection methods (e.g., heuristic evaluation); sample surveys, such as questionnaires and interviews.
- Experimental strategies (settings concocted for research purposes): experimental simulations, such as usability testing and usability engineering; laboratory experiments, such as controlled experiments.
- Theoretical strategies (no observation of behavior required): formal theory, such as design theory (e.g., Norman’s 7 stages) and behavioral theory (e.g., color vision); computer simulation, such as human information processing theory.

Analytical Methods: Heuristic Evaluation
Heuristics: higher-level design principles when used in practice to guide designs. Heuristics are also called rules of thumb.
Heuristic evaluation: a group of experts, guided by a set of higher-level design principles or heuristics, evaluates whether interface elements conform to the principles.

Usability Heuristics (rule: description)
- Visibility of system status: The system should always keep users informed about what is going on, through appropriate feedback within reasonable time.
- Match between system and the real world: The system should speak the users' language, with words, phrases, and concepts familiar to the user, rather than system-oriented terms. Follow real-world conventions, making information appear in a natural and logical order.
- User control and freedom: Users often choose system functions by mistake and will need a clearly marked "emergency exit" to leave the unwanted state without having to go through an extended dialogue. Support undo and redo.
- Consistency and standards: Users should not have to wonder whether different words, situations, or actions mean the same thing. Follow platform conventions.
- Error prevention: Even better than good error messages is a careful design which prevents a problem from occurring in the first place.
Table 7.3 Ten Usability Heuristics
- Recognition rather than recall: Make objects, actions, and options visible. The user should not have to remember information from one part of the dialogue to another. Instructions for use of the system should be visible or easily retrievable whenever appropriate.
- Flexibility and efficiency of use: Accelerators, unseen by the novice user, may often speed up the interaction for the expert user, so that the system can cater to both inexperienced and experienced users. Allow users to tailor frequent actions.
- Aesthetic and minimalist design: Dialogues should not contain information which is irrelevant or rarely needed. Every extra unit of information in a dialogue competes with the relevant units of information and diminishes their relative visibility.
- Help users recognize, diagnose, and recover from errors: Error messages should be expressed in plain language (no codes), precisely indicate the problem, and constructively suggest a solution.
- Help and documentation: Even though it is better if the system can be used without documentation, it may be necessary to provide help and documentation. Any such information should be easy to search, focused on the user's task, list concrete steps to be carried out, and not be too large.
Table 7.6 Ten Usability Heuristics

Eight Golden Rules (rule: description)
- Strive for consistency: This rule is the most frequently violated one, but following it can be tricky because there are many forms of consistency. Consistent sequences of actions should be required in similar situations; identical terminology should be used in prompts, menus, and help screens; and consistent color, layout, capitalization, fonts, and so on should be employed throughout. Exceptions, such as required confirmation of the delete command or no echoing of passwords, should be comprehensible and limited in number.
- Cater to universal usability: Recognize the needs of diverse users and design for plasticity, facilitating transformation of content. Novice-expert differences, age ranges, disabilities, and technology diversity each enrich the spectrum of requirements that guides design. Adding features for novices, such as explanations, and features for experts, such as shortcuts and faster pacing, can enrich the interface design and improve perceived system quality.
- Offer informative feedback: For every user action, there should be some system feedback. For frequent and minor actions, the response can be modest, whereas for infrequent and major actions, the response should be more substantial. Visual presentation of the objects of interest provides a convenient environment for showing changes explicitly.
- Design dialogs to yield closure: Sequences of actions should be organized into groups with a beginning, middle, and end. Informative feedback at the completion of a group of actions gives operators the satisfaction of accomplishment, a sense of relief, the signal to drop contingency plans from their minds, and a signal to prepare for the next group of actions.
Table 7.7 Eight Golden Rules for User Interface Design
- Prevent errors: As much as possible, design the system so that users cannot make serious errors.
  If a user makes an error, the interface should detect the error and offer simple, constructive, and specific instructions for recovery. Erroneous actions should leave the system state unchanged, or the interface should give instructions about restoring the state.
- Permit easy reversal of actions: As much as possible, actions should be reversible. This feature relieves anxiety, since the user knows that errors can be undone, thus encouraging exploration of unfamiliar options. The units of reversibility may be a single action, a data-entry task, or a complete group of actions, such as entry of a name and address block.
- Support internal locus of control: Experienced operators strongly desire the sense that they are in charge of the interface and that the interface responds to their actions. Surprising interface actions, tedious sequences of data entries, inability to obtain or difficulty in obtaining necessary information, and inability to produce the action desired all build anxiety and dissatisfaction.
- Reduce short-term memory load: The limitation of human information processing in short-term memory requires that displays be kept simple, multiple-page displays be consolidated, window-motion frequency be reduced, and sufficient training time be allotted for codes, mnemonics, and sequences of actions. Where appropriate, online access to command-syntax forms, abbreviations, codes, and other information should be provided.
Table 7.7 Eight Golden Rules for User Interface Design (Shneiderman and Plaisant 2005)

HOMERUN Heuristics for Websites
- High-quality content
- Often updated
- Minimal download time
- Ease of use
- Relevant to users' needs
- Unique to the online medium
- Net-centric corporate culture
Table 7.8 HOMERUN Heuristics for Commercial Websites (Nielsen 2000)
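A heuristic evaluation is normally carried out by several experts whose findings are then merged. The sketch below shows one way to do that merge. It assumes each finding is recorded as (evaluator, heuristic, problem description) and that identical problem descriptions refer to the same problem; the record format, the merge rule, and the sample findings are hypothetical. The heuristic names are the ten usability heuristics listed earlier.

```python
from collections import defaultdict

# The ten usability heuristics from the table above.
HEURISTICS = (
    "Visibility of system status",
    "Match between system and the real world",
    "User control and freedom",
    "Consistency and standards",
    "Error prevention",
    "Recognition rather than recall",
    "Flexibility and efficiency of use",
    "Aesthetic and minimalist design",
    "Help users recognize, diagnose, and recover from errors",
    "Help and documentation",
)


def merge_findings(findings):
    """Group findings by heuristic and problem, recording which evaluators reported each."""
    merged = defaultdict(lambda: defaultdict(set))
    for evaluator, heuristic, problem in findings:
        if heuristic not in HEURISTICS:
            raise ValueError(f"unknown heuristic: {heuristic!r}")
        merged[heuristic][problem].add(evaluator)
    return merged


def report(merged):
    """Return (heuristic, problem, evaluator_count) rows, most widely reported first."""
    rows = [(h, p, len(evs)) for h, problems in merged.items() for p, evs in problems.items()]
    return sorted(rows, key=lambda r: (-r[2], HEURISTICS.index(r[0]), r[1]))


# Hypothetical findings from three evaluators (E1-E3).
findings = [
    ("E1", "Visibility of system status", "No progress indicator during upload"),
    ("E2", "Visibility of system status", "No progress indicator during upload"),
    ("E3", "Visibility of system status", "No progress indicator during upload"),
    ("E1", "User control and freedom", "No undo after deleting a record"),
    ("E3", "User control and freedom", "No undo after deleting a record"),
    ("E2", "Consistency and standards", "'Save' and 'Store' used for the same action"),
]

if __name__ == "__main__":
    for heuristic, problem, count in report(merge_findings(findings)):
        print(f"{count} evaluator(s) | {heuristic} | {problem}")
```

Counting how many evaluators independently reported a problem is only a rough proxy for its importance; in practice the merged list is usually discussed and rated by the evaluators together.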
Cognitive Walkthrough
The following steps are involved in cognitive walkthroughs:
1. The characteristics of typical users are identified and documented, and sample tasks are developed that focus on the aspects of the design to be evaluated.
2. A designer and one or more expert evaluators then come together to do the analysis.
3. The evaluators walk through the action sequences for each task, placing it within the context of a typical scenario, and as they do this they try to answer the following questions:
   - Will the correct action be sufficiently evident to the user?
   - Will the user notice that the correct action is available?
   - Will the user associate and interpret the response from the action correctly?
4. As the walkthrough is being done, a record of critical information is compiled, in which the assumptions about what would cause problems, and why, are recorded. This involves explaining why users would face difficulties.
5. Notes about side issues and design changes are made.
6. A summary of the results is compiled.
7. The design is then revised to fix the problems presented.

Pluralistic Walkthroughs
Pluralistic walkthroughs are “another type of walkthrough in which users, developers and usability experts work together to step through a task scenario, discussing usability issues associated with dialog elements involved in the scenario steps” (Nielsen and Mack 1994).

Inspection with Conceptual Frameworks such as the TSSL Model
Another structured analytical evaluation method is to use conceptual frameworks as bases for evaluation and inspection. One such framework is the TSSL model introduced earlier in the book.

Example 1 - Evaluating option/configuration specification interfaces
[Figure 7.3 A Sample Dialog Box]
[Figure 7.4 A Sample Tabbed Dialog Box: the tabs act as a menu for the dialog.]
[Figure 7.5 The Preferences Dialog Box with Tree Menu, with the title area and the tree menu labeled.]
[Figure: a dialog box with additional tabs, navigators, and a tabbed drop-down menu labeled.]

Example 2 - Yahoo, Google, and Lycos web portals and search engines
Compare and contrast their displays of the top searches of 2003. Which uses color most effectively? Which has the most effective layout? Which is easiest to understand? Why?

Empirical Methods
- Surveys and questionnaires: used to collect information from a large group of respondents.
- Interviews (including focus groups): used to collect information from a small key set of respondents.
- Experiments: used to determine the best design features from many options.
- Field studies: results are more generalizable since they occur in real settings.

Table 7.11 Comparison of Evaluation Methods
- Heuristic evaluation. Lifecycle stage: any stage; early ones benefit most. System status: any (mock-up, prototype, final product). Environment of evaluation: any. Real user participation: none. User tasks used: none. Main advantage: finds individual problems; can address expert user issues. Main disadvantage: does not involve real users, thus may not find problems related to real users in a real context; does not link to users' tasks.
- Guideline review. Lifecycle stage: any stage; early ones benefit most. System status: any. Environment of evaluation: any. Real user participation: none. User tasks used: none. Main advantage: finds individual problems. Main disadvantage: does not involve real users; does not link to users' tasks.
- Cognitive walkthrough. Lifecycle stage: any stage; early ones benefit most. System status: any. Environment of evaluation: any. Real user participation: none. User tasks used: yes; tasks need to be identified first. Main advantage: less expensive. Main disadvantage: does not involve real users; limited to the experts' view.
- TSSL-based inspection. Lifecycle stage: any stage. System status: any. Environment of evaluation: any. Real user participation: none. User tasks used: yes; tasks need to be identified first. Main advantage: direct link to user tasks; structured, with fewer steps to go through. Main disadvantage: does not involve real users; limited to the tasks identified.
- Survey. Lifecycle stage: any stage. System status: any. Environment of evaluation: any. Real user participation: yes, a lot. User tasks used: yes or no. Main advantage: finds subjective reactions; easy to conduct and compare. Main disadvantage: questions need to be well designed; needs a large sample.
- Interview. Lifecycle stage: task analysis. System status: mock-up, prototype. Environment of evaluation: any. Real user participation: yes. User tasks used: none. Main advantage: flexible, in-depth probing. Main disadvantage: time consuming; hard to analyze and compare.
- Lab controlled experiment. Lifecycle stage: design, implementation, or use. System status: prototype, final product. Environment of evaluation: lab. Real user participation: yes. User tasks used: yes; most of the time artificially designed to mimic real tasks. Main advantage: provides fact-based measurements; results are easy to compare. Main disadvantage: requires an expensive facility, setup, and expertise.
- Field study with observation and monitoring. Lifecycle stage: design, implementation, or use. System status: prototype, final product. Environment of evaluation: real work setting. Real user participation: yes. User tasks used: none. Main advantage: easily applicable; reveals users' real tasks; can highlight difficulties in real use. Main disadvantage: observation may affect user behavior.

Standards
Standards: prescribed ways of discussing, presenting, or doing things to achieve consistency across the same type of products.
[Figure 7.10 Categories of HCI Related Standards: quality in use (user performance/satisfaction), product quality (product), process quality (development process), and organizational capability (life cycle process).]
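Table 7.11 can also be read as a small lookup structure when selecting a method for a particular evaluation need. The sketch below encodes a simplified version of three of its columns: real user participation, user tasks used, and environment of evaluation. The field names, the encoding, and the `candidates` function are hypothetical, and the simplification drops the table's nuance; for example, the survey's "yes or no" for user tasks is encoded as `None`, meaning it matches either requirement.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Method:
    name: str
    real_users: bool            # does the method involve real users?
    uses_tasks: Optional[bool]  # None where Table 7.11 says "yes or no"
    environment: str            # "any", "lab", or "real work setting"


# Simplified from Table 7.11 Comparison of Evaluation Methods.
METHODS = (
    Method("Heuristic evaluation", False, False, "any"),
    Method("Guideline review", False, False, "any"),
    Method("Cognitive walkthrough", False, True, "any"),
    Method("TSSL based inspection", False, True, "any"),
    Method("Survey", True, None, "any"),
    Method("Interview", True, False, "any"),
    Method("Lab controlled experiment", True, True, "lab"),
    Method("Field study with observation and monitoring", True, False, "real work setting"),
)


def candidates(need_real_users=None, need_tasks=None, environment=None):
    """Return names of methods matching every constraint that is not None."""
    result = []
    for m in METHODS:
        if need_real_users is not None and m.real_users != need_real_users:
            continue
        # A method whose uses_tasks is None matches either task requirement.
        if need_tasks is not None and m.uses_tasks is not None and m.uses_tasks != need_tasks:
            continue
        # "any" environment fits every requested setting.
        if environment is not None and m.environment not in ("any", environment):
            continue
        result.append(m.name)
    return result


if __name__ == "__main__":
    print(candidates(need_real_users=False, need_tasks=True))
    print(candidates(need_real_users=True, environment="lab"))
```

A filter like this only narrows the options; the table's other columns (lifecycle stage, system status, advantages, and disadvantages) and the issues listed for the evaluation plan still have to be weighed by the evaluation team.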
Standards
Table 7.12 Sources for HCI and Usability Related Standards (source of standards information: URL)
- Published ISO standards: www.iso.ch/projects/programme.html
- ISO national member bodies: www.iso.ch/addresse/membodies.html
- BSI: British Standards Institution: www.bsi.org.uk
- ANSI: American National Standards Institute: www.ansi.org
- NSSN: A National Resource for Global Standards: www.nssn.org
- TRUMP list of HCI and Usability Standards: www.usability.serco.com/trump/resources/standards.htm

Common Industry Format (CIF)
Common Industry Format (CIF): a standard method for reporting summative usability test findings. The type of information and level of detail required in a CIF report are intended to ensure that:
- Good practice in usability evaluation has been adhered to.
- There is sufficient information for a usability specialist to judge the validity of the results.
- If the test were replicated on the basis of the information given in the CIF report, it would produce essentially the same results.
- Specific effectiveness and efficiency metrics must be used.
- Satisfaction must also be measured.

According to NIST, the CIF can be used in the following fashion.
For purchased software:
- Require that suppliers provide usability test reports in CIF format.
- Analyze for reliability and applicability.
- Replicate within the agency if required.
- Use the data to select products.
For developed software (in house or subcontracted):
- Define measurable usability goals.
- Conduct formative usability testing as part of user interface design activities.
- Conduct a summative usability test using the CIF to ensure goals have been met.

Summary
- Evaluations are driven by the ultimate concerns of human-computer interaction. In this chapter, we presented four types of such concerns along the following four dimensions of human needs: ergonomic, cognitive, affective, and extrinsic motivational (usefulness).
- Evaluations should occur during the entire system development process, after the system is finished, and during the period the system is actually used.
- This chapter introduced several commonly used evaluation methods and compared and discussed their pros and cons.
- The chapter also provided several useful instruments and heuristics.
- Standards play an important role in practice, as discussed in the chapter. One particular standard, the Common Industry Format, is described, and the detailed format is given in the appendix.