Program Science: Definition, Components and Issues

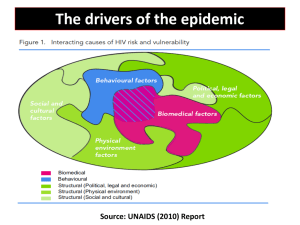

James Blanchard, MD, MPH, PhD
Director, Centre for Global Public Health
University of Manitoba

Presentation Overview
• Introduction to the “program science” concept
• Discuss briefly the components of program science
• Provide a few illustrative examples
• Note: HIV/STI prevention lens

Brief History… leading to Rome
• SOA and JB in N. Karnataka (2007):
  – Avahan (BMGF) supported large scale targeted intervention program
  – “How are strategic decisions being made, and why?”
  – “How are issues of heterogeneity between contexts being handled?”
  – “How does the project go to scale, and how is it managed?”
  – “What lessons are transferable to other contexts?”
• Chicago, 2008, National meeting of the STD Control Directors:
  – Introduce the Program Science concept, and examine a range of issues
• Philadelphia, 2008 (APHA):
  – Symposium on Program Science
• London, 2009 (ISSTDR):
  – Meeting with scientific colleagues and NIH proposed meeting
• New Delhi, Bethesda, etc…:
  – Meetings with academic colleagues and program / policy leaders

Some Conceptual Roots
• “Evidence-based Decision Making”
  – Decision makers actively using “evidence” in policy and program development
• “Knowledge Translation”
  – “… the process of supporting the uptake of health research…” [1]
• “Implementation Science”
  – “…the scientific study of methods to promote the systematic uptake of research findings and other evidence-based practices… to improve the quality and effectiveness of health services and care.” [2]

1. Canadian Institutes of Health Research
2. Eccles and Mittman. Implementation Science 2006;1.
Some Potential Pitfalls in Knowledge Translation in Public Health
• Knowledge production and synthesis often addresses a single component in a single sphere of knowledge:
  – Protective efficacy of a vaccine
  – Effectiveness of a specific behaviour change method
• “Evidence traps” for public health practice:
  – Focus on single interventions (“magic bullets”)
  – Ignore the epidemiological context
  – Ignore the social, cultural, political, economic context

“Knowledge Translation Bias”
• Inappropriate prioritization of interventions for which evidence has been generated and disseminated, often without regard to context
“We have some evidence in support of this intervention, so let’s do a lot of it”

Program Science: Two Aspects
1. A research domain or enterprise (“program” as adjective):
  – Theoretical base
  – Methodologies
2. An interactive process (“program” as noun):
  – Dynamic interaction between scientists, program and policy leaders, and implementers/practitioners

Intervention vs. Program
• Public Health Intervention
  – Specific technological or behavioural modality
  – Particular target group(s)
  – Focus on effectiveness, fidelity, coverage
• Public Health Program
  – Multiple components (interventions)
  – Resource allocation between components, and sharing across components
  – Emphasis on optimizing population level impact

“Program Science” for HIV/STI Prevention: A Component Model

| Spheres of Knowledge | Spheres of Practice | Intended Outcomes |
| --- | --- | --- |
| Epidemiology; Transmission dynamics; Policy analysis; Health systems research | Strategic Planning; Policy Development | Choose: the best strategy…; the right populations…; the right time… |
| Efficacy / effectiveness; Operations research | Program Implementation | Do: the right things…; the right way… |
| Surveillance; Monitoring/evaluation; Operations research; Health systems research | Program Management | Ensure: appropriate scale…; efficiency…; change when needed… |

Program Science Components – Interactive Model
[Figure: interactive model diagram. Labels: Strategic Planning; Epidemiology; Transmission Dynamics; Population Focus; Prevention Objectives; Program Management; Coverage; Scale-up; Quality; Impact; Operations Research; Health Services Evaluation; Intervention Design & Mix; Implementation Planning; Efficacy; Effectiveness]

Illustrative Issues
• Strategic planning
  – Rubrics for classifying epidemics and tailoring prevention strategies
• Program implementation
  – Assessing the effectiveness and generalizability of single and combined interventions
• Program management
  – Methods for scaling up and sustaining effective interventions

Strategic Planning ↔ Epidemiology and Transmission Dynamics
• Focused on providing science-based guidance for resource allocation for prevention:
  – The best strategic approaches
  – The right population focus
  – The right timing of interventions
• The challenges:
  – Epidemic heterogeneity
  – Understanding transmission dynamics and classifying epidemics appropriately

HIV Epidemic Remains Highly Heterogeneous, At the Global Level

Rubrics for HIV Epidemic Classification and Strategic Response
• “Prevalence-based” classification
• “Modes of Transmission” method for “Know Your Epidemic, Know Your Response”
• “Epidemic typology” approach

“Prevalence Based” (numerical proxy) Approach
• Based on HIV prevalence threshold rules:
  – <5% in all “high risk groups” → “Low Level”
  – >5% in at least one “high risk group” → “Concentrated”
  – >1% in the general population (usually antenatal women) → “Generalized”
• Prescribed program strategies depending on epidemic typology

“Know Your Epidemic…” and “Modes of Transmission” Approach
• A recent initiative focused on “modes of transmission” studies to understand “where incident HIV infections are occurring”
• Standardized method for allocating incident infections to behaviourally defined sub-groups
• Focus prevention strategy to “where new HIV infections are occurring”

“Epidemic Typology” Approach
• Focuses on classifying epidemics based on transmission dynamics
• Depends on an understanding of sexual structure and other transmission networks, in addition to epidemiological parameters
• Considers epidemic potential and trajectory, not just current status
• Focuses on assessing the relative contribution of identifiable high risk groups and networks to the overall epidemic in all groups

Uganda “Modes of Transmission” Study

Uganda “Know Your Epidemic, Know Your Response” Analysis

“Modes of Transmission” Analysis – India

| Group | Size Estimation | HIV Prevalence (%) | HIV Incidence (per 100,000) | Distribution of incident cases, 2008 |
| --- | --- | --- | --- | --- |
| FSW | 0.4% (F) | 20% | 437 | 5.3% |
| Clients | 3.0% (M) | 5% | 422 | 34.4% |
| Partners of clients | 1.5% (F) | 3% | 306 | 12.5% |
| Low activity GP | 83.8% (M, F) | 0.2% | 9 | 44.9% |
| High activity GP | 2% (M), 1% (F) | 0.6% | 36 | 2.8% |

“Modes of Transmission” – 3 Districts in India

| District | HIV prevalence in antenatal females | % of new infections in low activity GP |
| --- | --- | --- |
| Bagalkot | 2.1% | 38.4% |
| Shimoga | 1.0% | 57.9% |
| Varanasi | 0.25% | 77.2% |

Same Epidemic, Different Prevention Strategies?

Epidemic typology

Program Science Challenges
• Development of methods for classifying epidemics
• Development of methods for collecting key information:
  – Estimating the size of key populations
  – Measuring and describing sexual behaviours and networks
• Linking transmission dynamics to prevention priorities and resource allocation

Issue – Assessing the effectiveness of interventions
• More than 30 randomized controlled trials with HIV incidence as an end point:
  – Behavioural (partner reduction, condom use)
  – Technological (microbicides)
  – Biological (male circumcision)
• Only 4 had “positive” results (i.e. reduced HIV incidence):
  – 3 individual RCTs on male circumcision
  – 1 randomized community trial on enhanced STD control (2 subsequent trials contradicted the results)
• Possible explanations…
  – Intervention has no efficacy
  – Intervention couldn’t be implemented effectively, or at sufficient scale
  – Wrong intervention for that epidemic at that time

“Research driven” approach to intervention design and assessment
Theoretical Basis → Intervention Design → Demonstrate efficacy / effectiveness → Implement and Scale Up With “Fidelity”

Constraints of “research driven” models
• Complexity and heterogeneity of transmission dynamics:
  – Designing and assessing the right intervention for a particular context
• Secular trends and “co-interventions”:
  – Isolating a particular intervention or intervention package
• Cost
• Ethics

Differing Research Paradigms: “GRIP” to “GROP” [1]
• “GRIP” – Getting Research Into Policy
• “GROP” – Getting Research Out of Practice

1. Parkhurst J, Weller I, Kemp J. Getting research into policy, or out of practice, in HIV? Lancet 2010;375:1414-5.

Pathways of activities and evidence flow into, and out of, policy and practice [1]
[Figure: activity flow and evidence flow along an evidence continuum, from clear, “reliable” evidence with direct cause-effect to evidence gaps, unclear causality, complex interventions and multiple interacting factors. GRIP: policy formulation; implementation; programme evaluation. GROP: (1) development of hypotheses and research questions; designing interventions; implementation; (2, 3) operational research and process evaluation; (4) outcome evaluation.]

1. Parkhurst et al. Lancet 2010.

Four Challenges within the GROP Model (Parkhurst et al.)
1. Better and more explicit hypothesis development:
  – Reviewing existing knowledge and explaining assumptions
2. Improved operational research:
  – Learning how complex interventions are implemented for scaling up what works in particular contexts
3. Increasing the use of process evaluation:
  – Investigate causal mechanisms by which an intervention works for particular groups in given settings
4. Proper outcome evaluation

Issues in Program Management and Scale Up
• Contrasting conceptual approaches to scaling up
• Management processes and models for scaling up

Conceptual Approaches to Scaling Up… (from David Peters et al.)

| Domain | Scaling up to reach the MDGs | Scaling up small scale innovations |
| --- | --- | --- |
| Validity of strategies | Assumption of external validity of strategies. Search for standardized approaches that can be generalized within and across countries. | Assumption that approaches should be determined contextually. Value on internal validity. What works best depends on the context. |
| Planning perspective | Create better blueprints and targets that can be locally adapted. | Learn by doing. Plan with key stakeholders, and link knowledge with action. |
| Implementation perspectives | Focus on “accelerating” implementation to meet well-defined goals and deadlines. | Slower, phased implementation… incremental expansion based on concurrent, participatory research and adaptation. |
| Monitoring and evaluation methodologies | Focus on status of the problem. | Focus on problem solving. |
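Two of the strategic-planning rubrics described earlier reduce to simple computations, and a minimal Python sketch may make them concrete. `classify_epidemic` applies the “Prevalence Based” threshold rules, and `incident_case_shares` allocates incident infections across sub-groups in the spirit of the “Modes of Transmission” method. The function names, the order in which the rules are checked, the treatment of values exactly at a threshold, and the equal male/female population split used to convert the India size estimates are all assumptions made for illustration; none of them is stated in the slides.

```python
from typing import Dict, Mapping, Tuple


def classify_epidemic(hrg_prevalence: Mapping[str, float],
                      general_prevalence: float) -> str:
    """Classify an epidemic from HIV prevalence values given in percent.

    Assumptions (not stated in the slides): the "Generalized" rule is
    checked first, and a value exactly at a threshold stays in the
    lower category.
    """
    if general_prevalence > 1.0:
        return "Generalized"
    if any(p > 5.0 for p in hrg_prevalence.values()):
        return "Concentrated"
    return "Low Level"


def incident_case_shares(
        groups: Mapping[str, Tuple[float, float]]) -> Dict[str, float]:
    """Return each group's percent share of new infections.

    `groups` maps a group name to (population fraction, incidence rate).
    Each share is proportional to population fraction times incidence.
    """
    weighted = {name: size * incidence
                for name, (size, incidence) in groups.items()}
    total = sum(weighted.values())
    if total <= 0:
        raise ValueError("total weighted incidence must be positive")
    return {name: 100.0 * w / total for name, w in weighted.items()}


# India table values: incidence per 100,000 as shown on the slide.
# Population fractions ASSUME men and women are each half of the adult
# population, e.g. FSW = 0.4% of women = 0.002 of all adults.
INDIA_GROUPS = {
    "FSW": (0.002, 437),
    "Clients": (0.015, 422),
    "Partners of clients": (0.0075, 306),
    "Low activity GP": (0.838, 9),
    "High activity GP": (0.010 + 0.005, 36),
}
```

Calling `incident_case_shares(INDIA_GROUPS)` yields shares that sum to 100%, with the low activity general population contributing the largest share. The values need not reproduce the slide's distribution column exactly, because the slide gives neither the sex ratio nor unrounded inputs.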
Management Models and Processes – Example, Targeted Interventions
• Management by review and audit:
  – Established guidelines and norms
  – Generic episodic training programs
  – Monitor programs based on “effort based” targets
  – Periodic performance reviews and audits
  – “Red light, Orange light, Green light” performance assessments and funding decisions
• Management through supportive supervision:
  – Established intervention package, with local adaptation
  – Ongoing training, technical assistance, knowledge sharing among implementers
  – Monitor based on “outcome based” targets
  – Supportive supervision with active joint problem solving
  – Participatory performance assessments

Issues in Program Evaluation
• Expanding methodological base:
  – Complex interventions
  – Non-randomized methods
• Understanding internal and external validity:
  – How does context influence the evaluation method and interpretation?
• Specifying the level of evidence required for different types of interventions:
  – E.g. prevention technologies vs. implementation models

Other Issues
• Capacity building for “program science”
• Integrating knowledge / science domains and improving knowledge translation processes

Final thoughts… (from Michael Gibbons)
• “Socially robust knowledge”
  – “… can only be produced by much more sprawling socio/scientific constituencies.”
  – “… is superior to reliable knowledge both because it has been subjected to more intensive testing and retesting in many more contexts… and also because of its malleability and connective capability.”
  – “… is the product of an intensive (and continuous) interaction between data and other results, between people and environments, between applications and implications.”

Thank You