Psychological Research and Scientific Method pt3

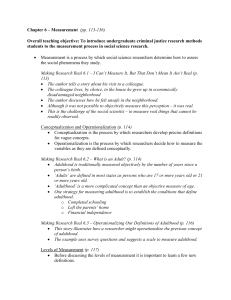

Designing psychological investigations

Selection & application of appropriate research methods:
• What is my hypothesis (directional or non-directional)?
• What is my research aim?
• Should I use quantitative or qualitative methods?

Key considerations when designing psychological investigations:
1. Choosing an appropriate research method.
2. Deciding upon the number of participants (Ps).
3. Using an appropriate sampling method.
4. How to debrief Ps.
5. How to record the data & which techniques to use.

Notes on each consideration:
1. Is the data qualitative or quantitative? If qualitative, think transcripts; if quantitative, think experiment.
2. Consider finances & practicalities.
3. The target population should be identified & a representative sample should be used.
4. Always consider ethics: is deception necessary? Should Ps know you are there (observations!)?
5. Numerical analysis, a written record, video of interviews, or a combination? How should the data be coded? Should any results be omitted?

Pilot study: why is this necessary?
• This is an important step in designing a good research study. It is characterised as 'a small-scale trial run of a specific research investigation in order to test out the planned procedures & identify any flaws & areas for improvement'.
• It provides feedback to clear up any issues before the main study.

The relationship between researcher & participants
Studying complex behaviour can create several 'social' issues that can affect the outcome of the investigation, such as:
1. Demand characteristics: Ps behave in a way that they perceive will help or distort the investigation.
2. Participant reactivity: the faithful/faithless participant, evaluation apprehension or social desirability bias. Ps change their behaviour because they think they are being evaluated in a positive/negative way (they try harder/not hard enough!).
3. Investigator effects: undesired effects of the researcher's expectations or behaviour on participants or on the interpretation of data.

So I don't have to retype it!
• See AS Research methods for information on:
   • Experimental design
   • Extraneous variables
   • Methodology
   • Ethics
   • Sampling techniques
• You should be able to identify, explain and give at least two advantages & two disadvantages of all the above!

Issues of reliability & validity

Types of reliability
1. Internal: consistency of measures within a test; no lone ranger gets in and messes with the investigation!
2. External: consistency of measures from one occasion to another. Can a test be relied upon to generate the same or similar results?
3. Researcher reliability: the extent to which the researcher acts entirely consistently when gathering data in an investigation. Also known as experimenter reliability in experimental conditions & inter-rater/inter-observer reliability.

Assessing researcher reliability (intra)
• Intra-researcher reliability is achieved if the investigator has performed consistently.
• This is achieved when scoring/measuring on more than one occasion and receiving the same or similar results.
• If using observations or other non-experimental methods, this is achieved by comparing two sets of data obtained on separate occasions and observing the degree of similarity in the scores.

Assessing researcher reliability (inter)
• Researchers need to act in similar ways.
• All observers need to agree, so they record their data independently and then correlate their scores to establish the degree of similarity!
• Inter-observer reliability is achieved if there is a statistically significant positive correlation between the scores of the different observers.

Improving researcher reliability
• Variability brings about extraneous variables, so it is important to ensure high intra- and inter-researcher reliability by:
• Careful design of a study, e.g. use of piloting, as this can improve precision and make the investigation less open to interpretation.
• Careful training of researchers in procedures & materials, so variability among researchers can be reduced.
Operational definitions should be used and understood by all, and researchers should know how to carry out procedures and record data.

Assessing & improving internal & external reliability
• Split-half method (internal reliability): after the data have been collected, the test is split into two halves. The two sets of scores are then correlated; if the result is statistically significant, this indicates reliability. If it is not significant, items are removed & retesting occurs. The overall aim is a correlation of at least +.8, indicating internal reliability.
• Test-retest method (external reliability): the same test is presented on different occasions, with no feedback after the first presentation. The time between presentations matters too: it can't be too short or too long! If the correlation between the two sets of scores is statistically significant, the test is deemed stable; if not, items are checked for consistency & reliability is retested to see whether a significant correlation can be obtained.

Techniques to assess & improve internal validity
• Face validity: on the surface of things, does the investigation test what it claims to be testing?
• Content validity: does the content of the test cover the whole topic area? A detailed, systematic examination of all components is carried out until it is agreed that the content is appropriate.
• Concurrent validity: the criterion measures are obtained at the same time as the test scores. This indicates the extent to which the test scores accurately estimate an individual's current state with regard to the criterion. E.g. a test that measures levels of depression would be said to have concurrent validity if it measured the current levels of depression experienced by the participant.
• Predictive validity: the criterion measures are obtained at a time after the test. Examples of tests with predictive validity are career or aptitude tests, which help to determine who is likely to succeed or fail in certain subjects or occupations.

Just a little extra on ethics!
There are several ethics committees:
Departmental ethics committee (DEC)
• The DEC approves undergraduate & postgraduate proposals.
• At least 3 members should be involved who do not have a vested interest in the research but have the appropriate expertise to review the proposal.
• They approve or reject the proposal, or ask for modifications if they deem them necessary.
• They may refer the proposal to the IEC if researchers from other disciplines are needed.

Institutional ethics committee (IEC)
• Formed by psychologists and researchers from other disciplines.
• They have wider expertise & practical knowledge of possible issues (e.g. law or insurance).
• The chair of the IEC will approve or reject the proposal, or ask for resubmission after modification.

External ethics committee (EEC)
1. Some research cannot be approved by either the DEC or the IEC and will need the EEC.
2. This is likely to be the case for proposals involving Ps from the NHS etc.
3. The National Research Ethics Service (NRES) is responsible for the approval process.
4. The EEC consists of experts with no vested interest in the research.

• Monitoring the guidelines serves as a final means of protecting Ps. If psychologists are found to be contravening guidelines, they can be suspended, have their licence to practise removed, or be expelled from the society (BPS).
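The inter-observer, split-half and test-retest checks in these notes all come down to correlating two sets of scores. Below is a minimal Python sketch of that idea. It assumes Pearson's r as the correlation (Spearman's rho is a common alternative for ranked or ordinal data), splits the test into odd- and even-numbered items (one of several possible ways to halve a test), and uses the +.8 benchmark from the split-half notes in place of a formal significance test, which this sketch does not compute. The function names and example scores are hypothetical, for illustration only.

```python
from math import sqrt

# Benchmark quoted in the notes for the split-half method. Used here as a
# simple stand-in for a formal significance test (an assumption of this sketch).
THRESHOLD = 0.8


def pearson_r(xs, ys):
    """Pearson's correlation coefficient between two equal-length lists of scores."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length lists with at least two scores each")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of products of deviations, and each list's sum of squared deviations.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    ss_x = sum((x - mean_x) ** 2 for x in xs)
    ss_y = sum((y - mean_y) ** 2 for y in ys)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("scores must vary to compute a correlation")
    return cov / sqrt(ss_x * ss_y)


def split_half_correlation(item_scores):
    """Split-half (internal reliability), using a hypothetical odd/even split.

    item_scores holds one list of item scores per participant. Each participant's
    odd-numbered items (1st, 3rd, ...) and even-numbered items (2nd, 4th, ...)
    are totalled separately, and the two sets of totals are correlated.
    """
    odd_totals = [sum(items[0::2]) for items in item_scores]
    even_totals = [sum(items[1::2]) for items in item_scores]
    return pearson_r(odd_totals, even_totals)


def retest_correlation(first_scores, second_scores):
    """Test-retest (external reliability): same Ps, same test, two occasions."""
    return pearson_r(first_scores, second_scores)


def inter_observer_correlation(observer_a, observer_b):
    """Inter-observer reliability: two observers' independent tallies of the same behaviour."""
    return pearson_r(observer_a, observer_b)


def is_reliable(r, threshold=THRESHOLD):
    """True if the correlation reaches the benchmark."""
    return r >= threshold


if __name__ == "__main__":
    # Hypothetical scores for five Ps who sat the same test on two occasions.
    first = [10, 12, 15, 18, 20]
    second = [11, 12, 14, 19, 21]
    r = retest_correlation(first, second)
    print(round(r, 2), is_reliable(r))  # prints: 0.98 True
```

The same `pearson_r` helper serves all three checks because each one only differs in where the two sets of scores come from: two halves of one test, two sittings of the same test, or two observers watching the same behaviour.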