Web Caching

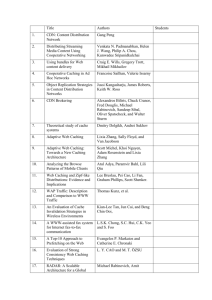

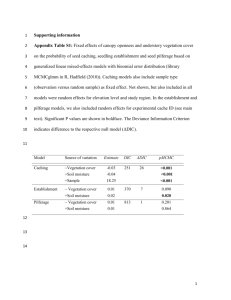

An Overview of Proxy Caching Algorithms
Haifeng Wang

Web Caching
• Internet traffic
• Load on web servers
• Access delay
Web caching provides an efficient remedy to the latency and network traffic problems by bringing documents closer to clients.

Web Caching Location
• Client caching
• Server caching
• Proxy caching (the most widely used form)
Proxy caching has many benefits: it reduces network traffic, the average latency of fetching Web documents, and the load on busy Web servers.

Web Caching Replacement Algorithm
• To use the cache effectively, an informed decision has to be made about which document to evict when the cache is saturated.
• The replacement algorithm is key to the effectiveness of a proxy cache; a good one can yield a high hit ratio.
• It differs from page replacement. Why?

Characteristics of Web Caching
• Web caching is variable-size caching.
• The cost of retrieving missed Web documents from their original servers depends on many factors.
• Web documents are frequently updated.
• Web documents have Zipf-like popularity.

Key Parameters
Most proxy replacement policies consider four key parameters in their design:
• Frequency information
• Recency information
• Document size
• Network cost

Classification of caching policies according to the traffic information they consider
(Figure: policies arranged by their use of frequency information and recency information. Policies shown: LFU, Hyper-G, LRU, CERA, LRV, SLRU, GDS, Log2(SIZE), LRU-MIN, LRU-threshold, Hybrid, SIZE.)

Replacement Algorithm (1)
1) LRU (Least-Recently-Used)
LRU evicts the least recently accessed document first.
2) LRU-Threshold
LRU-Threshold works the same way as LRU, except that documents larger than a given threshold are never cached.
3) LRU-MIN
LRU-MIN gives preference to small documents to stay in the cache.

Replacement Algorithm (2)
4) LFU (Least-Frequently-Used)
LFU evicts the least frequently accessed document first.
5) Hyper-G
Hyper-G is an extension of the LFU policy in which ties are broken according to the last access time.
6) LLF (Lowest-Latency-First)
LLF uses the document download time as its primary key; the document with the lowest download time is evicted first.

Replacement Algorithm (3)
7) Size
Size evicts the largest documents first.
8) Log2-Size
Log2-Size uses [log2(size)] as the primary key, so large documents are evicted first, and the last access time as a secondary key.

Replacement Algorithm (4)
9) GDS (GreedyDual-Size)
The GDS algorithm associates a value H with each cached page p. H is set to cost/size when a document is first brought into the cache. When a replacement needs to be made, the page with the lowest H value, minH, is replaced, and then all pages reduce their H values by minH. If a page is accessed again, its H value is restored to cost/size.

Replacement Algorithm (5)
10) CERA (Cost-Effective-Replacement-Algorithm)
CERA assigns each object a benefit value (BV) that represents its importance in the cache. When the cache is full, the object with the lowest BV is replaced.
BV = (Cost / Size) * Pr + Age
11) Hybrid
Hybrid aims to reduce total latency. For each document it computes a function designed to capture the utility of retaining that document in the cache. The document with the smallest function value is evicted.

Replacement Algorithm (6)
12) LRV (Lowest-Relative-Value)
LRV includes cost and size in the calculation of a value that estimates the utility of keeping a document in the cache, and it evicts the document with the lowest value. The calculation of the value is based on extensive empirical analysis of trace data.
13) SLRU (Size-Adjusted LRU)
Documents are ordered by a ratio calculated from frequency, cost, and size; the document with the lowest ratio is evicted first.

Performance Issues
• There is no conclusion on which algorithm a proxy should use.
• Document size is significant and needs to be incorporated into the design of a replacement policy.
• A good algorithm adjusts dynamically to changes in the workload characteristics.
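
The GreedyDual-Size (GDS) rule described in this overview is concrete enough to sketch in code. The following is a minimal illustration, not a reference implementation: the class name `GreedyDualSizeCache`, the byte-based `capacity`, and the default `cost=1.0` are all assumptions made for the example. It follows the literal description (subtract minH from every remaining page on eviction) rather than any optimized variant.

```python
class GreedyDualSizeCache:
    """Toy GreedyDual-Size cache (hypothetical names, illustrative only)."""

    def __init__(self, capacity):
        self.capacity = capacity  # assumed unit: bytes the cache may hold
        self.used = 0             # bytes currently stored
        self.entries = {}         # key -> [H, size, cost]

    def access(self, key, size, cost=1.0):
        """Record an access; return True on a hit, False on a miss."""
        if key in self.entries:
            entry = self.entries[key]
            entry[0] = entry[2] / entry[1]  # restore H to cost/size on a hit
            return True
        if size > self.capacity:
            return False  # assumption: a document that cannot fit is not cached
        while self.used + size > self.capacity:
            self._evict()
        self.entries[key] = [cost / size, size, cost]  # H = cost/size on insert
        self.used += size
        return False

    def _evict(self):
        """Remove the page with the lowest H and age the rest by minH."""
        victim = min(self.entries, key=lambda k: self.entries[k][0])
        min_h, victim_size, _ = self.entries.pop(victim)
        self.used -= victim_size
        for entry in self.entries.values():
            entry[0] -= min_h  # all remaining pages reduce H by minH
```

With a capacity of 100 and equal costs, accessing documents of sizes 60, 30, and 40 in that order evicts the 60-byte document when the third one arrives, because it has the lowest cost/size. Scanning every entry on eviction keeps the sketch close to the description; a practical cache would more likely use a priority queue.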