

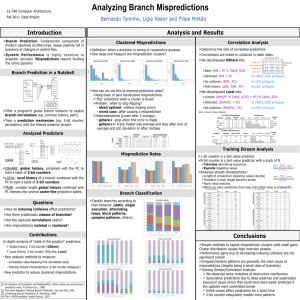

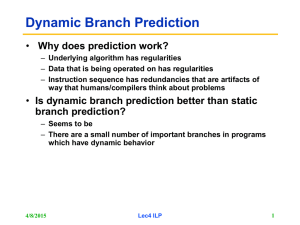

Dynamic History-Length Fitting: A Third Level of Adaptivity for Branch Prediction
Toni Juan, Sanji Sanjeevan, Juan J. Navarro
Department of Computer Architecture, Universitat Politècnica de Catalunya
ISCA '98

Presented by Danyao Wang
ECE1718, Fall 2008

Overview
• Branch prediction background
• Dynamic branch predictors
• Dynamic history-length fitting (DHLF)
  – Without context switches
  – With context switches
• Results
• Conclusion

Why branch prediction?
• Superscalar processors with deep pipelines
  – Intel Core 2 Duo: 14 stages
  – AMD Athlon 64: 12 stages
  – Intel Pentium 4: 31 stages
• Many cycles pass before a branch is resolved
  – Waiting for the outcome wastes those cycles
  – It would be better to do useful work in the meantime
• Branch prediction!

What does it do?
    sub r1, r2, r3
    bne r1, r0, L1
    add r4, r5, r6
    …
L1: add r4, r7, r8
    sub r9, r4, r2
[Pipeline timing diagram: the bne is fetched and predicted taken, so fetch continues from L1 and the instructions there execute speculatively. When the branch is resolved, the prediction is validated: correct.]

What happens when mispredicted?
(Same code sequence as above.)
[Pipeline timing diagram: the bne is predicted taken and the instructions from L1 execute speculatively. When the branch is resolved, the prediction turns out to be incorrect and the speculatively executed instructions are squashed.]

How to predict branches?
• Statically, at compile time
  – Simple hardware
  – Not accurate enough
• Dynamically, at execution time
  – Hardware predictors, in order of increasing complexity and accuracy:
    • Last-outcome predictor
    • Saturating counter predictor
    • Pattern predictor
    • Tournament predictor

Last-Outcome Branch Predictor
• Simplest dynamic branch predictor
• Branch prediction table with 1-bit entries
• Intuition: history repeats itself
[Diagram: the lower N bits of the PC index a branch prediction table with 2^N entries. Each 1-bit entry holds the prediction (T or NT); it is read at fetch and written on a misprediction.]

Saturating Counter Predictor
• Observation: branches are highly bimodal
• n-bit saturating counter
  – Provides hysteresis
  – n-bit entries in the branch prediction table
[State diagram, e.g. a 2-bit bimodal predictor: states 00 and 01 predict not taken, states 10 and 11 predict taken. A taken outcome (T) moves the counter toward 11 and a not-taken outcome (N) moves it toward 00. 00 and 11 are the strong-bias states; 01 and 10 are the weak-bias states.]

Pattern Predictors
• Nearby branches often correlate
• Look for patterns in the branch history
  – Branch History Register (BHR): the m most recent branch outcomes
[Diagram, two-level predictor: a function f combines the lower n bits of the PC with the m-bit BHR into an n-bit index into a branch prediction table of 2^n saturating counters.]

Tournament Predictor
• No one-size-fits-all predictor
• Dynamically choose among different predictors
[Diagram: the PC feeds predictors A, B, and C; a chooser (metapredictor) selects which prediction to use.]

What is the best predictor?
[Figure: predictor comparison, with the optimal point and the "better" direction marked.]

Observations
• Predictor performance depends on history length
• The optimal history length differs from program to program
• Predictors with a fixed history length fall short of their potential
• … so why not a dynamic history length?
Dynamic History-Length Fitting (DHLF)

Intuition
• Tournament predictor
  – Picks the best of many predictors
  – Spatial multiplexing
  – Area cost …
• DHLF: time multiplexing
  – Try different history lengths during execution
  – Adapt the history length to the code
  – Hope to find the best one

2-Level Predictor Revisited
[Diagram: as in the pattern predictor, f combines the lower n bits of the PC with the m-bit BHR into an n-bit index into a branch prediction table of 2^n saturating counters. The index length n is predetermined; the history length m is figured out dynamically.]
• Index = f(PC, BHR)
• gshare: f = XOR, m < n
• 2-bit saturating counters

DHLF Approach
• Current history length
• Best history length so far
• Misprediction counter
• Branch counter
• Table of measured misprediction rates (MR) per history length
  – Initialized to zero
• Sampling at fixed intervals (step size)
  – Try a new length and measure its MR
  – Adjust the length if it is worse than the best seen so far
  – Move to a random length if the length has not changed for a while
    • Avoids local minima

DHLF Examples
[Figure: index = 12 bits, step = 16K; the optimal history length is marked.]

Experimental Methodology
• SPECint95
• gshare and dhlf-gshare
• Trace-driven simulation
• Up to 200M conditional branches simulated
• Branch history register and pattern history table updated immediately with the true outcome

DHLF Performance
[Figure: performance results; "Better" marks the direction of improvement.]
• Area overhead
  – Index length = 10, step size = 16K: overhead = 7%
  – Index length = 16, step size = 16K: overhead = 0.02%

Optimization Strategies
• Step size
  – Small: learns faster, but must be big enough for meaningful misprediction statistics
  – Big: learns slower
• Change length incrementally
  – Test as many lengths as possible
• Warm-up period
  – No MR counting for 1 interval after a length change

Context Switches
• Branch prediction table trashed periodically
• Lower prediction accuracy immediately after a context switch
• Context-switch frequency affects the optimal history length

Impact on Misprediction Rate
• Context-switch distance: number of branches executed between context switches
[Figure: gshare, index = 16 bits; "Better" marks the direction of improvement.]

Coping with Context Switches
• Upon a context switch
  – Discard the current misprediction counter
  – Save the current predictor data:
    • misprediction table
    • current history length
    • approx. 221 bits for a 16-bit index, step = 16K, and a 13-bit misprediction counter
• Returning from a context switch
  – Warm-up: no MR counting for 1 interval

DHLF with Context Switches
[Figure: misprediction rate of dhlf-gshare (step value = 16K) versus gshare with every possible history length; "Better" marks the direction of improvement. The branch prediction table is flushed every 70K instructions to simulate a context switch.]

Contributions
• Dynamically finds near-optimal history lengths
• Performs well for programs with different branch behaviours
• Performs well under context switches
• Can be applied to any two-level branch predictor
• Small area overhead

Backup Slides

DHLF Performance: SPECint95
[Figure: dhlf-gshare, step size = 16K, compared with all possible history lengths (no context switches); "Better" marks the direction of improvement.]

DHLF with Context Switches
[Figure: dhlf-gshare; step size = 16K; context-switch distance = 70K.]

dhlf-gskew
[Figure: step value = 16K, compared with all history lengths for gskew.]

dhlf-gskew with Context Switches
[Figure: step size = 16K; context-switch distance = 70K.]

DHLF Structure
[Diagram, DHLF data structure: a misprediction table with N entries (one per history length), a pointer to the entry with the minimum misprediction count, a pointer to the entry for the current history length, a branch counter, and a misprediction counter. Flow: start from an initial history length and run an interval of "step" dynamic branches; then check whether the current misprediction count is greater than the minimum achieved. If yes, adjust the history length; in either case, run the next interval.]

Questions
• Is a fixed context-switch distance realistic?
• Does updating the PHT immediately with the true outcome affect the results?
  – Previous studies show little impact
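The DHLF Approach, Optimization Strategies, and DHLF Structure slides can be read as the loop below, sketched in Python on top of gshare. This is a simplified illustration, not the authors' implementation: the initial history length (n // 2), the number of unchanged intervals before a random jump (`stale_limit`), breaking ties toward the nearest length, and all class and method names are hypothetical choices the slides do not specify. Because the misprediction table starts at zero, untested lengths look best and are tried before a measured, worse length is revisited. The `save_context`/`restore_context` methods follow the "Coping with Context Switches" slide.

```python
import random
from typing import List, Tuple


class DhlfGshare:
    """Sketch of dhlf-gshare: gshare whose history length m is refit every `step` branches."""

    def __init__(self, index_bits: int = 12, step: int = 16 * 1024,
                 stale_limit: int = 8, seed: int = 0) -> None:
        assert index_bits >= 1 and step >= 1
        self.n, self.step = index_bits, step
        self.stale_limit = stale_limit          # hypothetical "has not changed for a while"
        self.rng = random.Random(seed)
        self.pht = [1] * (1 << index_bits)      # 2-bit saturating counters
        self.bhr = 0
        self.m = index_bits // 2                # hypothetical initial history length
        self.mr_table = [0] * (index_bits + 1)  # misprediction count per length 0..n, starts at 0
        self.branches = 0                       # branch counter for the current interval
        self.misses = 0                         # misprediction counter for the current interval
        self.warming_up = False                 # True: do not record the current interval
        self.stable_intervals = 0

    def _index(self, pc: int) -> int:
        history = self.bhr & ((1 << self.m) - 1)
        return (pc ^ history) & ((1 << self.n) - 1)

    def predict(self, pc: int) -> bool:
        return self.pht[self._index(pc)] >= 2

    def update(self, pc: int, taken: bool) -> None:
        i = self._index(pc)
        if (self.pht[i] >= 2) != taken:
            self.misses += 1
        self.pht[i] = min(3, self.pht[i] + 1) if taken else max(0, self.pht[i] - 1)
        self.bhr = ((self.bhr << 1) | int(taken)) & ((1 << self.n) - 1)
        self.branches += 1
        if self.branches == self.step:
            self._end_interval()

    def _change_length(self, new_m: int) -> None:
        self.m = new_m
        self.warming_up = True                  # warm-up: skip one interval after a change
        self.stable_intervals = 0

    def _end_interval(self) -> None:
        count, self.branches, self.misses = self.misses, 0, 0
        if self.warming_up:
            self.warming_up = False
            return
        self.mr_table[self.m] = count
        # Lowest recorded count; ties go to the length closest to m (incremental moves).
        best = min(range(self.n + 1), key=lambda k: (self.mr_table[k], abs(k - self.m)))
        if self.mr_table[best] < count:         # current length is worse than the best seen
            self._change_length(best)
        else:
            self.stable_intervals += 1
            if self.stable_intervals >= self.stale_limit:  # random jump to escape local minima
                others = [k for k in range(self.n + 1) if k != self.m]
                self._change_length(self.rng.choice(others))

    def save_context(self) -> Tuple[List[int], int]:
        """On a context switch: discard the partial interval, save table and length."""
        self.branches = self.misses = 0
        return list(self.mr_table), self.m

    def restore_context(self, state: Tuple[List[int], int]) -> None:
        """On return: reload the saved state and skip measuring one interval."""
        self.mr_table, self.m = list(state[0]), state[1]
        self.branches = self.misses = 0
        self.warming_up = True


def run(predictor: DhlfGshare, pc: int, outcomes: List[bool]) -> int:
    """Replay one branch's outcomes and count mispredictions."""
    misses = 0
    for taken in outcomes:
        if predictor.predict(pc) != taken:
            misses += 1
        predictor.update(pc, taken)
    return misses


if __name__ == "__main__":
    alternating = [i % 2 == 0 for i in range(10_000)]
    p = DhlfGshare(index_bits=4, step=64)
    print(run(p, 0x40, alternating), "mispredictions; final history length", p.m)
```

With a small step (64) and a 4-bit index, replaying an alternating branch lets you watch the history length move away from lengths that mispredict and mostly stay on one that captures the pattern; the default step of 16K matches the value used in the slides.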