Presentation

advertisement

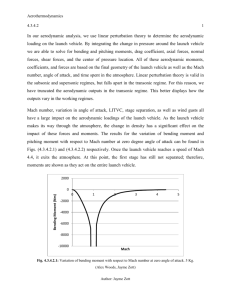

Machine-Independent Virtual Memory Management for Paged Uniprocessor and Multiprocessor Architectures
Jordan Acedera, Ishmael Davis, Justin Augspurger, Karl Banks

Introduction
• Many operating systems do not support virtual memory
• Mach goals:
  – Exploit the hardware/software relationship
  – Portability
  – Handling of page faults
• Mach extends UNIX support
• Compatibility

Compatibility
• Mach runs on the following systems:
  – VAX processors
  – IBM RT PC
  – SUN 3
  – Encore Multimax
  – Sequent Balance 21000

Mach Design
• Basic abstractions:
  – Task
  – Thread
  – Port
  – Message
  – Memory object
• Basic operations:
  – Allocate memory
  – Deallocate memory
  – Set protection status
  – Specify inheritance
  – Create and manage memory objects
• Limits of Mach design

The Implementation of Mach Virtual Memory
Presented by: Karl Banks

Data Structures
• Resident page table: keeps track of Mach pages residing in main memory (machine independent)
• Address map: a doubly linked list of map entries, each of which maps a range of virtual addresses to a region of a memory object
• Memory object: a unit of backing storage, such as a disk file or a swap area
• Pmap: the memory-mapping data structure used by the hardware (machine dependent)

Pager
• A managing task; one is associated with each memory object
• Handles page faults and page-out requests outside of the kernel
• If the kernel sends a pageout request to a user-level pager, the pager can decide which page to swap out
• If the pager is uncooperative, the default pager is invoked to perform the necessary pageout

Mach Implementation Diagram
[Figure: the virtual address space of a process, backed by the default pager (swap area) and an external pager (file system)]

Sharing Memory: Copy-on-Write
• Case: tasks only read
  – Supports memory sharing across tasks
• Case: a task writes “copied” data
  – A new page is allocated only to the writing task
  – A shadow object is created to hold the modified pages

Sharing Memory: Shadow Objects
• Collect the modified pages resulting from copy-on-write page faults
• Initially empty, with a pointer to the shadowed object
• Contain only modified pages and rely on the original object for the rest
• Can form a chain of shadow objects
• The system proceeds through the chain until the page is found
• The complexity of long chains is handled by garbage collection

Sharing Memory: Read/Write
• The copy-on-write data structure is not appropriate here (read/write sharing could involve mapping several memory objects)
• A level of indirection is needed for a shared object

Sharing Memory: Sharing Maps
• Identical in structure to an address map; points to the shared object
• Address maps can point to sharing maps as well as to memory objects
• Map operations are then applied simply to the sharing map
• Sharing maps can be split and merged, so there is no need to reference other maps

Virtual Memory Management Part 2
Jordan Acedera, Karl Banks, Ishmael Davis, Justin Augspurger

Porting Mach
• First installed on VAX architecture machines
• By May 1986 it was available for use on IBM RT PC, SUN 3, Sequent Balance, and Encore Multimax machines
• A year later, 75 IBM RT PCs were running Mach
• All 4 machine types took about the same time to port
• Most of the time was spent debugging the compilers and device drivers
• The pmap module implementation took an estimated 3 weeks
  – A large part of that time was spent understanding the code

Assessing Various Memory Management Architectures
• Mach does not need the hardware data structures to manage virtual memory
• Mach needs a malleable TLB, with little code to be written for the pmap
• The pmap module manipulates the hardware-defined in-memory structures, which control the state of the internal MMU TLB
  – Even so, each hardware architecture has had issues on both uniprocessors and multiprocessors
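The address map from the Data Structures slide can be sketched as a sorted, doubly linked list of entries. Each entry maps a half-open range [start, end) of virtual addresses to an offset inside a memory object. This is a minimal Python model built on assumptions: the class names `MemoryObject`, `MapEntry`, and `AddressMap` are hypothetical, and lookup is a plain linear walk. Mach itself is written in C and keeps more per-entry state (protection, inheritance) than is shown here.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional, Tuple


# Hypothetical sketch: class names are illustrative, not Mach's kernel API.
@dataclass
class MemoryObject:
    """Stand-in for a unit of backing storage (a file or a swap area)."""
    name: str


@dataclass
class MapEntry:
    """Maps virtual addresses [start, end) to `obj`, beginning at `obj_offset`."""
    start: int
    end: int
    obj: MemoryObject
    obj_offset: int
    # Links are excluded from repr/eq so the linked structure does not recurse.
    prev: Optional[MapEntry] = field(default=None, repr=False, compare=False)
    next: Optional[MapEntry] = field(default=None, repr=False, compare=False)


class AddressMap:
    """Doubly linked list of map entries, kept sorted by start address."""

    def __init__(self) -> None:
        self.head: Optional[MapEntry] = None

    def insert(self, entry: MapEntry) -> None:
        # Find the neighbours the new entry belongs between.
        prev, cur = None, self.head
        while cur is not None and cur.start < entry.start:
            prev, cur = cur, cur.next
        if (prev is not None and prev.end > entry.start) or (
            cur is not None and entry.end > cur.start
        ):
            raise ValueError("range overlaps an existing map entry")
        entry.prev, entry.next = prev, cur
        if prev is None:
            self.head = entry
        else:
            prev.next = entry
        if cur is not None:
            cur.prev = entry

    def lookup(self, vaddr: int) -> Tuple[MemoryObject, int]:
        """Translate a virtual address into (memory object, offset in object)."""
        cur = self.head
        while cur is not None and cur.start <= vaddr:
            if vaddr < cur.end:
                return cur.obj, cur.obj_offset + (vaddr - cur.start)
            cur = cur.next
        raise LookupError(f"no map entry covers address {vaddr:#x}")
```

Suppose the map holds entries for [0x1000, 0x5000) and [0x8000, 0x9000). `lookup(0x8010)` walks past the first entry and returns the second entry's object at `obj_offset + 0x10`. An address in the gap between entries raises `LookupError`.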
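Copy-on-write with shadow objects can be modeled in a few lines. Each object holds only the pages it owns, plus a pointer to the object it shadows. A lookup walks down the chain until the page is found. A task's first write after sharing interposes an empty shadow object, and the faulting page is copied into it. This is a simplified Python sketch under stated assumptions: `VMObject`, `CowMapping`, and `fork` are hypothetical names, every mapping is assumed to start out copy-on-write, and garbage collection of chains is left out.

```python
from typing import Dict, Optional


# Hypothetical sketch: VMObject and CowMapping are illustrative names,
# not Mach's kernel structures. Garbage collection of chains is omitted.
class VMObject:
    def __init__(self, pages: Optional[Dict[int, bytearray]] = None,
                 shadow: Optional["VMObject"] = None) -> None:
        self.pages: Dict[int, bytearray] = dict(pages or {})  # page no. -> data
        self.shadow = shadow  # the object this one shadows, or None

    def find_page(self, pageno: int) -> bytearray:
        """Walk down the shadow chain until some object holds the page."""
        obj: Optional[VMObject] = self
        while obj is not None:
            if pageno in obj.pages:
                return obj.pages[pageno]
            obj = obj.shadow
        raise KeyError(pageno)


class CowMapping:
    """One task's copy-on-write view of a memory object."""

    def __init__(self, obj: VMObject) -> None:
        self.obj = obj
        self.needs_copy = True  # assumption: every mapping starts out shared

    def fork(self) -> "CowMapping":
        """Share the current object copy-on-write with a new task."""
        self.needs_copy = True
        return CowMapping(self.obj)

    def read(self, pageno: int) -> bytearray:
        return self.obj.find_page(pageno)

    def write(self, pageno: int, offset: int, value: int) -> None:
        if self.needs_copy:
            # First write since sharing: interpose an empty shadow object
            # that points at the shared object; only this task sees it.
            self.obj = VMObject(shadow=self.obj)
            self.needs_copy = False
        if pageno not in self.obj.pages:
            # Copy-on-write fault: copy the page from further down the chain.
            self.obj.pages[pageno] = bytearray(self.obj.find_page(pageno))
        self.obj.pages[pageno][offset] = value
```

Forking a mapping that already has a shadow object, then writing again, stacks a second shadow object on the first. The result is the chain of shadow objects described in the slide, and every unmodified page is still found in the original object at the bottom.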
Uniprocessor Issues
• Mach on the VAX
  – In theory, a process can be allocated a 2 GB address space
  – Problem: this is not practical, because the linear page table would need a large amount of space (8 MB)
• UNIX
  – Page tables are kept in physical memory
  – Addresses are limited to 8, 16, or 64 MB per process
• VAX VMS
  – Page tables are pageable and live in the kernel’s virtual address space
• Mach on the VAX
  – Page tables are kept in physical memory
  – Only the parts needed to map virtual to real addresses for the pages currently in use are constructed
• IBM RT PC
  – Uses a single inverted page table (not a per-task table)
    • Describes which virtual address is mapped to each physical page
  – Uses a hashing function for virtual address translation (allows a full 4 GB address space)
• Mach on the IBM RT PC
  – Benefits from reduced memory requirements
  – Simplified page table requirements
  – Only one mapping is allowed for each physical page (can cause page faults when sharing)
• SUN 3
  – Segments and page tables are used for the address maps (up to 256 MB each)
  – Helps with implementing sparse addressing
  – Only 8 contexts can exist at one time
• Mach on the SUN 3
  – The SUN 3 had an issue with display memory being addressable as “high” physical memory
    • Mach handled the issue with machine-dependent code
• Encore Multimax and Sequent Balance
  – Both machines used the National 32082 MMU, which had problems:
    • Only 16 MB of VM can be addressed per page table
    • Only 32 MB of physical memory can be addressed
    • A read-modify-write fault is reported as a read fault
      – Mach depended on faults being reported correctly
  – This was fixed in the next MMU, the National 32382

Multiprocessor Issues
• None of the multiprocessors running Mach supported TLB consistency
• To guarantee consistency, the kernel has to know which processors have the old mapping in their TLBs and make them flush it
• Unfortunately, none of the multiprocessors running Mach allows a TLB to be referenced or modified remotely
• Three solutions:
  1. Interrupt all CPUs using a shared portion of an address map and flush their buffers
     – Used when a change is critical
  2. Hold off the map change until all CPUs have flushed, using a timer interrupt
     – Used when mappings need to be removed from the hardware address maps
  3. Allow temporary inconsistency
     – Acceptable because the operation semantics do not require the change to take effect simultaneously

Integrating Loosely-Coupled and Tightly-Coupled Systems
• Difficulties in building a universal VM model:
  – Different address translation hardware
  – Different shared memory access between multiple CPUs:
    • Fully shared, uniform access time (Encore Multimax, Sequent Balance)
    • Shared, non-uniform access time (BBN Butterfly, IBM RP3)
    • Non-shared, message-based (Intel Hypercube)
• Mach has been ported only to uniform shared memory (tightly coupled) multiprocessor systems
• Mach does contain mechanisms for loosely coupled multiprocessor systems
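The IBM RT PC's hashed inverted page table can be sketched as one entry per physical frame. Each entry is located by hashing (address-space id, virtual page) into a bucket whose frames are chained together. Because the table has one slot per frame, its size tracks physical memory rather than the 4 GB virtual space. The same layout also explains the one-mapping-per-physical-page limit. This Python model rests on assumptions: the integer address-space id stands in for the RT PC's actual segment identifiers, and the hash function and bucket count are arbitrary rather than the hardware's.

```python
from typing import List, Optional, Tuple


# Hypothetical sketch of a hashed inverted page table: one entry per
# physical frame, found by hashing (address-space id, virtual page).
class InvertedPageTable:
    def __init__(self, nframes: int, nbuckets: int) -> None:
        self.nbuckets = nbuckets
        # bucket -> first frame on that bucket's chain
        self.anchor: List[Optional[int]] = [None] * nbuckets
        # frame -> (space, vpage) currently mapped there, or None
        self.entry: List[Optional[Tuple[int, int]]] = [None] * nframes
        # frame -> next frame on the same chain
        self.next: List[Optional[int]] = [None] * nframes

    def _bucket(self, space: int, vpage: int) -> int:
        return hash((space, vpage)) % self.nbuckets

    def translate(self, space: int, vpage: int) -> Optional[int]:
        """Return the physical frame, or None (the kernel takes a page fault)."""
        frame = self.anchor[self._bucket(space, vpage)]
        while frame is not None:
            if self.entry[frame] == (space, vpage):
                return frame
            frame = self.next[frame]
        return None

    def unmap_frame(self, frame: int) -> None:
        entry = self.entry[frame]
        if entry is None:
            return
        b = self._bucket(*entry)
        prev, cur = None, self.anchor[b]
        while cur != frame:  # a mapped frame is always on its bucket's chain
            prev, cur = cur, self.next[cur]
        if prev is None:
            self.anchor[b] = self.next[frame]
        else:
            self.next[prev] = self.next[frame]
        self.entry[frame] = None
        self.next[frame] = None

    def map(self, space: int, vpage: int, frame: int) -> None:
        old = self.translate(space, vpage)
        if old is not None:
            self.unmap_frame(old)
        # Only one virtual mapping per physical frame: evict any previous one.
        self.unmap_frame(frame)
        b = self._bucket(space, vpage)
        self.entry[frame] = (space, vpage)
        self.next[frame] = self.anchor[b]
        self.anchor[b] = frame
```

Consider what happens when a frame is mapped for a second address space. The first space's translation is silently removed, so that task's next access to the page faults. This is the sharing cost noted for Mach on the IBM RT PC.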
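The first two TLB-consistency solutions can be simulated to show how they differ. Solution 1 flushes every CPU that is using the pmap right away. Solution 2 removes the mapping at once but keeps the physical frame out of reuse until each CPU has flushed on a timer interrupt; until then, a CPU may still hold a stale TLB entry. Solution 3 (tolerating temporary inconsistency) needs no mechanism and is omitted. This is a toy Python model under loudly stated assumptions. `ShootdownKernel`, `remove_critical`, `remove_deferred`, and `timer_interrupt` are hypothetical names. An inter-processor interrupt is modeled as a direct function call. Each TLB is keyed by virtual page only, which assumes each CPU runs a single pmap.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set


# Hypothetical simulation: TLBs are keyed by virtual page only, assuming
# each CPU runs a single pmap (a real TLB would also tag the address space).
@dataclass
class CPU:
    cpu_id: int
    tlb: Dict[int, int] = field(default_factory=dict)  # vpage -> frame

    def flush_tlb(self) -> None:
        self.tlb.clear()


@dataclass
class Pmap:
    table: Dict[int, int] = field(default_factory=dict)  # vpage -> frame
    active_on: Set[int] = field(default_factory=set)  # ids of CPUs using it


class ShootdownKernel:
    def __init__(self, ncpus: int) -> None:
        self.cpus: List[CPU] = [CPU(i) for i in range(ncpus)]
        self.awaiting_tick: Dict[int, Set[int]] = {}  # frame -> CPUs yet to flush

    def translate(self, cpu: CPU, pmap: Pmap, vpage: int) -> Optional[int]:
        """Use the CPU's TLB, refilling it from the pmap on a miss."""
        pmap.active_on.add(cpu.cpu_id)
        if vpage not in cpu.tlb and vpage in pmap.table:
            cpu.tlb[vpage] = pmap.table[vpage]
        return cpu.tlb.get(vpage)  # None means a page fault

    def remove_critical(self, pmap: Pmap, vpage: int) -> None:
        """Solution 1: interrupt every CPU using the pmap and flush its TLB."""
        pmap.table.pop(vpage, None)
        for cpu in self.cpus:
            if cpu.cpu_id in pmap.active_on:
                cpu.flush_tlb()  # stands in for an inter-processor interrupt

    def remove_deferred(self, pmap: Pmap, vpage: int) -> int:
        """Solution 2: drop the mapping now, but hold the frame until every
        CPU has flushed on its next timer interrupt."""
        frame = pmap.table.pop(vpage)
        self.awaiting_tick[frame] = {cpu.cpu_id for cpu in self.cpus}
        return frame

    def timer_interrupt(self, cpu: CPU) -> None:
        cpu.flush_tlb()
        for waiting in self.awaiting_tick.values():
            waiting.discard(cpu.cpu_id)

    def frame_reusable(self, frame: int) -> bool:
        return not self.awaiting_tick.get(frame)
```

The trade-off follows the slide. Solution 1 pays for an interrupt on every affected CPU but takes effect immediately. Solution 2 avoids the interrupts at the cost of delaying reuse of the removed frame.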
Measuring VM Performance
• Benchmarks: program compilation and compiling the Mach kernel
• The measurements favor Mach's performance

Relations to Previous Work
• Mach's VM functionality derives from earlier operating systems:
  – Accent and/or Multics
    • Creation of virtual space segments corresponding to files and other permanent data
    • Efficient, large VM transfers between protected address spaces
  – Apollo's Aegis, IBM's System/38, CMU's Hydra
    • Memory-mapped objects
  – Sequent's Dynix, Encore's Umax
    • Shared VM systems for multiprocessors
• Mach differs in that its sophisticated VM features are not tied to a specific hardware base
• Mach also differs in its ability to work in a distributed environment

Conclusion
• The growth of portable software and the use of more sophisticated VM mechanisms led to a need for a less intimate relationship between memory architecture and software
• Mach shows the possibility of:
  – Portable VM management with few dependencies on system hardware
  – VM management without a negative performance impact
• Mach runs on a variety of machine architectures