
Text Parsing in Python

- Gayatri Nittala

- Madhubala Vasireddy

Text Parsing

► The three W’s!

► Efficiency and Perfection

What is Text Parsing?

► A common programming task

► Extracting or splitting a sequence of characters

Why Text Parsing?

► Simple file parsing

A tab-separated file

► Data extraction

Extract specific information from a log file

► Find and replace

► Parsers – syntactic analysis

► NLP

Extract information from a corpus

Part-of-speech (POS) tagging
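A minimal sketch of the first two uses listed above, with made-up sample data held in strings instead of real files: csv.reader splits a tab-separated file into fields, and a regular expression pulls the messages of ERROR entries out of log lines. The log format (date, time, level, message) is an assumption chosen for illustration.

```python
import csv
import io
import re

# Made-up tab-separated data; io.StringIO stands in for an open file
tsv_data = "id\tfruit\tcolor\n1\tapple\tred\n2\tbanana\tyellow\n"
rows = list(csv.reader(io.StringIO(tsv_data), delimiter="\t"))
header, records = rows[0], rows[1:]
print(header)   # ['id', 'fruit', 'color']
print(records)  # [['1', 'apple', 'red'], ['2', 'banana', 'yellow']]

# Made-up log lines in an assumed "date time LEVEL message" format
log = """2024-01-01 10:00:00 INFO started
2024-01-01 10:00:05 ERROR disk full
2024-01-01 10:00:09 ERROR timeout
"""
# re.MULTILINE makes ^ and $ match at every line boundary
errors = re.findall(r"^\S+ \S+ ERROR (.*)$", log, flags=re.MULTILINE)
print(errors)   # ['disk full', 'timeout']
```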

Text Parsing Methods

► String Functions

► Regular Expressions

► Parsers

String Functions

► The string module and the built-in str methods in Python

Faster, easier to understand and maintain than regular expressions

► If you can do it with string functions, DO IT!

► Built-in methods for:

Find-Replace

Split-Join

Startswith and Endswith

Is methods
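A short sketch of the split-join pair on made-up strings, including details that often matter when parsing: how split() treats empty fields and runs of whitespace, and how the optional maxsplit argument limits the number of splits.

```python
line = "alice\t30\tengineer"
fields = line.split("\t")               # split on an explicit separator
print(fields)                           # ['alice', '30', 'engineer']
print(" | ".join(fields))               # alice | 30 | engineer

print("a,b,,c".split(","))              # ['a', 'b', '', 'c']: empty fields are kept
print("  many   spaces  ".split())      # ['many', 'spaces']: no argument splits on runs of whitespace
print("key=value=more".split("=", 1))   # ['key', 'value=more']: at most one split
```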

Find and Replace

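► find, index, rindex, replace

► Example: replace a string in all files matching a glob pattern

import fileinput, glob, sys
path, stext, rtext = "*.txt", "old", "new"   # example values: file pattern, search text, replacement
files = glob.glob(path)
# inplace=True redirects stdout into the file currently being read
for line in fileinput.input(files, inplace=True):
    # find() returns -1 when stext is absent and 0 when it starts the line
    if line.find(stext) != -1:
        line = line.replace(stext, rtext)
    sys.stdout.write(line)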
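The four methods differ mainly in what they return when the substring is missing and in which direction they search. A small sketch on a made-up string:

```python
s = "parse, parse, parse"

print(s.find("parse"))    # 0: first occurrence; 0 is a valid position, not "not found"
print(s.find("lex"))      # -1: find() signals "not found" with -1
print(s.rindex("parse"))  # 14: last occurrence, searching from the right
try:
    s.index("lex")        # index() behaves like find() but raises instead of returning -1
except ValueError as exc:
    print("ValueError:", exc)
print(s.replace("parse", "lex", 1))  # lex, parse, parse: the optional count limits replacements
```

For a plain yes/no test, the membership operator (`"parse" in s`) is usually the most readable choice.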

startswith and endswith

► Extract quoted words from the given text

myString = '"123"'
if myString.startswith('"') and myString.endswith('"'):
    print("string with double quotes:", myString[1:-1])

► Find whether sentences are interrogative or exclamative

► What an amazing game that was!

► Do you like this?

endings = ('!', '?')
sentence = "Do you like this?"
sentence.endswith(endings)   # True: endswith() also accepts a tuple of suffixes
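The endswith() check can be wrapped in a small classifier for the two example sentences above. A minimal sketch; the function name sentence_type and the 'other' label are hypothetical choices for illustration.

```python
def sentence_type(sentence):
    """Classify a sentence by its final punctuation mark (hypothetical helper)."""
    s = sentence.rstrip()          # ignore trailing whitespace before checking
    if s.endswith('?'):
        return 'interrogative'
    if s.endswith('!'):
        return 'exclamative'
    return 'other'

for s in ["What an amazing game that was!", "Do you like this?", "It rained."]:
    print(s, '->', sentence_type(s))
```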

isMethods

► To check for letters, digits, character case, etc.

m = 'xxxasdf '
m.isalpha()   # False: the trailing space is not a letter
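A quick sketch comparing several is* methods on made-up samples, which makes the trailing-space case above easy to spot next to its space-free twin.

```python
samples = ["xxxasdf", "xxxasdf ", "12345", "ABC", "abc123", "   "]
for s in samples:
    # isalpha/isdigit/isalnum/isspace need every character to qualify;
    # isupper only looks at cased characters. All return False for "".
    print(f"{s!r:12} alpha={s.isalpha()} digit={s.isdigit()} "
          f"upper={s.isupper()} alnum={s.isalnum()} space={s.isspace()}")
```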

Regular Expressions

► A concise way to describe complex patterns

► Amazingly powerful

► A wide variety of operations

► When you go beyond the simple cases, think about regular expressions!

Real world problems

► Match IP addresses, email addresses, URLs

► Match sets of parentheses (non-nested only: Python's re cannot balance arbitrary nesting)

► Substitute words

► Tokenize

► Validate

► Count

► Delete duplicates

► Natural language processing
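One of the listed tasks, deleting duplicates, as a minimal sketch: a backreference (`\1`) finds doubled words in a made-up sentence, and the same pattern collapses each pair into one word.

```python
import re

text = "This is is a test test of the the parser."
# \1 must repeat exactly what group 1 matched; \b keeps whole words only
doubled = re.compile(r'\b(\w+)\s+\1\b', re.IGNORECASE)
print(doubled.findall(text))      # ['is', 'test', 'the']
print(doubled.sub(r'\1', text))   # This is a test of the parser.
```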

RE in Python

► Unleash the power: the built-in re module

► Functions to compile patterns: compile

► Functions to perform matches: match, search, findall, finditer

► Methods of a match object: group, start, end, span

► Functions to substitute: sub, subn
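A compact tour of these functions on one compiled pattern. The email-like pattern is deliberately simplified for illustration and is not a real email validator.

```python
import re

pattern = re.compile(r'(\w+)@(\w+)\.com')   # compile once, reuse many times
text = 'alice@example.com, bob@test.com'

m = pattern.match(text)                      # tries only at position 0
print(m.group(0), m.group(1), m.group(2))    # alice@example.com alice example
print(m.start(), m.end(), m.span())          # 0 17 (0, 17)
print(pattern.search('mail: bob@test.com').group())  # search() scans: bob@test.com
print(pattern.findall(text))                 # [('alice', 'example'), ('bob', 'test')]
for mo in pattern.finditer(text):            # finditer() yields match objects
    print(mo.span())                         # (0, 17) then (19, 31)
print(pattern.sub(r'\1 at \2', text))        # alice at example, bob at test
print(pattern.subn('<hidden>', text))        # ('<hidden>, <hidden>', 2): result plus count
```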

Metacharacters

Compiling patterns

► re.compile()

► Patterns for an IP address (only the last one rejects octets above 255)

^[0-9]+\.[0-9]+\.[0-9]+\.[0-9]+$

^\d+\.\d+\.\d+\.\d+$

^\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3}$

^([01]?\d\d?|2[0-4]\d|25[0-5])\.
([01]?\d\d?|2[0-4]\d|25[0-5])\.
([01]?\d\d?|2[0-4]\d|25[0-5])\.
([01]?\d\d?|2[0-4]\d|25[0-5])$
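A sketch that compiles the loose `\d{1,3}` pattern and the strict octet pattern and compares them on a few made-up candidates. The strict pattern is built by joining one octet sub-pattern four times, which yields the same regex as the four lines above.

```python
import re

# One octet: 0-199 (optional leading 0/1), 200-249, or 250-255
OCTET = r'([01]?\d\d?|2[0-4]\d|25[0-5])'
strict_ip = re.compile(r'^' + r'\.'.join([OCTET] * 4) + r'$')
loose_ip = re.compile(r'^\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3}$')

for candidate in ['192.168.0.1', '255.255.255.255', '256.1.1.1', '1.2.3']:
    print(candidate, bool(loose_ip.match(candidate)), bool(strict_ip.match(candidate)))
```

Only '256.1.1.1' separates the two patterns. Note that `$` also matches just before a trailing newline; `re.fullmatch()` avoids that if it matters.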

Compiling patterns

► Patterns for matching parentheses

\(.*\)

\([^)]*\)

\([^()]*\)
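The three patterns behave quite differently on nested input. A small sketch on a made-up string:

```python
import re

text = "f(a, (b)) and g(c)"
greedy = re.findall(r'\(.*\)', text)         # .* runs to the LAST ')'
to_first_close = re.findall(r'\([^)]*\)', text)  # stops at the first ')', may contain '('
innermost = re.findall(r'\([^()]*\)', text)  # no parentheses inside: innermost groups only
print(greedy)          # ['(a, (b)) and g(c)']
print(to_first_close)  # ['(a, (b)', '(c)']
print(innermost)       # ['(b)', '(c)']
```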

Substitute

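Perform several string substitutions on a given string in a single pass

import re

def make_xlat(*args, **kwargs):
    # Translation table, e.g. {'cat': 'dog', 'dog': 'cat'}
    adict = dict(*args, **kwargs)
    # One alternation of all (escaped) keys, so every key is replaced in one pass
    rx = re.compile('|'.join(map(re.escape, adict)))
    def one_xlate(match):
        # Look up the replacement for whichever key matched
        return adict[match.group(0)]
    def xlate(text):
        return rx.sub(one_xlate, text)
    return xlate

translate = make_xlat({'cat': 'dog', 'dog': 'cat'})
translate('the cat chased the dog')   # 'the dog chased the cat'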

Count

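► Split and count words in the given text

import re
p = re.compile(r'\W+')
words = p.split('This is a test for split().')
# split() leaves an empty string after the trailing "().", so drop empties
len([w for w in words if w])   # 6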
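Counting can go one step further than a total. A minimal sketch on a made-up sentence: findall() with `\w+` collects the words directly (no empty strings to filter), and collections.Counter tallies how often each one occurs.

```python
import re
from collections import Counter

text = "The cat and the hat and the bat"
words = re.findall(r'\w+', text.lower())   # lower-case so "The" and "the" count together
print(len(words))                          # 8
print(Counter(words).most_common(2))       # [('the', 3), ('and', 2)]
```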

Tokenize

► Parsing and natural language processing

import re
s = 'tokenize these words'
words = re.compile(r'\b\w+\b|\$')   # whole words, or a literal dollar sign
words.findall(s)
['tokenize', 'these', 'words']
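The same idea scales to a small lexer: one master regex of named groups, where `Match.lastgroup` reports which token kind matched. A minimal sketch; the token names and the arithmetic token set are hypothetical choices for illustration.

```python
import re

# Hypothetical token set for simple arithmetic; earlier entries win on ties
TOKEN_SPEC = [
    ('NUMBER', r'\d+(?:\.\d+)?'),
    ('NAME',   r'[A-Za-z_]\w*'),
    ('OP',     r'[+\-*/=()]'),
    ('SKIP',   r'\s+'),
    ('ERROR',  r'.'),
]
master = re.compile('|'.join(f'(?P<{name}>{regex})' for name, regex in TOKEN_SPEC))

def tokenize(text):
    """Yield (kind, value) pairs; lastgroup names the alternative that matched."""
    for mo in master.finditer(text):
        kind = mo.lastgroup
        if kind == 'SKIP':
            continue
        if kind == 'ERROR':
            raise SyntaxError(f'unexpected character {mo.group()!r} at {mo.start()}')
        yield kind, mo.group()

print(list(tokenize('rate = 3.5 * (x + 12)')))
```

This is the "generate tokens" half of a parser; Lex automates exactly this step.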

Common Pitfalls

► Using regular expressions for operations on fixed strings, a single character class, or text with no case-sensitivity issues: string methods are simpler

► re.sub() vs. str.replace()

► re.sub() vs. str.translate()

► match vs. search

► greedy vs. non-greedy
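A sketch of the four pitfalls on a made-up string with simple tags:

```python
import re

text = "say <b>hi</b> and <i>bye</i>"

# match() only tries position 0; search() scans the whole string
print(re.match(r"<b>", text))            # None
print(re.search(r"<b>", text).start())   # 4

# Greedy .* runs to the last '>', while non-greedy .*? stops at the first one
print(re.findall(r"<.*>", text))    # ['<b>hi</b> and <i>bye</i>']
print(re.findall(r"<.*?>", text))   # ['<b>', '</b>', '<i>', '</i>']

# A fixed string needs no regex: str.replace() is simpler and usually faster
print(text.replace("hi", "hello"))  # say <b>hello</b> and <i>bye</i>

# Single-character substitutions fit str.translate() better than re.sub()
table = str.maketrans("-_", "  ")
print("a-b_c".translate(table))     # a b c
```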

PARSERS

► Flat and nested texts

► Nested tags, programming-language constructs

► Better to do less than to do more!

Parsing Non-Flat Texts

► Grammar

► States

► Generate tokens and act on them

► Lexer: generates a stream of tokens

► Parser: generates a parse tree from the tokens

► Lex and Yacc

Grammar Vs RE

► Floating point

#---- EBNF-style description of Python ---#
floatnumber   ::= pointfloat | exponentfloat
pointfloat    ::= [intpart] fraction | intpart "."
exponentfloat ::= (intpart | pointfloat) exponent
intpart       ::= digit+
fraction      ::= "." digit+
exponent      ::= ("e" | "E") ["+" | "-"] digit+
digit         ::= "0"..."9"

Grammar Vs RE

pat = r'''(?x)
  (                       # exponentfloat
    (                     # intpart or pointfloat
      (                   # pointfloat
        (\d+)?[.]\d+      # optional intpart with fraction
        |
        \d+[.]            # intpart with period
      )                   # end pointfloat
      |
      \d+                 # intpart
    )                     # end intpart or pointfloat
    [eE][+-]?\d+          # exponent
  )                       # end exponentfloat
  |
  (                       # pointfloat
    (\d+)?[.]\d+          # optional intpart with fraction
    |
    \d+[.]                # intpart with period
  )                       # end pointfloat
'''
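Another way to bridge grammar and regex is to build the regex rule by rule, one Python string per EBNF production. A minimal sketch of that alternative (not the verbose pattern above); it uses only non-capturing groups, so findall() returns whole matches.

```python
import re

# Each name mirrors one rule of the floating-point grammar
digit = r'[0-9]'
intpart = digit + '+'
fraction = r'\.' + digit + '+'
exponent = r'[eE][+-]?' + digit + '+'
pointfloat = rf'(?:(?:{intpart})?{fraction}|{intpart}\.)'
exponentfloat = rf'(?:(?:{pointfloat}|{intpart}){exponent})'
# exponentfloat is tried first so '.5E-3' is not cut short at '.5'
floatnumber = re.compile(rf'{exponentfloat}|{pointfloat}')

print(floatnumber.findall('x = 3.14, y = 1e10, z = 2., w = .5E-3, n = 42'))
# ['3.14', '1e10', '2.', '.5E-3']  (the plain integer 42 is not a floatnumber)
```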

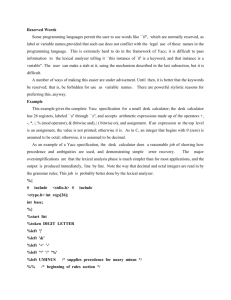

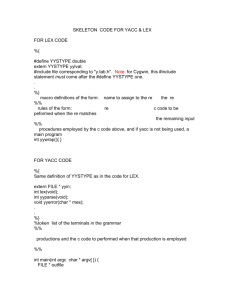

PLY - The Python Lex and Yacc

► A higher-level and cleaner grammar language

► LALR(1) parsing

► Extensive input validation, error reporting, and diagnostics

► Two modules: lex.py and yacc.py

Using PLY - Lex and Yacc

► Lex:

► Import the lex module

► Define a list or tuple variable 'tokens' naming the token types the lexer is allowed to produce

► Define each token by assigning to a specially named variable ('t_tokenName')

► Build the lexer

mylexer = lex.lex()
mylexer.input(mytext)   # when parsing, yacc feeds the input to the lexer for you

Lex

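t_NAME = r'[a-zA-Z_][a-zA-Z0-9_]*'   # simple token: a regex string

def t_NUMBER(t):
    r'\d+'
    # For function tokens the docstring is the regex; the body can convert the value
    try:
        t.value = int(t.value)
    except ValueError:
        print("Integer value too large", t.value)
        t.value = 0
    return t

t_ignore = " \t"   # characters skipped between tokens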

Yacc

► Import the yacc module

► Get the token list ('tokens') from the lexer

► Define a collection of grammar rules

► Build the parser

parser = yacc.yacc()
parser.parse('x=3')

Yacc

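► Specially named functions with a 'p_' prefix; each docstring holds a grammar rule

names = {}   # symbol table for assigned variables

def p_statement_assign(p):
    'statement : NAME "=" expression'
    # p[1], p[2], p[3] hold the values of NAME, "=", expression
    names[p[1]] = p[3]

def p_statement_expr(p):
    'statement : expression'
    print(p[1])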
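Putting the lexer and parser slides together, a minimal end-to-end sketch. It assumes the third-party PLY package is installed (`pip install ply`). The `literals` list, the expression/term rules for `+`, and the convention that undefined names read as 0 are hypothetical additions so the `p_statement_*` rules above have something to reduce.

```python
import ply.lex as lex
import ply.yacc as yacc

# ---- Lexer ----
tokens = ('NAME', 'NUMBER')
literals = ['=', '+']            # single-character tokens written as "=" and "+" in rules

t_NAME = r'[a-zA-Z_][a-zA-Z0-9_]*'
t_ignore = ' \t'

def t_NUMBER(t):
    r'\d+'
    t.value = int(t.value)
    return t

def t_error(t):
    print("Illegal character", repr(t.value[0]))
    t.lexer.skip(1)

# ---- Parser ----
names = {}                       # symbol table filled by assignments

def p_statement_assign(p):
    'statement : NAME "=" expression'
    names[p[1]] = p[3]

def p_statement_expr(p):
    'statement : expression'
    print(p[1])

def p_expression_plus(p):        # hypothetical extra rule: left-recursive addition
    'expression : expression "+" term'
    p[0] = p[1] + p[3]

def p_expression_term(p):
    'expression : term'
    p[0] = p[1]

def p_term_number(p):
    'term : NUMBER'
    p[0] = p[1]

def p_term_name(p):
    'term : NAME'
    p[0] = names.get(p[1], 0)    # undefined names read as 0 (an arbitrary choice here)

def p_error(p):
    print("Syntax error at", p)

lexer = lex.lex()
parser = yacc.yacc(debug=False, write_tables=False)   # skip parser.out / parsetab.py
parser.parse('x = 3', lexer=lexer)
parser.parse('x + 4', lexer=lexer)   # prints 7
```

The first `p_` rule defines the start symbol (`statement`), and PLY builds the LALR(1) tables from the docstrings when `yacc.yacc()` is called.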

Summary

► String functions

A rule of thumb: if string functions can do it, use them.

► Regular expressions

Complex patterns, something beyond simple!

► Lex and Yacc

Parse non-flat texts that follow grammar rules


Thank You

Q&A