Data Quality Considerations

M&E Capacity Strengthening Workshop, Maputo

19 and 20 September 2011

Arif Rashid, TOPS

Data Quality

Project Implementation and the Data Management System

Project activities are implemented in the field. These activities are designed to produce quantifiable results. An information system represents these activities by collecting the results that were produced and mapping them to a recording system.

Data quality: how well the data management system (DMS) represents the facts, i.e. how closely the data in the DMS match the true picture of the field.

Slide # 1

Why Data Quality?

• The program is "evidence-based"

• Data quality → data use

• Accountability

Slide # 2

Conceptual Framework of Data Quality

Quality data, defined by the dimensions of data quality:

• Accuracy, Completeness, Reliability, Timeliness, Confidentiality, Precision, Integrity

Data management and reporting system, operating at three levels:

• M&E Unit in the Country Office

• Intermediate aggregation levels (e.g. districts/regions)

• Service delivery points

Functional components of data management systems needed to ensure data quality:

• M&E structures, roles and responsibilities

• Indicator definitions and reporting guidelines

• Data collection and reporting forms/tools

• Data management processes

• Data quality mechanisms

• M&E capacity and system feedback

Slide # 3

Dimensions of data quality

• Accuracy/Validity

– Accurate data are correct: they keep error (e.g., recording or interviewer bias, transcription error, sampling error) to the point of being negligible.

• Reliability

– Data generated by a project’s information system are based on protocols and procedures and are objectively verifiable. The data are reliable because they are measured and collected consistently.

Slide # 4
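Accuracy and reliability both depend on applying the same checks to every record, whoever collects or enters it. A minimal sketch, assuming a hypothetical training record with sex, age and training-date fields and made-up protocol rules (allowed sex codes, an age range, no future dates), shows how such checks can be written once and applied consistently:

```python
from dataclasses import dataclass
from datetime import date

@dataclass
class TrainingRecord:
    participant_id: str
    sex: str            # hypothetical coding: "F" or "M"
    age: int
    training_date: date

# Hypothetical protocol rules; a real project would take these from its
# indicator definitions and data-collection guidelines.
ALLOWED_SEX = {"F", "M"}
AGE_RANGE = (15, 99)

def validate(record: TrainingRecord, today: date) -> list:
    """Return the protocol rules a record breaks (an empty list if none)."""
    problems = []
    if record.sex not in ALLOWED_SEX:
        problems.append(f"{record.participant_id}: invalid sex code {record.sex!r}")
    low, high = AGE_RANGE
    if not low <= record.age <= high:
        problems.append(f"{record.participant_id}: age {record.age} outside {low}-{high}")
    if record.training_date > today:
        problems.append(f"{record.participant_id}: training date is in the future")
    return problems

records = [
    TrainingRecord("P001", "F", 34, date(2011, 8, 15)),
    TrainingRecord("P002", "X", 27, date(2011, 8, 15)),
    TrainingRecord("P003", "M", 150, date(2011, 10, 1)),
]
today = date(2011, 9, 19)

# The same rules are applied to every record, whoever entered it.
for record in records:
    for problem in validate(record, today):
        print(problem)
```

With these made-up records, P001 passes while P002 and P003 are flagged. Checks like these catch some recording and transcription errors at entry, but they cannot show that a plausible value is correct; that still requires comparison with source documents.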

Dimensions of data quality

• Precision

– The data have sufficient detail. For example, if an indicator requires the number of individuals trained in integrated pest management, disaggregated by sex, an information system lacks precision if it is not designed to record the sex of each trainee.

• Completeness

– Completeness means that the information system from which the results are derived is appropriately inclusive: it represents the complete list of eligible persons or units and not just a fraction of the list.

Slide # 5
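Precision and completeness can both be checked mechanically. The sketch below assumes a hypothetical training register and a hypothetical list of service delivery points expected to report: precision is tested by whether the register can be disaggregated by sex (records without sex are counted separately rather than dropped), and completeness by comparing the units present in the system with the full list of expected units.

```python
from collections import Counter

# Hypothetical training register: each row is one trainee.
register = [
    {"id": "P001", "sex": "F"},
    {"id": "P002", "sex": "M"},
    {"id": "P003", "sex": "F"},
    {"id": "P004", "sex": None},  # sex not recorded on the form
]

def count_by_sex(rows):
    """Disaggregate trainees by sex; rows without sex count as 'unrecorded'."""
    return Counter(row["sex"] or "unrecorded" for row in rows)

# Hypothetical service delivery points expected to report,
# and the ones whose reports actually reached the system.
expected_sites = {"Site A", "Site B", "Site C", "Site D"}
reported_sites = {"Site A", "Site C", "Site D"}

def completeness(expected, received):
    """Return the share of expected units present and the sorted missing units."""
    present = expected & received
    return len(present) / len(expected), sorted(expected - received)

by_sex = count_by_sex(register)
share, missing = completeness(expected_sites, reported_sites)
print(by_sex)                                        # Counter({'F': 2, 'M': 1, 'unrecorded': 1})
print(f"{share:.0%} complete, missing: {missing}")   # 75% complete, missing: ['Site B']
```

A non-zero "unrecorded" count signals a precision gap in the form or the register; listing the missing units, not just the percentage, tells the M&E team where to follow up.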

Dimensions of data quality

• Timeliness

– Data are timely when they are up to date (current) and when the information is available on time.

• Integrity

– Data have integrity when the system used to generate them is protected from deliberate bias or manipulation for political or personal reasons.

Slide # 6
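Part of timeliness can be monitored by comparing report receipt dates with a deadline, and one technical safeguard for integrity is to record a fingerprint (hash) of each report when it is submitted so that later alterations are detectable. A minimal sketch, assuming a hypothetical 15-day reporting deadline and made-up reports; integrity also depends on procedures and supervision, which no code replaces:

```python
import hashlib
import json
from datetime import date

# Assumed deadline: reports are due 15 days after the reporting period ends.
DAYS_ALLOWED = 15

reports = [
    {"site": "Site A", "period_end": date(2011, 6, 30), "received": date(2011, 7, 10)},
    {"site": "Site B", "period_end": date(2011, 6, 30), "received": date(2011, 7, 28)},
    {"site": "Site C", "period_end": date(2011, 6, 30), "received": None},  # not yet received
]

def is_timely(report, days_allowed=DAYS_ALLOWED):
    """A report is timely if it arrived within days_allowed of the period end."""
    if report["received"] is None:
        return False
    return (report["received"] - report["period_end"]).days <= days_allowed

late_or_missing = [r["site"] for r in reports if not is_timely(r)]
print(late_or_missing)  # ['Site B', 'Site C']

def fingerprint(report_data: dict) -> str:
    """SHA-256 of a canonical JSON encoding (sorted keys) of the report."""
    canonical = json.dumps(report_data, sort_keys=True, default=str)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

submitted = {"site": "Site A", "trained_f": 12, "trained_m": 9}
stored_hash = fingerprint(submitted)    # recorded at submission time

later = dict(submitted, trained_f=20)   # a figure is edited afterwards
print(fingerprint(later) == stored_hash)  # False: the change is detectable
```

The fingerprint only helps if the stored hashes are themselves kept where the people entering data cannot change them.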

Dimensions of data quality

• Confidentiality

– Confidentiality means that respondents are assured that their data will be maintained according to national and/or international data standards. Personal data are not disclosed inappropriately, and data in hard copy and electronic form are treated with appropriate levels of security (e.g. kept in locked cabinets and in password-protected files).

Slide # 7
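When beneficiary-level data must be shared (for example, with an analyst outside the project), one common technique is pseudonymization: personal identifiers are replaced with keyed-hash codes before the file leaves the project. A minimal sketch, assuming a hypothetical secret key, made-up beneficiary records and a 12-character code length:

```python
import hashlib
import hmac

# Hypothetical secret key; in practice it is stored securely and never
# shared with the people who receive the pseudonymized data.
SECRET_KEY = b"replace-with-a-securely-stored-key"

def pseudonymize(identifier: str, key: bytes = SECRET_KEY) -> str:
    """Replace a personal identifier with a keyed hash (HMAC-SHA256).

    The same person always gets the same code, so records can still be
    linked, but someone without the key cannot tie a code back to a name.
    """
    normalized = identifier.strip().lower().encode("utf-8")
    digest = hmac.new(key, normalized, hashlib.sha256)
    return digest.hexdigest()[:12]  # truncated for readability (assumed length)

# Made-up beneficiary records.
beneficiaries = [
    {"name": "Ana Exemplo", "village": "Village 1", "trained": True},
    {"name": "Jose Exemplo", "village": "Village 2", "trained": False},
]

# Drop the name and keep only the pseudonymous code in the shared file.
shared = [
    {"code": pseudonymize(b["name"]), "village": b["village"], "trained": b["trained"]}
    for b in beneficiaries
]
for row in shared:
    print(row)
```

Pseudonymization is not full anonymization: combinations of remaining fields (village, age, sex) can still identify people in small communities, so the physical and password protections described above still apply.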

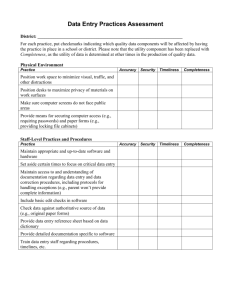

Data Quality Assessments

Slide # 8

Data Quality Assessments

• Two dimensions of assessment:

1. Assessment of data management and reporting systems

2. Follow-up verification of reported data for key indicators (spot checks of actual figures)

Slide # 9
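For the second dimension, a spot check is commonly summarized as the ratio of the figure recounted from source documents to the figure that was reported; DQA tools often call this a verification factor. A minimal sketch with made-up site figures and an assumed tolerance of plus or minus 10% (the threshold is an assumption, not a standard):

```python
def verification_factor(recounted: int, reported: int) -> float:
    """Ratio of the value recounted from source documents to the reported value.

    1.0 means the report matches the source documents; above 1.0 suggests
    under-reporting, below 1.0 suggests over-reporting.
    """
    if reported == 0:
        raise ValueError("reported value is zero; compare the counts directly")
    return recounted / reported

# Hypothetical spot-check results for one indicator: (recounted, reported).
spot_checks = {
    "Site A": (120, 120),
    "Site B": (95, 110),
    "Site C": (60, 50),
}

TOLERANCE = 0.10  # assumed acceptable deviation of +/-10%

for site, (recounted, reported) in spot_checks.items():
    vf = verification_factor(recounted, reported)
    flag = "OK" if abs(vf - 1) <= TOLERANCE else "investigate"
    print(f"{site}: VF = {vf:.2f} ({flag})")
```

Here Site A matches exactly, Site B appears to over-report (0.86) and Site C to under-report (1.20); both deviations would be followed up against the source documents.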

Systems assessment tools

M&E structures, functions and capabilities

1. Are key M&E and data-management staff identified with clearly assigned responsibilities?

2. Have the majority of key M&E and data-management staff received the required training?

Indicator definitions and reporting guidelines

3. Are there operational indicator definitions meeting relevant standards that are systematically followed by all service points?

4. Has the project clearly documented what is reported to whom, and how and when reporting is required?

Data collection and reporting forms/tools

5. Are there standard data-collection and reporting forms that are systematically used?

6. Are data recorded with sufficient precision/detail to measure relevant indicators?

7. Are source documents kept and made available in accordance with a written policy?

Slide # 10
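The checklist questions can be turned into a simple score per functional area to show where the system is weakest. A minimal sketch, assuming a three-point yes/partly/no scale (an assumption here, not part of this checklist) and made-up answers to questions 1 to 7:

```python
from statistics import mean

# Assumed answer scale (hypothetical).
SCALE = {"yes": 3, "partly": 2, "no": 1}

# Made-up answers to questions 1-7, grouped by functional area.
answers = {
    "M&E structures, functions and capabilities": {1: "yes", 2: "partly"},
    "Indicator definitions and reporting guidelines": {3: "yes", 4: "no"},
    "Data collection and reporting forms/tools": {5: "yes", 6: "partly", 7: "no"},
}

def area_scores(answers_by_area):
    """Average score per functional area on the assumed 1-3 scale."""
    return {
        area: mean(SCALE[answer] for answer in questions.values())
        for area, questions in answers_by_area.items()
    }

scores = area_scores(answers)

# List the weakest areas first so follow-up effort goes there.
for area, score in sorted(scores.items(), key=lambda item: item[1]):
    print(f"{score:.2f}  {area}")
```

An average hides which question failed, so in practice the individual answers would be kept alongside the score.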

Systems assessment tools

Data management processes

• Does clear documentation of collection, aggregation and manipulation steps exist?

• Are data quality challenges identified, and are mechanisms in place for addressing them?

• Are there clearly defined and followed procedures to identify and reconcile discrepancies in reports?

• Are there clearly defined and followed procedures to periodically verify source data?

M&E capacity and system feedback

• Do M&E staff have a clear understanding of their roles and of how data collection and analysis fit into overall program quality?

• Do M&E staff have a clear understanding of the PMP, IPTT and M&E Plan?

• Do M&E staff have the required skills in data collection, aggregation, analysis, interpretation and reporting?

• Is there a clearly defined feedback mechanism to improve data and system quality?

Slide # 11
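One concrete procedure for identifying discrepancies in reports is to recompute each aggregated figure from the reports it was built from. A minimal sketch, assuming hypothetical site reports and a district total that was forwarded upward:

```python
# Hypothetical site-level reports and the district total that was forwarded.
site_reports = {
    "Site A": {"trained_f": 12, "trained_m": 9},
    "Site B": {"trained_f": 7, "trained_m": 11},
    "Site C": {"trained_f": 5, "trained_m": 4},
}
district_report = {"trained_f": 24, "trained_m": 26}

def find_discrepancies(sites, aggregate):
    """Compare each aggregated figure with the sum of the site-level figures.

    Returns {indicator: (sum_of_sites, aggregated_value)} for every mismatch.
    """
    mismatches = {}
    for indicator, aggregated in aggregate.items():
        recomputed = sum(report.get(indicator, 0) for report in sites.values())
        if recomputed != aggregated:
            mismatches[indicator] = (recomputed, aggregated)
    return mismatches

print(find_discrepancies(site_reports, district_report))  # {'trained_m': (24, 26)}
```

The check only identifies the mismatch; reconciling it still means tracing the figure back through the documented aggregation steps to the source documents.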

Schematic of follow-up verification

Slide # 12

M&E system design for data quality

• An appropriately designed M&E system is necessary to satisfy both dimensions of the DQA (systems assessment and follow-up verification)

– Ensure that all dimensions of data quality are incorporated into the M&E design

– Ensure that all processes and data management operations are implemented and fully documented (maintain a comprehensive paper trail to facilitate follow-up verification)

Slide # 13

This presentation was made possible by the generous support of the American people through the United States Agency for International Development (USAID). The contents are the responsibility of Save the Children and do not necessarily reflect the views of USAID or the United States Government.