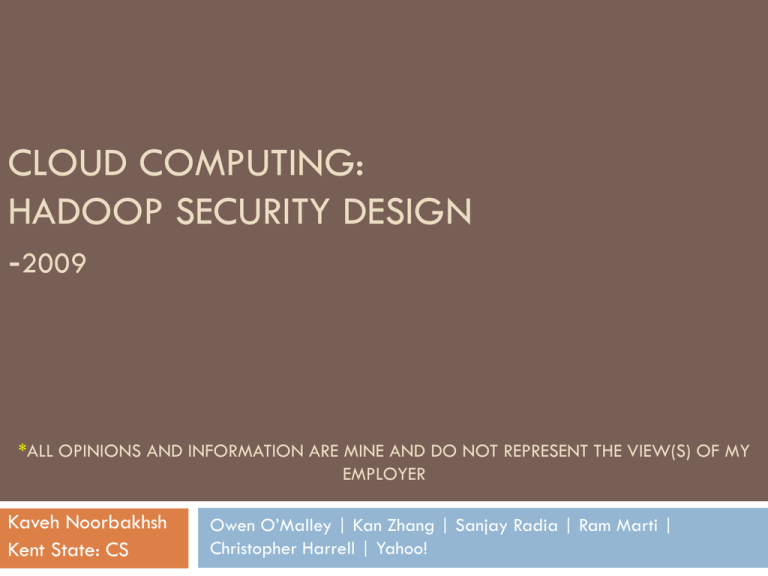

CLOUD COMPUTING: HADOOP SECURITY DESIGN (2009)
*All opinions and information are mine and do not represent the view(s) of my employer.
Kaveh Noorbakhsh, Kent State: CS
Owen O’Malley | Kan Zhang | Sanjay Radia | Ram Marti | Christopher Harrell | Yahoo!

Brief History: Cloud Computing as a Service
• 1961: John McCarthy introduces the concept of cloud computing as a business model
• 1969: ARPANET
• 1997: “Cloud Computing” coined by Ramnath Chellappa
• 1999: Salesforce.com: enterprise applications via a simple web interface
• 2002: Amazon Web Services
• 2004: HDFS & Map/Reduce in Nutch
• 2006: Google Docs; Amazon EC2; Yahoo hires Doug Cutting
• 2008: Eucalyptus, the 1st open-source AWS API for private clouds; OpenNebula
• 2009: MS Azure; Amazon RDS (MySQL supported)
• 2011: Amazon RDS supports Oracle; Office 365
• Also on the timeline: private and hybrid clouds; Hadoop hits web scale

Hadoop – Funny Name, Big Impact

Map/Reduce: An Introduction
Map/Reduce allows computation to scale out over many “cheap” systems rather than one expensive supercomputer.

Divide and Conquer
Figure: The “work” is partitioned into w1, w2 and w3, each processed by a “worker”; the partial results r1, r2 and r3 are combined into the final “result”.

Two Layers
• MapReduce: code runs here
• HDFS: data lives here

Advantages of the Cloud
Database as a Service (DBaaS), Infrastructure as a Service (IaaS), Software as a Service (SaaS), Platform as a Service (PaaS)
• Share hardware and energy costs
• Share employee costs
• Fast spin-up and tear-down
• Expand quickly to meet demand
• Costs ideally proportional to usage
• Scalability

Cloud Services Spending
Figure: Cloud services revenue (billions of dollars), 2009, 2010 and 2014.

Cloud vs Total IT Spending
Figure: Cloud services revenue vs. total IT expenditures (billions of dollars), 2009, 2010 and 2014.

Security Challenges of the Cloud
Where is my data living? Where is my data going?
You may not know exactly where your data is, since it can be distributed among many physical disks. In the cloud, and especially in Map/Reduce, data is constantly moving from node to node, and the nodes may span multiple mini-clouds.

Who has access to my data? There may be other clients using the cloud, as well as administrators and others who maintain it, who could access the data if it is not properly protected.

Hadoop Security Concerns
• Hadoop services do not authenticate users or other services.
  (a) A user can access an HDFS or MapReduce cluster as any other user. This makes it impossible to enforce access control in an uncooperative environment; for example, file permission checking on HDFS can easily be circumvented.
  (b) An attacker can masquerade as a Hadoop service. For example, user code running on a MapReduce cluster can register itself as a new TaskTracker.
• DataNodes do not enforce any access control on accesses to their data blocks. An unauthorized client can therefore read a data block as long as she can supply its block ID, and anyone can write arbitrary data blocks to DataNodes.

Security Requirements for Hadoop
• Users are only allowed to access HDFS files that they have permission to access.
• Users are only allowed to access or modify their own MapReduce jobs.
• User-to-service mutual authentication, to prevent unauthorized NameNodes, DataNodes, JobTrackers, or TaskTrackers.
• Service-to-service mutual authentication, to prevent unauthorized services from joining a cluster’s HDFS or MapReduce service.
• Performance degradation should be no more than 3%.

Use case 1 from the design document, “Applications accessing files on HDFS clusters”: Non-MapReduce applications, including hadoop fs, access files stored on one or more HDFS clusters. The application should only be able to access files and services it is authorized to access. See Figure 1.
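The two mutual-authentication requirements in the Security Requirements list mean that each side must prove its identity to the other before any data moves, so that neither a rogue TaskTracker nor a fake NameNode can slip in. Hadoop's design uses Kerberos for this; the sketch below substitutes a much simpler HMAC challenge-response over a single pre-shared cluster key, purely to illustrate what "mutual" means. Everything in it (`Party`, `prove`, `mutual_authenticate`, `CLUSTER_KEY`, the role labels) is hypothetical and not part of Hadoop.

```python
import hashlib
import hmac
import secrets


def prove(key: bytes, challenge: bytes, role: bytes) -> bytes:
    """Answer a challenge by MACing it together with the responder's role.

    Because the two sides use different roles, an attacker cannot reflect
    one side's challenge back to it and reuse the answer.
    """
    return hmac.new(key, role + b"|" + challenge, hashlib.sha256).digest()


class Party:
    """A hypothetical cluster participant holding a (possibly wrong) key."""

    def __init__(self, name: str, role: bytes, key: bytes) -> None:
        self.name = name
        self.role = role
        self.key = key
        self.challenge = b""

    def new_challenge(self) -> bytes:
        # A fresh random nonce per attempt prevents replay of old answers.
        self.challenge = secrets.token_bytes(16)
        return self.challenge

    def respond(self, challenge: bytes) -> bytes:
        return prove(self.key, challenge, self.role)

    def verify(self, response: bytes, peer_role: bytes) -> bool:
        expected = prove(self.key, self.challenge, peer_role)
        return hmac.compare_digest(expected, response)


def mutual_authenticate(a: Party, b: Party) -> bool:
    """Each side challenges the other; both proofs must check out."""
    a_challenge = a.new_challenge()
    b_challenge = b.new_challenge()
    a_trusts_b = a.verify(b.respond(a_challenge), b.role)
    b_trusts_a = b.verify(a.respond(b_challenge), a.role)
    return a_trusts_b and b_trusts_a


CLUSTER_KEY = secrets.token_bytes(32)  # hypothetical pre-shared cluster secret
namenode = Party("namenode", b"server", CLUSTER_KEY)
datanode = Party("datanode-1", b"client", CLUSTER_KEY)
rogue = Party("rogue-tasktracker", b"client", secrets.token_bytes(32))

print(mutual_authenticate(namenode, datanode))  # legitimate DataNode is accepted
print(mutual_authenticate(namenode, rogue))     # rogue lacks the key and is rejected
```

The rogue node fails not because of anything it says about itself, but because it cannot compute a valid answer without the shared secret; Kerberos achieves the same property with tickets issued by a trusted third party instead of one cluster-wide key.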
Proposed Solution – Use Case 1: Accessing Data
1) User/App requests access to a data block.
2) The NameNode authenticates the user and issues a block token.
3) User/App presents the block token to the DataNode to access the block for READ, WRITE, COPY or REPLACE.

Variations of use case 1 in the design document:
(a) Access HDFS directly using the HDFS protocol.
(b) Access HDFS indirectly through HDFS proxy servers via the HFTP FileSystem or HTTP get.

Figure 1: HDFS High-level Dataflow. Labels: kerb(joe) between the Application and the NameNode; delg(joe) between the MapReduce Task and the NameNode; kerb(hdfs) between the NameNode and the DataNode; block token from the Application and the MapReduce Task to the DataNode.

Use case 2 from the design document, “Applications accessing third-party (non-Hadoop) services”: MapReduce applications and MapReduce tasks access files or operations supported by third-party services. An application should only be able to access services it is authorized to access. Examples of t…

Proposed Solution – Use Case 2: Submitting Jobs
1) A user may obtain a delegation token through Kerberos.
2) The token is given to the user's jobs for subsequent authentication to the NameNode as the user.
3) Jobs can use the delegation token to access data that the user/app has access to.

Figure 2: MapReduce High-level Dataflow. Labels: kerb(joe) between the Application and the JobTracker; kerb(mapreduce) between the JobTracker and the TaskTracker; job token between the TaskTracker and the Task; delg(joe) from the Task to HDFS; other credential to an Other Service; trust to NFS.

Core Principles Analysis
Confidentiality: Users/Apps will only have access to the data blocks they should have, via block tokens. Analysis: Pass.
Integrity: Data is only available at the block level if the block token matches (Pass). However, the design assumes the data is good, because the blocks themselves are not checked (Fail).
Availability: The JobTracker and NameNode are single points of failure for the system.
Tokens persist for a short period of time, so the system is resilient to short outages of the NameNode and JobTracker. Analysis: Fail (single points of failure) / Pass (resilience to short outages).

Conclusion
The token method of authentication for both data and process access makes sense in a highly distributed system like Hadoop. However, because tokens carry so much power and are not re-checked on every use, the design is open to very serious TOCTOU (time-of-check to time-of-use) attacks. Compared to the current model (i.e., no security), this represents a major step forward.
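The block-token flow of Use Case 1 and the TOCTOU concern in the conclusion can be made concrete with a small simulation. This is a hypothetical sketch, not Hadoop's code: the class and method names, the JSON payload, HMAC-SHA256 and the 600-second lifetime are all assumptions. It models the idea that the NameNode checks permissions once and signs a token with a key it shares with the DataNodes, while the DataNode later verifies only the signature, block ID, mode and expiry. Because the DataNode never asks the NameNode again, a permission revoked after the token was issued goes unnoticed until the token expires.

```python
import hashlib
import hmac
import json
import secrets
from dataclasses import dataclass


@dataclass(frozen=True)
class BlockToken:
    payload: bytes  # serialized token identifier: owner, block id, mode, expiry
    mac: bytes      # HMAC-SHA256 of payload under the NameNode/DataNode shared key


class NameNode:
    """Hypothetical sketch: checks permissions once, then signs a block token."""

    def __init__(self, shared_key: bytes, lifetime: float) -> None:
        self.shared_key = shared_key
        self.lifetime = lifetime
        self.readers: dict[int, set[str]] = {}  # block id -> users allowed to read

    def issue_read_token(self, user: str, block_id: int, now: float) -> BlockToken:
        # Time of check: the permission lookup happens only here.
        if user not in self.readers.get(block_id, set()):
            raise PermissionError(f"{user} may not read block {block_id}")
        payload = json.dumps(
            {"owner": user, "block": block_id, "mode": "READ",
             "expires": now + self.lifetime},
            sort_keys=True,
        ).encode()
        mac = hmac.new(self.shared_key, payload, hashlib.sha256).digest()
        return BlockToken(payload, mac)


class DataNode:
    """Hypothetical sketch: validates tokens locally and never asks the NameNode."""

    def __init__(self, shared_key: bytes, blocks: dict[int, bytes]) -> None:
        self.shared_key = shared_key
        self.blocks = blocks

    def read(self, token: BlockToken, block_id: int, now: float) -> bytes:
        # Time of use: only the signature, block id, mode and expiry are checked.
        expected = hmac.new(self.shared_key, token.payload, hashlib.sha256).digest()
        if not hmac.compare_digest(expected, token.mac):
            raise PermissionError("forged or corrupted token")
        fields = json.loads(token.payload)
        if fields["block"] != block_id or fields["mode"] != "READ":
            raise PermissionError("token does not cover this block or mode")
        if now > fields["expires"]:
            raise PermissionError("token expired")
        return self.blocks[block_id]


key = secrets.token_bytes(32)
namenode = NameNode(key, lifetime=600.0)  # arbitrary 10-minute lifetime
datanode = DataNode(key, {42: b"joe's data"})
namenode.readers[42] = {"joe"}

token = namenode.issue_read_token("joe", 42, now=0.0)  # check passes
namenode.readers[42].discard("joe")                      # access revoked afterwards
print(datanode.read(token, 42, now=300.0))               # still served: revocation unseen
```

A client holding only a block ID gets nothing here, which addresses the DataNode concern listed earlier. Shortening the lifetime narrows the TOCTOU window but does not close it; closing it would require the DataNode to consult the NameNode (or a revocation list) on each access, at the cost of extra round trips that count against the 3% performance budget.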