SOCIAL WORK EDUCATION ASSESSMENT PROJECT (SWEAP):

Responding to the

Challenges of Assessment

Pre-Conference Workshop

BPD Annual Conference

Louisville, KY

March 19, 2014

WORKSHOP OUTLINE

• Introductions
  o Introducing SWEAP / Goodbye BEAP
  o The SWEAP Team
  o Workshop Participants
• Overview of Accreditation
  o History of Accreditation
  o Direct vs. Indirect Measures
  o Implicit Curriculum
  o Multiple Measures
• Organizational Framework for Program Assessment
  o EPAS 2008 (& 2015)
  o Competencies
  o Characteristic Knowledge, Values & Skills
  o Practice Behaviors
• SWEAP Instruments
  o Entrance
  o Exit
  o Alumni/Graduate
  o Employer
  o Curriculum Instrument (FCAI)
  o Field Instrument (FPPAI)
• BREAK

WORKSHOP OUTLINE (CONTINUED)

• Unique Benefits of SWEAP
• Tying SWEAP to Program Assessment
  o Linking SWEAP to EPAS
  o Matrix
  o Program Integration Example
  o Using SWEAP beyond EPAS
• Sharing Assessment Ideas
  o Group Work
• Navigating SWEAP
  o Website
  o Ordering
  o Raw Data Policy
  o Processing
  o Reports
• Questions

INTRODUCING SWEAP

• Was BEAP

• Now SWEAP

o Not just for undergraduate programs anymore

o Can assess foundation year for graduate programs as well

o Can be modified for program specific advanced year assessment

THE SWEAP TEAM

Vicky Buchan

Colorado State University

victoria.buchan@colostate.edu

Kathryn Krase

LIU Brooklyn

kathryn.krase@liu.edu

Brian Christenson

Lewis-Clark State College

blchristenson@lcsc.edu

Phil Ng

BEAP@PHILNG.NET

Tobi DeLong Hamilton

Lewis-Clark State College, CDA

tadelong-hamilton@lcsc.edu

Ruth Gerritsen-McKane

University of Utah

ruth.gerritsen-mckane@socwk.utah.edu

Sarah Jackman

Sarah.jackman@socwk.utah.edu

Patrick Panos

University of Utah

patrickpanos@gmail.com

Roy (Butch) Rodenhiser

Boise State University

RoyRodenhiser@boisestate.edu

INTRODUCING WORKSHOP PARTICIPANTS

• Your Name
• Your Program
• Your Role
• Have you used SWEAP?
  o If so, which instrument(s)?

OVERVIEW OF ACCREDITATION:

HISTORY OF CSWE ACCREDITATION

o What was the process like before BEAP?
o How has it changed over time?
o How do changes to EPAS impact it?
o Move to competency-based educational standards in EPAS 2008

OVERVIEW OF ACCREDITATION:

DIRECT VS. INDIRECT MEASURES

o Direct Measures

• “Student products or performances that

demonstrate that specific learning has taken

place.”

• Examples (SWEAP and Non-SWEAP)

o Indirect Measures

• “May imply that learning has taken place

(e.g., student perceptions of learning) but do

not specifically demonstrate that learning or

skill.”

• Examples (SWEAP and Non-SWEAP)

o Which do you need and why?

OVERVIEW OF ACCREDITATION:

IMPLICIT CURRICULUM

o What is Implicit Curriculum?

• “Educational environment in which the explicit

curriculum is presented. It is composed of the

following elements: the program’s

commitment to diversity; admissions policies

and procedures; advisement, retention, and

termination policies; student participation in

governance; faculty; administrative structure;

and resources.” (EPAS, 2008)

• How do you measure it?

• Why should you measure it?

OVERVIEW OF ACCREDITATION:

MULTIPLE MEASURES

o The Importance of Multiple Measures

o SWEAP alone is not enough

ORGANIZATIONAL FRAMEWORK FOR

PROGRAM ASSESSMENT

EPAS 2008 (& 2015)

• Working under EPAS 2008

• EPAS 2015 underway… expected changes

o Fewer competencies

o Fewer practice behaviors

ORGANIZATIONAL FRAMEWORK FOR

PROGRAM ASSESSMENT

COMPETENCIES

• EPAS 2.1—Core Competencies

o Competency-based education

o Outcome performance approach to curriculum design.

o Measurable practice behaviors comprised of knowledge,

values, & skills.

o Need to demonstrate integration & application of
competencies in practice with individuals, families, groups,
organizations, and communities.

o 10 competencies listed along with description of

characteristic knowledge, values, skills, & practice

behaviors that may be used to operationalize the

curriculum and assessment methods.

o Programs may add competencies consistent with their

missions and goals.

ORGANIZATIONAL FRAMEWORK FOR

PROGRAM ASSESSMENT

COMPETENCIES

• 2.1.1—Identify as a professional social worker and conduct

oneself accordingly.

• 2.1.2—Apply social work ethical principles to guide professional

practice.

• 2.1.3—Apply critical thinking to inform and communicate

professional judgments.

• 2.1.4—Engage diversity and difference in practice.

• 2.1.5—Advance human rights and social and economic justice.

• 2.1.6—Engage in research-informed practice and practice-informed research.

• 2.1.7—Apply knowledge of human behavior and the social

environment.

• 2.1.8—Engage in policy practice to advance social and

economic well-being and to deliver effective social work

services.

• 2.1.9—Respond to contexts that shape practice.

• 2.1.10— Engage, assess, intervene, and evaluate with

individuals, families, groups, organizations, and communities.

ORGANIZATIONAL FRAMEWORK FOR

PROGRAM ASSESSMENT:

CHARACTERISTIC KNOWLEDGE, VALUES & SKILLS

• Current focus on measuring practice behaviors.

• Don’t forget about knowledge, values & skills.

ORGANIZATIONAL FRAMEWORK FOR

PROGRAM ASSESSMENT

PRACTICE BEHAVIORS

• Multiple practice behaviors per competency

• Each practice behavior MUST be measured for self-study/reaccreditation

• TWO measures required for each practice behavior

o At least one measure must be DIRECT
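The two-measure rule above can be checked mechanically against a program's assessment plan. The following is a minimal sketch, not a SWEAP tool: the `Measure` class, the `check_plan` function, and the example plan are hypothetical, and tagging the FPPAI as a direct measure and the Exit survey as an indirect one is an assumption each program should confirm for itself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Measure:
    name: str     # e.g. "SWEAP-FPPAI" or a course assignment
    direct: bool  # True if the program classifies it as a direct measure


def check_plan(plan):
    """Return {practice_behavior: [reasons]} for behaviors that break the rule.

    The rule applied: each practice behavior needs at least two measures,
    and at least one of them must be direct.
    """
    problems = {}
    for behavior, measures in plan.items():
        reasons = []
        if len(measures) < 2:
            reasons.append("fewer than two measures")
        if not any(m.direct for m in measures):
            reasons.append("no direct measure")
        if reasons:
            problems[behavior] = reasons
    return problems


# Hypothetical plan; the direct/indirect tags are assumptions.
example_plan = {
    "use supervision and consultation": [
        Measure("SWEAP-Exit", direct=False),
        Measure("SWEAP-FPPAI", direct=True),
    ],
    "critique and apply knowledge": [
        Measure("SWEAP-Exit", direct=False),
    ],
}

for behavior, reasons in check_plan(example_plan).items():
    print(behavior, "->", ", ".join(reasons))
```

Running the check before a self-study is drafted makes gaps visible while there is still time to add a measure.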

SWEAP INSTRUMENTS

WHAT ARE THEY AND HOW DO I USE THEM?

• Entrance
• Exit
• Alumni/Graduate & Employer
• Curriculum Instrument (FCAI)
• Field Instrument (FPPAI)

ENTRANCE

PURPOSE

• Provides demographic profile of entering students.

• Completed at time of entrance into the program

(Program Defined).

• Provides overview of financial resources students

are using or plan to utilize.

• Provides employment status & background

information regarding both volunteer and paid

human service experience.

• Helps track planned or unplanned changes in the

profile of students in the program.

• Evaluate impact of policy changes, such as

admissions procedures, over time.

ENTRANCE

QUESTIONS

o Student tracking: ID number & Survey completion date
o Gender
o Year in school
o Overall GPA & GPA in major & Highest possible GPA at school
o Length of current social work-related work experience (volunteer & paid)
o Citizenship/length of residence in USA
o Employment plans during social work education
o Hours per week expected to work during education
o Sources of financial aid expected
o Language fluency
o Expected date of graduation
o Race/Ethnicity
o Disabilities/Accommodation

EXIT

PURPOSE

• Completed by students just prior to graduation.

o Often administered in field seminar or capstone seminar.

• Feedback from students about their experiences while in the

program.

• Addresses:

o Evaluation of curriculum objectives based on EPAS.

o Post-graduate plans related to both employment and
graduate education.

• Collects demographic information to compare with the

entrance profile.

EXIT

QUESTIONS

• Educational Experience

o Including implicit curriculum assessment

• Current Employment

• Employment-seeking activities

• Current & anticipated Social Work Employment

o Primary function & major roles

• Post Graduate Educational Plans

EXIT

QUESTIONS

• Students Evaluate how well program prepared them to

perform practice behaviors

• Professional Activities

o Use of research techniques to evaluate client

progress & Use of program evaluation methodology

• Personal Demographic Information

o Gender, Citizenship, Language fluency, & Disabilities

ALUMNI/GRADUATE

PURPOSE

• Intended for completion two years after graduation

o Standardized timing for administration is essential to create a reliable dataset

for comparison over time.

• Alumni evaluate how well program prepared them for

professional practice.

• Alumni employed in social work and those not

employed in social work are surveyed.

• Also gathers information on current employment,

professional development activities, and plans/

accomplishments related to further education.

ALUMNI/GRADUATE

QUESTIONS

• Current Employment

• Current Social Work Employment

• Evaluation of preparation by the Program in the 10

EPAS competency areas (using Likert-type scale)

• Educational Activities

• Professional Activities

• Demographics

EMPLOYER

PURPOSE

• Intended for completion two years after graduation

• Addresses both accreditation and university concern for

feedback from the practice community.

• Measures graduate’s preparation for practice based on

supervisor’s assessment.

• Alumni/ae request employer complete the survey:

o Addresses primary concern of confidentiality.

o Use of student identifier allows connection to other instruments.

EMPLOYER

QUESTIONS

• Educational background of supervisor/employer

• Twelve (12) items which evaluate alumni/ae

proficiency in all EPAS competencies.

BREAK TIME

Foundation Curriculum Assessment

Instrument

CURRICULUM INSTRUMENT (FCAI)

PURPOSE

1. Provides Pre/Post test in seven major

curricular areas of the foundation year.

2. Provides a direct measure to assist

programs with evaluation of their

curriculum.

3. Assists with identification of curricular areas

that may need attention.

4. Provides national comparative data.

Curricular Components

Curriculum Area                        Number of Questions
Practice                               13
Human Behavior & Social Environment    10
Policy                                 9
Research                               9
Ethics and values                      8
Diversity                              8
Social and Economic Justice            7

Sample HBSE Question

• The concept “person-in-environment”

includes which of the following:

a. Clients are influenced by their

environment

b. Clients influence their environment

c. Behavior is understood in the context of

one’s environment

d. All of the above

Sample Practice Question

• Determining progress toward goal

achievement is one facet of the _____

stage.

o a. Engagement

o b. Evaluation

o c. Assessment

o d. Planning

Overview of FCAI Respondents

Instrument    2011     2012     2013      Total
Entrance      1,986    2,009    8,432     12,427
Exit          964      1,714    5,369     8,047
Totals        2,950    3,723    13,801    20,474

Reliability Testing

• Version 9

– Tested in two junior practice classes

– Students tested twice, 2 weeks

apart

– Pearson’s correlation coefficient

• r = .86
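The test-retest figure above is a Pearson correlation between the same students' scores on two administrations. A minimal sketch of that calculation follows; the score lists are made up for illustration and are not FCAI data, and `pearson_r` is a hypothetical helper name.

```python
import math


def pearson_r(first, second):
    """Pearson correlation between paired scores from two administrations."""
    n = len(first)
    if n != len(second) or n < 2:
        raise ValueError("need two equal-length lists with at least 2 scores")
    mean_x = sum(first) / n
    mean_y = sum(second) / n
    # Sums of cross-products and squared deviations from the means.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(first, second))
    sxx = sum((x - mean_x) ** 2 for x in first)
    syy = sum((y - mean_y) ** 2 for y in second)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical raw scores for five students tested two weeks apart.
time_1 = [30, 35, 41, 28, 38]
time_2 = [32, 34, 43, 27, 39]
print(round(pearson_r(time_1, time_2), 2))
```

Values close to 1 indicate that students keep roughly the same rank order across the two sittings.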

Item difficulty index

• Overall difficulty or average should be around .5

(Cohen & Swerdlik, 2005)

• FCAI = .523 (n=415)

• “This is a very good difficulty level for the test.

Not likely to misrepresent the knowledge level of

test takers”.
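An item difficulty index is the proportion of test takers who answer an item correctly, and the overall difficulty is the average of those proportions. The sketch below assumes responses have already been scored as 1 (correct) or 0 (incorrect); the function names and the tiny response matrix are hypothetical.

```python
def item_difficulty(responses):
    """Proportion correct for each item.

    responses: one row per test taker, one 0/1 entry per item.
    """
    n_takers = len(responses)
    n_items = len(responses[0])
    return [
        sum(row[j] for row in responses) / n_takers
        for j in range(n_items)
    ]


def mean_difficulty(responses):
    """Average item difficulty across the whole test."""
    p_values = item_difficulty(responses)
    return sum(p_values) / len(p_values)


# Hypothetical scored responses: 4 test takers, 2 items.
scored = [
    [1, 0],
    [1, 1],
    [0, 0],
    [1, 0],
]
print(item_difficulty(scored))   # per-item proportions correct
print(mean_difficulty(scored))   # overall difficulty
```

An overall value near .5 means the test is neither so easy nor so hard that scores bunch at one end.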

Reliability & Effect Size

• Cronbach’s alpha = .784

• Effect Size d = 6.87
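For readers who want to compute these statistics on their own data, here is a minimal sketch of Cronbach's alpha and of Cohen's d with a pooled standard deviation. The slides do not state which effect-size formula or software produced the figures above, so the pooled-SD version is an assumption, and all data shown are invented.

```python
import math
from statistics import mean, variance


def cronbach_alpha(rows):
    """Cronbach's alpha for rows of item scores (one row per respondent)."""
    k = len(rows[0])
    if k < 2 or len(rows) < 2:
        raise ValueError("need at least 2 items and 2 respondents")
    # Sample variance of each item, and of each respondent's total score.
    item_vars = [variance([row[j] for row in rows]) for j in range(k)]
    total_var = variance([sum(row) for row in rows])
    return (k / (k - 1)) * (1 - float(sum(item_vars)) / float(total_var))


def cohens_d(pre, post):
    """Cohen's d for two independent groups, using the pooled SD."""
    n1, n2 = len(pre), len(post)
    pooled_sd = math.sqrt(
        ((n1 - 1) * variance(pre) + (n2 - 1) * variance(post)) / (n1 + n2 - 2)
    )
    return (mean(post) - mean(pre)) / pooled_sd


# Hypothetical data for illustration only.
item_scores = [[3, 4, 3], [2, 2, 3], [5, 4, 5], [1, 2, 1]]
print(round(cronbach_alpha(item_scores), 3))
print(round(cohens_d([30, 33, 35], [38, 40, 43]), 2))
```

Because entering and exiting respondents are not necessarily the same students, the sketch treats them as independent groups; a matched pre/post design would call for a paired calculation instead.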

Current Data (1/13 to 9/13)

• Number of schools using the FCAI in 2013: 110
• Number of respondents in 2013:
  o Pre-test: 8,432
  o Post-test: 5,369

School X / National Comparison

            PRE TEST          POST TEST         SD      SIG
School X    31.24 (48.81%)    39.35 (61.48%)    6.19    .000
National    30.38 (48.10%)    37.54 (58.60%)    7.59    .000

Overall Scores Pre-Post

                  BSW Entering    BSW Exiting
                  (N = 8,432)     (N = 5,369)
Raw Score         32.8            40.05
Mean % correct    51.2% / 64      62.5% / 64
T-test            380.2
S.D.              7.8
Sig               .000
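The percentages in the table are raw scores divided by the number of FCAI items. A short sketch of that arithmetic, using the question counts from the Curricular Components slide, is below; the function name is hypothetical.

```python
# Question counts per curricular area, as listed on the
# Curricular Components slide.
CURRICULAR_AREAS = {
    "Practice": 13,
    "Human Behavior & Social Environment": 10,
    "Policy": 9,
    "Research": 9,
    "Ethics and values": 8,
    "Diversity": 8,
    "Social and Economic Justice": 7,
}

TOTAL_ITEMS = sum(CURRICULAR_AREAS.values())  # 64 items in all


def percent_correct(raw_score, total_items=TOTAL_ITEMS):
    """Convert a raw score (number of items correct) to a percentage."""
    return 100 * raw_score / total_items


entering = percent_correct(32.8)   # mean raw score, BSW entering
exiting = percent_correct(40.05)   # mean raw score, BSW exiting
print(f"entering {entering:.1f}%, exiting {exiting:.1f}%, "
      f"gain {exiting - entering:.1f} points")
```

The same conversion lets a program express its own mean raw scores on the scale used in the national comparison.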

BSW Student Scores by Curricular Area

One Program FCAI Entrance & Exit

Curricular Area    Question      Suggested Competency    Correct responses and % (Entrance)    Correct responses and % (Exit)
Practice           Question 1    2.1.1C                  14/64 (21.8%)                         39/64 (61%)
Practice           Question 2    2.1.10A                 46/64 (71.8%)                         60/64 (93.7%)
Practice           Question 4    2.1.10H                 21/64 (32.8%)                         43/64 (67.2%)

Expansion beyond BSW

• Based upon CSWE assertions related to educational

levels in social work education, we expanded

testing to three additional groups:

o MSW foundation students:

• entering

• exiting

o Advanced standing students:

• entering

Points to Keep in Mind about

the FCAI

1. Purpose of this instrument: to review and

improve curriculum

2. Program will want to “monitor” scores over

several years (or several cohorts) for trends.

3. The FCAI can be considered a measure of

“value added” from program entry to exit.

4. Benchmarks: can be set two ways,

a. by competency

b. overall score
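One way to work with benchmarks set either way is to report the share of students who meet them. The sketch below is illustrative only: the benchmark values, score lists, and function names are hypothetical, and each program chooses its own benchmarks.

```python
def share_meeting(scores, benchmark):
    """Fraction of students whose score is at or above the benchmark."""
    if not scores:
        raise ValueError("no scores supplied")
    return sum(1 for s in scores if s >= benchmark) / len(scores)


def benchmark_report(overall, by_competency,
                     overall_benchmark, competency_benchmark):
    """Share meeting the benchmark overall and for each competency.

    overall: percent-correct scores, one per student.
    by_competency: {competency label: percent-correct scores per student}.
    """
    report = {"overall": share_meeting(overall, overall_benchmark)}
    for competency, scores in by_competency.items():
        report[competency] = share_meeting(scores, competency_benchmark)
    return report


# Hypothetical exit scores (percent correct) for four students.
report = benchmark_report(
    overall=[55, 62, 70, 48],
    by_competency={"2.1.7": [50, 80, 70, 40], "2.1.10": [60, 65, 75, 55]},
    overall_benchmark=60,
    competency_benchmark=60,
)
for label, share in report.items():
    print(f"{label}: {share:.0%} met the benchmark")
```

Tracking these shares across cohorts supports the trend monitoring described in point 2.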

Field Practicum/Placement

Assessment Instrument

(FPPAI)

FIELD PRACTICUM/PLACEMENT

ASSESSMENT INSTRUMENT

(FPPAI)

• Responds to need for a standardized

field/practicum assessment instrument that

measures student achievement of practice

behaviors.

FIELD INSTRUMENT (FPPAI)

PILOTING PHASE

• Initial Piloting for BSW in May 2008
• Second Pilot in Fall 2008/Spring 2009
• Third Pilot in Fall 2009
• Reliability Analysis
  o Cronbach’s Alpha of 0.91 or higher in each practice behavior

• Full implementation in BSW: Fall 2010

• Piloting in MSW: Spring-Fall 2012

• Full implementation in MSW (Foundation): Fall 2013

FIELD INSTRUMENT (FPPAI)

METHODOLOGY

• 58 Likert Scale questions measuring practice

behaviors linked to the EPAS 2008 competencies.

• Qualitative feedback form for each domain

available for program use.

• Available online and in print format.

• Individual program outcomes report with national

comparisons available.

• Individual program outcomes report with national

comparisons for EPAS 2008 Competencies &

Practice Behaviors including CSWE benchmark

reporting.

• Can be used as a final field assessment and in a mid-test/post-test design.

FIELD INSTRUMENT (FPPAI)

SCALE

FIELD INSTRUMENT (FPPAI)

CURRENT STATUS

• 84 programs currently use this instrument
• More than 3,132 administrations to date
• National data comparisons are available
• Cronbach’s Alpha reliability test of internal consistency at midpoint (pilot and first two years of testing): 0.969
• Cronbach’s Alpha reliability test of internal consistency at final (pilot and first two years of testing): 0.975

BREAK TIME

SWEAP

UNIQUE BENEFITS

• Student demographics
• Numerous data points for comparison
• Explicit and Implicit curriculum assessment
• Doesn’t end at graduation
• Peer comparison by region, program type, auspice & nationally
• …and more

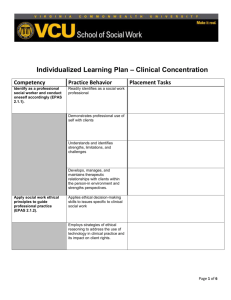

TYING SWEAP

TO PROGRAM ASSESSMENT

LINKING SWEAP TO EPAS

• All instruments updated to reflect 2008 EPAS

• All instruments will be updated and available as

soon as 2015 EPAS goes into effect

• Competency Matrix for 2008 EPAS (Handout)

TYING SWEAP

TO PROGRAM ASSESSMENT

Educational Policy

2.1.1—Identify as a professional

social worker and conduct oneself

accordingly

Practice Behavior

use supervision and consultation

Measures

SWEAP-Exit

SWEAP-FPPAI

SWEAP-Employer

SWEAP-Alumni

TYING SWEAP TO PROGRAM

ASSESSMENT: MATRIX

Competency

2.1.7—Apply knowledge of

human behavior and the

social environment.

A. utilize conceptual

frameworks to guide the

processes of assessment,

intervention, and evaluation;

B. critique and apply

knowledge to understand

person and environment.

Alumni

Exit

Employer

FCAI

FPPAI

TYING SWEAP

TO PROGRAM ASSESSMENT

PROGRAM INTEGRATION EXAMPLE

• Program Integration Example (Handout)

TYING SWEAP

TO PROGRAM ASSESSMENT

USING SWEAP BEYOND EPAS

• Curricular Development

• Addressing Implicit Curriculum Concerns

• Understanding student body

o Changes in student body over time

SHARING ASSESSMENT IDEAS

Break into small groups,

tackle the assigned

competency discussing

the following:

How can/do you measure

competencies in your

programs?

Offer SWEAP & Non-SWEAP options

SHARING ASSESSMENT IDEAS

Break into small groups, tackle

the assigned competency

discussing the following:

How can/do we measure

Implicit Curriculum?

Offer SWEAP &

Non-SWEAP Measures

NAVIGATING SWEAP

THE WEBSITE

http://sweap.utah.edu

o Navigating the site

o Locating the results of your instruments

NAVIGATING SWEAP

ORDERING

o Online through website.

o Pay by credit-card, or send check.

o Receive email with .pdfs to be printed, or

links to online instruments.

o Printing .pdfs

• Can be double-sided, BUT DO NOT STAPLE

For questions about your order or status:

sarah.jackman@socwk.utah.edu

Online Instruments

• All SWEAP instruments are available in electronic format.

• eTickets (electronic tickets) are simple to use. You order

them via the SWEAP order page on our website, just like

you would order instruments to print on paper.

• Each eTicket is an individual instrument and cannot be

copied.

• The simplest way to administer instruments with eTickets is

to send an email to each student, field educator or

alumnus/a who is expected to complete the instrument.

In that email, you provide an individual eTicket link.

• The respondent enters the letters and numbers that

follow the equal sign (=) on the eTicket link. For instance,

to access the email example, the code to enter in the

pink box is RITNFN26197 (see next slide).
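The code a respondent enters is simply whatever follows the last equal sign in the eTicket link. A small sketch of pulling it out is below; the link shown is a made-up placeholder (the slides do not give the real link format), and only the code RITNFN26197 comes from the slide.

```python
def eticket_code(link):
    """Return the letters and numbers that follow the last '=' in a link."""
    _, separator, code = link.rpartition("=")
    code = code.strip()
    if not separator or not code:
        raise ValueError("link has no code after an equal sign")
    return code


# Hypothetical link; host, path, and parameter name are placeholders.
example_link = "https://example.org/instrument?eticket=RITNFN26197"
print(eticket_code(example_link))
```

A helper like this can be useful when preparing a mail merge, so that the code can be shown in the email body alongside the link.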

NAVIGATING SWEAP

RAW DATA POLICY

• We offer return of de-identified data to programs

with the following data removed:

  o Year of Birth
  o Day of Birth
  o Parts of Social Security Numbers
  o ZIP code of Employment or Residence
  o Sex / Gender
  o Ethnic Identity

• In addition, specific answers to the FCAI instruments

will not be returned, nor specific answers to the

Values at Exit or Entrance. These will be reported in

an aggregate only.

• FPPAI instruments, due to their nature, will return all

identifying information.
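Programs that keep their own copies of survey data may want to apply a similar de-identification step before sharing files internally. The sketch below drops the categories listed in the policy from a single record; the field names are hypothetical, since the slides name categories rather than column names, and this is not SWEAP's own processing code.

```python
# Hypothetical column names standing in for the categories the policy
# says are removed before data are returned.
REMOVED_FIELDS = {
    "year_of_birth",
    "day_of_birth",
    "ssn_partial",
    "zip_code",
    "sex_gender",
    "ethnic_identity",
}


def deidentify(record):
    """Return a copy of one survey record without the removed fields."""
    return {
        field: value
        for field, value in record.items()
        if field not in REMOVED_FIELDS
    }


# Hypothetical record for illustration.
raw = {
    "survey_year": 2013,
    "zip_code": "00000",
    "sex_gender": "F",
    "hours_worked_per_week": 20,
}
print(deidentify(raw))
```

Using a set of field names keeps the rule in one place, so the list can be adjusted if the policy changes.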

NAVIGATING SWEAP

RAW DATA POLICY

• There is a surcharge for this service: a one-time

charge of $25.00 to have all

permissible data returned to the program.

NAVIGATING SWEAP

PROCESSING

• Paper instruments need to be mailed to Utah.

o Processing involves scanning forms into system and then

calculating data.

o Can take 3-6 weeks, depending on time of year.

• Online instruments

o Processing is very quick.

o Can be days, instead of weeks.

o No need to be scanned at our end.

NAVIGATING SWEAP

REPORTS

• Receive email when reports are ready.

• Retrieve reports through website.

• Can request reports with tailored comparisons.

Coming in 2015!

• Bridging the gap for direct measures: New

direct measures in-line with 2015 EPAS

• Outcome Reports on your webpage as

required by CSWE

• All current instruments updated for EPAS

2015

QUESTIONS?