kMemvisor

advertisement

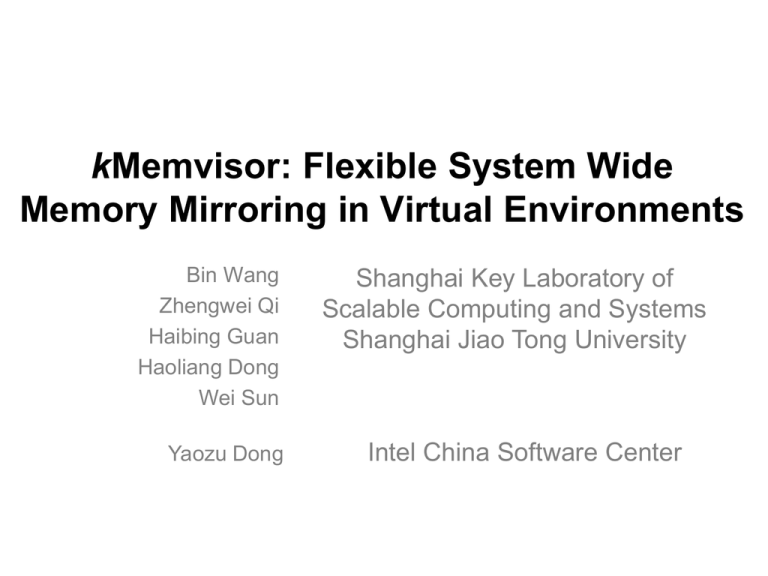

kMemvisor: Flexible System Wide Memory Mirroring in Virtual Environments Bin Wang Zhengwei Qi Haibing Guan Haoliang Dong Wei Sun Yaozu Dong Shanghai Key Laboratory of Scalable Computing and Systems Shanghai Jiao Tong University Intel China Software Center Is your memory error-prone? Today's memories do become errorprone. • [B. Schroeder et al. SIGMETRICS 09] Memory failures are common in clusters • 8% of DIMMs have correctable errors per year • 1.29% uncorrectable errors in Google testbed Today's memories do become errorprone. • [B. Schroeder et al. SIGMETRICS 09] Memory failures are common in clusters • 8% of DIMMs have correctable errors per year • 1.29% uncorrectable errors in Google testbed Memory -intensive App. Memory -intensive App. Memory -intensive App. Memory HA in Cloud Computing 1.29% error rate Memory HA in Cloud Computing 1.29% error rate 13,000 failures per year 1,083 failures per month 35 failures per day 1.5 failure per hour Memory HA in Cloud Computing 1.29% error rate 13,000 failures per year 1,083 failures per month 35 failures per day 1.5 failure per hour 99.95% Service Level Aggrement Memory HA in Cloud Computing 1.29% error rate 4.38 hours downtime per year 21.56 minutes downtime per month 5.04 minutes downtime per day 13,000 failures per year 1,083 failures per month 35 failures per day 1.5 failure per hour 99.95% Service Level Aggrement Memory HA in Cloud Computing 1.29% error rate 4.38 hours downtime per year 21.56 minutes downtime per month 5.04 minutes downtime per day 13,000 failures per year 1,083 failures per month 35 failures per day 1.5 failure per hour 99.95% Service Level Aggrement [Andy A. Hwang et al. ASPLOS 12] Memory Errors happen at a significant rate in all four sites with 2.5 to 5.5% of nodes affected per system Existing Solutions Hardware ECC (Hp, IBM, Google et al.) Mirrored Memory (Hp, IBM, Google et al.) Existing Solutions • Software - Duo-backup (GFS, Amazon Dynamo) System level tolerance - Checkpoint, hot spare+ VM migration /replication Application-Specific and High overhead (eg. Remus [NSDI 08] with 40 Checkpoints/sec, overhead 103%) Design Guideline • Low cost • Efficiency & compatibility - Arbitrary platform - On the fly+ hot spare • Low maintaining - Little impact to others (eg., networking utilization) - Without migration • Cloud requirement - VM granularity kMemvisor • A hypervisor providing system-wide memory mirroring based on hardware virtualization • Supporting VMs with or without mirror memory feature on the same physical machine • Supporting NModularRedundancy for some special mission critical applications kMemvisor High-level Architecture Ordinary VM App App Level HA VM … App System-wide HA VM … Guest OS Guest OS … App Guest OS kMemvisor CPU Management Memory Management Modify CPU mva = mirror(nva) Code Translation Management Hardware Translate Memory Code Page Table … ... MOV addr, data MOV mirror_addr, data ... … Load Disk Code ... MOV addr, data ... … Memory Mirroring Virtual Addr. Physical Mem. Memory Mirroring Create native PTE Virtual Addr. Physical Mem. Memory Mirroring Create native PTE Virtual Addr. Physical Mem. Memory Mirroring Create mirror PTE Virtual Addr. Physical Mem. Memory Mirroring Create mirror PTE Virtual Addr. Physical Mem. Memory Mirroring Virtual Addr. Physical Mem. mov $2, addr 2 2 Memory Mirroring Virtual Addr. Physical Mem. mov mov 2 $2, addr $2, mirror(addr) 2 Retrieve Memory Failure Virtual Addr. Physical Mem. X Memory corruption 2 Retrieve Memory Failure Virtual Addr. Physical Mem. X 2 Retrieve Memory Failure Virtual Addr. Physical Mem. X 2 Re-create PTE Retrieve Memory Failure Virtual Addr. Physical Mem. X 2 Re-create PTE Retrieve Memory Failure Virtual Addr. Physical Mem. X 2 2 Copy Create Mirror Page Table malloc() Application System Call mmap() Guest OS Hyper Call update_va_mapping() 1 kMemvisor 2 Native Create native PTE Create mirror PTE Mirror Virtual Addr. Physical Mem. Hardware Modified Memory Layout Native Space Virtual Memory Mirror Space Physical Memory 0xFFFFFFFFFFFF 0xFFFFFC000000 kMemvisor Space Native Kernel Space 0x800000000000 High Quality Memory Mirrored Memory Mirrored User Stack Space Native User Stack Space Mirrored User Heap Space 0x000000000000 Native User Heap Space mva = mirror(nva)=nva+offset Native Memory Memory Synchronization Native instructions Modified instructions … … movq $4, 144(%rdi) … … call log_text … addq ... %rdx, (%rax) … … pushq %rbp … … .Log_text: ... ... Compiler (cc1) Sysbench.c Binary Tanslation Sysbench.s movq $4, 144(%rdi) movq $4, offset+144(%rdi) call log_text addq %rdx, (%rax) addq %rdx,offset(%rax) pushq %rbp movq %rbp, offset(%rsp) .Log_text: pushq %rax movq 8(%rsp), %rax movq %rax, (offset+8)(%rsp) popq %rax Assembler (as) M_sysbench.s Linker (ld) Sysbench.o Modified Libraries Sysbench.out Implicit Memory Instructions …… call pushl movl movl movl popl …… grep %eax %eax, offset(%esp) 4(%eax), %eax %eax, offset+4(%esp) %eax Explicit and Implicit Instructions …… call pushl movl movl movl popl …… grep %eax %eax, offset(%esp) 4(%eax), %eax %eax, offset+4(%esp) %eax Would not be executed untill call returns causing data inconsistence Explicit and Implicit Instructions …… call pushl movl movl movl popl …… grep: pushl movl movl movl popl …... grep %eax %eax, offset(%esp) 4(%eax), %eax %eax, offset+4(%esp) %eax %eax %eax, offset(%esp) 4(%eax), %eax %eax, offset+4(%esp) %eax Would not be executed untill call returns causing data inconsistence Explicit and Implicit Instructions …… call pushl movl movl movl popl …… grep: pushl movl movl movl popl …... grep %eax %eax, offset(%esp) 4(%eax), %eax %eax, offset+4(%esp) %eax %eax %eax, offset(%esp) 4(%eax), %eax %eax, offset+4(%esp) %eax Would not be executed untill call returns causing data inconsistence Instrumenting mirror instructions at the start of the called procedure The Stack After An int Instruction Completes Privilege changed Pushed by processor Native Mirror ss ss esp eflags cs eip esp error code Copied by kMemvisor esp eflags cs eip error code The Stack After An int Instruction Completes Privilege changed Pushed by processor Native Mirror ss ss esp eflags cs Copied by kMemvisor eip error code eip esp error code Privilege not Native changed Mirror Data A Data A Pushed by processor esp Data B eflags cs esp eflags cs Copied by kMemvisor Data B eflags cs eip eip error code error code Test Environment • Hardware - Dell PowerEdge T610 server:6-core 2.67GHz Intel Xeon CPU with 12MB L3 cache - Samsung 8 GB DDR3 RAMs with ECC and a 148 GB SATA Disk • Software - Hypervisor: Xen-3.4.2 - Kernel: 2.6.30 - Guest OS: Busybox-1.19.2 Malloc Micro-test with Different Block Size The impact on performance is somewhat large when the allocated memory is less than 256KB but much more limited when the size is larger. Memory Sequential Read & Write Ave rageove rhe ad 33% No overhead Overhead for sequential memory write Overhead for sequential memory read XV6 Benchmark Large stove rhe ad 7.2% Ave rageove rhe ad 5% Large stove rhe ad 60% Ave rageove rhe ad 43% 200 Native 180 Modified Native 1.6 Modified 1.4 1.2 1 0.8 0.6 0.4 Normalized Latency Normalized Latency 1.8 1.2 160 Count of memory instructions 140 1 120 0.8 100 0.6 80 60 0.4 40 0.2 0.2 0 20 0 sbrk bigwrite mem fork Usertests performance comparison 0 echo mkdir wc cat ls Command performance comparison Count of instructions 1.4 Web Server & Database Large stove rhe ad 10% Latency 2.5 Native Modified 2 1.5 Double overhead Normalized 1 Performance of thttpd Impacted by lock operations 0.5 0 create insert select update delete The performance impact for create, insert, select, update, and delete in SQLite The cache mechanism will cause frequent memory writing operations. Compilation Time Ave rageove rhe ad 6% Mirror instructions detail information SQLite overhead 5.64% Compilation time overhead brought by kMemvisor Sometimes, implicit write instructions are less but usually more complicated themselves which would cost extra overhead. Discussion • Special memory area - Hypervisor + Kernel page table • Other device memory operation - IO operation • Address conflict - mva = nva+offset • Challenge in binary translation - Self-modifying code & value • Multi-threads and multi-cores - Multi-thread: emulate mirrored instruction - Multi-cores: explicit mutex lock Conclusion • A software mirrored memory solution – CPU-intensive tasks is almost unaffected – Our stressful memory write benchmark shows the backup overhead of 55%. – Average overhead in real world applications 30% • Dynamic binary translation • Full kernel mirroring Thanks!