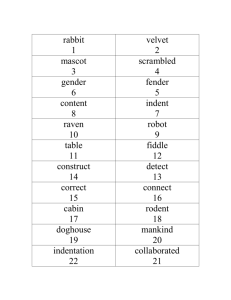

Mandarin Chinese Speech Recognition

Mandarin Chinese

- Tonal language (inflection matters!)
- Monosyllabic language
- 1st tone: high, constant pitch (like saying "aaah")
- 2nd tone: rising pitch ("Huh?")
- 3rd tone: low pitch ("ugh")
- 4th tone: high pitch with a rapid descent ("No!")
- "5th tone": neutral, used for de-emphasized syllables
- Each character represents a single base syllable and tone
- Most words consist of 1, 2, or 4 characters
- Heavily contextual language

Mandarin Chinese and Speech Processing

- Acoustic representations of Chinese syllables
- Structural form: (consonant) + vowel + (consonant)

Mandarin Chinese and Speech Processing

- Phone sets
  - Initial/final phones [1]
  - e.g. shi, ge, zi = (sh + ib), (g + e), (z + if)
  - Initial phones: unvoiced, 1 phone
  - Final phones: voiced (tone 1-5), can consist of multiple phones

Mandarin Chinese and Speech Processing

- Strong tonal recognition is crucial to distinguish between homonyms [3] (especially without context)
- Creating tone models is difficult
  - Discontinuities exist in the F0 contour between voiced and unvoiced regions

Prosody

- Prosody: "the rhythmic and intonational aspect of language" [2]
- Embedded Tone Modeling [4]
- Explicit Tone Modeling [4]

Tone Modeling

- Embedded Tone Modeling: tonal acoustic units are joined with spectral features at each frame [4]
- Explicit Tone Modeling: tone recognition is completed independently and combined after post-processing [4]

Tone Modeling

- Pitch, energy, and duration (prosody) combined with lexical and syntactic features improve tonal labeling
- Coarticulation: variations in syllables can cause variations in tone
  - Bu4 + Dui4 = Bu2 Dui4 (wrong)
  - Ni3 + Hao3 = Ni2 Hao3 (hello)

Embedded Tone Modeling: Two Stream Modeling (Ni, Liu, Xu)

- Spectral stream: MFCCs (Mel-frequency cepstral coefficients)
  - Describe vocal tract information
  - Distinctive for phones (short time duration)
- Pitch/tone stream: requires smoothing
  - Describes vibrations of the vocal cords
  - Independent of spectral features
  - d/dt(pitch), aka tone, and d2/dt2(pitch) are added
  - Embedded in an entire syllable
  - Affected by coarticulation (requires a longer time window), i.e. tone sandhi: context dependency

Embedded Tone Modeling: Two Stream Modeling [4]

- Tonal identification features
  - F0
  - Energy
  - Duration
  - Coarticulation (continuous speech)
- Initially use the 2-stream embedded model, followed by explicit modeling during lattice rescoring (alignment?)
- Explicit tone modeling uses a maximum entropy framework [4] (discriminative model)

Explicit Tone Modeling [4]

| No. | Feature Description | # of Features |
| --- | --- | --- |
| 1 | Duration of current, previous, and following syllables | 3 |
| 2 | Location of current syllable within word | 1 |
| 3 | Previous syllable is or is not sp | 1 |
| 4 | Statistical parameters of pitch and log-energy of current syllable (e.g. max, min, mean, etc.) | 10 |
| 5 | Normalized max and mean of pitch and energy in each syllable in the context window | 12 |
| 6 | Slope and intercept of F0 contour of current syllable, its delta, and delta-delta | 6 |
| 7 | Tones of preceding and following syllables | 2 |

Other Work: Chang, Zhou, Di, Huang, & Lee [1]

- 3 methods
  - Powerful language model (no tone modeling)
  - Embedded 2-stream
    - CER = 7.32%
    - Tone stream + feature stream
    - CER = 6.43%
  - Embedded 1-stream
    - Developed pitch extractor
    - Pitch track added to feature vector
    - CER = 6.03%

Other Work: Qian, Soong [3]

- F0 contour smoothing
- Multi-Space Distribution (MSD)
  - Models 2 probability spaces
    - Unvoiced: discrete
    - Voiced (F0 contour): continuous

Other Work: Lamel, Gauvain, Le, Oparin, Meng [6]

- Multi-layer perceptron features
  - Combined with MFCCs and pitch features
- Compare language models
  - N-gram: back-off language model
  - Neural network language model
- Language model adaptation

Other Work: O. Kalinli [7]

- Replace prosodic features with biologically inspired auditory attention cues
  - Cochlear filtering, inner hair cell, etc.
- Other features are extracted from the auditory spectrum
  - Intensity
  - Frequency contrast
  - Temporal contrast
  - Orientation (phase)

Other Work: Qian, Xu, Soong [8]

- Cross-lingual voice transformation
- Phonetic mapping between languages
  - Difficult for Mandarin and English
  - Very different prosodic features

References

[1] Eric Chang, Jianlai Zhou, Shuo Di, Chao Huang, & Kai-Fu Lee, "Large Vocabulary Mandarin Speech Recognition with Different Approaches in Modeling Tones"
[2] Merriam-Webster Dictionary, http://www.merriam-webster.com/
[3] Yao Qian & Frank Soong, "A Multispace Distribution (MSD) and Two Stream Tone Modeling Approach to Mandarin Speech Recognition", Science Direct, 2009
[4] Chongjia Ni, Wenju Liu, & Bo Xu, "Improved Large Vocabulary Mandarin Speech Recognition using Prosodic and Lexical Information in Maximum Entropy Framework"
[5] Yi Liu & Pascale Fung, "Pronunciation Modeling for Spontaneous Mandarin Speech Recognition", International Journal of Speech Technology, 2004
[6] Lori Lamel, J.L. Gauvain, V.B. Le, I. Oparin, S. Meng, "Improved Models For Mandarin Speech to Text Transcription", ICASSP, 2011
[7] O. Kalinli, "Tone and Pitch Accent Classification Using Auditory Attention Cues", ICASSP, 2011
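
Sketch: building a pitch/tone stream

The two-stream slides describe a pitch stream that needs smoothing across unvoiced regions and then has d/dt(pitch) and d2/dt2(pitch) appended. The following is a minimal sketch of that idea, not the method of any cited paper. It assumes unvoiced frames are marked with an F0 of 0.0, uses plain linear interpolation as a stand-in for smoothing, and approximates the derivatives with a central difference. The function names `interpolate_unvoiced`, `delta`, and `pitch_stream` are hypothetical.

```python
from typing import List


def interpolate_unvoiced(f0: List[float]) -> List[float]:
    """Fill unvoiced frames (assumed to be marked 0.0) by linear interpolation."""
    voiced = [i for i, v in enumerate(f0) if v > 0.0]
    if not voiced:
        return list(f0)  # no voiced frame to anchor on; return a copy unchanged
    out = list(f0)
    # Leading and trailing unvoiced frames copy the nearest voiced value.
    for i in range(voiced[0]):
        out[i] = f0[voiced[0]]
    for i in range(voiced[-1] + 1, len(f0)):
        out[i] = f0[voiced[-1]]
    # Interior gaps: straight line between the voiced frames on either side.
    for left, right in zip(voiced, voiced[1:]):
        gap = right - left
        for k in range(1, gap):
            out[left + k] = f0[left] + (f0[right] - f0[left]) * k / gap
    return out


def delta(x: List[float]) -> List[float]:
    """Central difference (x[t+1] - x[t-1]) / 2, repeating the edge frames."""
    if not x:
        return []
    padded = [x[0]] + list(x) + [x[-1]]
    return [(padded[t + 2] - padded[t]) / 2.0 for t in range(len(x))]


def pitch_stream(f0: List[float]) -> List[List[float]]:
    """Per-frame vectors [pitch, d/dt(pitch), d2/dt2(pitch)]."""
    smooth = interpolate_unvoiced(f0)
    d1 = delta(smooth)
    d2 = delta(d1)
    return [list(frame) for frame in zip(smooth, d1, d2)]


if __name__ == "__main__":
    # Made-up contour in Hz; 0.0 marks unvoiced frames (assumption).
    contour = [0.0, 100.0, 0.0, 0.0, 130.0, 0.0]
    for frame in pitch_stream(contour):
        print(frame)
```

Production front ends typically compute deltas with a regression window over several frames and use stronger smoothing. The central difference here only illustrates why the pitch stream needs a continuous contour before its derivatives are meaningful.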