The Complexities of Understanding Speech in Background Noise.


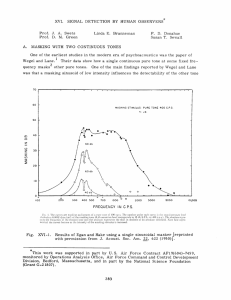

Stuart Rosen
UCL Speech, Hearing and Phonetic Sciences
First International Conference on Cognitive Hearing Science for Communication

A caveat about ‘cognition’
• Important aspects of this problem are not ‘cognitive’ but …
• Cognitive processing …
  – relies on adequate sensory representations, and …
  – can compensate for impoverished sensory representations.

Why is this interesting?
• Most speech is not heard in quiet, anechoic conditions.
• People vary a lot in how well they can understand speech in the presence of other sounds.
  – Effects of hearing impairment
  – Effects of age
  – Auditory processing disorder (APD)?

Some determinants of performance: I
• The nature of the target speech material
  – Predictability
    • context
    • number of alternative utterances
    • frequency of usage
    • size of lexical ‘neighbourhoods’

Some determinants of performance: II
• The configuration of the environment
  – Open air or in a room?
  – How ‘dry’ is a room?
    • effects of reverberation
  – spatial separation between target and noise
• or, the transmission system (e.g. mobile telephone)
  – distortion & noise added by the system

Some determinants of performance: III
• Talker characteristics
  – Different talkers vary considerably in intrinsic intelligibility
  – Talkers vary their own speech depending upon demands of the situation
    • hyper/hypo distinction of Lindblom (1990)
  – Match between talker and listener accents

Some determinants of performance: IV
• Listener characteristics
  – Linguistic development
    • vocabulary knowledge
    • ability to use context
    • the presence of language impairments
    • L1 vs L2
  – Hearing sensitivity and any hearing prosthesis used
  – Neuro-developmental disorders
    • Language impairment
    • Autism spectrum disorder
    • APD

Some determinants of performance: V
• The nature of the background noises
  – level (SNR)
  – fluctuations in level
  – spectral characteristics
  – genuine ‘noise’: aperiodic or periodic?
  – and/or other talkers
    • how many there are
    • speaking your own language or a language you don’t know
  – How ‘attention-grabbing’ the background noises are

The simplest case: A steady-state background noise
Much is understood about what makes one steady noise more or less interfering than another
• spectral shape
• SNR

‘Energetic’ masking
• Noises interfere with speech to the extent that they have energy in the same frequency regions
• Can be quantified in the ‘articulation index’
• Reflects direct interaction of masker and speech in the cochlea, which acts as a frequency analyser.

But noises are typically not steady …
Fluctuating maskers afford ‘glimpses’ of the target signal
[Figure: target, masker and the resulting glimpses]
• People with normal hearing can listen in the ‘dips’ of an amplitude-modulated masker

‘Dip listening’ or ‘glimpsing’
[Figure: SRT for VCVs in simple on/off fluctuations as a function of the duration of the fluctuation; axis direction marked ‘better performance’. Howard-Jones & Rosen (1993) Acustica]

‘Dips’ can be limited in frequency (‘checkerboard noise’)
[Figure: SRT for VCVs in 10 Hz modulations with different numbers of channels; axis direction marked ‘better performance’. Howard-Jones & Rosen (1993) JASA]

But maskers can be periodic too, most importantly when speech is in the background.
Miller (1947) ‘The masking of speech’:
It has been said that the best place to hide a leaf is in the forest, and presumably the best place to hide a voice is among other voices.

Listening to speech in ‘noise’
Children’s Coordinate Response Measure
Bouncy
• in quiet
• in steady noise
• in modulated noise
• against another talker

A useful distinction
• Energetic masking
  – maskers interfere with speech to the extent that they have energy in the same time/frequency regions
  – primarily reflecting direct interaction of masker and speech in the cochlea
  – relevance of glimpsing/dip listening
    • Temporal and/or spectral ‘dips’ in the masker allow ‘glimpses’ of target speech
• Informational masking
  – everything else!
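The notion of temporal ‘glimpses’ can be made concrete with a small numerical sketch. The Python below is illustrative only and is not taken from the talk. It assumes white noise as a stand-in for both the target speech and the masker, broadband 10 ms frames rather than a cochlea-like frequency analysis, and a hypothetical 0 dB local-SNR criterion for counting a frame as a glimpse. The function names `frame_energy_db` and `glimpse_proportion` are invented for this example.

```python
import numpy as np

def frame_energy_db(x, frame_len):
    """Mean power (dB) of consecutive, non-overlapping frames of x."""
    n_frames = len(x) // frame_len
    frames = x[: n_frames * frame_len].reshape(n_frames, frame_len)
    power = np.mean(frames ** 2, axis=1)
    return 10.0 * np.log10(power + 1e-12)  # small floor avoids log(0)

def glimpse_proportion(target, masker, frame_len, criterion_db=0.0):
    """Fraction of frames whose local SNR exceeds criterion_db (assumed criterion)."""
    local_snr = frame_energy_db(target, frame_len) - frame_energy_db(masker, frame_len)
    return float(np.mean(local_snr > criterion_db))

rng = np.random.default_rng(0)
fs = 16000                                   # sample rate in Hz (assumed)
n = fs                                       # one second of signal
target = 0.1 * rng.standard_normal(n)        # stand-in for speech
steady = 0.2 * rng.standard_normal(n)        # steady masker, about 6 dB above the target

# 10 Hz on/off gating: 50 ms on, 50 ms off
gate = (np.arange(n) // (fs // 20)) % 2 == 0
gated = steady * gate

frame_len = fs // 100                        # 10 ms frames
gp_steady = glimpse_proportion(target, steady, frame_len)
gp_gated = glimpse_proportion(target, gated, frame_len)
print(f"steady masker: {gp_steady:.2f}, gated masker: {gp_gated:.2f}")
```

With these stand-in signals the steady masker leaves essentially no frames above the criterion, while the gated masker exposes the target in roughly the half of the frames that fall in its silent intervals. A spectro-temporal version would apply the same comparison per frequency channel.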
Informational masking
• Something to do with target/masker similarity?
  – signal and masker ‘are both audible but the listener is unable to disentangle the elements of the target speech from a similar-sounding distracter’ (Brungart, 2001)

Informational masking: a finer distinction (Shinn-Cunningham, 2008)
• Problems in ‘object formation’
  – Related to auditory scene analysis
  – similarities in auditory properties make segregation difficult
    • voice pitch, timbre, rate
• Problems in ‘object selection’
  – Related to attention and distraction
  – the masker may distract attention from the target
    • e.g., more interference from a known as opposed to a foreign language
[Examples: 1 woman, 1 man; 2 men]

EM & IM appear to operate at different parts of the auditory pathway
• Energetic masking at the periphery, in the cochlea
  – Early developing abilities
  – Increased EM from hearing impairment
  – Unlikely to be a factor in APD
• Informational masking at higher centres
  – Late developing abilities?
  – Increased IM in older listeners?
  – Increased IM in developmental disorders?

But aspects of IM can be made difficult by peripheral factors
• e.g., CI users’ difficulties with auditory scene analysis

Little glimpsing for CI users
Nelson et al. (2003)
• speech-spectrum-shaped masking noise, square-wave modulated, added to IEEE sentences
[Figure: normal listeners and CI users; axis direction marked ‘better performance →’]
• not only poor frequency selectivity, but lack of sensation of voice pitch (poor perception of TFS) makes auditory scene analysis difficult: How do you tell the noise from the speech?
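A stimulus of the kind described above (a square-wave modulated noise added to sentences at a chosen SNR) could be assembled roughly as follows. This is a minimal sketch under stated assumptions, not the procedure of the cited study: white noise stands in for both the sentences and the speech-spectrum-shaped noise, the SNR is defined on the unmodulated masker before gating, and the 8 Hz gating rate and the function names `scale_to_snr` and `square_wave_gate` are hypothetical choices for illustration.

```python
import numpy as np

def rms(x):
    """Root-mean-square level of a signal."""
    return float(np.sqrt(np.mean(np.square(x))))

def scale_to_snr(target, masker, snr_db):
    """Scale masker so that 20*log10(rms(target)/rms(masker)) equals snr_db."""
    gain = rms(target) / (rms(masker) * 10.0 ** (snr_db / 20.0))
    return masker * gain

def square_wave_gate(n_samples, fs, rate_hz, duty=0.5):
    """On/off gate (1.0 or 0.0) at rate_hz with the given duty cycle."""
    phase = (np.arange(n_samples) / fs * rate_hz) % 1.0
    return (phase < duty).astype(float)

rng = np.random.default_rng(1)
fs = 16000                               # sample rate in Hz (assumed)
target = rng.standard_normal(fs)         # stand-in for a recorded sentence
noise = rng.standard_normal(fs)          # stand-in for speech-shaped noise

# SNR is set on the ungated masker here; other conventions are possible
masker = scale_to_snr(target, noise, snr_db=-6.0)
achieved_snr_db = 20.0 * np.log10(rms(target) / rms(masker))

gate = square_wave_gate(len(masker), fs, rate_hz=8.0)
mixture = target + masker * gate
```

Defining the SNR before gating keeps the masker level during its ‘on’ phases fixed across modulation rates, so any benefit at a given rate comes from the silent intervals rather than from a change in peak masker level.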
But IM can be excessive in the presence of normal hearing …

Children find it hard to ignore another talker
[Figure; axis direction marked ‘better performance’]

Slow development of abilities that minimise IM
[Figure; axis direction marked ‘better performance’]

Increased IM in Specific Language Impairment (SLI)
• 9 SLI & 10 TD children aged 6-10 years
• CCRM sentences
• MSc work of Csaba Redey-Nagy
[Figure labels: steady noise; ‘ed speech’; axis direction marked ‘better performance’]

Increased IM in some people with High Functioning Autism (HFA)
CCRM sentences in various backgrounds (PhD work of Katharine Mair)
[Figure groups: control, better HFA, worse HFA]
• evidence for a temporal processing deficit but …
• not the crucial factor in excessive masking for speech

Increased IM in some people with High Functioning Autism (HFA)
[Figure groups: control, better HFA, worse HFA]
• HFA poor performers (and younger children) are highly susceptible to informational masking …
• but what aspect? ASA? attention? linguistic aspects?

An ecologically valid test bed for evaluating the roles of EM and IM:
Speech in n-talker babble for n = 1, 2, 3, …, ∞ talkers

Miller (1947): Increasing the number of talkers in the masker
‘It is relatively easy for a listener to distinguish between two voices, but as the number of rival voices is increased the desired speech is lost in the general jabber.’
• target words from multiple males
• babble: equal numbers of m/f (1 VOICE is male)
[Figure: SNR (dB) axis with values +12, +6, 0, -6, -12, -18; axis direction marked ‘better performance →’]

IEEE sentences in n-talker babble
[Figure; axis direction marked ‘better performance →’]
• What happens as n increases?
  – glimpsing opportunities so EM
  – linguistic content so IM (selection?)
  – number of F0 contours so IM (ASA)

1-talker voice pitch source with envelopes derived from n-talker babble
• 1-talker, 2-talker and 16-talker babble-modulated 1-talker F0 (plus with an unmodulated envelope)

2-talker voice pitch source with envelopes derived from n-talker babble
• 1-talker, 2-talker and 16-talker babble-modulated 2-talker F0 (plus with an unmodulated envelope)

Unintelligible maskers on noise-vocoded IEEE sentences
[Figure labels: noise; 2 F0 contours; 1 F0 contour; axis direction marked ‘better performance’]
• Periodicity in the maskers leads to better performance, probably through better ASA
• It’s easier to ignore a single F0 contour than two
• but ... Why improved performance for steady-state vs 16-talker envelopes?
• Worse still, why glimpsing in noise?!

Final remarks
• The balance of EM & IM effects presumably varies with the age and hearing status of the listener
• The linguistic effects seen may represent a separate aspect of IM apart from object formation and selection.
• Unraveling the contributions of various factors in understanding the masking of speech by other sounds is very important …
  – But very complex!

Tack så mycket! (Thank you very much!)

Work supported by:
• UCL Speech, Hearing and Phonetic Sciences
• National Institutes of Health DC006014
• Bloedel Hearing Research Center

Thanks to my collaborators:
• Sophie Scott, Katharine Mair, Tim Green, Csaba Redey-Nagy, Jude Barwell, Zoe Lyall & Arooj Majeed of UCL
• Pam Souza, Northwestern U