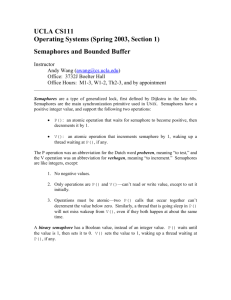

INDEX

1. Introduction
2. Critical Section Problem and Solution Techniques
3. Semaphore Implementation
4. Producer-Consumer Problem
5. Deadlock Handling
6. Concurrency Control in File Systems
7. Real-world Operating System Case Study
8. Conclusion
9. Bibliography

Synchronization Strategies in Operating Systems: A Comprehensive Exploration

1. Introduction

This assignment aims to explore and implement synchronisation strategies in operating systems. Synchronisation is vital in an operating system because it ensures that processes running at the same time are handled correctly, efficiently, and in a way that scales with the workload. We will explore different synchronisation mechanisms: mutual exclusion, semaphores, monitors, spinlocks, condition variables, reader-writer locks, and barrier synchronisation.

Without effective synchronisation, computers cannot perform concurrent operations reliably, which leads to errors, delays, wasted resources, and poor communication between user and system. In this assignment we address the following synchronisation challenges:

- Race conditions
- Deadlocks
- Starvation
- Priority inversion
- Efficiency
- Scalability

Addressing these challenges is essential for building robust, efficient and scalable software that can take advantage of modern architectures and handle a wide range of workloads. There is no magic recipe for proper synchronisation; it is a matter of carefully balancing benefits and drawbacks. Every situation presents a different scenario in which we must navigate these challenges to develop an efficient, robust, fast, and reliable software solution.

2. Critical Section Problem and Solution Techniques

The critical section problem arises when multiple processes want to use the same resources at the same time.
The role of the operating system is to ensure that only one of them gets the resource at a time, while also making sure that every process gets its turn to access the resource and finish its task without interference. The critical section is the part of the program where the shared resource is accessed; if it is not handled properly, the result may be inconsistent or corrupted data.

The solution techniques for this problem are the following:

a. Semaphores

1) Binary semaphores: These behave much like mutexes and are used for mutual exclusion. As the name suggests, a semaphore acts like a signal (pass or no pass); a binary semaphore has two states, locked and unlocked.

2) Counting semaphores: The main difference from binary semaphores is that a counting semaphore maintains a count, which is incremented when a process releases the resource and decremented when a process acquires it.

b. Locks

1) Spinlocks: With this technique a process repeatedly checks the lock until it becomes available. It can be compared to a key being tried in a lock again and again until it opens.

2) Mutexes: Short for mutual exclusion locks. These are similar to binary semaphores. If the lock is closed, the resource cannot be accessed. A mutex has two states: locked and unlocked.

c. Monitors

A monitor is a construct that encapsulates a shared resource together with the procedures that provide access to it. Only one process at a time can be active inside the monitor, and the resource can be reached only through the monitor's procedures. Monitors are a higher-level construct than primitive synchronisation mechanisms such as semaphores.

d. Condition variables

These allow processes to wait for a resource until a certain condition becomes true.

Any solution to the critical section problem must satisfy the following requirements, each of which brings its own challenges:

a. Mutual exclusion

Mutual exclusion means that while one process is using a resource, the resource is not available to any other process until the first one finishes. This can be achieved with semaphores and locks, but overusing them can cause bottlenecks and hurt performance.

b. Progress

Progress means that if no process is executing in its critical section and other processes want to enter theirs, then only the processes that are not in their remainder section can take part in deciding which one enters next, and that decision cannot be postponed indefinitely.

c. Bounded waiting

Bounded waiting means that once a process has requested access to its critical section, there is a limit on the number of times other processes may enter their critical sections before the request is granted.

3. Semaphore Implementation

Implementing semaphores requires first defining the data structures and operations that manage the state of the semaphores, and then using them to control access to shared resources. Semaphores are very versatile synchronisation primitives and can be used in many different applications, such as producer-consumer problems, resource pooling, the reader-writer problem, concurrency control in database systems, thread pool management, barrier synchronisation, and task synchronisation.

Below we walk through a semaphore implementation in which multiple processes access a shared resource, using a simple example that can be recreated in most programming environments. We have an empty list called “buffer” and two classes. One, called Producing, continuously creates random numbers from 1 to 100 and adds them to the buffer. The other, called Eating, picks one number from the buffer and prints it on the screen. The problem is that both classes need access to the buffer list in order to write and read the data.

How do semaphores enforce synchronisation in this scenario? We use three semaphores: “mutex” for mutual exclusion, “empty” to track empty slots in the buffer, and “full” to track filled slots in the buffer. The Producing class first acquires the “empty” semaphore, which makes it wait until the buffer has an empty slot. It then acquires the “mutex” semaphore, which grants it safe access to the list. Once an item has been added, it releases the “mutex” semaphore and signals the “full” semaphore.
The Eating class acquires the “full” semaphore to wait for a filled slot, and then the “mutex” semaphore to access the buffer safely. Once an item has been consumed, it releases the “mutex” and then signals the “empty” semaphore. As this example shows, semaphores manage access to the buffer list and allow the two classes to operate smoothly.

4. Producer-Consumer Problem

We now look in more detail at the producer-consumer problem, which is very common in programming. As in the previous example, the problem involves two processes, one that produces and one that consumes, and both use the same buffer. The problem is how to prevent both processes from using the buffer at the same time, taking into account that producers should not produce data when the buffer is full, consumers should not consume data from an empty buffer, and producers and consumers should access the buffer safely.

a. Implementation of a solution using synchronization mechanisms

To tackle this problem we can use synchronisation mechanisms such as semaphores, mutexes, and condition variables.

- Using semaphores: We need two semaphores, one to track empty slots and another to track full slots. Producers decrement the “empty” semaphore before inserting an item and increment the “full” semaphore right after. Consumers decrement the “full” semaphore, consume an item, and increment the “empty” semaphore.
- Using mutexes: A mutex provides mutual exclusion. Producers and consumers acquire the mutex before accessing the buffer and release it afterwards. While holding the mutex, a producer can insert an item or a consumer can remove one. This ensures that only one of them accesses the buffer at a time.
- Using condition variables: Condition variables are used to wait for and signal changes in state. Producers signal the condition when they insert an item, telling a waiting consumer that data is available. Consumers, in turn, signal when they remove an item, so that a waiting producer knows a slot is free.

b. Explanation of how proper data sharing and synchronization between producers and consumers are ensured.
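
As one illustration, the three-semaphore scheme described above (“empty”, “full” and “mutex”) might look roughly like the following Python sketch. It is only a minimal sketch under stated assumptions: the names `run_demo`, `BUFFER_SIZE` and `ITEM_COUNT` are hypothetical, the buffer capacity and item count are arbitrary, and sequential integers replace the random numbers so that the result is easy to check.

```python
import threading
from collections import deque

BUFFER_SIZE = 5   # assumed capacity; the text does not fix one
ITEM_COUNT = 20   # assumed number of items, so that the demo terminates


def run_demo(buffer_size=BUFFER_SIZE, item_count=ITEM_COUNT):
    """Run one producer and one consumer; return (consumed items, peak buffer length)."""
    buffer = deque()
    mutex = threading.Semaphore(1)            # binary semaphore guarding the buffer
    empty = threading.Semaphore(buffer_size)  # counts empty slots
    full = threading.Semaphore(0)             # counts filled slots
    consumed = []
    peak = [0]                                # largest buffer length observed

    def producer():
        for item in range(item_count):
            empty.acquire()                   # wait for an empty slot
            with mutex:                       # enter the critical section
                buffer.append(item)
                peak[0] = max(peak[0], len(buffer))
            full.release()                    # signal that a slot is now filled

    def consumer():
        for _ in range(item_count):
            full.acquire()                    # wait for a filled slot
            with mutex:                       # enter the critical section
                item = buffer.popleft()
            empty.release()                   # signal that a slot is now empty
            consumed.append(item)

    threads = [threading.Thread(target=producer),
               threading.Thread(target=consumer)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return consumed, peak[0]


if __name__ == "__main__":
    items, peak_len = run_demo()
    print("consumed:", items)
    print("peak buffer length:", peak_len)
```

With a single producer and a single consumer, items leave the buffer in the order they entered it, and the “empty” semaphore keeps the buffer from growing beyond its assumed capacity.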
Proper data sharing and synchronisation are ensured by the following:

- Shared buffer: Both actors share the same buffer.
- Mutual exclusion: Only one actor is able to use the buffer at a time.
- Synchronisation: Control passes between the actors in an orderly and efficient way.
- Empty and full buffer conditions: These conditions track the state of the buffer, so that producers wait when it is full and consumers wait when it is empty.
- Proper waiting and signalling: Actors wait for the proper signal before proceeding.

5. Deadlock Handling

Deadlock is a serious issue in programming that happens when two or more processes each hold a resource while waiting for another to release one. This causes the program to stand still. Deadlock handling mechanisms are designed to detect, prevent and recover from deadlock situations.

a. Deadlock prevention.

- Resource ordering: Defines a fixed order in which resources must be acquired.
- Resource allocation graph: Allocation graphs are used to detect potential circular wait conditions so that they can be avoided.
- Timeouts: Set a fixed amount of time for a wait, so that processes do not wait forever.

b. Deadlock detection and recovery.

- Resource allocation graph: An allocation graph is used to detect cycles. If a cycle is detected, the deadlock detection algorithm identifies the processes involved and proceeds with recovery actions.
- Deadlock detection algorithm: Algorithms such as wait-for graph analysis can be used to detect deadlocks. The Banker's algorithm is often mentioned alongside them, although strictly speaking it is an avoidance algorithm.
- Process termination: The system can terminate the processes involved and restart them.
- Resource pre-emption: If a deadlock is detected, the system may take resources away from one or more processes to break the deadlock.

c. Avoidance and Prevention Strategies.

- Avoidance algorithms: These algorithms analyse resource requests and grant them only when enough resources remain available to satisfy the declared requirements.
- Prevention policies: Guidelines or policies can help to avoid or prevent deadlocks from happening.
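
The resource ordering idea listed under deadlock prevention can be illustrated with a short Python sketch. This is a minimal sketch, not a definitive implementation: the helper names (`acquire_in_order`, `release_all`, `run_demo`) are hypothetical, and ordering locks by `id()` is just one assumed way to define a global order. The two threads ask for the same two locks in opposite orders, which could deadlock if each simply took the locks in the order it names them.

```python
import threading

lock_a = threading.Lock()
lock_b = threading.Lock()


def acquire_in_order(*locks):
    """Acquire locks in one global order (assumed here: by id()) and return them."""
    ordered = sorted(locks, key=id)
    for lock in ordered:
        lock.acquire()
    return ordered


def release_all(ordered):
    """Release the locks in the reverse of the order in which they were acquired."""
    for lock in reversed(ordered):
        lock.release()


def run_demo(rounds=1000):
    """Two threads request the same locks in opposite orders without deadlocking."""
    log = []

    def worker(first, second, name):
        for _ in range(rounds):
            held = acquire_in_order(first, second)  # request order is ignored
            try:
                log.append(name)                    # work done while holding both locks
            finally:
                release_all(held)

    t1 = threading.Thread(target=worker, args=(lock_a, lock_b, "t1"))
    t2 = threading.Thread(target=worker, args=(lock_b, lock_a, "t2"))
    t1.start()
    t2.start()
    t1.join()
    t2.join()
    return log


if __name__ == "__main__":
    print(len(run_demo()), "critical sections completed")
```

Because every thread takes the locks in the same global order, no thread can hold one lock while waiting for a lock that comes earlier in that order, so a circular wait cannot form.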
Examples of situations leading to deadlock

- Resource allocation
- Nested locking
- Circular waiting
- Multiple resource requests
- Deadly embrace
- Locking with interrupts

Demonstration of how each mechanism helps prevent or recover from deadlock.

- Resource allocation: By enforcing a pre-defined order for acquiring resources.
- Nested locking: By implementing a locking hierarchy.
- Circular waiting: By terminating the processes involved in the circular wait.
- Multiple resource requests: If a process cannot get all the resources it requires, it releases some resources and tries again later.
- Deadly embrace: When a deadlock is detected, the processes involved can be terminated or rolled back to a previous state.
- Locking with interrupts: Processes can be terminated or rolled back to a previous state.

6. Concurrency Control in File Systems

Concurrency control is the mechanism used to manage simultaneous access to files by multiple processes. File systems must ensure data integrity and consistency. Concurrency control is achieved by the following mechanisms:

a. File locking

File locking locks a file so that it cannot be used by other processes while one process is using it.

b. Read-write locks

Also known as shared-exclusive locks, these allow multiple processes to read from a file at the same time, but only one to write at a time.

c. File system transactions

When dealing with files, multiple operations can be grouped into one transaction. This allows several actions to be carried out as a single unit.

d. File system journaling

The file system keeps a journal of changes, so that it can be restored to a consistent state after a crash.

e. File system locking protocols

Locking protocols are rules for acquiring and releasing locks on files. Their purpose is to ensure mutual exclusion.

Examples and scenarios illustrating the importance of concurrency control:

a. Multiple users editing the same file

On a shared network drive, multiple users may try to save a file at the same time. When this happens, there must be rules that determine which user saves the file first and who is next.

b. Database transactions

Database transactions require high levels of concurrency control in order to maintain their ACID properties (Atomicity, Consistency, Isolation and Durability).

c. Web server requests

A web server receives many requests at the same time.

d. Cloud storage systems

Like a web server, a cloud storage system is used by many users at once, which is why concurrency control is so important.

e. Operating system file access

An operating system constantly manages multiple processes running at the same time.

f. Version control systems

Version control systems such as Git make resources available to many developers at the same time.

7. Real-world Operating System Case Study

macOS, developed by Apple Inc., is a Unix-based operating system known for its intuitive user interface, security features, and seamless integration with Apple's ecosystem of devices and services. Launched in 2001 as Mac OS X and later rebranded as macOS, the operating system powers Apple's line of desktop and laptop computers. macOS offers a sleek and visually appealing user experience.

macOS, like other modern operating systems, employs various mechanisms to handle process synchronization challenges, so that concurrent processes can share resources and coordinate their actions without race conditions, deadlocks, or data corruption.

How macOS handles process synchronization challenges:

a. Thread synchronisation primitives

macOS provides many of the synchronisation primitives discussed in this assignment, including mutexes, condition variables, semaphores, and barriers. These primitives can be used to protect critical sections of code, coordinate thread execution, and manage access to shared resources. They ensure that only one thread can access a critical section at a time and that threads wait appropriately for resources to become available.

b. Grand Central Dispatch (GCD)

GCD is a concurrency framework provided by macOS.
GCD automatically manages thread creation, scheduling, and synchronization, optimizing resource utilization and improving performance. GCD provides dispatch queues, which start tasks in first-in-first-out order and execute them either serially or concurrently.

c. Locking mechanisms

macOS supports various locking mechanisms, such as POSIX locks, file locks (fcntl), and atomic operations, which can be used to protect shared resources and prevent race conditions.

d. Inter-Process Communication (IPC)

macOS provides several IPC mechanisms, such as Mach ports, sockets, and shared memory, which allow processes to communicate and synchronize their actions.

e. Kernel synchronisation mechanisms

The macOS kernel implements various synchronization mechanisms to manage access to kernel data structures and resources. Kernel locks and spinlocks are among the primitives used to protect sections of the kernel code.

8. Conclusion

Summary of key findings and insights from the exploration of synchronization strategies:

- The critical section problem shows the need to synchronise access to shared resources among multiple processes in order to avoid race conditions.
- Semaphores are a very flexible mechanism for coordinating access to resources.
- One of the most important challenges is balancing performance and concurrency according to the application at hand.
- File systems have their own mechanisms to control and manage concurrent access while maintaining data consistency.
- Concurrency control is important in diverse scenarios such as web servers, cloud storage, and operating systems.

The concepts discussed here offer important insights into the challenges and implications of concurrent programming. Understanding them is crucial for developers, system architects, and software engineers who design and implement multi-threaded and multi-process applications.
The use of appropriate synchronization mechanisms and strategies, such as mutexes, semaphores, and locking protocols, can mitigate the risk of race conditions, deadlocks, and data corruption, supporting reliability, performance, and scalability in systems.

9. Bibliography

- A. McIver McHoes, I. M. Flynn, Understanding Operating Systems, 8th Edition, Course Technology ISE, 2017
- A. Silberschatz, P. B. Galvin, G. Gagne, Operating System Concepts, 10th Edition, John Wiley & Sons Inc, 2021
- W. Stallings, Operating Systems, 9th Edition, Pearson Education Limited, 2017