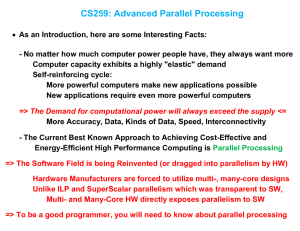

Parallelism Non-negotiable Martin Krastev, ChaosGroup Concurrency & Parallelism While we will mostly refer to parallelism throughout this talk, concurrency is the underlying concept that enables parallelism; there are different schools of thought on the subject of concurrency and parallelism, but here we adopt the notion that: ● ● Concurrency is the mere absence of sequentiality; we have concurrency when some of the steps of an algorithm can happen out of order without changing the final outcome. Example: A=B*C+D*F Parallelism is the simultaneous occurrence of such steps. Example: A = sum( B * C time D*F) So, about parallel computing ● The advent of multiprogramming AKA multi-tasking ○ ● Para-virtualized, time-shared multi-tasking -- emerging in 1960s, prominent across mainframes by 1970s (IBM System/360) Single-task parallelism ○ Started with Ridiculously Computationally-Heavy™ tasks which had no alternative but to get well understood and parallelised somehow; often those turned out to be Embarrassingly Parallel™. Such super-tasks soon became delegated to super-computers -- purpose-built parallel machines appearing in the early 1970’s (https://en.wikipedia.org/wiki/Vector_processor) ..Well Tasks we throw at computers are getting ever heavier, but sequential computing is not getting much faster anymore. Yes, really No, seriously Help us, parallelism You’re our only hope First thing first -- taxonomy Established types of parallelism: ● ● ● Instruction-level parallelism (pipelining; super-scalarity, VLIW) Data-level prallalism (SIMD/SIMT/SPMD) Task-level parallelism (multi-threading, multi-tasking, distributed comp.) Covert types: ● Memory-level parallelism Parallel Peformance Models Merely knowing that better parallelization would increase performance is not sufficient; we want prediction models that can set our expectations and, thus, drive our effort. ● ● ● Amdahl’s Law (https://en.wikipedia.org/wiki/Amdahl%27s_law) Gustafson-Barsis’s Law (https://en.wikipedia.org/wiki/Gustafson%27s_law) Roofline model (https://en.wikipedia.org/wiki/Roofline_model) Amdahl’s Law By Daniels220 at English Wikipedia, CC BY-SA 3.0, https://commons.wikimedia.org/w/index.php?curid=6678551 Gustafson-Barsis’s Law Let’s fix the task time and star throwing more and more work and processors at the parallel portion of our task: productivity = (1 - x) + processors * x, x : parallel portion, plot axis in % y : processors, from 1 to ∞ The larger the parallel portion of our taks, the sooner we get unbound linear scaling of productivity! The moral of the story? (for the gamers among us) For a given framerate, we can increase the resolution to infinity! -- Gustafson-Barsis YET For a given resolution, we cannot increase the framerate to infinity. -- Gene Amdahl (sorry, Doom players) Roofline Model By Giu.natale - Own work, CC BY-SA 4.0, https://commons.wikimedia.org/w/index.php?curid=49641351 Instruction-level parallelism Lowest hanging fruit -- we feed it sequentialisms, it produces parallelism for us! So what’s wrong with that? - We already ate it. By early 2000’s pipeline and super-scalarity efficiency gains plateaued. Any advances in that direction since then have been minor. We’ve hit a hardware wall there. Instruction-level parallelism Measured in IPC (Instructions Per Clock; or its reciprocal CPI), but.. ● ● ● Dominated by Data Locality (duh!) Even with good Data Locality, compiler (writers) can fail at reading the fine print of CPU μarch, and kill IPC when we least expect it IPC is not always the decisive factor of single-core performance: execution_time = executed_instuctions / (IPC * clock_rate) VLIW -- a cheaper approach to super-scalarity. Perhaps too cheap? Instruction-level parallelism Simultaneous Multi-Threading (SMT), AKA Hyperthreading ● ● ● ● ● ● Another cheap way to improve ILP in super-scalar cores. Multiple contexts; instructions are fetched from multiple (2, 4, 8) streams. Improves opportunities for instruction scheduling. Requires more than one thread to function (duh!) Can give throughput improvements from 10’s of % to 0%. Can be detrimental to latency-critical single-tread tasks! : ( Data-level parallelism Arrays AKA Where there’s one, there are many. “The three four-letter horsemen acronyms of DLP” ● ● ● SIMD (Single-instruction, Multiple-data) SIMT (Single-instruction, Multiple-threads) SPMD (Single-program, Multiple-data) Data-level parallelism: SIMD S = MUL(B, C) B0 T = MUL(E, F) B1 C0 B2 C1 B3 C2 E0 C3 E1 F0 E2 F1 E3 F2 U = ADD(S, T) ... S0 S1 S2 S3 U0 T0 U1 U2 U3 T1 T2 T3 F3 Data-level parallelism: SIMD ● ● ● ● Same work, fewer instructions (remember execution_time from ILP?) Found in virtually all modern CPU ISAs (but not standardized) Packed memory accesses: AoS -> SoA -> AoSoA; gather-scatter Compilers can extract DLP from loops, and generate SIMD for us; your mileage will vary. Data-level parallelism: SIMT S = MUL(B, C) B7 T = MUL(E, F) B1 C7 B0 C1 B3 C0 C3 U = ADD(S, T) ... S7 U7 S1 S0 S3 ... ... ... ... T7 T1 T0 T3 U1 U0 U3 Data-level parallelism: SIMT ● ● The GPU evolution of SIMD -- same kernel executed in lock-step within SIMD blocks (warps), multiplied across many warps, keeping in-flight thousands of threads; warp treads issue independent memory addresses (but strongly prefer coalescing) Many threads generating many memory accesses enables Memory-level parallelism (more on that in a sec) Data-level parallelism: SPMD S = MUL(B, C) B1 B7 T = MUL(E, F) B3 B0 C1 C7 C3 C0 U = ADD(S, T) ... S1 S7 S3 S0 ... ... ... T1 T7 ... T3 T0 U7 U1 U3 U0 Data-level parallelism: SPMD ● ● The CPU “answer” to SIMT -- same kernel executed in parallel (not in lock-step) on tens of cores, and (perhaps) in lock-step on the individual lanes of (ever-wider) SIMD units Same kernel executed on GPU and CPU does SIMT on GPUs and SPMD on CPUs. Memory-level parallelism Postulate: in a memory-latency-bound scenario, the more memory requests we can generate ahead of time, the higher our efficiency is. Reality check: we’re standing before a Memory Wall (that Data Locality..) ● ● ● MLP originally devised as a clever way for ILP to by-pass the Memory Wall; but a real breakthrough in MLP requires orders-of-magnitude higher speculative-execution rates than current OOO designs : / Hey, SIMT provides orders-of-magnitude more memory request w/o speculation! There the sky bandwidth is the limit! Ergo SPMD cannot exploit MLP to the extent SIMT can Thread/Task-level parallelism Simplistic view: business as usual with some synchronisation, right? Right.. Memory Consistency, Sequential Consistency and Cache Coherence, or How to Avoid Creating Diverging Views of the Same Universe™ ● ● ● The (invisible) foundation: cache-coherence hardware protocols Atomics & fences Transactional memory ..And then consider UMA vs NUMA vs distributed computing. Cache-coherence hw protocols State-of-data state machines: ● ● ● MSI (Modified/Shared/Invalid) MESI (Modified/Exclusive/Shared/Invalid) MOESI (Modified/Owned/Exclusive/Invalid) ..To name a few. Concurrency control models ● Pessimistic: locks -> dealocks : ( Demand the world acknowledges our state before we move on. ● Optimistic: lockless -> livelocks : / Optimists often get more work done than pessimists. ‘Залудо работѝ, залудо не стой!’ Atomics When single-threaded, our code is sequentially-consistent both in value computations and in side effects. This is not the case when the effects of our code are observed from other threads. Ergo, this may not be the case even when the results of our code are observed from its own thread when running multi-threaded! The reason for that is that shared resources (among threads) are not treated the same way by the underlying coherence protocols, as they are by C-like programming languages under normal conditions. We need special semantics for shared resources. Enter atomics. Atomics Atomic types provide atomic read-modify-writes over fundamental data types, e.g. arithmetics and compare-set/compare-exchange over integrals an pointers. They also provide guarantees for the visibility of the effect of the op to other threads. According to the C++ 2011 memory-order model, atomics order can be: ● ● ● ● ● ● Relaxed Consume Acquire Release Acquire-release Sequentially-consistent Fences, AKA Barriers On an implementation level, atomics often rely on fences. But memory fences can be used on their own as synchronisation primitives. Fences guarantee sequential consistency for the mem accesses, subject to the fence, in accordance with those being ‘before’ or ‘after’ the fence point; the guarantee holds for the issuing thread, but more importantly, for any external observers (e.g. other threads). Fences can be: ● ● ● Load/Read -- affects the loads from memory Store/Write -- affects the stores to memory Combined -- affects all memory accesses, load and stores alike Transactional memory A typically optimistic model -- thread requests an atomic memory transaction (marks begin/end), which may or may not succeed. ● ● First hw implementations in mainframe/server chips, but tech gradually makes its way to the desktop. Intel first introduced it in Haswell (TSX extension) -- eventually discovered a bug in their implementation and disabled the feature. Re-introduced in later Broadwell models; originally-affected models never recovered. Let’s wrap it up for today (take-aways) ● ● ● ● ● If your task is suitable for SIMT, by God and country, write it for SIMT! If not, then still given DLP, SPMD might be your best bet; make use of SIMD! When sharing resources, optimistic concurrency control may be generally harder to implement than pessimistic, but is often more optimal. Don’t leave ILP optimisations for last -- ILP issues could be the tip of data locality problems -- use profilers thoughout the dev cycle of your project. Don’t blindly trust the compiler to provide the best ILP for you -- get familiar with the architecture/μarch, so you can get the most out of the compiler. References [0] Karl Rupp, 40 Years of Microprocessor Trend Data https://www.karlrupp.net/2015/06/40-years-of-microprocessor-trend-data/ [1] William Dally, Efficiency and Parallelism: The Challenges of Future Computing https://www.youtube.com/watch?v=l1ImS3gbg08 [2] Andrew Glew, MLP yes! ILP no! https://people.eecs.berkeley.edu/~kubitron/asplos98/abstracts/andrew_glew.pdf [3] L. Ceze, J. Tuck and J. Torrellas Are We Ready for High Memory-Level Parallelism? http://iacoma.cs.uiuc.edu/iacoma-papers/wmpi06.pdf [4] Herb Sutter, Strong and Weak Hardware Memory Models https://herbsutter.com/2012/08/02/strong-and-weak-hardware-memory-models/ [5] Peter Sewell, C/C++11 mappings to processors https://www.cl.cam.ac.uk/~pes20/cpp/cpp0xmappings.html [6] Jeff Preshing, Acquire and Release Semantics http://preshing.com/20120913/acquire-and-release-semantics/ [7] Jeff Preshing, The Purpose of memory_order_consume in C++11 http://preshing.com/20140709/the-purpose-of-memory_order_consume-in-cpp11/