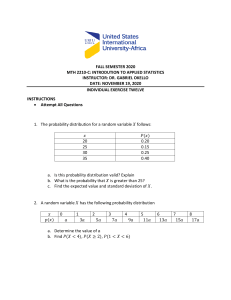

Chapter 4. One Random Variable

H. F. Francis Lu

2020 Probability Theory: Chapter 4

Ver. 2020.04.13


Outline

4.1 The Cumulative Distribution Function

4.2 The Probability Density Function

4.3 The Expected Value of X

4.4 Important Continuous Random Variables

4.5 Functions of a Random Variable

4.6 The Markov and Chebyshev Inequalities

4.7 Transform Methods


In this chapter we study continuous random variables.

Example 1. Consider an experiment whose output is a real value taken randomly and equally likely from [0, 1).

1. What is the probability that the output is ≤ 0.3?

   Ans: the probability is 0.3

2. What is the probability that the output is ≥ 0.4?

   Ans: the probability is 0.6

3. What is the probability that the output is between 0.3 and 0.4?

   Ans: the probability is 0.1

4. What is the probability that the output equals 0.5?

   Ans: the probability is 0


4.1 The Cumulative Distribution Function

The cumulative distribution function (CDF) of a random variable

X is defined as the probability of the event {X ≤ x}

FX (x) = P[X ≤ x]

for −∞ < x < ∞

The axioms of probability and their corollaries imply that the CDF

has the following properties:

1. $0 \le F_X(x) \le 1$

2. $\lim_{x \to \infty} F_X(x) = 1$

3. $\lim_{x \to -\infty} F_X(x) = 0$

4. $F_X(x)$ is a nondecreasing function of $x$: if $a < b$ then $F_X(a) \le F_X(b)$


5. $F_X(x)$ is continuous from the right: for $h > 0$, $F_X(b) = \lim_{h \to 0} F_X(b + h) = F_X(b^+)$

[Figure: a left-continuous step compared with a right-continuous step]

See Example 2


6. The probability of events that correspond to intervals of the form $\{a < X \le b\}$ can be expressed in terms of the CDF:

$$P[\{a < X \le b\}] = F_X(b) - F_X(a)$$

Proof:

Since

{X ≤ a} ∪ {a < X ≤ b} = {X ≤ b}

and since the two events on the left-hand side are mutually

exclusive, we have by Axiom III that

FX (a) + P[{a < X ≤ b}] = FX (b)


7. To compute the probability of the event $\{X = b\}$, let $\epsilon > 0$:

$$P[\{b - \epsilon < X \le b\}] = F_X(b) - F_X(b - \epsilon)$$

Then as $\epsilon \to 0^+$ we have

$$P[X = b] = F_X(b) - F_X(b^-)$$

which is the magnitude of the jump of the CDF at point $b$.

It follows that if the CDF is continuous at point b, then the

event {X = b} has probability zero.

See Example 1, part 4.


To compute the probabilities of other types of intervals

{a ≤ X ≤ b} = {X = a} ∪ {a < X ≤ b}

we have

P[a ≤ X ≤ b]

= P[X = a] + P[a < X ≤ b]

= FX (a) − FX (a− ) + FX (b) − FX (a)

= FX (b) − FX (a− )


If the CDF is continuous at the points x = a and x = b, then the following probabilities are equal:

P[a < X < b], P[a ≤ X ≤ b], P[a < X ≤ b], P[a ≤ X < b]

since if the CDF is continuous at the endpoints of an interval, then

the endpoints have zero probability.

8. The probability of the event {X > x} is

P[X > x] = 1 − FX (x)


Example 2. Suppose that a coin is tossed three times and the

sequence of heads and tails is noted. The sample space for this

experiment is

S = {HHH, HHT , HTH, HTT , THH, THT , TTH, TTT }.

Now let X be the number of heads in three coin tosses. X assigns

each outcome ζ ∈ S a number from the set SX = {0, 1, 2, 3}.

The table below lists the eight outcomes of S and the

corresponding values of X .

| ζ | HHH | HHT | HTH | THH | HTT | THT | TTH | TTT |
|---|---|---|---|---|---|---|---|---|
| X(ζ) | 3 | 2 | 2 | 2 | 1 | 1 | 1 | 0 |

X is then a random variable taking on values in the set SX .


We know that X takes on only the values 0, 1, 2, and 3 with

probabilities 1/8, 3/8, 3/8, 1/8, respectively, so FX (x) is simply the

sum of the probabilities of the outcomes from {0, 1, 2, 3} that are

less than or equal to x.

The resulting CDF has discontinuities at the points 0, 1, 2, 3.

Consider the CDF in the vicinity of the point x = 1. For δ a small

positive number, we have

$$F_X(1 - \delta) = P[X \le 1 - \delta] = P[0 \text{ heads}] = \frac{1}{8}$$

However

$$F_X(1) = P[X \le 1] = P[0 \text{ or } 1 \text{ heads}] = \frac{1}{8} + \frac{3}{8} = \frac{1}{2}$$

also

$$F_X(1 + \delta) = P[X \le 1 + \delta] = P[0 \text{ or } 1 \text{ heads}] = \frac{1}{2}$$

The CDF can be written compactly in terms of the unit step function:

$$U(x) = \begin{cases} 0, & x < 0 \\ 1, & x \ge 0 \end{cases}$$

Then

$$F_X(x) = \frac{1}{8} U(x) + \frac{3}{8} U(x - 1) + \frac{3}{8} U(x - 2) + \frac{1}{8} U(x - 3)$$


The binomial random variable with n = 3, p = 1/2 has the following CDF.

[Figure: staircase CDF $F_X(x)$ versus $x$, taking the values 1/8, 1/2, 7/8, and 1, with jumps at x = 0, 1, 2, 3]

Example 3. Let X be the number of heads in three tosses of a

fair coin. Use the CDF to find the probability of events

A = {1 < X ≤ 2}, B = {0.5 ≤ X < 2.5} and C = {1 ≤ X < 2}

Sol.

$$P[1 < X \le 2] = F_X(2) - F_X(1) = \frac{7}{8} - \frac{1}{2} = \frac{3}{8}$$

$$P[0.5 \le X < 2.5] = F_X(2.5^-) - F_X(0.5^-) = \frac{7}{8} - \frac{1}{8} = \frac{6}{8}$$

$$P[1 \le X < 2] = F_X(2^-) - F_X(1^-) = \frac{1}{2} - \frac{1}{8} = \frac{3}{8}$$
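The interval probabilities of Example 3 can be checked mechanically. The sketch below is only an illustration: the helper names `cdf` and `cdf_left` are made up here, and exact fractions are used so that the results can be compared with the hand computation.

```python
from fractions import Fraction

# PMF of X = number of heads in three fair tosses (Example 2).
PMF = {0: Fraction(1, 8), 1: Fraction(3, 8), 2: Fraction(3, 8), 3: Fraction(1, 8)}

def cdf(x):
    """F_X(x) = P[X <= x]: sum of the jumps located at or below x."""
    return sum(p for k, p in PMF.items() if k <= x)

def cdf_left(x):
    """Left limit F_X(x^-) = P[X < x]: sum of the jumps strictly below x."""
    return sum(p for k, p in PMF.items() if k < x)

prob_A = cdf(2) - cdf(1)                # P[1 < X <= 2]
prob_B = cdf_left(2.5) - cdf_left(0.5)  # P[0.5 <= X < 2.5]
prob_C = cdf_left(2) - cdf_left(1)      # P[1 <= X < 2]
print(prob_A, prob_B, prob_C)
```

Whether an endpoint is included decides whether `cdf` or `cdf_left` is used at that endpoint, which is exactly the distinction between $F_X(b)$ and $F_X(b^-)$ at a jump.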

Example 4. Let X be a continuous random variable taking

values from [a, b] ⊂ R equally likely. The CDF FX (x) is given by

$$F_X(x) = \begin{cases} 0, & \text{if } x < a \\ \frac{x - a}{b - a}, & \text{if } x \in [a, b) \\ 1, & \text{if } x \ge b \end{cases}$$

Three Types of Random Variables

1. A discrete random variable $X$ is defined as a random variable whose CDF $F_X(x)$ is a right-continuous staircase function of $x$ with jumps at elements in a countable set $S_X = \{x_1, x_2, \ldots\}$:

$$F_X(x) = \sum_{x_k \le x} p_X(x_k) = \sum_{x_k \in S_X} p_X(x_k) U(x - x_k)$$

where $p_X(x_k) = P[X = x_k]$ gives the magnitude of the jump at point $x = x_k$ in the CDF.


2. A continuous random variable is defined as a random variable whose CDF $F_X(x)$ is an integral of some nonnegative function $f(x)$,

$$F_X(x) = \int_{-\infty}^{x} f(\omega)\,d\omega$$

that is continuous everywhere and sufficiently smooth, implying $P[X = x] = 0$ for all $x$.

3. A random variable of mixed type is a random variable with a CDF that has jumps on a countable set of points $x_1, x_2, \ldots$ but that also increases continuously over at least one interval of values of $x$.


4.2 The Probability Density Function


The probability density function (PDF) of $X$, if it exists, is defined as the derivative of $F_X(x)$:

$$f_X(x) = \frac{d}{dx} F_X(x)$$

The PDF represents the "density" of probability at the point $x$ in the following sense: the probability that $X$ is in the vicinity of $x$, i.e. $\{x < X \le x + dx\}$, is

$$P[x < X \le x + dx] = F_X(x + dx) - F_X(x) = \frac{F_X(x + dx) - F_X(x)}{dx} \cdot dx$$

If the CDF has a derivative at $x$, then as $dx \to 0^+$

$$P[x < X \le x + dx] \approx f_X(x) \cdot dx$$


[Figure 4.4(a): PDF $f_X(x)$ versus $x$; the area of the strip between $x$ and $x + dx$ is $P[x < X \le x + dx] \approx f_X(x)\,dx$]

Properties of PDF fX (x)

1. The derivative of the CDF, when it exists, is nonnegative since the CDF is a nondecreasing function of $x$:

$$0 \le f_X(x) < \infty$$

2. The CDF of $X$ can be obtained by integrating the PDF:

$$F_X(x) = \int_{-\infty}^{x} f_X(\omega)\,d\omega$$

3. The probability of an interval is the area under $f_X(x)$ in that interval:

$$P[a \le X \le b] = F_X(b) - F_X(a) = \int_a^b f_X(\omega)\,d\omega$$

[Figure 4.4(b): PDF $f_X(x)$ versus $x$; the area under the curve between $a$ and $b$ is $P[a \le X \le b] = \int_a^b f_X(x)\,dx$]

4. $\int_{-\infty}^{\infty} f_X(x)\,dx = 1$

It follows that a valid PDF can be formed from any nonnegative, piecewise continuous function $g(x)$ that has a finite integral:

$$\int_{-\infty}^{\infty} g(x)\,dx = c < \infty$$

By letting $f_X(x) = g(x)/c$, we obtain a function that satisfies the normalization condition.


Example 5. Let $X$ be a continuous random variable taking values from $[a, b] \subset \mathbb{R}$ equally likely. The CDF $F_X(x)$ is given by

$$F_X(x) = \begin{cases} 0, & \text{if } x < a \\ \frac{x - a}{b - a}, & \text{if } x \in [a, b) \\ 1, & \text{if } x \ge b \end{cases}$$

which implies that the PDF is given by

$$f_X(x) = \begin{cases} \frac{1}{b - a}, & \text{if } x \in (a, b) \\ 0, & \text{if } x > b \text{ or } x < a \end{cases}$$

[Figure: (a) the CDF $F_X(x)$, rising from 0 at $x = a$ to 1 at $x = b$; (b) the PDF $f_X(x)$, of height $1/(b - a)$ between $a$ and $b$]

Example 6. (Exponential Random Variable)

The transmission time X of messages in a communication system

obeys the exponential probability law with parameter λ > 0, that is,

$$P[X > x] = \begin{cases} e^{-\lambda x}, & \text{if } x \ge 0 \\ 1, & \text{if } x < 0 \end{cases}$$

Then

CDF:

$$F_X(x) = P[X \le x] = (1 - e^{-\lambda x})\,U(x)$$

PDF:

$$f_X(x) = F_X'(x) = \lambda e^{-\lambda x}\,U(x)$$

With $T = \frac{1}{\lambda}$,

$$P[T < X \le 2T] = \int_{1/\lambda}^{2/\lambda} \lambda e^{-\lambda x}\,dx = e^{-1} - e^{-2} \approx 0.233$$

[Figure: (a) the CDF $F_X(x) = 1 - e^{-\lambda x}$, approaching 1; (b) the PDF $f_X(x) = \lambda e^{-\lambda x}$]
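The probability $P[T < X \le 2T]$ in Example 6 does not depend on λ, which is easy to confirm numerically. The following is a minimal sketch; the function names are illustrative and the rates tried are arbitrary.

```python
import math

def exp_cdf(x, lam):
    """CDF of the exponential law: (1 - e^{-lam x}) U(x)."""
    return 1.0 - math.exp(-lam * x) if x >= 0 else 0.0

def interval_prob(lam):
    """P[T < X <= 2T] with T = 1/lam, computed as F_X(2T) - F_X(T)."""
    T = 1.0 / lam
    return exp_cdf(2 * T, lam) - exp_cdf(T, lam)

for lam in (0.5, 1.0, 4.0):
    print(lam, interval_prob(lam))   # each value is close to e^-1 - e^-2
```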

Laplacian Random Variable

Example 7. The PDF of the samples of the amplitude of speech

waveforms is found to decay exponentially at a rate α, so the

following Laplacian PDF is proposed:

$$f_X(x) = c\,e^{-\alpha |x|}, \quad \text{for all } x \in \mathbb{R}$$

Find the constant $c$, and then find the probability $P[|X| \le v]$.

Sol.

Note

$$1 = \int_{-\infty}^{\infty} f_X(x)\,dx = c \int_{-\infty}^{\infty} e^{-\alpha |x|}\,dx = 2c \int_0^{\infty} e^{-\alpha x}\,dx = \frac{2c}{\alpha}$$

Hence $c = \frac{\alpha}{2}$. Now

$$P[|X| \le v] = \int_{-v}^{v} \frac{\alpha}{2} e^{-\alpha |x|}\,dx = 1 - e^{-\alpha v}.$$

[Figure: Laplacian PDF $f_X(x)$ versus $x$ for $-2 \le x \le 2$]
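The normalization constant and the probability found in Example 7 can be sanity-checked by numerical integration. This is a rough sketch: the midpoint rule, the truncation of the real line to $[-40, 40]$, and the values of α and v are all choices made here for illustration.

```python
import math

def laplacian_pdf(x, alpha):
    """Laplacian PDF with c = alpha / 2."""
    return 0.5 * alpha * math.exp(-alpha * abs(x))

def integrate(f, lo, hi, n=200_000):
    """Midpoint-rule approximation of the integral of f over [lo, hi]."""
    h = (hi - lo) / n
    return h * sum(f(lo + (i + 0.5) * h) for i in range(n))

alpha, v = 1.5, 0.8   # illustrative values, not from the text
total = integrate(lambda x: laplacian_pdf(x, alpha), -40.0, 40.0)
inside = integrate(lambda x: laplacian_pdf(x, alpha), -v, v)
print(total)                              # close to 1
print(inside, 1 - math.exp(-alpha * v))   # the two values should agree
```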

Example 8.

Let X be a binomial random variable with CDF

$$F_X(x) = \sum_{k \le x} \binom{3}{k} \cdot \frac{1}{8} = \frac{1}{8} U(x) + \frac{3}{8} U(x - 1) + \frac{3}{8} U(x - 2) + \frac{1}{8} U(x - 3)$$

Though $F_X(x)$ is not differentiable at $x = 0, 1, 2, 3$, the PDF $f_X(x)$ can still be represented in terms of Dirac delta symbol

$$f_X(x) = \frac{1}{8} \delta(x) + \frac{3}{8} \delta(x - 1) + \frac{3}{8} \delta(x - 2) + \frac{1}{8} \delta(x - 3)$$

[Figure: the staircase CDF $F_X(x)$ with values 1/8, 1/2, 7/8, 1, and the PDF $f_X(x)$ drawn as impulses of weight 1/8, 3/8, 3/8, 1/8 at $x = 0, 1, 2, 3$]

Remark 1. Recall that the definition of Dirac delta $\delta(x)$ is a symbol satisfying

$$\int_{-\infty}^{\infty} g(x)\,\delta(x - a)\,dx = g(a)$$

for any (test) function $g(x)$ and for $a \in \mathbb{R}$. We therefore have

$$\int_{-\infty}^{x} \delta(\omega - a)\,d\omega = \int_{-\infty}^{\infty} \delta(\omega - a)\,U(x - \omega)\,d\omega = U(x - a)$$

It then follows that

$$\int_{-\infty}^{x} f_X(\omega)\,d\omega = \int_{-\infty}^{x} \left[ \frac{1}{8} \delta(\omega) + \frac{3}{8} \delta(\omega - 1) + \frac{3}{8} \delta(\omega - 2) + \frac{1}{8} \delta(\omega - 3) \right] d\omega$$

$$= \frac{1}{8} U(x) + \frac{3}{8} U(x - 1) + \frac{3}{8} U(x - 2) + \frac{1}{8} U(x - 3)$$

Conditional CDF’s and PDF’s

When $X$ is considered under the condition that some event $A$ has occurred, the conditional CDF of $X$ given $A$ is defined by

$$F_X(x \mid A) = P[X \le x \mid A] = \frac{P[\{X \le x\} \cap A]}{P[A]} \quad \text{if } P[A] > 0$$

$F_X(x \mid A)$ satisfies all the properties of a CDF.

The conditional PDF of $X$ given $A$, if it exists, is then defined by

$$f_X(x \mid A) = \frac{d}{dx} F_X(x \mid A)$$

Example 9. The lifetime X of a machine has a continuous CDF

FX (x). Find the conditional CDF and PDF given the event

A = {X > t}, i.e., machine is still working after time t.

Sol.

The conditional CDF is

$$F_X(x \mid X > t) = P[X \le x \mid X > t] = \frac{P[\{X \le x\} \cap \{X > t\}]}{P[\{X > t\}]}$$

The intersection of the two events in the numerator is equal to the empty set when $x < t$ and to $\{t < X \le x\}$ when $x \ge t$. Thus

$$F_X(x \mid X > t) = \begin{cases} 0, & x \le t \\ \frac{F_X(x) - F_X(t)}{1 - F_X(t)}, & x > t \end{cases}$$

The conditional PDF is found by differentiating with respect to $x$:

$$f_X(x \mid X > t) = \begin{cases} 0, & x < t \\ \frac{f_X(x)}{1 - F_X(t)}, & x > t \end{cases}$$

4.3 The Expected Value of X


Let $X$ be a continuous random variable with PDF $f_X(x)$. The expected value of $X$ is

$$m_X := E[X] = \int_{-\infty}^{\infty} x\,f_X(x)\,dx$$

the variance of $X$ is

$$\sigma_X^2 = \mathrm{Var}(X) = E[(X - m_X)^2] = \int_{-\infty}^{\infty} x^2 f_X(x)\,dx - m_X^2$$

and the $m$-th moment of $X$ is defined as

$$E[X^m] = \int_{-\infty}^{\infty} x^m f_X(x)\,dx$$

provided that the above improper integrals converge.

Note: depending on $F_X(x)$, the quantities $m_X$, $\sigma_X^2$, and $E[X^m]$ could be finite or infinite; see Example 13.

The Expected Value of Y = g (X )

Let X be a continuous random variable with PDF fX (x) and let

Y = g (X ). Then

$$E[Y] = \int_{-\infty}^{\infty} g(x)\,f_X(x)\,dx$$

Example 10. Let Y = a cos(ωt + Θ), where a, ω, t are constants

and Θ is a uniform random variable in the interval (0, 2π).

The random variable Y results from sampling the amplitude of a

sinusoidal wave with random phase.

Find the expected value of Y and the expected value of the power

of Y .


Sol.

$$E[Y] = E[a \cos(\omega t + \Theta)] = \int_0^{2\pi} a \cos(\omega t + \theta)\,\frac{1}{2\pi}\,d\theta = \frac{a}{2\pi} \sin(\omega t + \theta)\Big|_0^{2\pi} = 0$$

The average power of $Y$ is

$$E[(Y - m_Y)^2] = E[(a \cos(\omega t + \Theta))^2] = E\left[ \frac{a^2}{2} + \frac{a^2}{2} \cos(2\omega t + 2\Theta) \right]$$

$$= \frac{a^2}{2} + \frac{a^2}{2} \int_0^{2\pi} \cos(2\omega t + 2\theta)\,\frac{1}{2\pi}\,d\theta = \frac{a^2}{2}$$
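The two expectations in Example 10 are integrals over one period of the phase, so they can be approximated directly from $E[g(\Theta)] = \int g(\theta) f_\Theta(\theta)\,d\theta$. The sketch below assumes arbitrary illustrative constants for a, ω, and t, and uses a plain midpoint rule.

```python
import math

def expect_over_phase(g, n=100_000):
    """E[g(Theta)] for Theta uniform on (0, 2*pi), via the midpoint rule."""
    h = 2 * math.pi / n
    return sum(g((i + 0.5) * h) for i in range(n)) * h / (2 * math.pi)

a, omega, t = 2.0, 3.0, 0.7   # illustrative constants, not from the text

mean_y = expect_over_phase(lambda th: a * math.cos(omega * t + th))
power_y = expect_over_phase(lambda th: (a * math.cos(omega * t + th)) ** 2)
print(mean_y)                  # close to 0
print(power_y, a ** 2 / 2)     # both close to a^2 / 2
```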

4.4 Important Continuous Random Variables

Uniform random variable

X is said to be a uniform random variable over [a, b) with a < b if

$$f_X(x) = \frac{1}{b - a} \left[ U(x - a) - U(x - b) \right]$$

Example 11.

$$m_X = E[X] = \int_a^b \frac{x}{b - a}\,dx = \frac{b + a}{2}$$

$$E[X^2] = \int_a^b \frac{x^2}{b - a}\,dx = \frac{a^2 + ab + b^2}{3}$$

$$\sigma_X^2 = E[X^2] - m_X^2 = \frac{(b - a)^2}{12}$$

Exponential Random Variable

$X$ is said to be an exponential random variable with parameter $\lambda > 0$ if

$$f_X(x) = \lambda e^{-\lambda x}\,U(x)$$

Example 12.

$$m_X = E[X] = \int_0^{\infty} x \lambda e^{-\lambda x}\,dx = \frac{1}{\lambda}$$

$$E[X^2] = \int_0^{\infty} x^2 f_X(x)\,dx = \frac{2}{\lambda^2}$$

$$\sigma_X^2 = E[X^2] - m_X^2 = \frac{1}{\lambda^2}$$

More on Exponential Random Variables

The exponential random variable can be seen as a limiting form of the geometric random variable.

Let $\lambda$ be the average number of arrivals per second.

Consider a sequence of subintervals, each of duration $\frac{1}{n}$ sec.

The subintervals correspond to a sequence of independent Bernoulli trials with $p = \frac{\lambda}{n}$.

Let $X_n$ denote the number of subintervals until the first arrival.

$X_n$ is a geometric random variable with PMF

$$p_{X_n}(k) = (1 - p)^{k - 1} p$$

For any $t \in \mathbb{R}^+$, consider the following probability

$$\lim_{n \to \infty} P[\text{the time until first arrival} \le t] = \lim_{n \to \infty} P\{X_n \le nt\} = \lim_{n \to \infty} \left[ 1 - \left( 1 - \frac{\lambda}{n} \right)^{nt} \right] = 1 - e^{-\lambda t}$$

which is the CDF of an exponential random variable.
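The convergence of the geometric CDF to the exponential CDF can be observed numerically. In the sketch below, the function name and the values of λ and t are illustrative, and $nt$ is rounded down to a whole number of subintervals, which is an assumption made here since the slide treats $nt$ as the exponent directly.

```python
import math

def geometric_cdf_at_time(t, lam, n):
    """P[X_n <= n t] for geometric X_n with p = lam / n (requires lam < n)."""
    p = lam / n
    k = math.floor(n * t)          # whole subintervals elapsed by time t
    return 1.0 - (1.0 - p) ** k

lam, t = 2.0, 0.75                 # illustrative values
limit = 1.0 - math.exp(-lam * t)   # exponential CDF at t
for n in (10, 100, 1_000, 100_000):
    approx = geometric_cdf_at_time(t, lam, n)
    print(n, approx, abs(approx - limit))
```

The printed gap shrinks as n grows, in line with the limit derived above.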

The exponential random variable possesses the memoryless property.

Proposition 1

Let $X$ be an exponential random variable with PDF $f_X(x) = \lambda e^{-\lambda x}\,U(x)$. Then, for $t \ge 0$ and $h > 0$,

$$P[X > t + h \mid X > t] = P[X > h]$$

Proof: Since $\{X > t + h\} \subset \{X > t\}$,

$$P[X > t + h \mid X > t] = \frac{P[X > t + h]}{P[X > t]} = \frac{e^{-\lambda (t + h)}}{e^{-\lambda t}} = e^{-\lambda h} = P[X > h]$$

Example 13. $X$ is said to be a Pareto random variable if it has CDF

$$F_X(x) = \left[ 1 - \left( \frac{b}{x} \right)^a \right] U(x - b)$$

for some parameters $a, b > 0$.

It follows that the PDF

$$f_X(x) = F_X'(x) = \frac{a \cdot b^a}{x^{a + 1}}\,U(x - b)$$

is a well-behaved function for $x \ne b$.

If $a \in (0, 1]$,

$$E[X] = \int_b^{\infty} x\,\frac{a \cdot b^a}{x^{a + 1}}\,dx \longrightarrow \infty$$

$$E[X^2] = \int_b^{\infty} x^2\,\frac{a \cdot b^a}{x^{a + 1}}\,dx \longrightarrow \infty$$

If $a \in (1, 2]$,

$$E[X] = \int_b^{\infty} x\,\frac{a \cdot b^a}{x^{a + 1}}\,dx = \frac{ab}{a - 1}$$

$$E[X^2] = \int_b^{\infty} x^2\,\frac{a \cdot b^a}{x^{a + 1}}\,dx \longrightarrow \infty$$

If $a > 2$,

$$E[X^2] = \int_b^{\infty} x^2\,\frac{a \cdot b^a}{x^{a + 1}}\,dx = \frac{a b^2}{a - 2}$$

$$\sigma_X^2 = \frac{a b^2}{(a - 1)^2 (a - 2)}$$

A Gaussian random variable $X$ with mean $m$ and variance $\sigma^2$ has the following PDF

$$f_X(x) = \frac{1}{\sqrt{2\pi\sigma^2}} \exp\left( -\frac{(x - m)^2}{2\sigma^2} \right)$$

For simplicity, we will henceforth write $X \sim N(m, \sigma^2)$

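Example 14. Prove $\int_{-\infty}^{\infty} f_X(x)\,dx = 1$ for the Gaussian PDF.

Sol.

After the change of variable $x \to (x - m)/\sigma$ in each factor,

$$\left[ \int_{-\infty}^{\infty} \frac{1}{\sqrt{2\pi\sigma^2}} \exp\left( -\frac{(x - m)^2}{2\sigma^2} \right) dx \right]^2 = \frac{1}{2\pi} \int_{-\infty}^{\infty} \int_{-\infty}^{\infty} e^{-\frac{x^2 + y^2}{2}}\,dx\,dy$$

$$= \frac{1}{2\pi} \int_0^{\infty} \int_0^{2\pi} r\,e^{-\frac{r^2}{2}}\,d\theta\,dr \quad (x = r\cos\theta,\ y = r\sin\theta)$$

$$= 1$$

Since the integral is positive, it equals 1.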

To verify the mean and variance for a Gaussian random variable,

we need the following lemma.

Lemma 2

The gamma function

$$\Gamma(z) = \int_0^{\infty} x^{z - 1} e^{-x}\,dx$$

for $z > 0$ satisfies

$$\Gamma\left( \tfrac{1}{2} \right) = \sqrt{\pi}$$

$$\Gamma(z + 1) = z\,\Gamma(z)$$

$$\Gamma(m + 1) = m! \quad \text{for } 0 \le m \in \mathbb{Z}$$

Example 15. Show that the random variable X ∼ N (m, σ 2 ) has

mean m and variance σ 2 .

Sol.

$$E[X] = \int_{-\infty}^{\infty} \frac{x}{\sqrt{2\pi\sigma^2}} \exp\left( -\frac{(x - m)^2}{2\sigma^2} \right) dx$$

$$= \int_{-\infty}^{\infty} \frac{y + m}{\sqrt{2\pi\sigma^2}} \exp\left( -\frac{y^2}{2\sigma^2} \right) dy \quad (\text{set } y = x - m)$$

$$= m + \underbrace{\int_{-\infty}^{\infty} \frac{y}{\sqrt{2\pi\sigma^2}} \exp\left( -\frac{y^2}{2\sigma^2} \right) dy}_{= 0} = m$$

$$\mathrm{Var}(X) = \int_{-\infty}^{\infty} \frac{(x - m)^2}{\sqrt{2\pi\sigma^2}} \exp\left( -\frac{(x - m)^2}{2\sigma^2} \right) dx = \int_{-\infty}^{\infty} \frac{x^2}{\sqrt{2\pi\sigma^2}} \exp\left( -\frac{x^2}{2\sigma^2} \right) dx$$

$$= \frac{2\sigma^2}{\sqrt{\pi}} \int_{-\infty}^{\infty} y^2 e^{-y^2}\,dy = \frac{2\sigma^2}{\sqrt{\pi}}\,\Gamma\left( \tfrac{3}{2} \right) = \sigma^2$$

Proposition 3

Let $X \sim N(m, \sigma^2)$; then $Y = \frac{X - m}{\sigma} \sim N(0, 1)$

Definition 1 (CDF and Q function)

Let $X \sim N(0, 1)$. Then the CDF of $X$ is

$$\Phi(x) := F_X(x) = \int_{-\infty}^{x} \frac{1}{\sqrt{2\pi}}\,e^{-\frac{t^2}{2}}\,dt$$

The Q function for Gaussian tail probability is given by

$$Q(x) = P[X > x] = 1 - \Phi(x) = \int_x^{\infty} \frac{1}{\sqrt{2\pi}}\,e^{-\frac{t^2}{2}}\,dt$$

Proposition 4

$Q(0) = \frac{1}{2}$, $Q(-x) = 1 - Q(x)$, $\Phi(-x) = 1 - \Phi(x)$.

Proposition 5

Let $X \sim N(m, \sigma^2)$ be a random variable. Then

$$F_X(x) = \Phi\left( \frac{x - m}{\sigma} \right)$$

Proof:

$$F_X(x) = P[X \le x] = P\Bigg[ \underbrace{\frac{X - m}{\sigma}}_{\sim N(0, 1)} \le \frac{x - m}{\sigma} \Bigg] = \Phi\left( \frac{x - m}{\sigma} \right)$$
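Neither Φ nor Q has an elementary closed form, but both can be evaluated through the complementary error function, using the standard identity $Q(x) = \frac{1}{2}\,\mathrm{erfc}(x / \sqrt{2})$. The sketch below relies on Python's `math.erfc`; the function names and test points are illustrative.

```python
import math

def Phi(x):
    """Standard normal CDF, written with the complementary error function."""
    return 0.5 * math.erfc(-x / math.sqrt(2.0))

def Q(x):
    """Gaussian tail probability Q(x) = 1 - Phi(x)."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))

def gaussian_cdf(x, m, sigma):
    """F_X(x) for X ~ N(m, sigma^2), using Proposition 5."""
    return Phi((x - m) / sigma)

print(Q(0.0))                                  # 0.5
print(Q(-1.3) + Q(1.3))                        # Q(-x) = 1 - Q(x), so close to 1
print(gaussian_cdf(5.0, 3.0, 2.0), Phi(1.0))   # same standardized argument
```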

Suppose that we have a partition of the sample space S into the

union of disjoint events B1 , B2 , . . . , Bn .

Let FX (x∣Bi ) be the conditional CDF of X given event Bi .

The Theorem on Total Probability implies

$$F_X(x) = P[X \le x] = \sum_{i=1}^{n} P[X \le x \mid B_i]\,P[B_i] = \sum_{i=1}^{n} F_X(x \mid B_i)\,P[B_i]$$

The PDF is obtained by differentiation

$$f_X(x) = \frac{d}{dx} F_X(x) = \sum_{i=1}^{n} f_X(x \mid B_i)\,P[B_i]$$

Signal Detection

Example 16. An equally probable binary message is transmitted

as a signal S ∈ {−1, 1}. The communication channel corrupts the

transmission with an additive Gaussian noise N (0, σ 2 ). The

receiver concludes that the signal −1 or +1 was transmitted if the

received value is < 0 or > 0 respectively. What is the probability of

error?


[Figure: Example 3.8, signal detection]

Sol.

Let N ∼ N (0, σ 2 ) be the noise. Then the received signal is

R =S +N

and we are asked to find

$$P_e = P[S = +1, R < 0] + P[S = -1, R > 0]$$

$$= P[R < 0 \mid S = +1]\,P[S = +1] + P[R > 0 \mid S = -1]\,P[S = -1]$$

with $P[S = +1] = P[S = -1] = \frac{1}{2}$.

We have

when $S = +1$, $R = S + N < 0 \iff N < -1$

when $S = -1$, $R = S + N > 0 \iff N > +1$

Hence

$$P[R < 0 \mid S = +1] = P[N < -1 \mid S = +1] = P[N < -1] = \Phi\left( \frac{-1}{\sigma} \right) = 1 - \Phi\left( \frac{1}{\sigma} \right) = Q\left( \frac{1}{\sigma} \right)$$

$$P[R > 0 \mid S = -1] = P[N > 1] = Q\left( \frac{1}{\sigma} \right)$$

and

$$P_e = \frac{1}{2} Q\left( \frac{1}{\sigma} \right) + \frac{1}{2} Q\left( \frac{1}{\sigma} \right) = Q\left( \frac{1}{\sigma} \right)$$
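The result $P_e = Q(1/\sigma)$ can be compared against a simulation of the channel in Example 16. The following is a sketch under stated assumptions: the noise is drawn independently of the signal, the seed and trial count are arbitrary, and a received value of exactly 0 (a probability-zero event) is decoded as −1.

```python
import math
import random

def Q(x):
    """Gaussian tail probability via the complementary error function."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))

def simulate_error_rate(sigma, trials=200_000, seed=1):
    """Fraction of wrongly decoded symbols for S in {-1, +1} plus N(0, sigma^2) noise."""
    rng = random.Random(seed)
    errors = 0
    for _ in range(trials):
        s = rng.choice((-1, 1))             # equally probable message
        r = s + rng.gauss(0.0, sigma)       # received value R = S + N
        decision = 1 if r > 0 else -1       # threshold detector at 0
        errors += decision != s
    return errors / trials

sigma = 1.0   # illustrative noise level
print(simulate_error_rate(sigma), Q(1.0 / sigma))
```

The simulated rate fluctuates around the analytical value; with 200,000 trials the two typically agree to about two or three decimal places.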

4.5 Functions of a Random Variable


The Most Basic Principle

Let X be a random variable and let g (x) be a real-valued function

defined on R.

Then $Y = g(X)$ is also a random variable. The value of $Y$ is determined by evaluating the function $g(x)$ at the value assumed by the random variable $X$.

Then the CDF for $Y$ is given by

$$F_Y(y) = P\{g(X) \le y\} = \int_{A(y)} f_X(x)\,dx$$

where $A(y) = \{x : g(x) \le y\}$

Example 17.

Let $X$ be a uniform random variable on $[0, 1]$ and let $Y = \sqrt{X}$. Find the CDF and PDF for $Y$.

Sol.

For CDF, we have

$$F_Y(y) = P[\sqrt{X} \le y] = P[X \le y^2] = \begin{cases} 0, & y < 0 \\ y^2, & y \in [0, 1] \\ 1, & y \ge 1 \end{cases}$$

hence the PDF for $Y$ is given by

$$f_Y(y) = \frac{d}{dy} F_Y(y) = \begin{cases} 0, & y < 0 \\ 2y, & y \in [0, 1) \\ \text{undefined}, & y = 1 \\ 0, & y > 1 \end{cases}$$

Example 18. Let the random variable Y be defined by

Y = aX + b

where a is a nonzero constant. Suppose that X has CDF FX (x),

then find FY (y ).

Sol.

The event $\{Y \le y\}$ occurs when $A = \{aX + b \le y\}$ occurs. If $a > 0$, then $A = \{X \le \frac{y - b}{a}\}$. Hence

$$F_Y(y) = P\left[ X \le \frac{y - b}{a} \right] = F_X\left( \frac{y - b}{a} \right), \quad a > 0$$

If $a < 0$, then $A = \{X \ge \frac{y - b}{a}\}$, and, when $F_X$ is continuous at $\frac{y - b}{a}$,

$$F_Y(y) = P\left[ X \ge \frac{y - b}{a} \right] = 1 - F_X\left( \frac{y - b}{a} \right), \quad a < 0$$

Use the chain rule with $u = \frac{y - b}{a}$:

$$\frac{dF_X(u)}{dy} = \frac{dF_X(u)}{du} \frac{du}{dy}$$

We then have

$$f_Y(y) = \frac{1}{a} f_X\left( \frac{y - b}{a} \right), \quad a > 0$$

and

$$f_Y(y) = \frac{1}{-a} f_X\left( \frac{y - b}{a} \right), \quad a < 0$$

The above two results can be written compactly as

$$f_Y(y) = \frac{1}{|a|} f_X\left( \frac{y - b}{a} \right)$$

Example 19. Let X ∼ N (mx , σx2 ) be a Gaussian random variable

and let Y = aX + b. What is fY (y )?

Sol.

Recall that

$$f_X(x) = \frac{1}{\sqrt{2\pi\sigma_x^2}} \exp\left( -\frac{(x - m_x)^2}{2\sigma_x^2} \right)$$

From the previous example we see

$$f_Y(y) = \frac{1}{|a|} f_X\left( \frac{y - b}{a} \right) = \frac{1}{|a| \sqrt{2\pi\sigma_x^2}} \exp\left( -\frac{\left( \frac{y - b}{a} - m_x \right)^2}{2\sigma_x^2} \right)$$

$$= \frac{1}{\sqrt{2\pi a^2 \sigma_x^2}} \exp\left( -\frac{(y - a m_x - b)^2}{2 a^2 \sigma_x^2} \right)$$

showing that $Y \sim N(a m_x + b, a^2 \sigma_x^2)$.
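The conclusion of Example 19 can be checked pointwise: the density produced by the affine rule of Example 18 should coincide with the $N(a m_x + b, a^2\sigma_x^2)$ density. This is a small sketch with illustrative parameter values (including a negative a) and made-up helper names.

```python
import math

def normal_pdf(x, m, var):
    """Gaussian PDF with mean m and variance var."""
    return math.exp(-(x - m) ** 2 / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)

def affine_pdf(y, a, b, f_x):
    """PDF of Y = aX + b from Example 18: f_X((y - b)/a) / |a|, for a != 0."""
    return f_x((y - b) / a) / abs(a)

m_x, var_x, a, b = 1.0, 4.0, -3.0, 2.0   # illustrative values
for y in (-10.0, -1.0, 0.0, 5.0):
    lhs = affine_pdf(y, a, b, lambda x: normal_pdf(x, m_x, var_x))
    rhs = normal_pdf(y, a * m_x + b, a * a * var_x)
    print(y, lhs, rhs)                   # the two columns should match
```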

Recall the most basic principle for functions of a random variable: $X$ is a continuous random variable with CDF $F_X(x)$ and $Y = g(X)$ for some real function $g$.

Then the CDF for $Y$ is given by

$$F_Y(y_0) = P\{g(X) \le y_0\} = \int_{A(y_0)} dF_X(x)$$

where $A(y_0) = \{x : g(x) \le y_0\}$

We next consider the case when $h = g^{-1}$ exists and is differentiable at $y_0$:

$y_0 = g(x_0)$ is strictly increasing at $(x_0, y_0)$

$y_0 = g(x_0)$ is strictly decreasing at $(x_0, y_0)$

$y_0 = g(x_0)$ is strictly increasing at $(x_0, y_0)$

In this case we have

$$F_Y(y_0) = P\{g(X) \le y_0\} = P\{X \le g^{-1}(y_0)\} = F_X(g^{-1}(y_0))$$

Assume the PDF for $X$ exists; then we have

$$f_Y(y_0) = \frac{d}{dy} F_Y(y)\Big|_{y = y_0} = \frac{d}{dy} F_X(g^{-1}(y))\Big|_{y = y_0} = f_X(g^{-1}(y_0)) \cdot \underbrace{\frac{d}{dy} g^{-1}(y)\Big|_{y = y_0}}_{> 0}$$

[Figure: PDF formula for a strictly monotonic function of a continuous RV; for increasing $g$, the event $\{Y \le y_0\}$ corresponds to the event $\{X \le g^{-1}(y_0)\}$]

$y_0 = g(x_0)$ is strictly decreasing at $(x_0, y_0)$

In this case we have

$$F_Y(y_0) = P\{g(X) \le y_0\} = P\{X \ge g^{-1}(y_0)\} = 1 - F_X(g^{-1}(y_0))$$

Assume the PDF for $X$ exists; then we have

$$f_Y(y_0) = \frac{d}{dy} F_Y(y)\Big|_{y = y_0} = -\frac{d}{dy} F_X(g^{-1}(y))\Big|_{y = y_0} = -f_X(g^{-1}(y_0)) \cdot \underbrace{\frac{d}{dy} g^{-1}(y)\Big|_{y = y_0}}_{< 0}$$

[Figure: PDF formula for a strictly monotonic function of a continuous RV; for decreasing $g$, the event $\{Y \le y_0\}$ corresponds to the event $\{X \ge g^{-1}(y_0)\}$]

PDF for Functions of Random Variables

Let X be a continuous random variable with PDF fX (x) and

Y = g (X ) for some real function g that is invertible and

differentiable. Then

$$f_Y(y) = f_X(g^{-1}(y)) \left| \frac{d}{dy} g^{-1}(y) \right|$$

and

$$F_Y(y) = \begin{cases} F_X(g^{-1}(y)), & \text{if } g'(x) > 0 \text{ for all } x \\ 1 - F_X(g^{-1}(y)), & \text{if } g'(x) < 0 \text{ for all } x \end{cases}$$

Example 20. Let the random variable Y be defined by Y = X 2 ,

where X is a continuous random variable. Find the CDF and PDF

of Y .

Sol.

The event $\{Y \le y\}$ occurs when

$$\{X^2 \le y\} \iff \{-\sqrt{y} \le X \le \sqrt{y}\}$$

for $y$ nonnegative. The event is null when $y$ is negative. Thus

$$F_Y(y) = \begin{cases} 0, & y < 0 \\ F_X(\sqrt{y}) - F_X(-\sqrt{y}), & y \ge 0 \end{cases}$$

and differentiating with respect to $y$, for $y > 0$,

$$f_Y(y) = \frac{f_X(\sqrt{y})}{2\sqrt{y}} - \frac{f_X(-\sqrt{y})}{-2\sqrt{y}} = \frac{f_X(\sqrt{y})}{2\sqrt{y}} + \frac{f_X(-\sqrt{y})}{2\sqrt{y}}$$

Example 21. (A Chi-Square Random Variable)

Let X be a Gaussian random variable with mean m = 0 and

standard deviation σ = 1. Let Y = X 2 . Find the PDF of Y .

Sol.

From the previous example we have

$$f_Y(y) = \frac{f_X(\sqrt{y})}{2\sqrt{y}} + \frac{f_X(-\sqrt{y})}{2\sqrt{y}} = \frac{e^{-\frac{y}{2}}}{\sqrt{2\pi y}}, \quad y > 0$$
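One way to check the chi-square PDF of Example 21 is to differentiate the CDF from Example 20 numerically. The sketch below uses the identity $\Phi(r) - \Phi(-r) = \mathrm{erf}(r/\sqrt{2})$ for the standard normal CDF; the step size and evaluation points are arbitrary choices.

```python
import math

def chi_square_cdf(y):
    """F_Y(y) = F_X(sqrt(y)) - F_X(-sqrt(y)) for X ~ N(0, 1), from Example 20."""
    if y < 0:
        return 0.0
    return math.erf(math.sqrt(y / 2.0))   # Phi(r) - Phi(-r) = erf(r / sqrt(2))

def chi_square_pdf(y):
    """Closed form from Example 21, valid for y > 0."""
    return math.exp(-y / 2.0) / math.sqrt(2.0 * math.pi * y)

h = 1e-5   # step for the central difference
for y in (0.5, 1.0, 3.0):
    slope = (chi_square_cdf(y + h) - chi_square_cdf(y - h)) / (2.0 * h)
    print(y, slope, chi_square_pdf(y))    # numerical derivative vs. formula
```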

Suppose the equation $y = g(x)$ has $n$ solutions $x_1, x_2, \ldots, x_n$.

Consider the event

$$C_y = \{y < Y < y + dy\}$$

and let $B_y$ be its equivalent event

$$B_y = \{x_1 < X < x_1 + dx_1\} \cup \{x_2 < X < x_2 + dx_2\} \cup \cdots \cup \{x_n < X < x_n + dx_n\}$$

Then we have

$$P[C_y] = f_Y(y)\,|dy|$$

$$P[B_y] = f_X(x_1)\,|dx_1| + \cdots + f_X(x_n)\,|dx_n|$$

Since $C_y$ and $B_y$ are equivalent events, we must have

$$f_Y(y)\,|dy| = f_X(x_1)\,|dx_1| + \cdots + f_X(x_n)\,|dx_n|$$

or equivalently

$$f_Y(y) = f_X(x_1) \left| \frac{dx_1}{dy} \right| + \cdots + f_X(x_n) \left| \frac{dx_n}{dy} \right|$$

Example 22. Let $Y = \cos(X)$, where $X$ is a uniform random variable over $(0, 2\pi]$. Find the PDF of $Y$.

Sol.

It can be seen that for $-1 < y < 1$, the equation $y = \cos(x)$ has two solutions

$$x_0 = \cos^{-1}(y), \quad x_1 = 2\pi - \cos^{-1}(y)$$

hence

$$\frac{dx_0}{dy} = -\frac{1}{\sqrt{1 - y^2}}, \quad \frac{dx_1}{dy} = \frac{1}{\sqrt{1 - y^2}}$$

Since $f_X(x) = \frac{1}{2\pi}$, this implies

$$f_Y(y) = f_X(x_0) \left| \frac{dx_0}{dy} \right| + f_X(x_1) \left| \frac{dx_1}{dy} \right| = \frac{1}{\pi \sqrt{1 - y^2}}$$

for $y \in (-1, 1)$.

4.6 The Markov and Chebyshev Inequalities

Let X be a nonnegative random variable with mean E[X ].

The Markov inequality states that for a > 0

$$P[X \ge a] \le \frac{E[X]}{a}$$

Proof:

$$P[X \ge a] = \int_a^{\infty} f_X(x)\,dx \le \int_a^{\infty} \frac{x}{a} f_X(x)\,dx \le \int_0^{\infty} \frac{x}{a} f_X(x)\,dx = \frac{E[X]}{a}$$

Example 23. The average height of children in a kindergarten

class is 3 feet, 6 inches. Find the bound on the probability that a

kid in the class is taller than 9 feet.

Sol.

The Markov inequality gives, with heights measured in inches (3 ft 6 in = 42 in, 9 ft = 108 in),

$$P[H \ge 108] \le \frac{42}{108} = 0.389$$

Example 24. Let $X$ be a uniform random variable on $[0, 4]$. Then $E[X] = 2$ and for $a > 0$

$$P[X \ge a] = \frac{(4 - a)^+}{4} \le \frac{E[X]}{a} = \frac{2}{a}$$

where $(x)^+ = \max\{x, 0\}$.
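Example 24 makes it easy to see how loose the Markov bound can be. The short sketch below tabulates the exact tail probability against the bound for a few thresholds; the function names and the thresholds are illustrative.

```python
def exact_tail(a):
    """P[X >= a] for X uniform on [0, 4]: (4 - a)^+ / 4, for a > 0."""
    return max(4.0 - a, 0.0) / 4.0

def markov_bound(a, mean=2.0):
    """Markov bound E[X] / a, for a > 0."""
    return mean / a

for a in (0.5, 1.0, 2.0, 3.0, 4.0, 6.0):
    print(a, exact_tail(a), markov_bound(a))
```

For $a \le 2$ the bound is at least 1 and therefore carries no information; it only becomes useful for thresholds above the mean.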

Chebyshev-Bienaymé Inequality

While the Markov inequality deals only with nonnegative random variables, it is easy to extend it to all random variables.

Let $X$ be an arbitrary random variable with mean $m_x = E[X]$. Set

$$Y = |X - m_x|$$

so that $Y$ is nonnegative. Now with $a > 0$

$$P[Y \ge a] = P[|X - m_x| \ge a] = P[|X - m_x|^2 \ge a^2] \le \frac{E[|X - m_x|^2]}{a^2} \ (\text{Markov ineq.}) = \frac{\sigma_x^2}{a^2}$$

General Chebyshev Inequality

Let $X$ be an arbitrary random variable with mean $m_x$; then for any $a > 0$ and $0 < p < \infty$

$$P[|X - m_x| \ge a] \le \frac{E[|X - m_x|^p]}{a^p}$$

Example 25. The mean and standard deviation of the response

time in a multi-user computer system are known to be respectively

15 seconds and 3 seconds. Estimate the probability that the

response time is more than 5 seconds away from the mean.

Sol.

m = 15, σ = 3, and a = 5. So

$$P[|X - 15| \ge 5] \le \frac{9}{25} = 0.36$$

Example 26. If $X$ has mean $m$ and variance $\sigma^2$, then the Chebyshev inequality for $a = k\sigma$ gives

$$P[|X - m| \ge k\sigma] \le \frac{1}{k^2}$$

Now suppose that we know that $X$ is a Gaussian random variable; then for $k = 2$,

$$P[|X - m| \ge 2\sigma] \le \frac{1}{4} = 0.25$$

However, since $X$ is Gaussian, we can easily calculate the exact probability by using the Q function. That is, we have

$$P[|X - m| \ge 2\sigma] = 2Q(2) \approx 0.0455$$

This is consistent with the Chebyshev inequality, and shows that the bound can be quite loose.
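The comparison in Example 26 extends to other values of k. The sketch below evaluates the exact Gaussian two-sided tail $2Q(k)$ through `math.erfc` (using the standard identity $Q(x) = \frac{1}{2}\,\mathrm{erfc}(x/\sqrt{2})$) next to the Chebyshev bound; the chosen values of k are arbitrary.

```python
import math

def Q(x):
    """Gaussian tail probability via the complementary error function."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))

def chebyshev_bound(k):
    """Chebyshev bound on P[|X - m| >= k*sigma]."""
    return 1.0 / k ** 2

def gaussian_two_sided_tail(k):
    """Exact P[|X - m| >= k*sigma] for Gaussian X, equal to 2 Q(k)."""
    return 2.0 * Q(k)

for k in (1, 2, 3, 4):
    print(k, chebyshev_bound(k), gaussian_two_sided_tail(k))
```

The bound holds for every distribution with the given mean and variance, which is why it is far from tight for any particular one such as the Gaussian.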

4.7 Transform Methods


The Characteristic Function

The characteristic function of a random variable $X$ is defined by

$$\Phi_X(\omega) = E[e^{\imath \omega X}] = \int_{-\infty}^{\infty} f_X(x)\,e^{\imath \omega x}\,dx = \mathcal{F}_{\mathrm{FT}}\{f_X(x)\}$$

Since $\Phi_X(\omega)$ is the Fourier transform of $f_X(x)$, the PDF of $X$ is given by the Fourier transform inversion formula

$$f_X(x) = \mathcal{F}_{\mathrm{FT}}^{-1}\{\Phi_X(\omega)\} = \frac{1}{2\pi} \int_{-\infty}^{\infty} \Phi_X(\omega)\,e^{-\imath \omega x}\,d\omega$$

It then follows that the PDF and its characteristic function (if it exists) form a unique Fourier transform pair.

If $X$ is a discrete random variable, then the PDF of $X$ takes on the form

$$f_X(x) = \sum_k p_X(k)\,\delta(x - k)$$

where $p_X(k)$ is the PMF for $X$. Then the characteristic function for $X$ is

$$\Phi_X(\omega) = \mathcal{F}_{\mathrm{FT}}\{f_X(x)\} = \mathcal{F}_{\mathrm{FT}}\Big\{ \sum_k p_X(k)\,\delta(x - k) \Big\} = \sum_k p_X(k)\,\mathcal{F}_{\mathrm{FT}}\{\delta(x - k)\} = \sum_k p_X(k)\,e^{\imath \omega k}$$

or equivalently

$$\Phi_X(\omega) = \mathcal{F}_{\mathrm{DTFT}}\{p_X(k)\}$$

Hence the PMF $p_X(k)$ is given by the inverse DTFT of $\Phi_X(\omega)$

$$p_X(k) = \mathcal{F}_{\mathrm{DTFT}}^{-1}\{\Phi_X(\omega)\} = \frac{1}{2\pi} \int_0^{2\pi} \Phi_X(\omega)\,e^{-\imath \omega k}\,d\omega$$

Example 27. Let

$$p_X(x) = \begin{cases} \frac{1}{2}, & x = 2 \\ \frac{1}{6}, & x = 3 \\ \frac{1}{3}, & x = 5 \end{cases}$$

Find $\Phi_X(\omega)$

Sol.

$$\Phi_X(\omega) = E[e^{\imath \omega X}] = \frac{1}{2} e^{2 \imath \omega} + \frac{1}{6} e^{3 \imath \omega} + \frac{1}{3} e^{5 \imath \omega}$$

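Example 28. The characteristic function of a geometric random variable is given by

$$\begin{aligned}
\Phi_X(\omega) &= \sum_{k=1}^{\infty} p(1 - p)^{k-1}\,e^{\imath\omega k} \\
&= \sum_{t=0}^{\infty} p(1 - p)^{t}\,e^{\imath\omega(t+1)} \qquad (t = k - 1) \\
&= p\,e^{\imath\omega} \sum_{t \ge 0} \big((1 - p)\,e^{\imath\omega}\big)^{t} \\
&= \frac{p\,e^{\imath\omega}}{1 - (1 - p)\,e^{\imath\omega}}
\end{aligned}$$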
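The closed form in Example 28 can be checked against a truncated version of the defining series. This is a small sketch; the values $p = 0.3$, $\omega = 0.7$ and the truncation at 2000 terms are arbitrary assumptions for illustration.

```python
import cmath

def geometric_cf_closed(omega: float, p: float) -> complex:
    """Closed form p*e^{i w} / (1 - (1-p)*e^{i w})."""
    z = cmath.exp(1j * omega)
    return p * z / (1 - (1 - p) * z)

def geometric_cf_series(omega: float, p: float, terms: int = 2000) -> complex:
    """Truncated series sum_{k=1}^{terms} p*(1-p)^(k-1) * e^{i w k}."""
    return sum(
        p * (1 - p) ** (k - 1) * cmath.exp(1j * omega * k)
        for k in range(1, terms + 1)
    )

p, omega = 0.3, 0.7  # illustrative values
print(geometric_cf_closed(omega, p), geometric_cf_series(omega, p))
```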


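Proposition 6

Let $X \sim \mathcal{N}(0, 1)$. Then

$$\Phi_X(\omega) = e^{-\frac{\omega^2}{2}}$$

Proof:

$$\begin{aligned}
\Phi_X(\omega) &= \int_{-\infty}^{\infty} e^{\imath\omega x}\,\frac{1}{\sqrt{2\pi}}\,e^{-\frac{x^2}{2}}\,dx \\
&= \int_{-\infty}^{\infty} \frac{1}{\sqrt{2\pi}}\,e^{-\frac{(x - \imath\omega)^2}{2} - \frac{\omega^2}{2}}\,dx \\
&= e^{-\frac{\omega^2}{2}} \underbrace{\int_{-\infty}^{\infty} \frac{1}{\sqrt{2\pi}}\,e^{-\frac{(x - \imath\omega)^2}{2}}\,dx}_{\mathcal{N}(\imath\omega,\,1)} \\
&= e^{-\frac{\omega^2}{2}}
\end{aligned}$$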


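Proposition 7

Let $Y = aX + b$. Then

$$\Phi_Y(\omega) = e^{\imath\omega b}\,\Phi_X(\omega a)$$

Proof:

$$\Phi_Y(\omega) = E[e^{\imath\omega Y}] = E[e^{\imath\omega(aX + b)}] = e^{\imath\omega b}\,E[e^{\imath\omega a X}] = e^{\imath\omega b}\,\Phi_X(\omega a)$$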


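Theorem 8

Let $X \sim \mathcal{N}(m, \sigma^2)$; then

$$\Phi_X(\omega) = \exp\left(-\frac{\sigma^2\omega^2}{2} + \imath\omega m\right)$$

Proof:

Let $Y \sim \mathcal{N}(0, 1)$; then $X = \sigma Y + m$ in distribution. By Propositions 6 and 7,

$$\Phi_X(\omega) = e^{\imath\omega m}\,\Phi_Y(\omega\sigma) = \exp\left(-\frac{\sigma^2\omega^2}{2} + \imath\omega m\right)$$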
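The closed form in Theorem 8 can be compared with a direct numerical evaluation of $E[e^{\imath\omega X}]$. The sketch below uses a midpoint rule over $m \pm 10\sigma$; the integration range, the step count, and the parameter values $m = 1$, $\sigma = 2$, $\omega = 0.5$ are assumptions chosen only for illustration.

```python
import cmath
import math

def gaussian_pdf(x: float, m: float, sigma: float) -> float:
    """Density of N(m, sigma^2)."""
    return math.exp(-((x - m) ** 2) / (2 * sigma**2)) / (sigma * math.sqrt(2 * math.pi))

def gaussian_cf_closed(omega: float, m: float, sigma: float) -> complex:
    """Theorem 8: exp(-sigma^2 * omega^2 / 2 + i*omega*m)."""
    return cmath.exp(-(sigma**2) * omega**2 / 2 + 1j * omega * m)

def gaussian_cf_numeric(omega, m, sigma, half_width=10.0, steps=20000) -> complex:
    """Midpoint-rule approximation of the integral of e^{i w x} f_X(x)
    over [m - half_width*sigma, m + half_width*sigma]."""
    a = m - half_width * sigma
    dx = 2 * half_width * sigma / steps
    total = 0j
    for i in range(steps):
        x = a + (i + 0.5) * dx
        total += cmath.exp(1j * omega * x) * gaussian_pdf(x, m, sigma)
    return total * dx

m, sigma, omega = 1.0, 2.0, 0.5  # illustrative values
print(gaussian_cf_closed(omega, m, sigma), gaussian_cf_numeric(omega, m, sigma))
```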


Moment Theorem

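Recall

$$\Phi_X(\omega) = E[e^{\imath\omega X}] = \int_{-\infty}^{\infty} f_X(x)\,e^{\imath\omega x}\,dx$$

Differentiating both sides $n$ times with respect to $\omega$ gives

$$\frac{d^n}{d\omega^n}\Phi_X(\omega) = \int_{-\infty}^{\infty} f_X(x)\,(\imath x)^n\,e^{\imath\omega x}\,dx$$

Evaluating at $\omega = 0$ gives

$$\frac{d^n}{d\omega^n}\Phi_X(\omega)\Big|_{\omega=0} = \int_{-\infty}^{\infty} f_X(x)\,(\imath x)^n\,dx = E[(\imath X)^n]$$

We thus have

$$E[X^n] = \frac{1}{\imath^n}\,\frac{d^n}{d\omega^n}\Phi_X(\omega)\Big|_{\omega=0}$$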


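Example 29. Let $X$ be an exponential random variable with parameter $\lambda$. Then $f_X(x) = \lambda e^{-\lambda x}u(x)$ implies

$$\Phi_X(\omega) = \int_{0}^{\infty} \lambda e^{-\lambda x}\,e^{\imath\omega x}\,dx = \frac{\lambda}{\lambda - \imath\omega}$$

Since

$$\Phi_X'(\omega) = \frac{\imath\lambda}{(\lambda - \imath\omega)^2}, \qquad \Phi_X''(\omega) = \frac{-2\lambda}{(\lambda - \imath\omega)^3}$$

we have

$$E[X] = \frac{\Phi_X'(\omega = 0)}{\imath} = \frac{1}{\lambda}, \qquad E[X^2] = \frac{\Phi_X''(\omega = 0)}{\imath^2} = \frac{2}{\lambda^2}$$

and $\mathrm{Var}(X) = \frac{1}{\lambda^2}$.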
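The moment theorem can also be applied numerically. The following sketch approximates the first two derivatives of $\Phi_X(\omega) = \lambda/(\lambda - \imath\omega)$ at $\omega = 0$ with central differences and divides by $\imath^n$; the step size $h$ and the value $\lambda = 2$ are illustrative assumptions.

```python
def exp_cf(omega: float, lam: float) -> complex:
    """Characteristic function of an exponential RV: lam / (lam - i*omega)."""
    return lam / (lam - 1j * omega)

def first_two_moments(cf, h: float = 1e-4):
    """Estimate E[X] and E[X^2] from a characteristic function `cf`
    using central differences at omega = 0 (h is an illustrative step size)."""
    d1 = (cf(h) - cf(-h)) / (2 * h)            # approximates Phi'(0)
    d2 = (cf(h) - 2 * cf(0.0) + cf(-h)) / h**2  # approximates Phi''(0)
    mean = (d1 / 1j).real
    second_moment = (d2 / 1j**2).real
    return mean, second_moment

lam = 2.0  # illustrative parameter
mean, second_moment = first_two_moments(lambda w: exp_cf(w, lam))
variance = second_moment - mean**2
print(mean, second_moment, variance)  # close to 1/lam, 2/lam^2, 1/lam^2
```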


Sometimes it is easier to work with the double-sided Laplace transform

$$M_X(s) = E[e^{sX}] = \int_{-\infty}^{\infty} e^{sx} f_X(x)\,dx = \mathcal{L}\{f_X(x)\}$$

for $s \in \mathbb{C}$ such that the above improper integral converges. This is called the moment generating function (MGF) of $X$.


From MGF to Moments

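Recall

$$M_X(s) = \mathcal{L}\{f_X(x)\} = \int_{-\infty}^{\infty} e^{sx} f_X(x)\,dx$$

Note

$$\frac{d^n}{ds^n} M_X(s) = \int_{-\infty}^{\infty} e^{sx}\,x^n f_X(x)\,dx \tag{1}$$

$$\frac{d^n}{ds^n} M_X(s)\Big|_{s=0} = \int_{-\infty}^{\infty} x^n f_X(x)\,dx = E[X^n]$$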


Example 30.

Let $X$ be an exponential random variable with parameter $\lambda > 0$. Find the moments of $X$.

Sol.

Recall that for $\mathrm{Re}\{s\} < \lambda$,

$$M_X(s) = \frac{\lambda}{\lambda - s}$$

We thus have

$$E[X^n] = \frac{d^n}{ds^n} M_X(s)\Big|_{s=0} = \frac{n!\,\lambda}{(\lambda - s)^{n+1}}\Big|_{s=0} = \frac{n!}{\lambda^n}$$
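The result $E[X^n] = n!/\lambda^n$ can be sanity-checked by integrating $x^n \lambda e^{-\lambda x}$ numerically. This is a rough sketch using a midpoint rule on a truncated range; the truncation point $60/\lambda$, the step count, and $\lambda = 2$ are assumptions made for illustration.

```python
import math

def exp_moment_closed(n: int, lam: float) -> float:
    """Closed form from the MGF: E[X^n] = n! / lam^n."""
    return math.factorial(n) / lam**n

def exp_moment_numeric(n: int, lam: float, upper_scale: float = 60.0,
                       steps: int = 100000) -> float:
    """Midpoint-rule approximation of the integral of x^n * lam * exp(-lam*x)
    over [0, upper_scale / lam]; the tail beyond that is negligible."""
    upper = upper_scale / lam
    dx = upper / steps
    total = 0.0
    for i in range(steps):
        x = (i + 0.5) * dx
        total += x**n * lam * math.exp(-lam * x)
    return total * dx

lam = 2.0  # illustrative parameter
for n in range(5):
    print(n, exp_moment_closed(n, lam), exp_moment_numeric(n, lam))
```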


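Example 31.

Let $X$ be a Poisson random variable with parameter $\lambda$. Then

$$\begin{aligned}
M_X(s) &= \sum_{x \ge 0} e^{sx}\,\frac{\lambda^x e^{-\lambda}}{x!} = e^{-\lambda} \sum_{x \ge 0} \frac{a^x}{x!} \qquad (\text{set } a = e^{s}\lambda) \\
&= e^{-\lambda}\,e^{a} = e^{\lambda(e^{s} - 1)}
\end{aligned}$$

for all $s \in \mathbb{C}$. Moreover,

$$E[X] = M_X'(s = 0) = \lambda e^{s}\,e^{\lambda(e^{s} - 1)}\Big|_{s=0} = \lambda$$

$$E[X^2] = M_X''(s = 0) = \Big(\lambda e^{s}\,e^{\lambda(e^{s} - 1)} + \lambda^2 (e^{s})^2\,e^{\lambda(e^{s} - 1)}\Big)\Big|_{s=0} = \lambda + \lambda^2$$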
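The Poisson MGF and the two moments of Example 31 can be checked against truncated sums over the PMF. This is a minimal sketch; $\lambda = 3$, $s = 0.5$ and the truncation at 100 terms are illustrative assumptions.

```python
import math

def poisson_pmf(k: int, lam: float) -> float:
    """P[X = k] = lam^k * exp(-lam) / k!."""
    return math.exp(-lam) * lam**k / math.factorial(k)

def poisson_mgf_closed(s: float, lam: float) -> float:
    """Closed form exp(lam * (e^s - 1))."""
    return math.exp(lam * (math.exp(s) - 1.0))

def poisson_mgf_series(s: float, lam: float, terms: int = 100) -> float:
    """Truncated series sum_k e^{s k} * p_X(k)."""
    return sum(math.exp(s * k) * poisson_pmf(k, lam) for k in range(terms))

lam, s = 3.0, 0.5  # illustrative values
mean = sum(k * poisson_pmf(k, lam) for k in range(100))
second_moment = sum(k * k * poisson_pmf(k, lam) for k in range(100))
print(poisson_mgf_closed(s, lam), poisson_mgf_series(s, lam))
print(mean, second_moment)  # close to lam and lam + lam^2
```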


If $X$ is a discrete, integer-valued random variable, then we can use the z-transform in place of the characteristic function:

$$G_X(z) = E[z^X] = \sum_{k=-\infty}^{\infty} z^k\,p_X(k)$$

This is called the probability generating function (PGF) for $p_X(k)$. Note that

$$\Phi_X(\omega) = G_X(z)\big|_{z = e^{\imath\omega}}$$

and

$$M_X(s) = G_X(z)\big|_{z = e^{s}}$$


Example 32.

Let $X$ be a binomial random variable with PMF $p_X(k) = \binom{n}{k} p^k (1 - p)^{n-k}$. Find $G_X(z)$.

Sol.

$$\begin{aligned}
G_X(z) = E[z^X] &= \sum_{k=0}^{n} \binom{n}{k} p^k (1 - p)^{n-k} z^k \\
&= \sum_{k=0}^{n} \binom{n}{k} (pz)^k (1 - p)^{n-k} \\
&= (pz + 1 - p)^n
\end{aligned}$$
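The binomial-theorem step in Example 32 can be verified numerically by comparing the defining sum with the closed form. The parameter values $n = 10$, $p = 0.3$, $z = 0.7$ below are arbitrary assumptions for illustration.

```python
import math

def binomial_pgf_sum(z: float, n: int, p: float) -> float:
    """Defining sum: sum_k C(n, k) * (p*z)^k * (1-p)^(n-k)."""
    return sum(
        math.comb(n, k) * (p * z) ** k * (1 - p) ** (n - k)
        for k in range(n + 1)
    )

def binomial_pgf_closed(z: float, n: int, p: float) -> float:
    """Closed form (p*z + 1 - p)^n."""
    return (p * z + 1 - p) ** n

n, p, z = 10, 0.3, 0.7  # illustrative values
print(binomial_pgf_sum(z, n, p), binomial_pgf_closed(z, n, p))
```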


Using a derivation similar to that used in the moment theorem, it is easy to show that, when $X$ takes only nonnegative integer values, the PMF of $X$ is given by

$$p_X(k) = \frac{1}{k!}\,\frac{d^k}{dz^k} G_X(z)\Big|_{z=0}$$

By taking the first two derivatives of $G_X(z)$ and evaluating the result at $z = 1$, it is possible to find the first two moments of $X$:

$$\frac{d}{dz} G_X(z)\Big|_{z=1} = \sum_k p_X(k)\,k z^{k-1}\Big|_{z=1} = \sum_k k\,p_X(k) = E[X]$$

$$\begin{aligned}
\frac{d^2}{dz^2} G_X(z)\Big|_{z=1} &= \sum_k k(k - 1)\,p_X(k)\,z^{k-2}\Big|_{z=1} \\
&= \sum_k k(k - 1)\,p_X(k) = E[X^2] - E[X]
\end{aligned}$$

Then we have

$$E[X] = G_X'(z = 1), \qquad \mathrm{Var}(X) = G_X''(z = 1) + E[X] - (E[X])^2$$
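As a worked instance that is not in the original slides, these formulas can be applied to the binomial PGF $G_X(z) = (pz + 1 - p)^n$ from Example 32:

$$G_X'(z) = np\,(pz + 1 - p)^{n-1}, \qquad G_X''(z) = n(n - 1)p^2\,(pz + 1 - p)^{n-2}$$

$$E[X] = G_X'(z = 1) = np$$

$$\mathrm{Var}(X) = G_X''(z = 1) + E[X] - (E[X])^2 = n(n - 1)p^2 + np - n^2p^2 = np(1 - p)$$

This agrees with the familiar mean and variance of a binomial random variable.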


Example 33.

Let $X$ be a Poisson random variable with parameter $\lambda$. Then

$$G_X(z) = M_X(s)\big|_{z = e^{s}} = e^{\lambda(e^{s} - 1)}\big|_{z = e^{s}} = e^{\lambda(z - 1)}$$

Moreover,

$$E[X] = G_X'(z = 1) = \lambda e^{\lambda(z - 1)}\big|_{z=1} = \lambda$$

$$G_X''(z = 1) = \lambda^2 e^{\lambda(z - 1)}\big|_{z=1} = \lambda^2$$

$$\mathrm{Var}(X) = G_X''(z = 1) + E[X] - (E[X])^2 = \lambda$$


Chernoff Inequality

Another way to generalize the Markov inequality is to apply it to an exponential function of $X$. Let $X$ be an arbitrary random variable. Then for any $a \in \mathbb{R}$ and $s > 0$ we have

$$P[X \ge a] = P[e^{sX} \ge e^{sa}] \le \frac{E[e^{sX}]}{e^{sa}} = \frac{M_X(s)}{e^{sa}}$$

Similarly,

$$P[X \le a] = P[e^{-sX} \ge e^{-sa}] \le \frac{E[e^{-sX}]}{e^{-sa}} = \frac{M_X(-s)}{e^{-sa}}$$

Theorem 9 (Chernoff Inequality)

For any $a \in \mathbb{R}$ and $s > 0$,

$$P[X \ge a] \le \frac{M_X(s)}{e^{sa}}, \qquad P[X \le a] \le \frac{M_X(-s)}{e^{-sa}}$$


Example 34. Let $X \sim \mathcal{N}(0, 1)$. For any $a > 0$ we have

$$Q(a) = P[X \ge a] \le \frac{M_X(s)}{e^{as}} = e^{\frac{s^2}{2} - as}$$

by the Chernoff inequality. Note

$$\frac{d}{ds}\,e^{\frac{s^2}{2} - as} = (s - a)\,e^{\frac{s^2}{2} - as}$$

and

$$\frac{d^2}{ds^2}\,e^{\frac{s^2}{2} - as} > 0$$

showing that the upper bound is convex in $s$. Hence we have

$$Q(a) \le \inf_{s > 0} e^{\frac{s^2}{2} - as} = e^{\frac{s^2}{2} - as}\Big|_{s=a} = e^{-\frac{a^2}{2}}$$

Corollary 10

For $a > 0$,

$$Q(a) \le e^{-\frac{a^2}{2}}$$
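The bound of Corollary 10 can be compared with the exact Gaussian tail. The sketch below assumes the standard identity $Q(x) = \tfrac{1}{2}\,\mathrm{erfc}(x/\sqrt{2})$ and scans a grid of $s$ values to illustrate that $s = a$ gives the smallest Chernoff bound; the grid and the tested values of $a$ are illustrative choices.

```python
import math

def q_function(x: float) -> float:
    """Gaussian tail probability Q(x) = P[X >= x] for X ~ N(0, 1)."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))

def chernoff_bound_at(a: float, s: float) -> float:
    """Chernoff bound M_X(s) / e^{as} = exp(s^2/2 - a*s) for X ~ N(0, 1)."""
    return math.exp(s * s / 2.0 - a * s)

def chernoff_bound(a: float) -> float:
    """Optimized bound exp(-a^2/2), attained at s = a."""
    return math.exp(-a * a / 2.0)

for a in (0.5, 1.0, 2.0, 3.0):
    # Smallest bound found on a grid of s values in (0, 6].
    grid_min = min(chernoff_bound_at(a, 0.01 * i) for i in range(1, 601))
    print(a, q_function(a), chernoff_bound(a), grid_min)
```

For each tested $a$, the exact tail $Q(a)$ lies below $e^{-a^2/2}$, and no grid point gives a smaller bound than $s = a$.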
