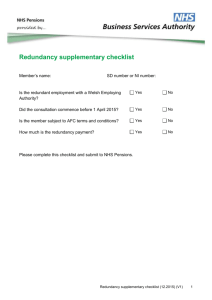

SCIS&ISIS 2014, Kitakyushu, Japan, December 3-6, 2014

Multi-Root I/O Virtualization Based Redundant Systems

Sendren Sheng-Dong Xu1,*, Member, IEEE, Chia-Hong Wang1, Teng-Chang Chang1, and Shun-Feng Su1,2, Fellow, IEEE

1 Graduate Institute of Automation and Control, National Taiwan University of Science and Technology, Taipei, Taiwan
2 Department of Electrical Engineering, National Taiwan University of Science and Technology, Taipei, Taiwan
*E-mail: sdxu@mail.ntust.edu.tw

Abstract—Redundancy, a method designed to prevent failures caused by software or hardware problems, is one of the most common techniques in fault-tolerant systems. In this paper, we present a multi-root I/O virtualization (MR-IOV) based redundant system architecture that offers high performance, reliability, and scalability, improving on the conventional redundant architecture, which relies on a hardware multiplexer for failover. To address the drawbacks of this conventional approach, we propose a redundant architecture that saves the primary host's states in shared memory, so that the backup system can apply them to take over from the failed primary host. The experimental results show that the proposed architecture is feasible and better than the conventional redundant architecture.

Keywords—fault tolerance, fault-tolerant systems, multi-root I/O virtualization (MR-IOV), PCI Express, redundant systems, single-root I/O virtualization (SR-IOV)

978-1-4799-5955-6/14/$31.00 ©2014 IEEE

I. INTRODUCTION

Redundancy is the duplication of critical components or functions of a system with the intention of increasing the system's reliability. Usually taking the form of a backup or fail-safe, it is the property of a system, or of elements of a system, being backed up by secondary resources. Redundant configurations have been used in research and design to provide system fault tolerance. Fault tolerance is concerned with the continuation of correct system operation despite an internal fault [1]. It is a way to design reliable systems from unreliable components, and it is achieved through time (temporal) redundancy [2]-[3], information redundancy [4], software redundancy [5], and hardware redundancy [6]-[8].

Hardware redundancy is one of the most common applications of fault-tolerant systems and is designed to prevent failures caused by hardware components. Typically, components have multiple backups and are separated into smaller "segments" that act to contain a fault, and extra redundancy is built into all physical connectors, power supplies, fans, etc. [9]. In hardware redundancy, N identical copies of a program are executed in N hardware channels. For example, STAR [13] and FTSC [14] have N = 2; C.vmp [15], FTMP [16], and SIFT [17] have N = 3; the Space Shuttle [18] has N = 4; and DEDIX [19] has N ranging from 2 to 20 [10]. Software that uses real-time redundancy for fault tolerance is based on nullifying programming errors or substituting static "emergency" subprograms for crashed programs. There are many ways to perform such fault regulation, depending on the application and the available hardware [11].

Recently, virtualization has become a feasible method for redundancy. Virtualization can readily provide the functions of physical resources, including the CPU, OS, and peripherals. Hardware virtualization, or platform virtualization, refers to the creation of a virtual machine that acts like a real computer with an operating system. Software executed on these virtual machines is separated from the underlying hardware resources. However, a failure of a hosting server becomes a serious problem in consolidated server systems that use virtualization, because virtual machines depend on the physical devices and the virtualization platform of their hosting server. When a hosting server goes down because any of its components fails, all virtual machines on that server go down with it. The more virtual machines a hosting server hosts, the more serious the damage its failure causes [12].

This paper presents an application of MR-IOV to establish a redundant configuration that protects against host server failures using multiple host servers. The rest of the paper is organized as follows. Section II describes related work and the configuration and requirements of consolidated server systems that use virtualization over a PCI Express switch. Section III provides a problem definition for determining redundant virtual machine configurations while minimizing the number of required hosting servers. Section IV discusses the experiments and compares the architectures for redundant applications. Section V summarizes and concludes the paper.

II. BASIC KNOWLEDGE AND RELATED WORKS

The conventional redundant architecture is shown in Fig. 1. It uses a hardware multiplexer or a switching method to achieve host failover. Each host's state pin informs the hardware multiplexer, whose control logic determines which source is routed to the destination.

So far, PCI Express has succeeded primarily as a fan-out interconnect, enabling CPUs, I/O, and storage devices, all of which have PCI Express access points, to communicate. It has also penetrated more sophisticated applications, such as host failover, and the PCI Express interconnect standard has even been used as a backplane to connect PCI Express based subsystems.
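For comparison with the proposed method, the control logic of the conventional multiplexer in Fig. 1 can be modeled in a few lines. The following is a minimal Python sketch, not a description of any particular multiplexer: the class `FailoverMux`, its `update` method, the `Source` values, and the non-revertive policy (the multiplexer stays on the secondary even after the primary's state pin recovers) are assumptions of ours. The paper states only that the control logic chooses a source from the hosts' state pins.

```python
from enum import Enum


class Source(Enum):
    """Which host the multiplexer currently routes to the shared device."""
    PRIMARY = "primary"
    SECONDARY = "secondary"
    NONE = "none"  # neither host reports healthy


class FailoverMux:
    """Hypothetical model of a conventional failover multiplexer.

    Inputs are the two hosts' state pins (True = healthy). The policy is
    non-revertive: after a failover the mux stays on the secondary until
    the secondary itself fails (an assumption of this sketch).
    """

    def __init__(self) -> None:
        self.selected = Source.PRIMARY

    def update(self, primary_ok: bool, secondary_ok: bool) -> Source:
        if self.selected is Source.PRIMARY and not primary_ok:
            # Primary's state pin dropped: switch to the backup if it is healthy.
            self.selected = Source.SECONDARY if secondary_ok else Source.NONE
        elif self.selected is Source.SECONDARY and not secondary_ok:
            self.selected = Source.PRIMARY if primary_ok else Source.NONE
        elif self.selected is Source.NONE:
            # Reconnect to whichever host is healthy, preferring the primary.
            if primary_ok:
                self.selected = Source.PRIMARY
            elif secondary_ok:
                self.selected = Source.SECONDARY
        return self.selected


if __name__ == "__main__":
    mux = FailoverMux()
    for pins in [(True, True), (False, True), (True, True)]:
        print(pins, mux.update(*pins).value)
```

Because the multiplexer only re-routes the connection, nothing in this logic carries the primary host's device or transaction state over to the newly selected host.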
Owing to the performance of PCI Express Gen3 and its widespread adoption in devices, this popular interconnect has become an attractive alternative to current solutions as a fabric for data center and cloud computing applications. The PLX PEX8796 [20] offers multi-host PCI Express switching capability, enabling users to connect multiple hosts to their respective endpoints through a scalable, high-bandwidth, non-blocking interconnect for a wide variety of applications. Its enhanced architecture allows the device to be configured in single-host or multi-host mode. In multi-host mode, the PEX8796 can be configured with up to four upstream ports to host systems, each with its own dedicated downstream ports. The hosts can communicate their status to each other by accessing special registers, namely the doorbell and mailbox registers.

PCI Express Gen3 switches retain non-transparent bridges (NTBs) but add significant enhancements. For host-to-host communication, look-up table address translation in the NTBs provides more flexibility and better performance, allowing small, successive local windows to be scattered across the global space, and protecting local memory from external corruption by means of write-enable and read-enable permissions and a requester ID (RID) check field in each entry. Finally, the addition of a DMA messaging engine changes the host-to-host communication model from load/store operations to a networking model, which allows applications written to standard networking APIs to run over a PCI Express network essentially unchanged.

In a dual-host application, provision is made for both a primary (active) host and a secondary (backup) host. During normal operation, the primary sends heartbeat messages to the secondary to indicate that it is still alive. Checkpoint messages containing the current state and transaction history are also sent periodically from the primary to the secondary. The job of the backup host is to monitor the state of the primary and, upon detecting its failure, to take over as primary host, continuing system operation from the last valid checkpoint. In the model we use, the secondary (backup) host is connected to the system through a non-transparent bridge, while the primary (active) host is connected through a transparent bridge. The secondary host may connect directly to its root complex or through a fabric connection. In the latter case, both hosts may be active simultaneously, and heartbeat and checkpoint messages then flow in both directions.

The BARs on both sides of the non-transparent bridge are used to create tunnels through which each host may send messages to the other. The doorbell registers in the NTB may be used for heartbeat messages, and the memory access tunnels are used for checkpoints and other data transfers. Failure of the primary host is detected when the secondary host fails to receive a certain number of the regularly scheduled heartbeat messages. As part of the failover process, the secondary host's port copies the primary host's SR-IOV status in the management port. Failure of the primary host is likely to leave switch buffers backlogged and device endpoints with incomplete transactions. During failover, the secondary host causes the buffers to be flushed and terminates incomplete transactions at the endpoints. It then reconfigures the system with itself as host and restarts the devices and applications in an application-specific way, using checkpoint data in the management CPU [21].

Fig. 1. The conventional redundant architecture.

III. PROPOSED METHOD

Multiple hosts are supported by a non-transparent bridge and a DMA controller emulating an RDMA NIC at every host port. Hosts communicate by exchanging ID-routed vendor-defined messages in the global space, which is isolated from the hosts by the non-transparent bridges. We use vendor-provided PF (physical function) and VF (virtual function) drivers to implement MR sharing of SR-IOV endpoint functions. This is achieved by a CSR redirection process that allows the management CPU to snoop and intervene on configuration space transfers and to configure the requisite address and ID translations transparently to software running on the servers.

Our system architecture is illustrated in Fig. 2. It supports multi-root sharing of SR-IOV endpoints, using a management port to manage the virtual and physical functions in each SR-IOV endpoint. The virtual functions of multiple SR-IOV endpoints can be shared among multiple hosts or systems, and a physical function can be shared by several virtual functions in the same endpoint. The management port is used for I/O management and fabric routing; it connects to all switches in the fabric through a separate control plane. The procedure for creating a virtual SR-IOV endpoint shared by multiple hosts is as follows:

Step 1: The host sends a configuration transaction to the management CPU through the management port.
Step 2: The management CPU receives the configuration request from memory.
Step 3: The management CPU then issues the configuration transaction to the PCIe endpoint connected to the downstream port.
Step 4: The management CPU returns the transaction status to the host.

In this scenario, we assume that Host 2 is the redundant (backup) system. We create a shared memory area in Host 2 that allows the primary system (Host 1) to save the MR-IOV status of its virtual functions. Our method uses inter-process communication (IPC) to create a connection channel between Host 1 and Host 2. Once the connection is established, the hosts periodically synchronize and exchange these data and status through the shared memory.
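The periodic save in this scenario, combined with the requirement in Section II that the backup resume from the last valid checkpoint, suggests keeping more than one checkpoint in the shared area. The following minimal Python sketch shows one way to do that with a double-buffered layout. The paper does not specify the format of the shared memory, so the two-slot layout, the sequence-number and CRC-32 header, the 256-byte slot size, and the names `CheckpointRegion`, `write`, and `last_valid` are our assumptions; a `bytearray` stands in for the memory Host 1 would actually write through the non-transparent bridge.

```python
import struct
import zlib
from typing import Optional, Tuple

# Hypothetical slot header: sequence number (u64), payload length (u32), CRC-32 (u32).
HEADER = struct.Struct("<QII")
SLOT_SIZE = 256  # hypothetical size of each checkpoint slot, in bytes


class CheckpointRegion:
    """Two-slot checkpoint area standing in for the shared memory in Host 2."""

    def __init__(self) -> None:
        # A plain bytearray stands in for the window Host 1 would map through the NTB.
        self.buf = bytearray(2 * SLOT_SIZE)
        self.seq = 0  # last sequence number written by the primary

    def write(self, payload: bytes) -> None:
        """Primary (Host 1): save a new checkpoint into the older of the two slots."""
        if len(payload) > SLOT_SIZE - HEADER.size:
            raise ValueError("checkpoint does not fit in one slot")
        self.seq += 1
        off = (self.seq % 2) * SLOT_SIZE
        # Header first, then payload: a write torn before the payload completes
        # leaves a CRC that does not match, so the slot is rejected on read.
        HEADER.pack_into(self.buf, off, self.seq, len(payload), zlib.crc32(payload))
        start = off + HEADER.size
        self.buf[start:start + len(payload)] = payload

    def last_valid(self) -> Optional[Tuple[int, bytes]]:
        """Backup (Host 2): return (seq, payload) of the newest intact checkpoint."""
        best: Optional[Tuple[int, bytes]] = None
        for slot in range(2):
            off = slot * SLOT_SIZE
            seq, length, crc = HEADER.unpack_from(self.buf, off)
            if seq == 0 or length > SLOT_SIZE - HEADER.size:
                continue  # slot never written, or header is garbage
            start = off + HEADER.size
            payload = bytes(self.buf[start:start + length])
            if zlib.crc32(payload) == crc and (best is None or seq > best[0]):
                best = (seq, payload)
        return best
```

On an alarm from the management CPU, Host 2 would call `last_valid()` and apply the returned state. Because the primary alternates between slots, a failure in the middle of `write` can only damage the slot being written, leaving the previous checkpoint available.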
When Host 2 receives an event or alarm signal from the management CPU indicating that Host 1 has failed, it applies the status saved in shared memory in place of Host 1's status and continues processing the remaining tasks. After Host 1 has been reset or has fully resumed, it takes these resources back from Host 2. Finally, the management CPU instructs Host 2 to deliver these tasks and status back to Host 1.

Fig. 2. The proposed system architecture.

IV. EXPERIMENTAL RESULTS

Our experimental environment runs 64-bit Linux RHEL 6.3. The management software, NIC driver, and RDMA driver (with OpenFabrics 3.5 stack support [22]) are available from PLX support [20]. The shared I/O driver is provided by the HBA vendor.

Table I compares the two redundant architectures. Unlike the conventional method, our architecture is based on virtualization to recover a failed host system. It uses a software stack to isolate the physical and virtual functions in the SR-IOV endpoint, and it creates a shared memory area through which the hosts of the multi-host system exchange their status.

TABLE I. ARCHITECTURE COMPARISON

| Item | Conventional | Ours |
|---|---|---|
| Architecture | Simple | Complex |
| – | Yes | Yes |
| – | No (hardware-type) | Yes (software-type) |
| System reliable | No | Yes |
| Maintenance effort | Difficult | Easy |
| Virtualization support | No | Yes |
| MR/SR-IOV support | No | Yes |
| System scale | Host/device number^a | Scalable |
| Flexibility | – | Yes |

a. Depending on hardware switching.

V. CONCLUSION

In this paper, we discuss a class of redundant systems and architectures. The conventional redundant architecture adopts a hardware multiplexer to switch the connected device over from a failed host system. It depends on a heartbeat or status signal to determine the failover source. When the primary host fails, it is disconnected from the SR-IOV device. To keep the state, we proposed a redundant architecture that saves the status in shared memory; the secondary host applies this state to take over from the failed primary host. Finally, the experimental results show that the proposed architecture is feasible and better than the conventional redundant architecture.

REFERENCES

[1] A. Avizienis, J. C. Laprie, B. Randell, and C. Landwehr, "Basic concepts and taxonomy of dependable and secure computing," IEEE Trans. Dependable and Secure Computing, vol. 1, no. 1, pp. 11-33, Jan. 2004.
[2] S. Borkar, "Designing reliable systems from unreliable components: The challenges of transistor variability and degradation," IEEE Micro, vol. 25, no. 6, pp. 10-16, Nov. 2005.
[3] A. Timor, A. Mendelson, Y. Birk, and N. Suri, "Using underutilized CPU resources to enhance its reliability," IEEE Trans. Dependable and Secure Computing, vol. 7, no. 1, pp. 94-109, 2010.
[4] A. Ejlali, B. M. Al-Hashimi, M. T. Schmitz, P. Rosinger, and S. G. Miremadi, "Combined time and information redundancy for SEU-tolerance in energy-efficient real-time systems," IEEE Trans. Very Large Scale Integration (VLSI) Systems, vol. 14, no. 4, pp. 323-335, Apr. 2006.
[5] T. Tsai, "Fault tolerance via N-modular software redundancy," Proc. 28th Int'l Symp. Fault-Tolerant Computing (FTCS-28), pp. 201-206, 1998.
[6] S. Mitra, N. R. Saxena, and E. J. McCluskey, "A design diversity metric and analysis of redundant systems," IEEE Trans. Computers, vol. 51, no. 5, pp. 498-510, May 2002.
[7] W. Dabney, L. Etzkorn, and G. W. Cox, "A fault-tolerant approach to test control utilizing dual-redundant processors," Advances in Eng. Software, vol. 39, pp. 371-383, 2008.
[8] R. Samet, "Recovery device for real-time dual-redundant computer systems," IEEE Trans. Dependable and Secure Computing, vol. 8, no. 3, pp. 391-403, May-June 2011.
[9] "Fault-tolerant computer system," Wikipedia, http://en.wikipedia.org/wiki/Fault-tolerant_computer_system
[10] R. Samet, "Recovery device for real-time dual-redundant computer systems," IEEE Trans. Dependable and Secure Computing, vol. 8, no. 3, pp. 391-403, May-June 2011.
[11] D. K. Pradhan, Fault-Tolerant Computer System Design, pp. 221-235, 1996.
[12] F. Machida, M. Kawato, and Y. Maeno, "Redundant virtual machine placement for fault-tolerant consolidated server clusters," Network Operations and Management Symposium (NOMS), vol. 32, no. 39, pp. 19-23, April 2010.
[13] A. Avizienis, G. C. Gilley, F. P. Mathur, D. A. Rennels, J. A. Rohr, and D. K. Rubin, "The STAR (Self-Testing and Repairing) computer: an investigation of the theory and practice of fault-tolerant computer design," IEEE Trans. Computers, vol. 20, no. 11, pp. 1312-1321, Nov. 1971.
[14] D. D. Burchby, L. W. Kern, and W. A. Sturm, "Specification of the fault-tolerant spaceborne computer (FTSC)," Proc. 1976 International Symposium on Fault-Tolerant Computing, pp. 129-133, June 1976.
[15] D. Siewiorek, M. Canepa, and S. Clark, "C.vmp: the architecture of a fault-tolerant multiprocessor," Proc. 1977 International Symposium on Fault-Tolerant Computing, June 1977.
[16] A. L. Hopkins, T. B. Smith, and J. H. Lala, "FTMP: a highly reliable fault-tolerant multiprocessor for aircraft," Proc. IEEE, vol. 66, no. 10, Oct. 1978.
[17] J. H. Wensley, L. Lamport, J. Goldberg, M. W. Green, K. N. Levitt, P. M. Melliar-Smith, R. E. Shostak, and C. B. Weinstock, "SIFT: design and analysis of a fault-tolerant computer for aircraft control," Proc. IEEE, vol. 66, no. 10, pp. 1240-1255, Oct. 1978.
[18] J. R. Sklaroff, "Redundancy management technique for space shuttle computers," IBM J. Research and Development, vol. 20, pp. 20-28, 1976.
[19] A. Avizienis, P. Gunningberg, J. P. J. Kelly, R. T. Lyu, L. Strigini, P. J. Traverse, K. S. Tso, and U. Voges, "The UCLA DEDIX system: a distributed testbed for multiple version software," International Symposium on Fault Tolerant Computing, pp. 126-134, June 1985.
[20] PEX8796 datasheet ver. 1.2, PLX Technology, Inc., 24 July 2012.
[21] J. Regula, "Using Non-transparent Bridging in PCI Express Systems," PLX Technology, Inc., 1 June 2004.
[22] Open Fabrics Alliance, https://www.openfabrics.org/index.php