Deep Learning Lecture Notes: Regression, Classification, SVM


Week 1

Deep learning is based on the approach of having many hierarchy levels. The hierarchy of concepts enables the computer to learn complicated concepts by building them out of simpler ones.

A computer can reason automatically about statements in formal languages using logical inference rules. This is known as the knowledge base approach to AI.

AI systems need the ability to acquire their own knowledge by extracting patterns from raw data. This capability is known as machine learning.

The performance of simple machine learning algorithms depends heavily on the representation of the data they are given.

Each piece of information included in the representation is known as a feature.

Representation learning: use machine learning to discover not only the mapping from representation to output but also the representation itself.

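- Learned representations often result in much better performance than can be obtained with hand-designed representations.

- An autoencoder is the combination of an encoder function, which converts the input into a different representation, and a decoder function, which converts the new representation back into the original format.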

When designing features or algorithms for learning features, our goal is usually to separate the factors of variation that explain the observed data.

- Most applications require us to disentangle the factors of variation and discard the ones that we do not care about.

Deep learning solves the central problem in representation learning by introducing representations that are expressed in terms of other, simpler representations.

- The quintessential example of a deep learning model is the feedforward deep network, or multilayer perceptron (MLP). A multilayer perceptron is just a mathematical function mapping some set of input values to output values. The function is formed by composing many simpler functions.

Visible layer: contains the variables that we are able to observe.

Hidden layers: extract increasingly abstract features.

- Their values are not given in the data; instead, the model must determine which concepts are useful for explaining the relationships in the observed data.

For machine learning, you have features x which are used to make predictions ŷ.

Labels are what you want to predict.

Features are the variables you use to make the prediction. They make up the representation.

The objective of regression: predict a continuous output value (a scalar), given an input vector.

- ŷ = f(x; w)

- ŷ = prediction

- f = regression function

- x = input vector

- w = parameters to learn

- The input is transformed using the parameters

Linear regression:

- ŷ = f(x; w) = xᵀw

- ᵀ denotes the transpose, so xᵀw is the dot product of x and w; the number of parameters equals the number of features.

- The prediction is the weighted sum of the features, with the parameters as weights. This is computed by taking the dot product of the two vectors.

Weights and biases:

- If the input is a vector of zeros, x = [0, 0, …, 0]ᵀ, the output is always 0.

- To overcome this, we add a bias (also known as an intercept):

- x = [x, 1]

- w = [w, b]

- So we always have one more parameter to learn.

- The bias is an extra parameter that is the same for all data points.
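A minimal pure-Python sketch of the forward pass ŷ = xᵀw and of the bias trick x = [x, 1], w = [w, b]; the helper names `predict` and `add_bias` are illustrative only.

```python
def predict(x, w):
    """Linear regression forward pass: y_hat = x^T w, i.e. a dot product."""
    if len(x) != len(w):
        raise ValueError("need exactly one parameter per feature")
    return sum(xi * wi for xi, wi in zip(x, w))


def add_bias(x):
    """Append a constant 1 so that the last weight acts as the bias b."""
    return list(x) + [1.0]


w = [2.0, -1.0]  # one weight per feature
b = 0.5          # bias / intercept
zero_input = [0.0, 0.0]

print(predict(zero_input, w))                  # 0.0: without a bias, a zero input always gives 0
print(predict(add_bias(zero_input), w + [b]))  # 0.5: with the bias trick the output is b
```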

Goodness of fit: given a machine learning model, how good is it? We measure this and give it a score.

- Typically, we measure the difference between the ground truth and the prediction.

- Loss function: (yₙ − ŷₙ)²

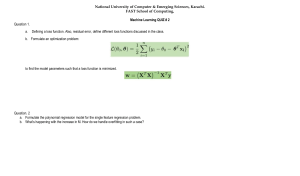

- Learning objective (SSE), with ŷₙ = xₙᵀw:

$s(\mathbf{w}) = \frac{1}{2}\sum_n (y_n - \mathbf{x}_n^\top \mathbf{w})^2$

- The error is squared to punish bigger mistakes/differences more heavily.

Linear regression forward and loss: parameters are needed to compute the loss, while the loss is needed to know how well the parameters perform.

The best parameters w are the ones with the lowest sum of squared errors (SSE).

To find the minimum SSE, we take the derivative of the SSE and set it to zero.

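- The derivative of $s(\mathbf{w}) = \frac{1}{2}\sum_n (y_n - \mathbf{x}_n^\top \mathbf{w})^2$ is:

$\frac{d}{d\mathbf{w}} s(\mathbf{w}) = -\sum_n (y_n - \mathbf{x}_n^\top \mathbf{w})\,\mathbf{x}_n$

- We transform it to vectorised form, writing X for the matrix whose columns are the data points xₙ and y for the row vector of labels:

$\frac{d}{d\mathbf{w}} s(\mathbf{w}) = -(\mathbf{y} - \mathbf{w}^\top X)\,X^\top$

- Setting the derivative to 0 gives: $-(\mathbf{y} - \mathbf{w}^\top X)\,X^\top = 0$

- Solving this gives: $\mathbf{w} = (X X^\top)^{-1} X \mathbf{y}^\top$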
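To make the closed-form solution concrete, here is a sketch for the simplest case (one feature plus a bias), where X is 2 × N and X Xᵀ is a 2 × 2 matrix that can be inverted by hand. Restricting to one feature is an assumption made to keep the example stdlib-only; with more features a linear-algebra library would be used.

```python
def fit_closed_form(xs, ys):
    """Solve w = (X X^T)^{-1} X y^T for a single feature plus a bias.

    X is the 2 x N matrix whose columns are [x_n, 1].
    """
    n = len(xs)
    # A = X X^T = [[sum x^2, sum x], [sum x, N]]
    a11 = sum(x * x for x in xs)
    a12 = sum(xs)
    a22 = float(n)
    # c = X y^T = [sum x*y, sum y]
    c1 = sum(x * y for x, y in zip(xs, ys))
    c2 = sum(ys)
    det = a11 * a22 - a12 * a12
    if det == 0:
        raise ValueError("X X^T is singular (e.g. all x values are equal)")
    # The inverse of [[a11, a12], [a12, a22]] is [[a22, -a12], [-a12, a11]] / det
    w = (a22 * c1 - a12 * c2) / det
    b = (-a12 * c1 + a11 * c2) / det
    return w, b


print(fit_closed_form([0.0, 1.0, 2.0, 3.0], [1.0, 3.0, 5.0, 7.0]))  # (2.0, 1.0): data from y = 2x + 1
```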

Linear regression can be solved in one equation (a closed-form solution). Unfortunately, most machine learning models cannot be solved this directly: they have no closed-form solution, and many problems are non-convex with more than one minimum, so the mathematical approach above does not work.

Gradient descent:

- A slow, iterative way to reach the nearest (local) minimum

- The gradient tells us the slope of a function

- Greedy approach

- Useful when the problem is non-convex

- Step-by-step guide:

1. Initialise the parameters randomly

2. Take the gradient and update the parameters (keep computing new parameters and their gradient until a minimum is found)

3. Stop when at a minimum and you can't go lower, meaning a new step is no better than the previous step.
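The three steps above can be sketched for linear regression with one feature and a bias. The learning rate, the tolerance `tol` used as the "no better than the previous step" test, and the seeded random initialisation are illustrative choices, not values from the notes.

```python
import random


def sse(w, b, xs, ys):
    """s(w) = 1/2 * sum_n (y_n - (w * x_n + b))^2."""
    return 0.5 * sum((y - (w * x + b)) ** 2 for x, y in zip(xs, ys))


def sse_gradient(w, b, xs, ys):
    """Derivatives of s with respect to w and b: -sum_n residual_n * [x_n, 1]."""
    gw = gb = 0.0
    for x, y in zip(xs, ys):
        residual = y - (w * x + b)
        gw -= residual * x
        gb -= residual
    return gw, gb


def gradient_descent(xs, ys, lr=0.05, max_steps=10_000, tol=1e-12, seed=0):
    rng = random.Random(seed)
    w, b = rng.uniform(-1, 1), rng.uniform(-1, 1)  # 1. initialise randomly
    loss = sse(w, b, xs, ys)
    for _ in range(max_steps):
        gw, gb = sse_gradient(w, b, xs, ys)
        w, b = w - lr * gw, b - lr * gb            # 2. step against the gradient
        new_loss = sse(w, b, xs, ys)
        if loss - new_loss < tol:                  # 3. stop when a step no longer helps
            break
        loss = new_loss
    return w, b


print(gradient_descent([0.0, 1.0, 2.0, 3.0], [1.0, 3.0, 5.0, 7.0]))  # close to (2, 1)
```

Unlike the closed-form solution, this only approaches the optimum; it also works unchanged for losses that have no closed-form minimiser.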

Regression is nothing more than finding the parameters that minimise our squared errors.

Parameters are values that we learn from the data.

Hyperparameters are values that we would like to learn but cannot learn from the data directly, so we have to set them ourselves.

The learning rate (denoted λ here) is an important hyperparameter.

Setting the step size in gradient descent:

- Too low: a small learning rate requires many updates before reaching the minimum point.

- Just right: the optimal learning rate swiftly reaches the minimum point.

- Too high: a learning rate that is too large causes drastic updates, which lead to divergent behaviour and overshooting the minimum.
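The three regimes can be reproduced on the toy function f(w) = w² (gradient 2w), chosen here only because every update simply multiplies w by (1 − 2·lr).

```python
def descend(lr, w0=1.0, steps=20):
    """Gradient descent on f(w) = w^2 (gradient 2w); returns the final w."""
    w = w0
    for _ in range(steps):
        w = w - lr * 2 * w
    return w


for lr in (0.01, 0.4, 1.1):
    print(f"learning rate {lr}: w after 20 steps = {descend(lr):.3g}")
```

With lr = 0.01 each step multiplies w by 0.98, so after 20 steps it is still far from the minimum at 0; with lr = 0.4 the factor is 0.2 and w is essentially 0; with lr = 1.1 the factor is −1.2, so w overshoots, flips sign every step and grows without bound.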

Stochastic gradient descent:

- Go over subsets of examples (mini-batches): compute the gradient for a subset and update.

- Solves the problem of having to go over all samples for every single update, as in standard gradient descent.
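A minimal mini-batch SGD sketch for the same one-feature linear regression; the batch size, learning rate, epoch count and per-epoch shuffling are assumptions for illustration.

```python
import random


def sgd(xs, ys, lr=0.02, batch_size=2, epochs=2000, seed=0):
    """Mini-batch SGD for y_hat = w * x + b with the squared error loss."""
    rng = random.Random(seed)
    w, b = rng.uniform(-1, 1), rng.uniform(-1, 1)
    order = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(order)                               # new random subsets every epoch
        for start in range(0, len(order), batch_size):
            batch = order[start:start + batch_size]
            gw = gb = 0.0
            for i in batch:                              # gradient on this subset only
                residual = ys[i] - (w * xs[i] + b)
                gw -= residual * xs[i]
                gb -= residual
            w -= lr * gw / len(batch)                    # average over the batch, then update
            b -= lr * gb / len(batch)
    return w, b


w, b = sgd([0.0, 1.0, 2.0, 3.0, 4.0, 5.0], [1.0, 3.0, 5.0, 7.0, 9.0, 11.0])
print(w, b)  # close to 2 and 1
```

Each update looks at only `batch_size` examples, so an update costs the same no matter how large the dataset is.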

Linear regressions is a one layer network with:

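- Forward propagation: compute ŷ = wᵀx

- Backward propagation: compute the gradient of the loss with respect to w

- Loss: squared difference $\frac{1}{2}(y - \hat{y})^2$, with gradient $-(y - \hat{y})$ with respect to ŷ (and $-(y - \hat{y})\,\mathbf{x}$ with respect to w)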

Polynomial regression:

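- New forward function: $\hat{y} = \mathbf{w}_1^\top \mathbf{x} + \mathbf{w}_2^\top \mathbf{x}^2 + \dots + \mathbf{w}_n^\top \mathbf{x}^n$, with a separate weight vector for each power (powers taken element-wise)

- The higher the value of n, the more non-linear the regression function.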
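A sketch of the polynomial forward function for a scalar input (the scalar restriction and the trailing bias feature are simplifying assumptions). The point it shows is that polynomial regression is linear regression on expanded features.

```python
def poly_features(x, degree):
    """Map a scalar x to [x, x^2, ..., x^degree, 1]; the trailing 1 is the bias feature."""
    return [x ** p for p in range(1, degree + 1)] + [1.0]


def predict_poly(x, w):
    """Polynomial regression forward pass: still a dot product, just on expanded features."""
    features = poly_features(x, degree=len(w) - 1)
    return sum(f * wi for f, wi in zip(features, w))


# w = [w_1, w_2, b]: with w_1 = 0, w_2 = 1 and b = 0 the model computes y_hat = x^2
print(predict_poly(3.0, [0.0, 1.0, 0.0]))  # 9.0
```

Because the model is still linear in w, the closed-form solution or gradient descent from before can be reused unchanged on the expanded features.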

You can reduce overfitting by adding more data, but this requires a lot of data.

Tackling overfitting with Regularisation:

- Data point xₙ

- True value yₙ

- Predicted value ŷₙ = f(xₙ; w)

- Learning objective: $\min_{\mathbf{w}} \frac{1}{2}\sum_n (y_n - \mathbf{w}^\top \mathbf{x}_n)^2 + \lambda R(\mathbf{w})$

- λ is a hyperparameter (here the regularisation weight, not the learning rate)

- With $R(\mathbf{w}) = \sum_d w_d^2$

- The lower the values of the weights, the lower the penalty term.

- Intuition: high weights are key factors in overfitting

- Find a balance between fit and complexity

- Using only R(w) would make w = 0 the best option

- Regularisation adds a penalty term to the model's optimisation objective, discouraging overly complex models by penalising large parameter values or high complexity.
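For a single weight without a bias (a simplification chosen so the minimiser has a one-line formula), setting the derivative of ½Σₙ(yₙ − w xₙ)² + λw² to zero gives w = Σxₙyₙ / (Σxₙ² + 2λ). The sketch shows how a larger λ shrinks the weight towards 0.

```python
def ridge_1d(xs, ys, lam):
    """Minimise 1/2 * sum_n (y_n - w x_n)^2 + lam * w^2 for one weight w (no bias).

    The derivative -sum_n (y_n - w x_n) x_n + 2 lam w is zero at
    w = sum_n x_n y_n / (sum_n x_n^2 + 2 lam).
    """
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + 2 * lam)


xs, ys = [1.0, 2.0, 3.0], [2.0, 4.0, 6.0]
print(ridge_1d(xs, ys, 0.0), ridge_1d(xs, ys, 7.0))  # 2.0 1.0: the penalty halves the weight
```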

Week 2

Regression vs Classification:

- Regression:

- Predict a continuous output given inputs

- Find a line that is as close as possible to all data points

- Classification

- Predict a discrete output given inputs

- Find a line that separates inputs of different classes

Logistic regression (is a classifier, not a regression):

- Logistic regression = linear regression + decision function

- Properties of a decision function:

- Cap outputs between two extreme values (e.g. 0 and 1)

- Have useful gradients

- Smoothly interpolate between two extremes

The sigmoid function (is a decision function):

- Is essential for classification.

- Given any input z the output is the probability or likelihood of a class.

- Input is any real value, output is between 0 & 1.

- $\sigma(z) = \frac{1}{1 + e^{-z}}$

- Differentiable: $\frac{d}{dz}\sigma(z) = \sigma(z)(1 - \sigma(z))$
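A small sketch of the sigmoid and its derivative, with a finite-difference check of σ′(z) = σ(z)(1 − σ(z)). The two-branch formula is a standard trick (an implementation choice, not part of the notes) to avoid overflow in `math.exp` for large |z|.

```python
import math


def sigmoid(z):
    """sigma(z) = 1 / (1 + e^{-z}), written in a form that avoids overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def sigmoid_grad(z):
    """d/dz sigma(z) = sigma(z) * (1 - sigma(z))."""
    s = sigmoid(z)
    return s * (1.0 - s)


# Compare the analytic derivative with a central finite difference
z, h = 1.3, 1e-5
numeric = (sigmoid(z + h) - sigmoid(z - h)) / (2 * h)
print(abs(numeric - sigmoid_grad(z)))  # should be tiny
```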

Logistic regression forward and loss:

- Forward function: ŷ = σ(wᵀx)

- The squared loss no longer makes sense, as we work with probabilities.

- New loss goal: if label = 0, low sigmoid output; if label = 1, high sigmoid output.

Likelihood:

- We want to predict values as close as possible to the correct labels.

- In other words, we want to maximise the likelihood:

$L = \prod_i \hat{y}_i^{\,y_i}(1 - \hat{y}_i)^{1 - y_i}$

- If the label is 1, we want the output to be as high as possible, which for the sigmoid function means as close to 1 as possible.

- If the label is 0, we want the output to be as low as possible, which for the sigmoid function means as close to 0 as possible.

- In machine learning we usually minimise, which leads to the (averaged) negative log likelihood:

$L = -\frac{1}{N}\sum_i \left(y_i \log(\hat{y}_i) + (1 - y_i)\log(1 - \hat{y}_i)\right)$

- So we minimise the negative log likelihood. Taking the log turns the product into a sum, and minimising the negative is equivalent to maximising the likelihood; optimisers are conventionally written as minimisers.

- Minimising the negative log likelihood for a single example:

$L = -\left(y \log(\sigma(\mathbf{w}^\top \mathbf{x})) + (1 - y)\log(1 - \sigma(\mathbf{w}^\top \mathbf{x}))\right)$

- Shorthand: $L = -(y \log(a) + (1 - y)\log(1 - a))$, with $a = \sigma(\mathbf{w}^\top \mathbf{x})$

- Derivative: $\frac{dL}{d\mathbf{w}} = (a - y)\,\mathbf{x}$, with $a = \sigma(\mathbf{w}^\top \mathbf{x})$
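Putting the forward function and the derivative (a − y)x together gives a complete, if tiny, logistic regression trainer. This sketch assumes batch gradient descent on the averaged loss, zero initialisation (fine here because the loss is convex) and feature vectors that already end in 1.0 for the bias.

```python
import math


def sigmoid(z):
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def train_logistic(xs, ys, lr=0.5, epochs=500):
    """Batch gradient descent on the averaged negative log likelihood.

    xs: feature vectors ending in a constant 1.0 (bias trick); ys: labels in {0, 1}.
    """
    w = [0.0] * len(xs[0])
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x, y in zip(xs, ys):
            a = sigmoid(dot(w, x))          # forward: a = sigma(w^T x)
            for d, xd in enumerate(x):
                grad[d] += (a - y) * xd     # dL/dw = (a - y) x
        w = [wd - lr * gd / len(xs) for wd, gd in zip(w, grad)]
    return w


xs = [[-2.0, 1.0], [-1.0, 1.0], [1.0, 1.0], [2.0, 1.0]]
ys = [0, 0, 1, 1]
w = train_logistic(xs, ys)
print([round(sigmoid(dot(w, x)), 3) for x in xs])  # low for label 0, high for label 1
```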

Support Vector Machines (margin based classifier):

- Another way of thinking about classification: margins

- Feature x is an n-dimensional representation; label y is binary, y ∈ {−1, 1}

- Goal: find weights w such that f(x, w) predicts y: ŷ = sign(f(x, w))

- Lines (hyperplanes) that separate points

- In a 2D example, the two input coordinates together form one point.

- We want large margins: space between the points and the line

- Balancing act between margins and errors: we want minimal errors and maximum margins.

- The further a point is from the hyperplane, the more confident the algorithm

- The magnitude of f(x, w) reflects the confidence

- The decision boundary (hyperplane) is where f(x, w) = 0

- When are predictions good? (When the hinge loss is low)

- Datapoint xn

- Ground truth label yn

- Prediction f (xn, w)

- The hinge loss:

- $L = \max(0,\, 1 - y_n f(\mathbf{x}_n, \mathbf{w}))$

- Take the output of the function, multiply it by the label (−1 or +1), subtract that from 1, and take the maximum of the result and 0.

- You always get 0 loss if the output of the function has the same sign as the label and a magnitude of at least 1.

- The value 1 in the function is a safeguard so that the loss does not kick in too late: it creates a "danger" area near the boundary that is penalised preventively.

- This loss wants a margin. Whatever the output, it should have the same sign as the label, and it should be confidently so, at least a margin away.

- We penalise misclassifications but also very close calls.

- We want to minimise $L = \sum_{n=1}^{N} \max(0,\, 1 - y_n f(\mathbf{x}_n, \mathbf{w}))$

- Find w such that:

- There are not too many errors

- There is a large margin between the hyperplane and the examples

- $\min_{\mathbf{w},\, b,\, \zeta} \frac{1}{2}\|\mathbf{w}\|^2 + C\sum_{i=1}^{n} \zeta_i$

- Subject to $y_i(\mathbf{w}^\top \mathbf{x}_i + b) \geq 1 - \zeta_i$ and $\zeta_i \geq 0\ \forall i$

- This optimises both margin maximisation and error minimisation. It is a highly effective linear classifier, but it has no simple closed-form solution.

- The hinge loss penalises samples that are misclassified or fall inside the margin, and the penalty grows linearly with how far the sample is on the wrong side of the margin. Ideally, a good prediction using an SVM occurs when the hinge loss is minimised, meaning that the SVM correctly separates the classes with a wide margin and few misclassifications.
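A sketch that evaluates (but does not optimise) the hinge loss and the soft-margin objective. It relies on the fact that, for fixed w and b, the smallest slack satisfying both constraints is ζᵢ = max(0, 1 − yᵢ(wᵀxᵢ + b)), i.e. exactly the hinge loss. Actually solving the problem needs quadratic programming or a similar solver, which is out of scope here.

```python
def hinge_loss(y, score):
    """max(0, 1 - y * f(x, w)) for a label y in {-1, +1} and a score f(x, w)."""
    return max(0.0, 1.0 - y * score)


def soft_margin_objective(w, b, xs, ys, C=1.0):
    """1/2 ||w||^2 + C * sum_i zeta_i, using the smallest feasible slack zeta_i = hinge loss."""
    total_slack = 0.0
    for x, y in zip(xs, ys):
        score = sum(wd * xd for wd, xd in zip(w, x)) + b  # f(x, w) = w^T x + b
        total_slack += hinge_loss(y, score)
    return 0.5 * sum(wd * wd for wd in w) + C * total_slack


print(hinge_loss(+1, 2.0))   # 0.0: correct side, outside the margin
print(hinge_loss(+1, 0.5))   # 0.5: correct side, but inside the margin
print(hinge_loss(+1, -1.0))  # 2.0: misclassified
```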

Final words on SVMs:

- Optimisation is complicated: dual forms, quadratic programming, etc.

- Non-linear extensions are possible: kernel SVMs

- SVMs used to be the go-to classifiers, but not anymore

Nearest Neighbours Classification:

- Definition: Label of test example is determined by the label of the nearest training example

- 1-nn decision boundaries:

- No explicit hyperplanes and decision boundaries

- Decision boundaries formed by Voronoi diagram of training set

- Decision rules for multiple neighbours (k-nn):

- The value of k can greatly influence the outcome

- General k-nn rule: majority vote

- k is a hyperparameter: it cannot be learned directly, so we have to set it

- What happens if we have a tie between classes?

- Choose randomly

- Use an odd k (this only rules out ties for two classes)

- Bias

- Average closest distance

- Pros and cons of k-nn:

- Pros:

- No training

- Complex decision boundaries

- Naturally handles both binary and multi-class problems

- Cons:

- Does not scale well (every prediction compares against all training examples)

- Sensitive to outliers (overfitting)
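A minimal k-nn classifier with majority voting and Euclidean distance. Of the tie-breaking options listed above, this sketch uses "average closest distance"; that choice, like the function name, is an assumption for illustration.

```python
import math
from collections import defaultdict


def knn_predict(train_x, train_y, query, k=3):
    """Majority vote over the k nearest training examples (Euclidean distance)."""
    by_distance = sorted(zip(train_x, train_y), key=lambda xy: math.dist(xy[0], query))
    votes = defaultdict(list)  # class -> distances of its neighbours among the k nearest
    for x, y in by_distance[:k]:
        votes[y].append(math.dist(x, query))
    most = max(len(d) for d in votes.values())
    tied = [y for y, d in votes.items() if len(d) == most]
    # Tie-break: the class whose neighbours are closest on average
    return min(tied, key=lambda y: sum(votes[y]) / len(votes[y]))


train_x = [(0, 0), (0, 1), (5, 5), (5, 6), (6, 5)]
train_y = ["a", "a", "b", "b", "b"]
print(knn_predict(train_x, train_y, (0.5, 0.5)))  # a
print(knn_predict(train_x, train_y, (5, 5.5)))    # b
```

Note that every prediction sorts the whole training set, which is why k-nn does not scale well.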

Nearest Class Mean Classifier (NCM):

- “Learning”: compute the mean of each class

- “Recognition/inference”: find the nearest class mean

- What is the relation to SVMs?

- Like an SVM, NCM has a linear decision boundary: for two classes, it is the hyperplane orthogonal to the line going through both class means (halfway between them).

- For a test example, find the nearest class mean and assign its class label.
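A sketch of the nearest class mean classifier; Euclidean distance and the function names are assumptions. In the example, the decision boundary is the midpoint x = 6 between the two class means.

```python
import math


def fit_ncm(xs, ys):
    """'Learning': compute the mean feature vector of each class."""
    sums, counts = {}, {}
    for x, y in zip(xs, ys):
        if y not in sums:
            sums[y], counts[y] = [0.0] * len(x), 0
        sums[y] = [s + xi for s, xi in zip(sums[y], x)]
        counts[y] += 1
    return {y: [s / counts[y] for s in sums[y]] for y in sums}


def predict_ncm(means, query):
    """'Inference': return the label of the nearest class mean."""
    return min(means, key=lambda y: math.dist(means[y], query))


means = fit_ncm([(0, 0), (2, 0), (10, 0), (12, 0)], ["a", "a", "b", "b"])
print(means)                       # {'a': [1.0, 0.0], 'b': [11.0, 0.0]}
print(predict_ncm(means, (5, 0)))  # a
```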

In between k-nn and NCM:

- Cluster features from each class

- For each example: class = label of nearest cluster

Benefits of decision tree:

- Explainability/interpretation

- Works with both discrete and continuous features

- Works with incompatible features

- Fast to train and evaluate

Intuition of decision trees:

- Main intuition:

- We want a “simple” tree

- We want each split to use a single feature and each node to be as “pure” as possible.

- “Pureness”:

- Mathematical term: entropy

- Subtree with a uniform class distribution: high entropy

- Pure subtree: no entropy (no need to split again)

- Entropy measures information (uncertainty)

Entropy for two classes:

- Notation:

- Let S denote all the training samples

- Let P denote the ratio of positives in S

- Let N denote the ratio of negatives in S

- Entropy formula: E(S) = −P log₂(P) − N log₂(N)

- Maximum value at equal class distribution

- Minimal value if only one class is left in S (“pure”)

In a decision tree, you always split on one feature at a time.

Multi-class Entropy:

- The entropy at a certain node:

- $E(S) = -P_1 \log_2(P_1) - P_2 \log_2(P_2) - \dots - P_n \log_2(P_n) = -\sum_{i=1}^{n} P_i \log_2(P_i)$

- Weighted average of entropies at a split A that produces the subsets $S_1, \dots, S_{T(A)}$:

$I(S, A) = \sum_{i=1}^{T(A)} \frac{|S_i|}{|S|} E(S_i)$

We can compute entropy, but how do we split?

- Which split is best?

- The one that decreases the entropy the most; this decrease is called the information gain.

- G(S, A) = E(S) − I(S, A)

- Higher information gain is better.

- For a binary split: G(S, A) = entropy before split − (proportion left × entropy left) − (proportion right × entropy right)
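The entropy and information gain formulas translate almost directly into code. This sketch represents a node as a plain list of class labels, which is an illustrative assumption.

```python
import math
from collections import Counter


def entropy(labels):
    """E(S) = -sum_i P_i log2(P_i) over the classes present in S."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(parent, subsets):
    """G(S, A) = E(S) - I(S, A), with I the size-weighted average entropy of the subsets."""
    n = len(parent)
    weighted = sum(len(s) / n * entropy(s) for s in subsets if s)
    return entropy(parent) - weighted


parent = ["+", "+", "-", "-"]
print(entropy(parent))                                     # 1.0: equal class distribution
print(information_gain(parent, [["+", "+"], ["-", "-"]]))  # 1.0: perfect split
print(information_gain(parent, [["+", "-"], ["+", "-"]]))  # 0.0: useless split
```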

Limitations of decision trees:

- With many examples and features, we obtain deep trees

- Perfect training separation, but poor generalisation (overfitting)

- Three solutions:

1. Stop growing the tree (suboptimal training fit)

2. Grow full tree, then prune (shorten) tree

3. Combine multiple trees

Decision tree splits have to be about one feature, so in 2D they use only vertical and horizontal lines (axis-aligned splits).

Random forest: combining multiple trees:

- Rather than training one tree perfectly, train multiple trees imperfectly.

- Train many trees, each on a random subset of features and/or examples

- Hyperparameters: K trees, D tree depth, R number of features per split

- Training

- Initialise an empty forest F

- For tree k = 1, …, K

- For depth d = 1, …, D

- Randomly select R features

- Split the node based on these features

- Add tree k to F

- Inference in random forest:

- Traverse each tree separately for a test example

- Average class scores over trees and select maximum average score

Bias variance tradeoff:

- We want 2 things: capture core aspects of training data and generalise to test data

- High bias means the model does not adapt to the data (underfitting); a more complex model has less bias

- High variance means overfitting; a more complex model has more variance

- We want low bias and low variance

- A decision tree typically has low bias and high variance

- A random forest typically has low bias and low variance

Random forest is a case of ensembling

Ensembling:

- Main idea: generate multiple models and combine them into one classifier

- Two considerations:

- How do we get multiple models?

- How do we aggregate the outputs of multiple models? How do we normalise everything so we can combine it?

- We make everything a probability

Bagging:

- Bootstrap sampling:

- For a training set D containing N examples, create a training subset D′ by drawing N examples at random with replacement from D

- Bagging:

- Create k bootstrap subsets D₁, …, Dₖ

- Train a classifier on each subset

- Classify a test example by majority vote over the subset classifiers
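A sketch of bootstrap sampling and bagging. The base classifier (1-nearest-neighbour on 1-D inputs) and the choice of k = 15 models are hypothetical stand-ins; any classifier could be plugged into `bagging`.

```python
import random
from collections import Counter


def bootstrap(data, rng):
    """Draw len(data) examples uniformly at random, *with replacement*."""
    return [rng.choice(data) for _ in range(len(data))]


def train_1nn(sample):
    """Hypothetical base classifier: 1-nearest-neighbour on 1-D inputs."""
    def predict(x):
        return min(sample, key=lambda xy: abs(xy[0] - x))[1]
    return predict


def bagging(data, k=15, seed=0):
    """Train one base classifier per bootstrap subset."""
    rng = random.Random(seed)
    return [train_1nn(bootstrap(data, rng)) for _ in range(k)]


def predict_bagged(models, x):
    """Majority vote over the subset classifiers."""
    return Counter(m(x) for m in models).most_common(1)[0][0]


data = [(0, "a"), (1, "a"), (2, "a"), (8, "b"), (9, "b"), (10, "b")]
models = bagging(data)
print(predict_bagged(models, 0.5), predict_bagged(models, 9.5))
```

Because sampling is with replacement, each bootstrap subset typically repeats some examples and misses others, which is what makes the individual models differ.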

Boosting:

- Within standard bagging and ensembling, models are trained separately

- Boosting makes the models work together, we train models to complement each other.

AdaBoost:

- Attach a weight to each training example

- Select a “weak classifier” with the lowest training error

- Increase weight of incorrectly classified examples

- Iteratively select the new best weak classifier with the lowest weighted error
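A minimal AdaBoost sketch. The notes do not fix the weak learner or the update formulas, so this assumes 1-D decision stumps, the standard vote weight α = ½ ln((1 − ε)/ε) and exponential re-weighting of the examples; labels are in {−1, +1}.

```python
import math


def stump_predict(x, threshold, polarity):
    return polarity if x >= threshold else -polarity


def best_stump(xs, ys, weights):
    """Weak classifier with the lowest *weighted* training error (1-D threshold, either direction)."""
    best = None
    for threshold in sorted(set(xs)):
        for polarity in (1, -1):
            err = sum(w for x, y, w in zip(xs, ys, weights)
                      if stump_predict(x, threshold, polarity) != y)
            if best is None or err < best[0]:
                best = (err, threshold, polarity)
    return best


def adaboost(xs, ys, rounds=3):
    n = len(xs)
    weights = [1.0 / n] * n                      # attach a weight to each training example
    ensemble = []
    for _ in range(rounds):
        err, thr, pol = best_stump(xs, ys, weights)
        if err >= 0.5:                           # no better than chance: stop
            break
        err = max(err, 1e-12)                    # avoid log(1/0) for a perfect stump
        alpha = 0.5 * math.log((1 - err) / err)  # vote weight of this weak classifier
        ensemble.append((alpha, thr, pol))
        # Increase the weight of misclassified examples, decrease the others, renormalise
        weights = [w * math.exp(-alpha * y * stump_predict(x, thr, pol))
                   for x, y, w in zip(xs, ys, weights)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble


def adaboost_predict(ensemble, x):
    score = sum(alpha * stump_predict(x, thr, pol) for alpha, thr, pol in ensemble)
    return 1 if score >= 0 else -1


xs = [1, 2, 3, 4, 5, 6]
ys = [1, 1, -1, -1, 1, 1]  # no single stump separates this
model = adaboost(xs, ys, rounds=3)
print([adaboost_predict(model, x) for x in xs])  # [1, 1, -1, -1, 1, 1]
```

Each round depends on the weights produced by the previous one, which is why boosting is trained sequentially.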

Properties of AdaBoost:

- AdaBoost asymptotically converges to the minimum possible exponential training loss, as long as the weak learners perform better than random guessing.

- Increased complexity due to more iterations and weak classifiers does not necessarily lead to overfitting

- Limitations of AdaBoost:

- What happens when the weak classifiers are strong?

- They reach zero training error, so there are no misclassified examples to up-weight and boosting gives no benefit

- What is an advantage of standard ensembling?

- Standard ensembles can be trained in parallel; AdaBoost is slow because its models are trained in sequence.

AML Week 3

Deep learning is regression or classification + feature learning, typically over many layers.

Deep learning: a family of parametric, non-linear and hierarchical representation learning functions, which are massively optimised with stochastic gradient descent to encode domain knowledge, i.e. domain invariances, stationarity.

- Each layer has its own parameters

The perceptron:

- Single layer perceptron for binary classification

- One weight wi per input xi

- Multiply each input with its weight, sum, and add the bias

- If the result is larger than the threshold, return 1; otherwise return 0

Training a perceptron:

- Learning algorithm:

- Initialise weights randomly

- Take one sample xᵢ and predict ŷᵢ

- For erroneous predictions update weights

- If prediction ŷ = 0 and ground truth yi = 1, increase weights

- If prediction ŷ = 1 and ground truth yi = 0, decrease weights

- Repeat until no errors are made
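The learning algorithm above, sketched with the classic perceptron update w ← w + lr·(y − ŷ)·x (so weights go up when ŷ = 0 but y = 1, and down when ŷ = 1 but y = 0). The threshold of 0 on "weighted sum plus bias", the learning rate and the seeded initialisation are assumptions.

```python
import random


def perceptron_predict(w, b, x):
    """Weighted sum plus bias; output 1 if it is above the threshold 0, else 0."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0


def train_perceptron(xs, ys, lr=1.0, max_epochs=1000, seed=0):
    rng = random.Random(seed)
    w = [rng.uniform(-0.5, 0.5) for _ in xs[0]]  # initialise weights randomly
    b = rng.uniform(-0.5, 0.5)
    for _ in range(max_epochs):
        errors = 0
        for x, y in zip(xs, ys):
            y_hat = perceptron_predict(w, b, x)
            if y_hat != y:                       # only erroneous predictions update
                errors += 1
                step = lr * (y - y_hat)          # +lr: increase weights, -lr: decrease weights
                w = [wi + step * xi for wi, xi in zip(w, x)]
                b += step
        if errors == 0:                          # repeat until no errors are made
            break
    return w, b


AND_X = [(0, 0), (0, 1), (1, 0), (1, 1)]
AND_Y = [0, 0, 0, 1]
w, b = train_perceptron(AND_X, AND_Y)
print([perceptron_predict(w, b, x) for x in AND_X])  # [0, 0, 0, 1]
```

With XOR labels [0, 1, 1, 0] the loop never reaches zero errors and only stops at `max_epochs`, because XOR is not linearly separable.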

Perceptrons turned out to only solve linearly separable problems.

Deep learning training goal and overview:

- We have a dataset of inputs and outputs

- Initialise all weights and biases with random values

- Learn weights and biases through “forward-backward” propagation:

- Forward step: map input to predicted output

- Loss step: compare predicted output to ground truth output

- Backward step: correct predictions by propagating gradients

- This refers to computing the gradients of the loss function with respect to the model's parameters (weights and biases), which are then used to update those parameters.

Forward propagation:

- In the basics, the same as the perceptron:

- Start from input, multiply with weights, sum, add bias

- Repeat for all following layers until you reach the end

- There is one main new element (next to multiple layers):

- Activation functions after each layer

Why have activation functions?

- Each hidden/output neuron is a linear sum

- A combination of linear functions is still a linear function

- Activation functions transform the output of each neuron non-linearly, which makes the network as a whole a non-linear function

Non-linear activations:

- ReLU function: ReLU(z) = max(0, z), i.e. return the input if it is greater than 0, otherwise return 0

- Sigmoid function

- Pros of sigmoid:

- Bounded (usefulness depends on application)

- Mathematically convenient (e.g. its derivative is σ(z)(1 − σ(z)))

- Pros of ReLU:

- Easy to implement

- Strong gradient signal

Forward pass conclusion:

- Going from input to output is a standard procedure

- Go from one layer to the next until you are at the output

- At each layer, weighted sum with activation function
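A pure-Python sketch of the forward pass: each layer computes a weighted sum plus bias per neuron and applies an activation. The hand-picked 2-2-1 weights are hypothetical; they are chosen so the network computes |x₁ − x₂|, a non-linear function that no purely linear model can represent.

```python
def relu(z):
    return max(0.0, z)


def identity(z):
    return z


def dense(x, W, b, activation):
    """One layer: for each neuron, weighted sum of the inputs plus bias, then the activation."""
    return [activation(sum(wij * xj for wij, xj in zip(row, x)) + bj) for row, bj in zip(W, b)]


def forward(x, layers):
    """Go from one layer to the next until the output is reached."""
    for W, b, activation in layers:
        x = dense(x, W, b, activation)
    return x


layers = [
    ([[1.0, -1.0], [-1.0, 1.0]], [0.0, 0.0], relu),  # hidden layer: relu(x1 - x2), relu(x2 - x1)
    ([[1.0, 1.0]], [0.0], identity),                  # output layer: sum of the two hidden units
]
print(forward([3.0, 1.0], layers), forward([1.0, 3.0], layers))  # [2.0] [2.0]
```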

Gradient Descent:

- Finding minima

- We can find the best value of w by examining the gradient of the loss J(w)

- At a minimum of the loss, the gradient is 0

- Back-propagation is how the gradients are computed when gradient descent is applied over many layers

- Weights are optimised when the loss is as low as possible

- The go-to method for optimisation in deep networks

- Start with w₀; for t = 1, …, T:

$\mathbf{w}_{t+1} = \mathbf{w}_t - \gamma \frac{d}{d\mathbf{w}} f(\mathbf{w}_t)$

- With γ a small value (the learning rate)

Training deep networks:

1. Move input through network to yield prediction

2. Compare prediction to ground truth

3. Back-propagate errors to all weights

The backward propagation algorithm:

- Back-propagation = gradient propagation over the whole network

- Propagate gradient obtained at output back to all neurons

- Propagation over layers is done with the chain rule

Forward-backward:

1. Initialise parameters with random values

2. Forward propagation

3. Calculate error at the output

4. Back-propagate error

5. Update weights

6. Repeat
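The forward-backward loop for a deliberately tiny network (one input, one ReLU hidden unit, one output, squared error loss; all of these are assumptions to keep the chain rule readable). The test compares each back-propagated gradient with a finite-difference estimate.

```python
def forward_backward(p, x, y):
    """Network x -> h = relu(w1*x + b1) -> y_hat = w2*h + b2, loss 1/2 (y_hat - y)^2.

    Returns the loss and the gradient of the loss with respect to every parameter.
    """
    # Forward propagation
    z = p["w1"] * x + p["b1"]
    h = max(0.0, z)
    y_hat = p["w2"] * h + p["b2"]
    loss = 0.5 * (y_hat - y) ** 2
    # Backward propagation: chain rule from the output back towards the input
    d_yhat = y_hat - y                   # dL/dy_hat
    d_h = d_yhat * p["w2"]               # dL/dh
    d_z = d_h * (1.0 if z > 0 else 0.0)  # ReLU passes gradient only where z > 0
    grads = {"w2": d_yhat * h, "b2": d_yhat, "w1": d_z * x, "b1": d_z}
    return loss, grads


def train(p, x, y, lr=0.05, steps=200):
    for _ in range(steps):
        _, grads = forward_backward(p, x, y)
        p = {k: v - lr * grads[k] for k, v in p.items()}  # update weights
    return p


params = {"w1": 0.5, "b1": 0.1, "w2": -0.3, "b2": 0.2}
print(forward_backward(params, 2.0, 1.0)[0], forward_backward(train(params, 2.0, 1.0), 2.0, 1.0)[0])
```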

Binary classification:

- Now we want the output to give a decision, by squashing it into the range between 0 and 1.

- We can do so using the sigmoid function

Binary cross-entropy loss:

- Let yi ∈ {0,1} denote the binary label of example i

- Let pᵢ ∈ [0, 1] denote the (sigmoid) output for example i

- Our goal: minimise pᵢ if yᵢ = 0, maximise it if yᵢ = 1

- Minimise: −(yᵢ log(pᵢ) + (1 − yᵢ) log(1 − pᵢ))

- This is equivalent to maximising: $p_i^{\,y_i}(1 - p_i)^{1 - y_i}$
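A sketch of the binary cross-entropy loss. The clipping constant `eps` is an implementation choice (not from the notes) that keeps `math.log` away from 0 when the network outputs exactly 0 or 1.

```python
import math


def binary_cross_entropy(y, p, eps=1e-12):
    """-(y log p + (1 - y) log(1 - p)) for a label y in {0, 1} and an output p in [0, 1]."""
    p = min(max(p, eps), 1.0 - eps)  # clip so that log(0) can never occur
    return -(y * math.log(p) + (1 - y) * math.log(1.0 - p))


print(binary_cross_entropy(1, 0.9), binary_cross_entropy(1, 0.1))  # confident and right vs. confident and wrong
```

Since exp(−loss) equals pᵢ^yᵢ (1 − pᵢ)^(1 − yᵢ), minimising this loss is the same as maximising the likelihood term above.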

Multi class classification:

- For k classes, give the network k output values

- Normalise the outputs using the softmax function:

$p(\mathbf{x})_i = \frac{\exp(x_i)}{\sum_{j=1}^{k} \exp(x_j)}$

- Minimise the following loss, where y is the one-hot label vector:

$-\sum_{j=1}^{k} y_j \log(p(\mathbf{x})_j)$
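Softmax and the multi-class cross-entropy loss in pure Python. Subtracting the maximum logit before exponentiating is a standard numerical-stability trick (an addition to the formula above) that leaves the result unchanged, since the common factor cancels in the ratio.

```python
import math


def softmax(logits):
    """p_i = exp(x_i) / sum_j exp(x_j), computed after subtracting max(x) to avoid overflow."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(one_hot, probs):
    """-sum_j y_j log(p_j); only the true class (y_j = 1) contributes."""
    return -sum(y * math.log(p) for y, p in zip(one_hot, probs) if y > 0)


probs = softmax([1.0, 2.0, 3.0])
print(probs, cross_entropy([0, 1, 0], probs))
```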

Hyper parameters:

- Deep networks come with many hyperparameters, and it is not always known which settings are best.

- Important hyper parameters to think about:

- Number of layers

- Number of parameters per layer

- Number of training iterations

- Learning rate for (stochastic) gradient descent

- Regularisation weight

Image recognition in the old days:

1. Extract local features

2. Aggregate features over images

3. Train classical models on aggregations

The convolution operator:

- Linear operation: f(x, w) = xᵀw

- Global operation, separate weight per feature

- Dimensionality of w = dimensionality of x

- 1 output value

- Convolutional operation: f(x, w) = x * w

- Local operation, shared weights over local regions

- Dimensionality of w much smaller than dimensionality of x

- Output (almost) the same size as the input

- A convolution applies the linear operator locally (par 1 × feature 1 + par 2 × feature 2 + … + par n × feature n) at every position

- 2D convolution:

- Input: image A of size W × H

- Filter: “image” B of size k × k (k is set by hand)

- Output: image C of size W × H (with padding at the border)

2D convolutions step by step:

- Input image 7 * 7

- Filter size 3 * 3

- Do the convolution by sliding the filter over all possible image locations

- Output is 5 * 5
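A sketch of the sliding-window computation with no padding, so a 7 × 7 image and a 3 × 3 filter give a 5 × 5 output. As in deep learning libraries, the filter is not flipped (strictly speaking this is a cross-correlation); images are plain lists of rows, an assumption for a stdlib-only example.

```python
def conv2d_valid(image, kernel):
    """Slide a k x k filter over every position where it fits entirely inside the image."""
    height, width = len(image), len(image[0])
    k = len(kernel)
    out = []
    for i in range(height - k + 1):
        row = []
        for j in range(width - k + 1):
            # Local linear operation: sum of filter weight x pixel over the k x k window
            row.append(sum(kernel[a][c] * image[i + a][j + c] for a in range(k) for c in range(k)))
        out.append(row)
    return out


image = [[r * 7 + c for c in range(7)] for r in range(7)]  # 7 x 7 input
centre = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]                 # 3 x 3 filter that copies the centre pixel
out = conv2d_valid(image, centre)
print(len(out), len(out[0]))                               # 5 5
```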

Dealing with the border:

- Main idea: increase the image size before convolutions

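- The total increase in each dimension is the filter size minus 1, i.e. (k − 1)/2 pixels on each side for a k × k filter (e.g. 1 pixel for a 3 × 3 filter).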

- This can be done the following ways:

- Zero padding <- most common

- Wrap around

- Copy

- Reflect
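A sketch of zero padding plus the stride-1 output-size formula n + 2p − k + 1, which shows why padding by (k − 1)/2 keeps the output the same size as the input. The helper names are illustrative.

```python
def zero_pad(image, p):
    """Surround an image (list of rows) with p rows/columns of zeros on every side."""
    width = len(image[0]) + 2 * p
    top = [[0] * width for _ in range(p)]
    middle = [[0] * p + list(row) + [0] * p for row in image]
    bottom = [[0] * width for _ in range(p)]
    return top + middle + bottom


def conv_output_size(n, k, p):
    """Output size of a stride-1 convolution of an n x n image with a k x k filter and padding p."""
    return n + 2 * p - k + 1


padded = zero_pad([[1] * 7 for _ in range(7)], p=1)
print(len(padded), len(padded[0]))                               # 9 9
print(conv_output_size(7, 3, p=0), conv_output_size(7, 3, p=1))  # 5 7
```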

Convolution examples:

Possible exam Question Convolutional layers:

Images with deep learning: convolutional networks

- Image as input, go through several layers of convolutional filters, predict label.

- We want to “learn” the filters that help us recognise classes

- The convolution layer introduces a third dimension, the channel dimension

- The idea is that an image goes in and we get an image of the same spatial size, but with a different number of channels, as output. Repeat over and over again.

- The key is that the convolution filter values become learnable parameters

The convolutional layer:

- One output value is the result of a convolution with an input area and a convolution filter

- The convolution is local in width and height, full (global) in depth

- Convolutional networks on images always have a third dimension

- Each filter yields a different output value for the same location

- We stack the outputs in the depth dimension of the new layer

- If we have 32 by 32 by 3:

- What is the 3? The RGB dimension (channel dimension)

- What is the output size for D filters (with padding)?

- 32 by 32 by D

- Does the output size depend on the input size or the filter size? With padding, only on the input size; otherwise on both.

- The outputs of a single filter over all spatial locations are called a filter map

- Each filter creates a “filtered” image of its own; these are then stacked to create the output image

Filters and layer dimensionality:

- First layer:

- Input 32 * 32 * 3 and A filters of 5 * 5 * 3

- Output is 32 * 32 * A

- Second layer:

- Input 32 * 32 * A and B filters of 5 * 5 * A

- Output is 32 * 32 * B

Pooling:

- All convolutional networks perform pooling

- Reduces size (downscaling)

- Reduces amount of computations

- Invariance to small deformations

- What are ways of pooling?

- Convolutions with stride

- Average pooling

- Max pooling <- most common

- Max pooling:

- Forward:

- Maintain highest activation

- Backward

- Gradient flow through highest activation
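A sketch of 2 × 2 max pooling (stride 2) with its backward pass: the forward pass keeps the highest activation of each window and remembers where it came from, and the backward pass sends each output gradient only to that position.

```python
def max_pool(x, size=2):
    """Non-overlapping max pooling. Returns the pooled map and where each maximum came from."""
    pooled, positions = [], []
    for i in range(0, len(x) - size + 1, size):
        pooled_row, pos_row = [], []
        for j in range(0, len(x[0]) - size + 1, size):
            window = [(x[a][c], (a, c)) for a in range(i, i + size) for c in range(j, j + size)]
            value, pos = max(window, key=lambda t: t[0])  # forward: keep the highest activation
            pooled_row.append(value)
            pos_row.append(pos)
        pooled.append(pooled_row)
        positions.append(pos_row)
    return pooled, positions


def max_pool_backward(grad_out, positions, in_height, in_width):
    """Backward: each output gradient flows only to the input position that held the maximum."""
    grad_in = [[0.0] * in_width for _ in range(in_height)]
    for grad_row, pos_row in zip(grad_out, positions):
        for g, (a, c) in zip(grad_row, pos_row):
            grad_in[a][c] += g
    return grad_in


x = [[1, 2, 5, 0],
     [3, 4, 1, 1],
     [0, 0, 2, 8],
     [7, 1, 3, 4]]
pooled, positions = max_pool(x)
print(pooled)  # [[4, 5], [7, 8]]
```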

The convolution network:

- Learn local filters at each layer

- Weights of local filters are shared over spatial locations

- Each convolutional layer outputs multiple filter maps

- Downsize filter maps through pooling

- Final layers either standard network layers or global pooling

We go from low to high semantics over the layers.

AML Week 4

How do we represent textual data?

- Word representation

- Categorical features

- One-hot encoding

- Bag of Words

- TF-IDF

- Tokenisation and stemming

Bag of Words representation:

- Sentence 1: “the cat sat on the hat”

- Sentence 2: “the dog ate the cat and the hat”

- Vocabulary: {and, ate, cat, dog, hat, on, sat, the}

- Sentence 1: {0, 0, 1, 0, 1, 1, 1, 2 }

- Sentence 2: {1, 1, 1, 1, 1, 0, 0, 3 }
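The bag-of-words vectors above can be reproduced with `collections.Counter`; splitting on whitespace is a simplifying assumption standing in for proper tokenisation.

```python
from collections import Counter


def bag_of_words(sentences):
    """Alphabetical vocabulary plus one word-count vector per sentence (word order is discarded)."""
    vocab = sorted({word for s in sentences for word in s.split()})
    vectors = []
    for s in sentences:
        counts = Counter(s.split())
        vectors.append([counts[word] for word in vocab])
    return vocab, vectors


vocab, vectors = bag_of_words(["the cat sat on the hat", "the dog ate the cat and the hat"])
print(vocab)    # ['and', 'ate', 'cat', 'dog', 'hat', 'on', 'sat', 'the']
print(vectors)  # [[0, 0, 1, 0, 1, 1, 1, 2], [1, 1, 1, 1, 1, 0, 0, 3]]
```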

Tokenising:

- Forming words from sequences of characters

- Surprisingly complex in English, can be harder in other languages

- “It not cool that” → tokenise → “It”, “not”, “cool”, “that”

- Tokenisation problems:

- Combinations of words can be important

- Both hyphenated and non-hyphenated forms of many words are common

- Numbers can be important, including decimals

- Periods can occur in numbers, abbreviations, URLs, ends of sentences and other situations

Stemming:

- Many morphological variations of words

- In many cases, these have the same or very similar meaning

- Stemmers attempt to reduce morphological variations of words to a common stem

- It is generally a small but significant effectiveness improvement

- Two basic types:

- Dictionary based: uses lists of related words

- Algorithmic: uses program to determine related words

Document Frequencies (DF):

- Challenge: quantify importance of words

- Make use of document frequency

- Terms that occur in few documents are more informative

- Terms that occur in many documents are less informative

Inverse Document Frequencies (IDF):

- How should we translate document frequencies into term weights?

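- A common way to do this is to take the logarithm of the inverse of the document frequency (IDF): idf(t) = log(N / df(t)), where N is the total number of documents and df(t) the number of documents containing term t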
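A minimal TF-IDF sketch: raw term counts weighted by idf(t) = log(N / df(t)). Whitespace tokenisation and the natural logarithm are assumptions (other log bases and TF variants are also common).

```python
import math
from collections import Counter


def tf_idf(documents):
    """Raw term counts weighted by idf(t) = log(N / df(t)), using the natural log."""
    tokenised = [doc.split() for doc in documents]
    n_docs = len(tokenised)
    df = Counter(term for tokens in tokenised for term in set(tokens))  # documents containing each term
    idf = {term: math.log(n_docs / count) for term, count in df.items()}
    return [{term: tf * idf[term] for term, tf in Counter(tokens).items()} for tokens in tokenised]


weights = tf_idf(["the cat sat", "the dog ate", "the cat ate"])
print(weights[0])  # "the" appears in every document, so its weight is 0
```

Terms that occur in few documents ("sat") get the highest weight, matching the intuition that they are more informative.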

Word similarity vector models:

- Sparse vector representations:

1. Mutual-information weighted word co-occurrence matrices

- Dense vector representations:

2. Singular value decomposition (and latent semantic analysis)

3. Neural network inspired models (skip-grams, CBOW)

- Prediction-based models (skip-gram, CBOW):

- Learn embeddings as part of the process of word prediction

- Advantages:

- Fast, easy to train

- Available online

- Including sets of pretrained embeddings

Compositionality:

- How can we represent documents?

1. Aggregate word vectors

2. Improve compositionality by tuning and aggregating word vectors

3. Improve compositionality by tuning word vectors and learning rules for composing passages

Architecture vs Embedding compositionality:

- Do we need compositionality to perform document level tasks?

- Create a document representation, then classify the representation with a simple architecture like a feed-forward network

- Consume word representations directly, with a more complex architecture that handles aggregating terms

Recurrent Neural Networks:

- Consume words one at a time, updating a vector h after each. After the last word, classify h using a feed-forward network (FFN)

CNN with text:

- Use a single CNN

- Filters “slide” over 1 dimension

Context-dependent language models:

- Bi-directional encoder representations from transformers (BERT)

- Based on Transformers

- State of the art performance across many tasks

Hyperparameters:

- Hyperparameters are parameters that cannot be directly learned or estimated from data, so they need to be set manually

The one illegal thing to do:

- Never use test set and labels to determine the best settings

Validation (a held-out validation set, not the test set):

- Take out a portion of training set to be used for evaluation

- Pros of such a setup:

- Fair way to determine desirable hyper-parameter values

- Cons of such a setup:

- Does not work well when the amount of data is limited

Cross-validation:

- Split the data into k portions (folds) and rotate the evaluation over different holdout folds, averaging the results
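A sketch of how k-fold cross-validation rotates the holdout set; it only produces index splits, and a real setup would usually shuffle the data first (omitted here so the folds are easy to read).

```python
def k_fold_splits(n, k):
    """Yield (train_indices, validation_indices) for k folds; each example is held out exactly once."""
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        validation = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n))
        yield train, validation
        start += size


for train, validation in k_fold_splits(10, 3):
    print(len(train), validation)
```

The hyperparameter setting with the best average validation score over the k folds is then chosen, without ever touching the test set.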

Validation vs Cross-Validation:

- Cross-validation is preferred when data is scarce and models are of low complexity

- Validation is preferred when data is sufficient and/or models are complex

Evaluating a machine learning system:

- Technical:

- How fast does it train?

- How fast is the prediction?

- ……..

- User happiness:

- UI design

- Cost

- …….

Relevance depends on the user

Classification accuracy:

- Accuracy = fraction of correct decisions

- When is accuracy a bad measure?

- When data is unbalanced

Recall and Precision:

- Recall: number of relevant items predicted as relevant / total number of relevant items in the collection

- Precision: number of relevant items predicted as relevant / total number of items predicted as relevant

- $\text{Recall} = \frac{TP}{TP + FN}$

- $\text{Precision} = \frac{TP}{TP + FP}$
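Precision and recall computed from true/false positive and negative counts; returning 0.0 when a denominator is zero is a convention chosen for this sketch.

```python
def precision_recall(y_true, y_pred):
    """Precision = TP / (TP + FP), recall = TP / (TP + FN) for binary labels (1 = relevant)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t and p)
    fp = sum(1 for t, p in zip(y_true, y_pred) if not t and p)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t and not p)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


print(precision_recall([1, 1, 1, 0, 0], [1, 0, 0, 1, 0]))  # precision 1/2, recall 1/3
```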

Average precision:

1. Sort results by score (high to low)

2. Start at the top-ranked example and move downwards

3. Every time you encounter a positive example (recall goes up), compute the precision at that location

4. Sum the precisions of all these encounters

5. Divide by the total number of relevant documents

$AP = \frac{\sum_{r=1}^{N} P(r)\,\mathrm{rel}(r)}{\text{number of relevant documents}}$

- Example: (1: True, 2: False, 3: True, 4: True, 5: False)

- 1: P(1) = 1/1, rel(1) = 1, so 1 × 1 = 1

- 2: rel(2) = 0, so ignore

- 3: P(3) = 2/3, rel(3) = 1, so 2/3 (2 = number of True cases so far, 3 = number of cases so far)

- 4: P(4) = 3/4, rel(4) = 1, so 3/4

- 5: rel(5) = 0, so ignore

- AP = (1 + 2/3 + 3/4) / 3 = 29/36 ≈ 0.81 (3 = number of relevant cases = number of True cases)
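The five steps above as a short function, assuming (as in the example) that every relevant document appears somewhere in the ranked list.

```python
def average_precision(ranked_relevance):
    """AP for a ranked list of booleans (True = relevant), best-scored result first."""
    hits, precision_sum = 0, 0.0
    for rank, relevant in enumerate(ranked_relevance, start=1):
        if relevant:                     # recall goes up: record the precision at this rank
            hits += 1
            precision_sum += hits / rank
    return precision_sum / hits if hits else 0.0


print(average_precision([True, False, True, True, False]))  # (1 + 2/3 + 3/4) / 3 = 29/36, about 0.806
```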

ROC curves:

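- The area under the curve (AUC) estimates the probability that a randomly chosen positive example is ranked above a randomly chosen negative one

- Using the previous example data (1: True, 2: False, 3: True, 4: True, 5: False):

- Go up when True, go right when False. The number of blocks under the line divided by the total number of blocks is your score: here 4 of the 3 × 2 = 6 blocks, so AUC = 4/6 ≈ 0.67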
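The "go up on True, go right on False" recipe as code: every step right adds a column whose height is the number of positives seen so far. The sketch assumes a strict ranking with no tied scores.

```python
def auc_from_ranking(ranked_labels):
    """Area under the ROC staircase for a ranked list of booleans (True = positive), best first."""
    height = area = 0
    for positive in ranked_labels:
        if positive:
            height += 1      # go up
        else:
            area += height   # go right: add a column of `height` blocks
    n_pos = sum(1 for p in ranked_labels if p)
    n_neg = len(ranked_labels) - n_pos
    return area / (n_pos * n_neg)  # blocks under the line / total blocks


print(auc_from_ranking([True, False, True, True, False]))  # 4 / 6, about 0.667
```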

AML Week 5

What is Reinforcement Learning (RL):

- Focus on sequential decision making problems

- Learn from sequential interactions with environment to optimise long term objective

- Key features:

- RL learns the best action to take by trial and error

- The reward can be delayed

- Might have to sacrifice immediate reward to gain more long term reward

Reinforcement Learning vs Supervised Learning:

- RL:

- Given the current input, you make a decision, and the next input depends on your decision

- Learn to do

- Supervised Learning:

- The decisions you make do not affect what you see in the future

- Learn to predict

Two steps to solve RL problems:

1. Formalisation:

- Conceptualise real-world problem into appropriate math

2. Finding a solution:

- Model learning goal

- Use the appropriate method to achieve this goal

Formalisation: Markov Decision Process

- A mathematical framework to model sequential decision making tasks

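- Assumption that a decision can be made based on only the current input (state)

- Sequential interaction flow: at each time step t, the agent observes the state Sₜ and takes an action Aₜ; the environment then returns a reward Rₜ₊₁ and the next state Sₜ₊₁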

Markov Decision Process (MDP):

- Formally, a finite MDP consists of:

- A finite set of states; S

- A finite set of actions for each state: A

- A dynamics function:

- p(s′, r | s, a) = Pr[Sₜ = s′, Rₜ = r | Sₜ₋₁ = s, Aₜ₋₁ = a]

- s′ = next state

- Sometimes written as a transition function, p(s′ | s, a) = probability of going to the new state s′

- And a reward function, p(r | s, a, s′) = probability of getting reward r when going to the new state s′

- A discount factor γ ∈ [0, 1], which discounts rewards further away in time: it gives more weight to rewards that come soon, so we care more about things near in time than about things further away.

Assumptions of MDP:

- Discrete time steps

- The next state depends only on the current state and action (Markov property)

- Rewards depend only on the current state, action and next state

Policy:

- Policy represents agent behaviour: in a given state, what action should be taken?

- It is a mapping from states to (probabilities over) actions:

- $\pi(a \mid s) = \Pr[A_t = a \mid S_t = s]$

- Conditioned on the state

- What is the optimal policy at each state?

- It depends on what you do in the future

- i.e. it is conditioned on the policy you follow in the future
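A stochastic policy π(a | s) can be stored as one probability distribution per state. The minimal sketch below assumes a hypothetical two-state, two-action problem and samples A_t ~ π(· | S_t) with Python's standard `random.choices`. A deterministic policy is the special case where one action has probability 1.

```python
import random

# Hypothetical stochastic policy pi(a | s); each row must sum to 1.
policy = {
    "low":  {"wait": 0.3, "work": 0.7},
    "high": {"wait": 0.5, "work": 0.5},
}

def sample_action(pi, state, rng=random):
    """Draw A_t ~ pi(. | S_t = state)."""
    actions = list(pi[state].keys())
    weights = list(pi[state].values())
    return rng.choices(actions, weights=weights, k=1)[0]

rng = random.Random(0)  # seeded generator for reproducible draws
print(sample_action(policy, "low", rng))
```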

Formally measure how good a policy is:

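- Value function $V_\pi(s)$:

- Expectation of the future sum of rewards

- In state s, what future reward can be expected when following π

- $V_\pi(s) = \mathbb{E}_\pi[G_t \mid S_t = s]$

- E = expectation

- $S_t$ = state at time t

- $G_t$ = discounted return, the sum of future rewards, using γ to weigh later rewards as less important: $G_t = R_{t+1} + \gamma R_{t+2} + \gamma^2 R_{t+3} + \dots = \sum_{k=0}^{\infty} \gamma^k R_{t+k+1}$; necessary for decision making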
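The discounted return of one finite episode can be computed backwards with the recursion G_t = R_{t+1} + γG_{t+1}. This is a minimal sketch; the reward list and γ values are made up.

```python
def discounted_return(rewards, gamma):
    """G_t = R_{t+1} + gamma*R_{t+2} + gamma^2*R_{t+3} + ...

    `rewards` lists R_{t+1}, R_{t+2}, ... for one finite episode.
    """
    g = 0.0
    for r in reversed(rewards):  # work backwards: G_t = R_{t+1} + gamma * G_{t+1}
        g = r + gamma * g
    return g

print(discounted_return([1.0, 0.0, 2.0], gamma=0.9))  # about 2.62 = 1 + 0.9*0 + 0.81*2
print(discounted_return([1.0, 0.0, 2.0], gamma=0.0))  # 1.0: only the immediate reward counts
```

With γ = 1 every reward counts equally. With γ = 0 the agent is fully short-sighted.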

Measure how good an action is in a state?

- Action-value function: the same as the value function, but conditioned on the action as well as the state: $Q_\pi(s, a) = \mathbb{E}_\pi[G_t \mid S_t = s, A_t = a]$
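The definitions of V_π and Q_π can be made concrete on a tiny, fully known MDP. The sketch below uses iterative policy evaluation, a standard technique not covered in these notes. It repeatedly applies V(s) ← Σ_a π(a|s) Σ_{s′,r} p(s′, r | s, a)[r + γV(s′)] until V stops changing. The deterministic two-state MDP, its rewards and the policy are all hypothetical.

```python
GAMMA = 0.9
# Hypothetical MDP: dynamics[(s, a)] maps (s', r) -> p(s', r | s, a).
dynamics = {
    ("A", "stay"): {("A", 0.0): 1.0},
    ("A", "go"):   {("B", 1.0): 1.0},
    ("B", "stay"): {("B", 2.0): 1.0},
    ("B", "go"):   {("A", 0.0): 1.0},
}
policy = {"A": {"go": 1.0}, "B": {"stay": 1.0}}  # pi(a | s)

def q_from_v(v, s, a):
    """Q(s, a) = sum over (s', r) of p(s', r | s, a) * (r + gamma * V(s'))."""
    return sum(p * (r + GAMMA * v[s2]) for (s2, r), p in dynamics[(s, a)].items())

def evaluate_policy(pi, tol=1e-10):
    """Iterate V(s) <- sum_a pi(a | s) * Q(s, a) until V stops changing."""
    v = {s: 0.0 for s in pi}
    while True:
        delta = 0.0
        for s in pi:
            new_v = sum(p_a * q_from_v(v, s, a) for a, p_a in pi[s].items())
            delta = max(delta, abs(new_v - v[s]))
            v[s] = new_v
        if delta < tol:
            return v

v = evaluate_policy(policy)
print(v)                        # close to {'A': 19.0, 'B': 20.0}
print(q_from_v(v, "A", "stay")) # close to 17.1: staying in A is worse than going to B
```

Here V(B) = 2/(1 − 0.9) = 20 and V(A) = 1 + 0.9·20 = 19. Comparing Q values across actions in the same state is what makes Q useful for choosing actions.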

How to get optimal policies?

Not all problems are MDPs:

- For example:

- Multi-armed bandit problems

- Partially observable problems

- Multi-agent problems

RL is not perfect:

- Long learning time: lots of data

- Uncertainty in practice: high variance

- Deep RL: sensitive to hyperparameter choice

Transformers:

- Based on one key idea: attention, connecting every element of the input to every other element (and to the output)

- The idea is that, given a sentence, every word is connected to every other word, and these connections are used to compute similarities

- Step 1) input embedding (think of it as a lookup table: word to vector)

- Step 2) self attention:

- Starts with 3 linear layers (query, key, value), applied in parallel to the same input

- The outputs of the first two (queries and keys) are multiplied together. The result is a set of scores giving the similarity between each pair of words: a pairwise similarity matrix

- Next we scale this matrix (divide by √d_k, where d_k is the key dimension); this helps training by keeping the softmax from saturating, which would give very small gradients

- Apply softmax, which normalises the similarities so that each row sums to 1

- Finally, multiply the softmax output with the output of the third linear layer (the values). This gives the output

- So self attention takes an input and creates new feature vectors for it. It does so by weighting the values with the normalised self-similarities

- Transformers let go of most inductive biases: they just learn by attending to the input itself

- The problem of word order is fixed with positional embeddings: every word has a feature vector, and we add some information to that feature vector saying where in the sequence the word is. This can be done by appending a few extra dimensions; the original Transformer instead adds a positional encoding of the same dimension element-wise.

- The choice of positional embedding can be seen as a hyperparameter

- Step 3) The same thing happens again, but with the output generated so far as input; a softmax over the vocabulary then gives the probability of each possible next word

- This creates sequence-to-sequence models.

- The key difference from recurrent networks is that at every step we use the entire input sequence and the entire output sequence generated so far to generate a new word.

- Generation stops when the end token appears in the output.

- In summary: Transformers are sequence-to-sequence models. Key to transformers is self-attention. They generate new words sequentially.
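Steps 1 and 2 (embedding lookup and scaled dot-product self-attention) can be sketched in NumPy as a single head with bias-free linear layers. The sketch also shows why positional information is needed. All sizes, the token ids and the random weights are hypothetical. The weights are scaled by 1/√d_model, a common initialisation choice. The positional encoding is the sinusoidal one from the original Transformer, added element-wise.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract row max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(X, W_q, W_k, W_v):
    """Single-head scaled dot-product self-attention.

    X: (n_words, d_model); W_q, W_k, W_v: (d_model, d_k).
    """
    Q, K, V = X @ W_q, X @ W_k, X @ W_v      # three linear layers on the same input
    scores = Q @ K.T / np.sqrt(K.shape[-1])  # pairwise similarity matrix, scaled
    weights = softmax(scores, axis=-1)       # normalise: each row sums to 1
    return weights @ V, weights              # weighted sum of the values

def sinusoidal_positions(n_positions, d):
    """Sinusoidal positional encodings (d even): sin on even dims, cos on odd dims."""
    pos = np.arange(n_positions)[:, None]
    two_i = np.arange(0, d, 2)[None, :]
    angles = pos / np.power(10000.0, two_i / d)
    pe = np.zeros((n_positions, d))
    pe[:, 0::2] = np.sin(angles)
    pe[:, 1::2] = np.cos(angles)
    return pe

rng = np.random.default_rng(0)
vocab_size, d_model, d_k = 10, 8, 5
embedding = rng.normal(size=(vocab_size, d_model))  # step 1: lookup table, word id -> vector
token_ids = np.array([3, 1, 4, 1])                  # hypothetical sentence; word 1 repeats
W_q, W_k, W_v = (rng.normal(size=(d_model, d_k)) / np.sqrt(d_model) for _ in range(3))

X = embedding[token_ids]
out, attn = self_attention(X, W_q, W_k, W_v)
print(out.shape, attn.shape)        # (4, 5) (4, 4)
print(np.allclose(out[1], out[3]))  # True: without positions, the repeated word is identical

X_pos = X + sinusoidal_positions(len(token_ids), d_model)  # add position information
out_pos, _ = self_attention(X_pos, W_q, W_k, W_v)
# With positions added, the two occurrences of word 1 now get different outputs.
```

The identical rows in the first output show that attention alone ignores word order. This is what positional embeddings fix.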

Transformers can be used for any input, as long as you turn the input into a “sentence” (a sequence of vectors)

Transformers put very few restrictions on how the network should learn.

- This is a problem when data is limited, but with a lot of data it becomes an advantage

Generative machine learning:

- So far, all of machine learning has been discriminative learning: given an input x, give me the probability of a label y, i.e. label y is conditioned on input x. For example, discriminate between classes given an input: P(y|x)

- In generative learning we model the distribution of the inputs instead of the labels of the inputs: P(x)

- So we model the distribution of the data itself
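For a tiny discrete dataset both quantities can be estimated by counting. The (x, y) pairs below are hypothetical. Real generative models learn P(x) over high-dimensional inputs such as images, where counting is impossible, but the contrast between P(y | x) and P(x) is the same.

```python
from collections import Counter

# Hypothetical toy dataset of (x, y) pairs: x = weather, y = label.
data = [("sunny", "play"), ("sunny", "play"), ("rainy", "stay"),
        ("rainy", "play"), ("sunny", "stay"), ("rainy", "stay")]

n = len(data)
count_x = Counter(x for x, _ in data)
count_xy = Counter(data)

def p_x(x):
    """Generative view: distribution of the inputs themselves, P(x)."""
    return count_x[x] / n

def p_y_given_x(y, x):
    """Discriminative view: label given input, P(y | x) = count(x, y) / count(x)."""
    return count_xy[(x, y)] / count_x[x]

print(p_x("sunny"))                  # 0.5
print(p_y_given_x("play", "sunny"))  # 2 of the 3 sunny examples are "play"
```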

ChatGPT is a sequence-to-sequence model + RL

Why is ChatGPT so convincing?

- It is a result of exposure to extreme scale

- Its network consists of billions of parameters

- It is a product of careful and elaborate hyperparameter tuning

- Reinforcement learning from human feedback (RLHF) creates output formats we like

- Ultimately ChatGPT has condensed the internet and gives it to us in a pleasing narrative style

Graph Machine Learning:

- Working on graph data:

- Real-world data often does not lie on structured grids, so we cannot train a standard deep network on this kind of data

- Graph convolutional networks:

- Main idea: pass messages between pairs of nodes and aggregate them

- A graph network is nothing more than a node representation network

- Input is nodes and edges, output is a feature vector per node. The feature vectors are

optimised based on their relations

- Prediction can then be done on the nodes, edges or the entire graph

- A graph layer does nothing more than take some node features and output transformed node features

- Doing this multiple times, with an activation in between, gives a graph network

- The idea of every graph layer is basically: I am going to learn my new representation by looking at the neighbourhood around me, i.e. all my connected nodes, and then update my representation accordingly. Then apply a ReLU and repeat, again and again, until I have my final output, on which I can compute my loss.

- In a graph, the convolutional layer is orderless: it does not depend on any ordering of the neighbours

The graph convolutional layer:

- The new representation of a node: transform your own old representation with a linear layer, transform each neighbour's representation with another linear layer, and sum over all your neighbours. So you get two weight matrices, one for yourself and one for your neighbours. Adding the two terms gives your new representation: $h_i' = W_{\text{self}} h_i + \sum_{j \in \mathcal{N}(i)} W_{\text{neigh}} h_j$

- Needs to be done for every node per graph layer

- With each layer you expand your scope, more layers = more connectivity

- Desirable properties:

- Weight sharing over all locations

- Invariant to permutations

- Linear complexity O(E) in the number of edges E

- Applicable both in transductive and inductive settings

- Limitations:

- Requires gating mechanism/residual connections for depth

- Only indirect support for edge features
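The graph convolutional layer rule can be sketched in NumPy as one dense layer followed by a ReLU. The weight names W_self and W_neigh, the example graph and the random features are assumptions for illustration. A dense adjacency matrix costs O(n²). The O(E) complexity listed above needs a sparse implementation.

```python
import numpy as np

def graph_layer(H, A, W_self, W_neigh):
    """One graph layer followed by a ReLU.

    H: (n_nodes, d_in) node features; A: (n_nodes, n_nodes) 0/1 adjacency matrix.
    New h_i = W_self h_i + sum over neighbours j of W_neigh h_j (row-vector convention).
    """
    self_part = H @ W_self              # transform each node's own features
    neigh_part = A @ (H @ W_neigh)      # transform neighbours, then sum them per node
    return np.maximum(self_part + neigh_part, 0.0)  # ReLU

rng = np.random.default_rng(0)
A = np.array([[0, 1, 1, 0],
              [1, 0, 1, 0],
              [1, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)  # small undirected example graph
H = rng.normal(size=(4, 3))
W_self, W_neigh = rng.normal(size=(3, 2)), rng.normal(size=(3, 2))
H1 = graph_layer(H, A, W_self, W_neigh)     # (4, 2): new features per node

# Relabelling the nodes only permutes the output rows (permutation property).
perm = np.array([2, 0, 3, 1])
P = np.eye(4)[perm]
H1_perm = graph_layer(P @ H, P @ A @ P.T, W_self, W_neigh)
print(np.allclose(H1_perm, P @ H1))  # True
```

The same two weight matrices are used for every node, which is the weight sharing listed above. Stacking this layer k times lets each node see its k-hop neighbourhood.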

Visualising node representations:

- Parameters initialised randomly

- 2-dimensional output per node

- Node representations align with connectivity

Node classification: classification on each node

- Graph network optimisation method

Graph classification: take all node features, sum them into a single graph vector, feed it into a linear layer, do classification, and backpropagate.

- Graph network optimisation method

Link prediction: take the feature vectors of node 1 and node 2 and compute their dot product; the higher the dot product, the more similar the nodes. Feed that into a linear layer.

- Graph network optimisation method
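The three prediction heads can be sketched on top of final node features from a graph network. Everything here is hypothetical: the random features and weights, the number of classes, and the scalar link weight and bias. Training and backpropagation are omitted.

```python
import numpy as np

rng = np.random.default_rng(1)
H = rng.normal(size=(5, 4))  # final node features from a graph network (dummy values)
n_classes = 3

# Node classification: the same linear layer applied to every node.
W_node = rng.normal(size=(4, n_classes))
node_logits = H @ W_node               # (5, 3): one prediction per node

# Graph classification: sum node features into one graph vector, then a linear layer.
W_graph = rng.normal(size=(4, n_classes))
graph_vector = H.sum(axis=0)           # (4,): does not depend on node order
graph_logits = graph_vector @ W_graph  # (3,): one prediction for the whole graph

# Link prediction: dot product of two node vectors, fed into a 1-D linear layer
# (hypothetical weight and bias) and a sigmoid to get an edge probability.
w_link, b_link = 1.0, 0.0

def link_probability(u, v):
    score = H[u] @ H[v]  # higher dot product = more similar nodes
    return 1.0 / (1.0 + np.exp(-(w_link * score + b_link)))

print(node_logits.shape, graph_logits.shape)  # (5, 3) (3,)
print(link_probability(0, 1))
```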

Take away messages for graph networks:

- Graphs have a very loose structure, only nodes and edge relations

- Graph layers learn node representation by passing messages

- On top we can infer labels for nodes, graphs and edges

Self Supervised Machine Learning helps us deal with scale

- It can learn representations from the data alone. No labels needed

From supervised to self-supervised learning:

- Without labels, we do not have a semantic prediction

- We can still learn meaningful features by making up our own classification task from the data itself
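One common way to make up a classification task from unlabelled images is rotation prediction. This pretext task is not named in these notes. Each image is rotated by 0, 90, 180 or 270 degrees, and the rotation index becomes the label. The sketch only builds the dataset, using NumPy's `rot90`; the images are random dummies.

```python
import numpy as np

def make_rotation_task(images):
    """Turn unlabelled images into (input, label) pairs with label = rotation index 0-3."""
    inputs, labels = [], []
    for img in images:
        for k in range(4):
            inputs.append(np.rot90(img, k))  # rotate by k * 90 degrees
            labels.append(k)
    return np.stack(inputs), np.array(labels)

unlabelled = np.random.default_rng(0).random((2, 8, 8))  # 2 dummy 8x8 "images"
X, y = make_rotation_task(unlabelled)
print(X.shape, y.tolist())  # (8, 8, 8) [0, 1, 2, 3, 0, 1, 2, 3]
```

A network trained to predict y from X has to learn something about image content (e.g. which way is up). Its features can then be reused for the real task.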

AML Week 6

Data Warehouse:

- Pros:

- Business Intelligence (BI)

- Analytics

- Structured & clean data

- Predefined schemas

- Cons:

- No support for semi or unstructured data

- Inflexible schemas

- Struggles with increases in data volume and velocity

- Long processing time

Data Lake:

- Unstructured storage “dump of data”

- Pros:

- Flexible data storage

- Streaming support

- Cost efficient in the cloud

- Supports unstructured, structured and semi-structured data

- Support for AI and Machine learning

- Cons:

- No transactional support

- Poor data reliability

- Slow analysis performance

- Data governance concerns

- Data warehouses still needed

The Data Lakehouse:

- Combination of data lake and data warehouse

- Data lake as foundation, unified data storage on top, unified security governance and

cataloging on top of that

Unify your data and governance for lower TCO (total cost of ownership), faster value

Democratise access to insights for all users

Develop generative AI applications on your data with full privacy

AML Practice Exam Notes

The importance of the interaction between the loss and the regulariser for binary SVMs:

- The loss function:

- The loss function measures the discrepancy between the predicted outputs of the model and the actual target labels. SVMs typically use the hinge loss, max(0, 1 − y·f(x)), which is zero only for points that are correctly classified with a margin of at least 1. It therefore penalises both misclassifications and points that lie inside the margin between the classes.

- Regularizer:

- The regularizer is a penalty term incorporated into the objective function to control the model's complexity and prevent overfitting. It helps in finding a balance between fitting the training data well and avoiding excessive reliance on noisy or irrelevant features. In SVMs, the regularization term is usually the squared L2 norm of the weight vector, ½‖w‖² (the same penalty as in ridge regression). Since the margin width is 2/‖w‖, minimizing ‖w‖ maximizes the margin.

- Importance of Interaction:

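- The interaction between the loss function and the regularizer in the SVM objective function is crucial for achieving good generalization and model performance. A common form is: minimize ½‖w‖² + C Σ_i max(0, 1 − y_i(w·x_i + b)).

- Balancing these two components determines how the model handles errors and complexity: a large C prioritizes low training error (risking overfitting), while a small C prioritizes a large margin and a simpler model (risking underfitting)

- i.e. balance optimising for low training error against model complexity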
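The soft-margin objective can be written and minimised in a few lines of NumPy. This is a minimal sketch. It uses plain full-batch subgradient descent (an assumption; real SVM solvers use other optimisers), and the learning rate and epoch count are arbitrary.

```python
import numpy as np

def svm_objective(w, b, X, y, C):
    """0.5*||w||^2 (regularizer) + C * sum of hinge losses (loss). Labels y in {-1, +1}."""
    hinge = np.maximum(0.0, 1.0 - y * (X @ w + b))
    return 0.5 * w @ w + C * hinge.sum()

def train_svm(X, y, C=1.0, lr=0.01, epochs=500):
    """Minimise the objective with full-batch subgradient descent."""
    w, b = np.zeros(X.shape[1]), 0.0
    for _ in range(epochs):
        active = y * (X @ w + b) < 1.0  # points with non-zero hinge loss
        grad_w = w - C * (y[active][:, None] * X[active]).sum(axis=0)
        grad_b = -C * y[active].sum()
        w -= lr * grad_w                # the regularizer pulls w towards 0,
        b -= lr * grad_b                # the loss pushes violating points out of the margin
    return w, b

# Tiny made-up, linearly separable dataset.
X = np.array([[2.0, 2.0], [3.0, 3.0], [-2.0, -2.0], [-3.0, -3.0]])
y = np.array([1.0, 1.0, -1.0, -1.0])
w, b = train_svm(X, y, C=1.0)
print(np.sign(X @ w + b))  # matches y
```

Re-running with a different C shifts the balance described above. A smaller C shrinks ‖w‖ more and tolerates more margin violations.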

Discuss one way to enable non-linear decisions when using SVM classifiers:

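- In SVM classifiers, enabling non-linear decisions can be achieved by using the "kernel trick." The kernel trick replaces every inner product x·z in the SVM with a kernel function k(x, z) = φ(x)·φ(z). This allows SVMs to implicitly operate in a higher-dimensional feature space without explicitly transforming the input features, thereby facilitating non-linear decision boundaries. Common choices are polynomial and RBF (Gaussian) kernels.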
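For the degree-2 polynomial kernel k(x, z) = (x·z)² on 2-D inputs, the implicit feature map can be written out explicitly. The sketch checks that the kernel gives the same inner product without ever computing φ. The input vectors are arbitrary.

```python
import numpy as np

def phi(x):
    """Explicit 3-D feature map for 2-D inputs, matching the kernel (x . z)^2."""
    x1, x2 = x
    return np.array([x1 * x1, np.sqrt(2) * x1 * x2, x2 * x2])

def poly_kernel(x, z):
    """Degree-2 homogeneous polynomial kernel: same inner product, no explicit phi."""
    return float(np.dot(x, z)) ** 2

x, z = np.array([1.0, 2.0]), np.array([3.0, -1.0])
print(poly_kernel(x, z), phi(x) @ phi(z))  # both equal 1 (up to rounding)
```

For kernels such as the RBF kernel the implicit feature space is infinite-dimensional, so computing k directly is the only practical option.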

Describe two required changes to transform a binary SVM classifier into a regressor:

- Objective Function Modification:

- Change in objective function: instead of separating the classes with a maximum margin, fit a function whose predictions stay close to the continuous target values (the ‖w‖² regularizer is typically kept).

- Change of loss function: replace the hinge loss, which is used in SVM classification, with a loss suitable for regression. Standard SVM regression (SVR) uses the ε-insensitive loss max(0, |y − f(x)| − ε), which ignores errors smaller than ε; the squared error (as in Mean Squared Error, MSE) is another option.

- Output Transformation:

- Transformation of outputs: in the binary SVM classifier, the outputs are discrete class labels (e.g., −1 or +1), obtained as sign(f(x)). In regression, the outputs are continuous.

- Output interpretation: in the SVM regressor, the real-valued decision function f(x) = w·x + b is used directly as the predicted value, instead of only its sign.
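The loss and output changes can be compared side by side in NumPy. The decision-function values, targets and ε = 0.1 below are made-up numbers.

```python
import numpy as np

def hinge_loss(y, f):
    """Classification (labels in {-1, +1}): penalise margins y*f(x) below 1."""
    return np.maximum(0.0, 1.0 - y * f)

def epsilon_insensitive_loss(y, f, eps=0.1):
    """SVR: errors smaller than eps cost nothing, larger errors grow linearly."""
    return np.maximum(0.0, np.abs(y - f) - eps)

def squared_loss(y, f):
    """Alternative regression loss (as in MSE)."""
    return (y - f) ** 2

f = np.array([0.95, 1.3, 2.0])  # raw decision-function outputs w.x + b
y = np.array([1.0, 1.0, 1.0])   # continuous targets

print(epsilon_insensitive_loss(y, f))  # approximately [0.  0.2 0.9]
class_pred = np.sign(f)                # classifier output: only the sign
reg_pred = f                           # regressor output: the value itself
```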

Explain the purpose of forward- and backward propagation with respect to learning the

parameters of the neural net:

- Forward:

- Purpose: Forward propagation passes the input data forward through the network's layers to generate predictions or outputs, from which the loss is computed.

- Backward:

- Purpose: Backward propagation, or backpropagation, applies the chain rule backwards through the layers to compute the gradient of the loss with respect to every parameter. An optimiser such as gradient descent then uses these gradients to update the parameters, reducing the error between predictions and actual targets.
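Forward pass, backward pass and one parameter update can be written out by hand for a tiny network. The sketch assumes one ReLU hidden layer, a mean-squared-error loss and random dummy data and weights. It is an illustration rather than a training recipe.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.normal(size=(8, 3))  # 8 dummy samples, 3 features
y = rng.normal(size=(8, 1))  # dummy regression targets
params = {"W1": rng.normal(size=(3, 4)), "b1": np.zeros(4),
          "W2": rng.normal(size=(4, 1)), "b2": np.zeros(1)}

def forward(p, X, y):
    """Forward pass: inputs -> ReLU hidden layer -> output -> mean squared error."""
    z1 = X @ p["W1"] + p["b1"]
    h = np.maximum(z1, 0.0)
    out = h @ p["W2"] + p["b2"]
    loss = np.mean((out - y) ** 2)
    return loss, (z1, h, out)

def backward(p, X, y, cache):
    """Backward pass: chain rule from the loss back to every parameter."""
    z1, h, out = cache
    d_out = 2.0 * (out - y) / y.size  # dL/d_out
    grads = {"W2": h.T @ d_out, "b2": d_out.sum(axis=0)}
    d_h = d_out @ p["W2"].T           # propagate into the hidden layer
    d_z1 = d_h * (z1 > 0)             # ReLU derivative
    grads["W1"] = X.T @ d_z1
    grads["b1"] = d_z1.sum(axis=0)
    return grads

lr = 1e-3
loss_before, cache = forward(params, X, y)
grads = backward(params, X, y, cache)
for name in params:                   # gradient-descent update using the gradients
    params[name] -= lr * grads[name]
loss_after, _ = forward(params, X, y)
print(loss_before, loss_after)        # the loss should drop for a small enough lr
```

Backpropagation only computes the gradients. The update loop at the end is a separate optimiser step.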

Explain and discuss the differences between a fully connected layer and a convolutional layer:

- Local vs. Global Connectivity:

- Fully connected layers have global connectivity, considering all neurons in the previous

layer, while convolutional layers have local connectivity, focusing on specific receptive

fields.

- Parameter Sharing:

- Convolutional layers use parameter sharing (the same filter weights are applied at every spatial position), leading to far fewer parameters than fully connected layers and making them more efficient, especially for high-dimensional inputs like images.

- Spatial Hierarchies:

- Convolutional layers exploit spatial hierarchies in data by learning local patterns and

hierarchical representations, making them highly effective for image-related tasks, where

spatial information is crucial.
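The parameter-sharing point can be quantified with a parameter count. The layer sizes are an assumed example: a 32×32 RGB input mapped to 16 feature maps of the same spatial size, once by a fully connected layer and once by a 3×3 convolution. Each conv filter covers only a 3×3 window but spans all input channels.

```python
def dense_params(n_in, n_out):
    """One weight per input-output pair, plus one bias per output."""
    return n_in * n_out + n_out

def conv_params(kernel_h, kernel_w, c_in, c_out):
    """Filter weights (shared over all positions) plus one bias per output channel."""
    return kernel_h * kernel_w * c_in * c_out + c_out

H, W, C_in, C_out = 32, 32, 3, 16
print(dense_params(H * W * C_in, H * W * C_out))  # 50348032
print(conv_params(3, 3, C_in, C_out))             # 448
```

The convolution's count does not depend on the image size at all. This is why it scales to large images.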

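When two vectors become more similar, their Euclidean distance becomes smaller while their dot product becomes bigger. For the dot product this only holds when the vector norms are fixed (e.g. unit-length vectors), because ‖a − b‖² = ‖a‖² + ‖b‖² − 2a·b.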
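A quick NumPy check of the identity linking the two measures, plus a made-up counterexample showing why fixed norms matter.

```python
import numpy as np

rng = np.random.default_rng(0)
a, b = rng.normal(size=5), rng.normal(size=5)

# Identity: ||a - b||^2 = ||a||^2 + ||b||^2 - 2 a.b
lhs = np.sum((a - b) ** 2)
rhs = a @ a + b @ b - 2 * (a @ b)
print(np.isclose(lhs, rhs))  # True

# For unit vectors this becomes 2 - 2 a.b: larger dot product, smaller distance.
# Without fixed norms the link breaks:
u = np.array([1.0, 0.0])
v = np.array([1.0, 0.0])   # identical to u
w = np.array([10.0, 0.0])  # same direction, much longer
print(u @ v, u @ w)                                  # 1.0 10.0
print(np.linalg.norm(u - v), np.linalg.norm(u - w))  # 0.0 9.0
```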

To combat overfitting you can:

- Add more data

- Add a regulariser

- Use a simpler model

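When minimising a function with gradient descent (and a proper learning rate), the objective value will decrease (or stay equal) after every iteration. When using stochastic gradient descent (SGD) this is not the case. Why?

- SGD only approximates the true gradient: it uses a noisy estimate computed from a single sample or a mini-batch, so an individual step can move in a direction that increases the full objective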
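The difference can be observed on a small least-squares problem. This is a minimal sketch on made-up linear-regression data. The learning rate and step count are assumptions, chosen so that full-batch gradient descent is stable. Both methods minimise the same mean squared error; GD uses the exact gradient over all samples, and SGD uses the gradient of a single random sample.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.normal(size=(100, 2))
y = X @ np.array([2.0, -1.0]) + 0.5 * rng.normal(size=100)  # noisy linear data

def loss(w):
    return np.mean((X @ w - y) ** 2)  # full objective over all data

def grad(w, idx):
    """Gradient of the mean squared error over the samples in idx."""
    Xb, yb = X[idx], y[idx]
    return 2.0 * Xb.T @ (Xb @ w - yb) / len(idx)

def run(batch_size, steps=50, lr=0.05):
    w, history = np.zeros(2), []
    for _ in range(steps):
        idx = rng.choice(len(y), size=batch_size, replace=False)  # all samples if 100
        w = w - lr * grad(w, idx)
        history.append(loss(w))
    return history

gd = run(batch_size=100)  # exact gradient: loss never goes up
sgd = run(batch_size=1)   # noisy gradient estimate: loss can go up on some steps
print(sum(b > a for a, b in zip(gd, gd[1:])), sum(b > a for a, b in zip(sgd, sgd[1:])))
```

The first count is 0. The second counts the SGD steps that increased the full objective.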

Precision and Recall:
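Precision and recall come from counts of true positives (TP), false positives (FP) and false negatives (FN): precision = TP / (TP + FP), recall = TP / (TP + FN). The minimal Python sketch below uses a made-up example. It returns 0.0 for an undefined 0/0 case, which is a convention rather than part of the definition.

```python
def precision_recall(y_true, y_pred):
    """Precision = TP / (TP + FP); recall = TP / (TP + FN). Labels are booleans."""
    tp = sum(t and p for t, p in zip(y_true, y_pred))
    fp = sum((not t) and p for t, p in zip(y_true, y_pred))
    fn = sum(t and (not p) for t, p in zip(y_true, y_pred))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

y_true = [True, True, True, False, False]
y_pred = [True, False, False, True, False]
print(precision_recall(y_true, y_pred))  # (0.5, 0.3333333333333333)
```

Precision asks how many predicted positives are correct. Recall asks how many actual positives were found.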

Bias and Variance:

Convolutional layers are:

- Global in depth (each filter spans all input channels)

- Local in width and height
