MPC Optimization for Double Pendulum Control


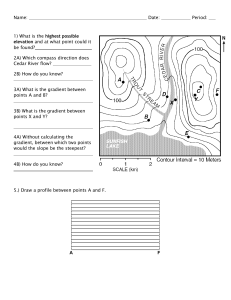

MR3038. EI-AD24-153. Embedded optimization techniques for multivariable control strategies improvement

"Implementation of linear discrete MPC for a Double Pendulum on a Cart"

By Victor Bobadilla Hernández - A01770261

Research advisors: Dr. Carlos Sotelo, Dr. David Alejandro Sotelo, Dr. Juan Antonio Algarín-Pinto

December 2, 2024

INDEX

1. Abstract
2. Modelling the plant
2.1 Kinematic model
2.2 Kinetic Energy
2.3 Potential energy
3. Nonlinear State Space
4. Simulation of dynamics
5. Validation of model
6. Linearization
7. Control
7.1 Controllability
7.2 LQR
7.3 MPC
8. Discrete Time MPC
9. Cost function
9.1 Continuous to discrete dynamics
9.2 Main control loop
9.3 LinMPC
9.4 HFQ
9.5 Selector Matrix PI
9.6 PSI
10. Gradient Descent function GD
11. Nesterov gradient descent
12. Momentum gradient descent
13. Results

1. Abstract

Model Predictive Control (MPC) is one of the most powerful control strategies available today. It works with linear and nonlinear systems, both MIMO and SISO, and above all it can enforce constraints on the control inputs, which makes it highly robust to disturbances and able to operate near the limits of a system. Because MPC predicts the behaviour of the plant instead of only reacting to it, it is also more robust to disturbances than purely reactive control methods. Its main drawback is the computational burden: an optimization problem must be solved at every time step, which makes MPC difficult to implement on less capable hardware or forces the model to be simplified. For plants whose cost functions are not strictly convex, the iterations needed to find the optimal solution may take too long for MPC to be applied to systems with fast-changing dynamics. Research in this area has produced many techniques, such as convex optimization, Newton's method, fast gradient methods and more. This paper shows an implementation of a linear, discrete, unconstrained MPC for a Double Inverted Pendulum on a Cart.
This system was chosen because the plant is nonlinear and chaotic, which makes it a good benchmark for our controller; if it is controlled successfully, the approach could be applied to more complex systems. The performance of the MPC controller is analysed against an LQR benchmark. The two are similar in that both optimize a cost function, although LQR solves a single optimization problem offline whereas MPC solves one at every time step. In this paper we analyse and compare 3 optimization techniques based on variations of gradient descent: normal Gradient Descent with adaptive step size, Momentum Gradient Descent and Nesterov's Accelerated Gradient. The motivation is that many plants have nonconvex cost functions, which may cause normal gradient descent to oscillate too much or get stuck in a local minimum. The hypothesis of this paper is that Nesterov's Accelerated Gradient and Momentum Gradient Descent can reduce the number of iterations needed for convergence.

2. Modelling the plant

We model the system dynamics using the Lagrangian to obtain the system's equations of motion. First, we model the kinematics of the system, characterizing the position of both pendulums and the cart, as shown in Figure 1. We assume that most of the mass is at the tips of the pendulum rods, so the centre of gravity of each pendulum is as far from its pivot as it can be. This makes the calculations easier, and in a real-life prototype a centre of gravity further from the pivot increases the moment of inertia. A greater moment of inertia means that the torque applied by gravity accelerates the pendulum less, so the control effort required to maintain equilibrium can be reduced.

2.1 Kinematic model

Figure 1.
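Kinematic model

$$C = x$$

$$p_{1x} = x + l_1\sin\theta_1, \qquad \dot{p}_{1x} = \dot{x} + l_1\dot{\theta}_1\cos\theta_1$$

$$p_{1y} = l_1\cos\theta_1, \qquad \dot{p}_{1y} = -l_1\dot{\theta}_1\sin\theta_1$$

$$p_{2x} = x + l_1\sin\theta_1 + l_2\sin\theta_2, \qquad \dot{p}_{2x} = \dot{x} + l_1\dot{\theta}_1\cos\theta_1 + l_2\dot{\theta}_2\cos\theta_2$$

$$p_{2y} = l_1\cos\theta_1 + l_2\cos\theta_2, \qquad \dot{p}_{2y} = -l_1\dot{\theta}_1\sin\theta_1 - l_2\dot{\theta}_2\sin\theta_2$$

We have now defined our system's kinematics. To derive the equations of motion we use the Lagrangian, since it is easier to derive the dynamics from energies than from forces.

$$L = T - U$$

Here $T$ and $U$ are the system's total kinetic and potential energy respectively. We find the kinetic and potential energies of the cart and both pendulums individually and then add them together.

2.2 Kinetic Energy

• Cart (with $V_C = \dot{x}$):

$$T_C = \frac{1}{2} m_C V_C^2$$

• Pendulum 1:

$$T_{P1} = \frac{1}{2} m_1 V_1^2, \qquad V_1 = \sqrt{\dot{p}_{1x}^2 + \dot{p}_{1y}^2}$$

$$\begin{aligned}
T_{P1} &= \frac{1}{2} m_1 \left(\dot{p}_{1x}^2 + \dot{p}_{1y}^2\right) \\
&= \frac{1}{2} m_1 \left[(\dot{x} + l_1\dot{\theta}_1\cos\theta_1)^2 + (-l_1\dot{\theta}_1\sin\theta_1)^2\right] \\
&= \frac{1}{2} m_1 \left[\dot{x}^2 + 2\dot{x} l_1\dot{\theta}_1\cos\theta_1 + l_1^2\dot{\theta}_1^2\cos^2\theta_1 + l_1^2\dot{\theta}_1^2\sin^2\theta_1\right] \\
&= \frac{1}{2} m_1 \left[\dot{x}^2 + 2\dot{x} l_1\dot{\theta}_1\cos\theta_1 + l_1^2\dot{\theta}_1^2(\cos^2\theta_1 + \sin^2\theta_1)\right] \\
&= \frac{1}{2} m_1 \left[\dot{x}^2 + 2\dot{x} l_1\dot{\theta}_1\cos\theta_1 + l_1^2\dot{\theta}_1^2\right]
\end{aligned}$$

• Pendulum 2:

$$T_{P2} = \frac{1}{2} m_2 V_2^2, \qquad V_2 = \sqrt{\dot{p}_{2x}^2 + \dot{p}_{2y}^2}$$

$$\begin{aligned}
T_{P2} &= \frac{1}{2} m_2 \left(\dot{p}_{2x}^2 + \dot{p}_{2y}^2\right) \\
&= \frac{1}{2} m_2 \left[(\dot{x} + l_1\dot{\theta}_1\cos\theta_1 + l_2\dot{\theta}_2\cos\theta_2)^2 + (-l_1\dot{\theta}_1\sin\theta_1 - l_2\dot{\theta}_2\sin\theta_2)^2\right] \\
&= \frac{1}{2} m_2 \left[\dot{x}^2 + l_1^2\dot{\theta}_1^2\cos^2\theta_1 + l_2^2\dot{\theta}_2^2\cos^2\theta_2 + 2(\dot{x} l_1\dot{\theta}_1\cos\theta_1 + \dot{x} l_2\dot{\theta}_2\cos\theta_2 + l_1\dot{\theta}_1\cos\theta_1\, l_2\dot{\theta}_2\cos\theta_2) \right. \\
&\qquad\quad \left. +\, l_1^2\dot{\theta}_1^2\sin^2\theta_1 + l_2^2\dot{\theta}_2^2\sin^2\theta_2 - 2 l_1 l_2\dot{\theta}_1\dot{\theta}_2\sin\theta_1\sin\theta_2\right] \\
&= \frac{1}{2} m_2 \left[\dot{x}^2 + l_1^2\dot{\theta}_1^2 + l_2^2\dot{\theta}_2^2 + 2 l_1 l_2\dot{\theta}_1\dot{\theta}_2(\cos\theta_1\cos\theta_2 - \sin\theta_1\sin\theta_2) + 2\dot{x}(l_1\dot{\theta}_1\cos\theta_1 + l_2\dot{\theta}_2\cos\theta_2)\right] \\
&= \frac{1}{2} m_2 \left[\dot{x}^2 + l_1^2\dot{\theta}_1^2 + l_2^2\dot{\theta}_2^2 + 2 l_1 l_2\dot{\theta}_1\dot{\theta}_2\cos(\theta_1 + \theta_2) + 2\dot{x}(l_1\dot{\theta}_1\cos\theta_1 + l_2\dot{\theta}_2\cos\theta_2)\right]
\end{aligned}$$

2.3 Potential energy

• Pendulum 1 & 2:

$$U_{P1} = m_1 g l_1\cos\theta_1$$

$$U_{P2} = m_2 g (l_1\cos\theta_1 + l_2\cos\theta_2)$$

$$U = U_{P1} + U_{P2} = m_1 g l_1\cos\theta_1 + m_2 g (l_1\cos\theta_1 + l_2\cos\theta_2)$$

The Lagrangian is then:

$$L = T - U = T_C + T_{P1} + T_{P2} - U_{P1} - U_{P2}$$

$$\begin{aligned}
L &= \frac{1}{2} m_C\dot{x}^2 + \frac{1}{2} m_1\left[\dot{x}^2 + 2\dot{x} l_1\dot{\theta}_1\cos\theta_1 + l_1^2\dot{\theta}_1^2\right] \\
&\quad + \frac{1}{2} m_2\left[\dot{x}^2 + l_1^2\dot{\theta}_1^2 + l_2^2\dot{\theta}_2^2 + 2 l_1 l_2\dot{\theta}_1\dot{\theta}_2\cos(\theta_1 + \theta_2) + 2\dot{x}(l_1\dot{\theta}_1\cos\theta_1 + l_2\dot{\theta}_2\cos\theta_2)\right] \\
&\quad - m_1 g l_1\cos\theta_1 - m_2 g (l_1\cos\theta_1 + l_2\cos\theta_2)
\end{aligned}$$

$$\begin{aligned}
L &= \frac{1}{2}(m_C + m_1 + m_2)\dot{x}^2 + m_1\dot{x} l_1\dot{\theta}_1\cos\theta_1 + \frac{1}{2} m_1 l_1^2\dot{\theta}_1^2 + \frac{1}{2} m_2 l_1^2\dot{\theta}_1^2 + \frac{1}{2} m_2 l_2^2\dot{\theta}_2^2 \\
&\quad + m_2 l_1 l_2\dot{\theta}_1\dot{\theta}_2\cos(\theta_1 + \theta_2) + m_2\dot{x} l_1\dot{\theta}_1\cos\theta_1 + m_2\dot{x} l_2\dot{\theta}_2\cos\theta_2 \\
&\quad - m_1 g l_1\cos\theta_1 - m_2 g (l_1\cos\theta_1 + l_2\cos\theta_2)
\end{aligned}$$

$$\begin{aligned}
L &= \frac{1}{2}(m_C + m_1 + m_2)\dot{x}^2 + m_1\dot{x} l_1\dot{\theta}_1\cos\theta_1 + \frac{1}{2}(m_1 + m_2) l_1^2\dot{\theta}_1^2 + \frac{1}{2} m_2 l_2^2\dot{\theta}_2^2 \\
&\quad + m_2 l_1 l_2\dot{\theta}_1\dot{\theta}_2\cos(\theta_1 + \theta_2) + m_2\dot{x} l_1\dot{\theta}_1\cos\theta_1 + m_2\dot{x} l_2\dot{\theta}_2\cos\theta_2 \\
&\quad - (m_1 + m_2) g l_1\cos\theta_1 - m_2 g l_2\cos\theta_2
\end{aligned}$$

To obtain the equations of motion, we first need the Euler-Lagrange equations, which require the partial derivatives of the Lagrangian with respect to the generalized coordinates and the generalized velocities. Our double pendulum has 3 degrees of freedom, since it can move along the $x$ axis and rotate in $\theta_1$ and $\theta_2$, therefore we define our generalized coordinates as:

$$q = \begin{bmatrix} x \\ \theta_1 \\ \theta_2 \end{bmatrix}$$

Therefore, our generalized velocities are:

$$\dot{q} = \begin{bmatrix} \dot{x} \\ \dot{\theta}_1 \\ \dot{\theta}_2 \end{bmatrix}$$

These definitions are useful because a good practice for defining state space coordinates is to choose variables that store energy. The potential energy of the system depends on the generalized coordinates (except for the $x$ position) and the kinetic energy depends on the generalized velocities, so $q$ and $\dot{q}$ will also define our state space.

In the Euler-Lagrange equation, $F_{gen}$ is the generalized force. It is 0 for the equations of motion of both pendulums, since we are not accounting for friction in this model. For the equation of motion of the cart, $F_{gen}$ is equal to $u$, our control input, which is the force that accelerates the cart.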
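$$\frac{d}{dt}\left(\frac{\partial L}{\partial \dot{q}}\right) - \frac{\partial L}{\partial q} = F_{gen}$$

• Cart:

$$\frac{\partial L}{\partial x} = 0$$

$$\frac{\partial L}{\partial \dot{x}} = (m_C + m_1 + m_2)\dot{x} + m_1 l_1\dot{\theta}_1\cos\theta_1 + m_2 l_1\dot{\theta}_1\cos\theta_1 + m_2 l_2\dot{\theta}_2\cos\theta_2$$

$$\begin{aligned}
\frac{d}{dt}\left(\frac{\partial L}{\partial \dot{x}}\right) &= (m_C + m_1 + m_2)\ddot{x} + (m_1 + m_2) l_1 \frac{d}{dt}\left(\dot{\theta}_1\cos\theta_1\right) + m_2 l_2 \frac{d}{dt}\left(\dot{\theta}_2\cos\theta_2\right) \\
&= (m_C + m_1 + m_2)\ddot{x} + (m_1 + m_2) l_1 (\ddot{\theta}_1\cos\theta_1 - \dot{\theta}_1^2\sin\theta_1) + m_2 l_2 (\ddot{\theta}_2\cos\theta_2 - \dot{\theta}_2^2\sin\theta_2)
\end{aligned}$$

$$\frac{d}{dt}\left(\frac{\partial L}{\partial \dot{x}}\right) - \frac{\partial L}{\partial x} = u$$

$$(m_C + m_1 + m_2)\ddot{x} + (m_1 + m_2) l_1\cos\theta_1\,\ddot{\theta}_1 + m_2 l_2\cos\theta_2\,\ddot{\theta}_2 = u + (m_1 + m_2) l_1\sin\theta_1\,\dot{\theta}_1^2 + m_2 l_2\sin\theta_2\,\dot{\theta}_2^2$$

• Pendulum 1:

$$\frac{\partial L}{\partial \theta_1} - \frac{d}{dt}\left(\frac{\partial L}{\partial \dot{\theta}_1}\right) = 0$$

$$\frac{\partial L}{\partial \theta_1} = -(m_1 + m_2) l_1\dot{x}\dot{\theta}_1\sin\theta_1 - m_2 l_1 l_2\dot{\theta}_1\dot{\theta}_2\sin(\theta_1 + \theta_2) + (m_1 + m_2) g l_1\sin\theta_1$$

$$\frac{\partial L}{\partial \dot{\theta}_1} = m_1 l_1 (\dot{x}\cos\theta_1) + (m_1 + m_2) l_1^2\dot{\theta}_1 + m_2 l_1 l_2\dot{\theta}_2\cos(\theta_1 + \theta_2) + m_2 l_1 (\dot{x}\cos\theta_1)$$

$$\frac{d}{dt}\left(\frac{\partial L}{\partial \dot{\theta}_1}\right) = (m_1 + m_2) l_1 (\ddot{x}\cos\theta_1 - \dot{x}\dot{\theta}_1\sin\theta_1) + (m_1 + m_2) l_1^2\ddot{\theta}_1 + m_2 l_1 l_2 \left(\ddot{\theta}_2\cos(\theta_1 + \theta_2) - \dot{\theta}_2\sin(\theta_1 + \theta_2)(\dot{\theta}_1 + \dot{\theta}_2)\right)$$

Substituting into the Euler-Lagrange equation:

$$\begin{aligned}
&-m_1 l_1\dot{x}\dot{\theta}_1\sin\theta_1 - m_2 l_1 l_2\dot{\theta}_1\dot{\theta}_2\sin(\theta_1 + \theta_2) - m_2 l_1\dot{x}\dot{\theta}_1\sin\theta_1 + (m_1 + m_2) g l_1\sin\theta_1 \\
&- (m_1 + m_2) l_1 (\ddot{x}\cos\theta_1 - \dot{x}\dot{\theta}_1\sin\theta_1) - (m_1 + m_2) l_1^2\ddot{\theta}_1 \\
&- m_2 l_1 l_2 \left(\ddot{\theta}_2\cos(\theta_1 + \theta_2) + \dot{\theta}_2\sin(\theta_1 + \theta_2)(\dot{\theta}_1 + \dot{\theta}_2)\right) = 0
\end{aligned}$$

$$\begin{aligned}
&-m_1 l_1\dot{x}\dot{\theta}_1\sin\theta_1 - m_2 l_1 l_2\dot{\theta}_1\dot{\theta}_2\sin(\theta_1 + \theta_2) - m_2 l_1\dot{x}\dot{\theta}_1\sin\theta_1 + (m_1 + m_2) g l_1\sin\theta_1 \\
&- (m_1 + m_2) l_1\ddot{x}\cos\theta_1 + (m_1 + m_2) l_1\dot{x}\dot{\theta}_1\sin\theta_1 - (m_1 + m_2) l_1^2\ddot{\theta}_1 - m_2 l_1 l_2\ddot{\theta}_2\cos(\theta_1 + \theta_2) \\
&- m_2 l_1 l_2\dot{\theta}_1\dot{\theta}_2\sin(\theta_1 + \theta_2) - m_2 l_1 l_2\dot{\theta}_2^2\sin(\theta_1 + \theta_2) = 0
\end{aligned}$$

$$\begin{aligned}
&(m_1 + m_2) l_1\cos\theta_1\,\ddot{x} + (m_1 + m_2) l_1^2\ddot{\theta}_1 + m_2 l_1 l_2\cos(\theta_1 + \theta_2)\,\ddot{\theta}_2 \\
&\quad = -m_2 l_1 l_2\dot{\theta}_1\dot{\theta}_2\sin(\theta_1 + \theta_2) - m_2 l_1 l_2\dot{\theta}_2^2\sin(\theta_1 + \theta_2) + (m_1 + m_2) l_1\dot{x}\dot{\theta}_1\sin\theta_1 + (m_1 + m_2) g l_1\sin\theta_1 \\
&\qquad - m_1 l_1\dot{x}\dot{\theta}_1\sin\theta_1 - m_2 l_1 l_2\dot{\theta}_1\dot{\theta}_2\sin(\theta_1 + \theta_2) - m_2 l_1\dot{x}\dot{\theta}_1\sin\theta_1
\end{aligned}$$

$$(m_1 + m_2) l_1\cos\theta_1\,\ddot{x} + (m_1 + m_2) l_1^2\ddot{\theta}_1 + m_2 l_1 l_2\cos(\theta_1 + \theta_2)\,\ddot{\theta}_2 = -2 m_2 l_1 l_2\dot{\theta}_1\dot{\theta}_2\sin(\theta_1 + \theta_2) - m_2 l_1 l_2\dot{\theta}_2^2\sin(\theta_1 + \theta_2) + (m_1 + m_2) g l_1\sin\theta_1$$

• Pendulum 2:

$$\frac{\partial L}{\partial \theta_2} - \frac{d}{dt}\left(\frac{\partial L}{\partial \dot{\theta}_2}\right) = 0$$

$$\frac{\partial L}{\partial \theta_2} = -m_2 l_1 l_2\dot{\theta}_1\dot{\theta}_2\sin(\theta_1 + \theta_2) - m_2\dot{x} l_2\dot{\theta}_2\sin\theta_2 + m_2 g l_2\sin\theta_2$$

$$\frac{\partial L}{\partial \dot{\theta}_2} = m_2 l_2^2\dot{\theta}_2 + m_2 l_1 l_2 \left(\dot{\theta}_1\cos(\theta_1 + \theta_2)\right) + m_2 l_2\dot{x}\cos\theta_2$$

$$\frac{d}{dt}\left(\frac{\partial L}{\partial \dot{\theta}_2}\right) = m_2 l_2^2\ddot{\theta}_2 + m_2 l_1 l_2 \left(\ddot{\theta}_1\cos(\theta_1 + \theta_2) - \dot{\theta}_1\sin(\theta_1 + \theta_2)(\dot{\theta}_1 + \dot{\theta}_2)\right) + m_2 l_2 (\ddot{x}\cos\theta_2 - \dot{x}\dot{\theta}_2\sin\theta_2)$$

$$\begin{aligned}
&-m_2 l_1 l_2\dot{\theta}_1\dot{\theta}_2\sin(\theta_1 + \theta_2) - m_2\dot{x} l_2\dot{\theta}_2\sin\theta_2 + m_2 g l_2\sin\theta_2 - m_2 l_2^2\ddot{\theta}_2 \\
&- m_2 l_1 l_2 \left(\ddot{\theta}_1\cos(\theta_1 + \theta_2) - \dot{\theta}_1\sin(\theta_1 + \theta_2)(\dot{\theta}_1 + \dot{\theta}_2)\right) - m_2 l_2 (\ddot{x}\cos\theta_2 - \dot{x}\dot{\theta}_2\sin\theta_2) = 0
\end{aligned}$$

3. Nonlinear State Space

Taking the equations of motion, we can write the nonlinear state space of the system in matrix form, as shown below.

$$\begin{bmatrix} a_1 & a_2 & a_3 \\ b_1 & b_2 & b_3 \\ d_1 & d_2 & d_3 \end{bmatrix} \begin{bmatrix} \ddot{x} \\ \ddot{\theta}_1 \\ \ddot{\theta}_2 \end{bmatrix} = \begin{bmatrix} a_4\dot{\theta}_1^2 + a_5\dot{\theta}_2^2 \\ -b_4\dot{\theta}_2^2 \\ d_4\dot{\theta}_1^2 \end{bmatrix} + \begin{bmatrix} 0 \\ -b_5 \\ -d_5 \end{bmatrix} + \begin{bmatrix} 1 \\ 0 \\ 0 \end{bmatrix} u$$

$$M \begin{bmatrix} \ddot{x} \\ \ddot{\theta}_1 \\ \ddot{\theta}_2 \end{bmatrix} = \begin{bmatrix} f_1 \\ f_2 \\ f_3 \end{bmatrix} + \begin{bmatrix} 1 \\ 0 \\ 0 \end{bmatrix} u$$

Table 1. Nonlinear Matrix Coefficients.

| a coefficients | b coefficients | d coefficients |
| --- | --- | --- |
| $a_1 = m_1 + m_2 + m_C$ | $b_1 = (m_1 + m_2) l_1\cos\theta_1$ | $d_1 = m_2 l_2\cos\theta_2$ |
| $a_2 = (m_1 + m_2) l_1\cos\theta_1$ | $b_2 = (m_1 + m_2) l_1^2$ | $d_2 = m_2 l_1 l_2\cos(\theta_2 + \theta_1)$ |
| $a_3 = m_2 l_2\cos\theta_2$ | $b_3 = m_2 l_1 l_2\cos(\theta_2 + \theta_1)$ | $d_3 = m_2 l_2^2$ |
| $a_4 = (m_1 + m_2) l_1\sin\theta_1$ | $b_4 = m_2 l_1 l_2\sin(\theta_2 + \theta_1)$ | $d_4 = m_2 l_1 l_2\sin(\theta_2 + \theta_1)$ |
| $a_5 = m_2 l_2\sin\theta_2$ | $b_5 = (m_1 + m_2) g l_1\sin\theta_1$ | $d_5 = m_2 g l_2\sin\theta_2$ |

4. Simulation of dynamics

We define our system's dynamics in a function, as in Figure 2. We introduce damping proportional to the velocities of the cart and pendulums. This function returns the matrices $M$ and $f(X, u)$; to obtain the accelerations we multiply $M^{-1}$ by the right-hand side vector $F$. $M$ is the symmetric mass matrix of the system; a physical mass matrix is positive definite and therefore invertible.

$$\begin{bmatrix} \ddot{x} \\ \ddot{\theta}_1 \\ \ddot{\theta}_2 \end{bmatrix} = M^{-1} F$$

Figure 2. Pendulum Dynamics code

To numerically integrate our dynamics, we use a 4th-order Runge-Kutta method to reduce the number of steps and avoid the numerical errors that Simpson's rule or Riemann sums tend to generate, as shown in Figure 3.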
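As a rough illustration of the integration scheme, the following Python sketch applies a classical RK4 step to a frictionless simple pendulum and checks that the total energy barely drifts, which is the same idea used to validate the model. This is not the MATLAB function of Figure 3: the function names (`rk4_step`, `pendulum`, `energy`, `simulate`), the simple-pendulum plant and all numerical values are assumptions chosen only to keep the example short.

```python
import math

def rk4_step(f, x, u, dt):
    """Advance x' = f(x, u) by one classical RK4 step, holding u constant."""
    def shifted(k, h):
        return [xi + h * ki for xi, ki in zip(x, k)]
    k1 = f(x, u)
    k2 = f(shifted(k1, 0.5 * dt), u)
    k3 = f(shifted(k2, 0.5 * dt), u)
    k4 = f(shifted(k3, dt), u)
    return [xi + dt / 6.0 * (a + 2.0 * b + 2.0 * c + d)
            for xi, a, b, c, d in zip(x, k1, k2, k3, k4)]

def pendulum(x, u, g=9.81, length=1.0):
    """Hypothetical plant: frictionless pendulum, theta measured from hanging."""
    theta, omega = x
    return [omega, -(g / length) * math.sin(theta) + u]

def energy(x, g=9.81, length=1.0):
    """Kinetic plus potential energy per unit mass."""
    theta, omega = x
    return 0.5 * (length * omega) ** 2 - g * length * math.cos(theta)

def simulate(x0, dt=1e-3, steps=5000):
    """Integrate the uncontrolled pendulum (u = 0) for steps * dt seconds."""
    x = list(x0)
    for _ in range(steps):
        x = rk4_step(pendulum, x, 0.0, dt)
    return x

x0 = [1.0, 0.0]                      # 1 rad from hanging, at rest
x_end = simulate(x0)
drift = abs(energy(x_end) - energy(x0))
print(f"energy drift after 5 s: {drift:.2e}")
```

With no input and no damping, the printed drift should stay very small; a large drift would point to a modelling or integration error.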
Figure 3. Nonlinear dynamics RK4 integrator function

5. Validation of model

To validate the model dynamics, we simulate the pendulum with no control inputs and no energy dissipation. If the sum of the potential and kinetic energies stays constant, the dynamics are physically consistent, as shown in Figure 4.

Figure 4. Kinetic and Potential Energy of the system.

The total energy remains constant, which is a good indication. Another way to check that the model is valid is to use different initial conditions that are close to one another. Since a double pendulum is a chaotic system, small changes to the initial conditions yield very different trajectories. We can show this with a phase diagram of the system: since the state space has 6 variables, we pair each generalized coordinate with its generalized velocity. We then program different initial conditions and plot them. In Figure 5 we plot 3 initial conditions that differ by $10^{-8}$ degrees from each other. As we can see, the trajectories diverge enough to be noticeable.

Figure 5. Phase diagram of state pairs

6. Linearization

Our system is highly nonlinear, and our controllers will be a linear MPC and an LQR, therefore we need to linearize the dynamics around an operating point. To do this we first analyse the equilibrium configurations of the system.

Table 2. Equilibrium configurations of the Double Pendulum

| Equilibrium configuration | Pendulum 1 | Pendulum 2 | Stability |
| --- | --- | --- | --- |
| 1 | Down | Down | Stable |
| 2 | Down | Up | Unstable |
| 3 | Up | Down | Unstable |
| 4 | Up | Up | Unstable |

We will focus on equilibrium configuration 4, but in practice we can control the system around any of the 4 configurations, provided the state stays close to the operating point. To linearize, we compute the Jacobian matrix of our nonlinear function.
$$\dot{X} = f(X, u)$$

Let us remember that the Jacobian tells us the sensitivity of a system of functions to "nudges" in its variables. Computing it amounts to taking the gradient of each of these functions at an operating point.

Figure 6. Block diagram Multifunction plant

$$J = \begin{bmatrix} \nabla f_1 \\ \vdots \\ \nabla f_m \end{bmatrix} = \begin{bmatrix} \dfrac{\partial f_1}{\partial z_1} & \cdots & \dfrac{\partial f_1}{\partial z_n} \\ \vdots & \ddots & \vdots \\ \dfrac{\partial f_m}{\partial z_1} & \cdots & \dfrac{\partial f_m}{\partial z_n} \end{bmatrix}$$

Figure 7. Single variable visualization of the Jacobian

Once we compute the Jacobian matrix, we evaluate it at an operating point $Z_0$.

$$\frac{\Delta f}{\Delta Z} = J(Z_0)$$

We can define the Jacobian as the change in $f$ with respect to the change in the state vector $Z$, then solve for $F$ to obtain the linearized function around the operating point.

$$\frac{F - F_0}{Z - Z_0} = J(Z_0)$$

$$F = F_0 + J(Z_0)\,\Delta Z$$

We do the same for our system, since we need the state space representation to be in the form:

$$\dot{X} = AX + Bu$$

We take the Jacobian with respect to the state vector and with respect to the control input. These are our $A$ and $B$ matrices respectively. Since this is a linearization, the state space takes the form $\dot{X} = A\Delta X + B\Delta u$, where $\Delta X$ is the difference between the state vector and its value at the equilibrium position, and likewise for $\Delta u$. Therefore:

$$A = \frac{\partial F}{\partial X}, \qquad B = \frac{\partial F}{\partial u}$$

$$\dot{X} = A(X - X^*) + B(u - u^*)$$

Here $X^*$ and $u^*$ are the operating point of the linearization, and $X$ is the updated state vector. Using MATLAB's symbolic library to calculate the Jacobian with respect to the state vector and the control input, as shown in Figure 8, we obtain the linearized state transition matrix and control input matrix.

Figure 8. Linearization Function

7. Control

7.1 Controllability

A system is controllable if we can drive its state to anywhere in state space in a finite amount of time. A simple way to evaluate this is to compute the controllability matrix:

$$C = \begin{bmatrix} B & AB & A^2B & \dots & A^{n-1}B \end{bmatrix}$$

Here $n$ is the number of columns of the state transition matrix $A$. If the matrix $C$ has full rank (rank $n$), the system is controllable.
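The rank test can be sketched in a few lines of Python using only the standard library. This is an illustration, not the MATLAB `ctrb` call used in the paper: the helper names (`matmul`, `controllability_matrix`, `rank`) are hypothetical, and the two small example systems (a double integrator and a decoupled two-state system) are assumptions chosen because their answers are easy to check by hand.

```python
def matmul(a, b):
    """Plain nested-list matrix product."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def controllability_matrix(A, B):
    """Build [B, AB, A^2 B, ..., A^(n-1) B] by horizontal concatenation."""
    n = len(A)
    blocks, block = [], B
    for _ in range(n):
        blocks.append(block)
        block = matmul(A, block)
    return [sum((blk[i] for blk in blocks), []) for i in range(n)]

def rank(M, tol=1e-9):
    """Rank by Gaussian elimination with partial pivoting."""
    M = [row[:] for row in M]
    rows, cols, r = len(M), len(M[0]), 0
    for c in range(cols):
        pivot = max(range(r, rows), key=lambda i: abs(M[i][c]))
        if abs(M[pivot][c]) < tol:
            continue                      # no usable pivot in this column
        M[r], M[pivot] = M[pivot], M[r]
        for i in range(r + 1, rows):
            factor = M[i][c] / M[r][c]
            M[i] = [a - factor * b for a, b in zip(M[i], M[r])]
        r += 1
        if r == rows:
            break
    return r

# Double integrator (position, velocity) driven by a force: controllable.
A1, B1 = [[0.0, 1.0], [0.0, 0.0]], [[0.0], [1.0]]
# Two decoupled states where the input reaches only the first: not controllable.
A2, B2 = [[1.0, 0.0], [0.0, 2.0]], [[1.0], [0.0]]

for A, B in ((A1, B1), (A2, B2)):
    C = controllability_matrix(A, B)
    print(C, "rank", rank(C), "of", len(A))
```

The system is controllable when the reported rank equals the number of states, which is the check applied to the linearized pendulum.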
Using MATLAB's ctrb(A, B) function and computing the rank of the result shows that the system is indeed controllable, as shown in Figure 9.

Figure 9. Controllability of System

7.2 LQR

To evaluate the MPC's performance, an LQR controller is implemented as a benchmark. Since the system has already been linearized, we can solve the Algebraic Riccati Equation and obtain the full state feedback gain K. As we can see from Figure 10, the LQR control drives all states to 0.

Figure 10. LQR state performance

7.3 MPC

Basic structure: Model Predictive Control uses a model of the plant to predict the evolution of the system and computes the optimal control by solving an optimization problem at each time step. In this paper we focus on a linear discrete MPC, whose structure is shown in Figure 11.

Figure 11. Linear MPC block diagram

8. Discrete Time MPC

We use a Zero Order Hold to convert the linear dynamics from continuous to discrete time, as shown in Figure 12.

Figure 12. Continuous to Discrete zero order hold

In practice we also need to discretize the $A$ and $B$ matrices. Since the system is linear, the solution has the form:

$$X(t) = X^* + e^{A(t - t_0)} X(t_0) + \int_{t_0}^{t} e^{A(t - \tau)} B u(\tau)\, d\tau$$

With this expression we can discretize the dynamics:

$$A_d = e^{A(t - t_0)}, \qquad B_d\, u = \int_{t_0}^{t} e^{A(t - \tau)} B u(\tau)\, d\tau$$

Since the control input $u$ is constant over the interval $t_0 < t < t_s$, it can be taken out of the integral, and the discrete control input matrix is:

$$B_d = \left(\int_{t_0}^{t} e^{A(t - \tau)}\, d\tau\right) B$$

Recall that, since this is a linearization, we use $\Delta X_k$ and not the state vector itself. The operating point of the control input is 0, because we want the control input to be as close to 0 as possible when the pendulum is upright, so $\Delta u_k = u_k$. Since the MPC methodology requires predictions, we can express them as follows:

$$X_{k+1} = X^* + A_d \Delta X_k + B_d u_k$$

$$X_{k+2} = X^* + A_d \Delta X_{k+1} + B_d u_{k+1}$$

$$X_{k+3} = X^* + A_d \Delta X_{k+2} + B_d u_{k+2}$$

We do this from $k$ up to $k + N$, where $N$ is the prediction horizon.
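The discretization and the step-by-step prediction can be tried on a toy system. The Python sketch below is an assumption-laden illustration, not the MATLAB code of Figure 13: it approximates the zero-order-hold matrices with a truncated power series (a swapped-in technique chosen to avoid external libraries), uses a double integrator as a hypothetical plant with a scalar input, and the names `zoh` and `predict` are invented for the example.

```python
def matmul(a, b):
    """Plain nested-list matrix product."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def identity(n):
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]

def zoh(A, B, ts, terms=20):
    """Truncated series: Ad = sum (A ts)^k / k!, Bd = (sum A^k ts^(k+1) / (k+1)!) B."""
    n = len(A)
    Ad = identity(n)
    S = [[ts * v for v in row] for row in identity(n)]   # k = 0 term of the integral
    term = identity(n)                                   # holds (A ts)^k / k!
    for k in range(1, terms):
        term = [[v * ts / k for v in row] for row in matmul(term, A)]
        Ad = [[p + q for p, q in zip(r1, r2)] for r1, r2 in zip(Ad, term)]
        S = [[p + q * ts / (k + 1) for p, q in zip(r1, r2)]
             for r1, r2 in zip(S, term)]
    return Ad, matmul(S, B)

def predict(Ad, Bd, x_star, x_k, u_seq):
    """Roll out X_{k+i} = X* + Ad dX_{k+i-1} + Bd u_{k+i-1} for a scalar input."""
    dx = [[xi - xs] for xi, xs in zip(x_k, x_star)]      # column vector dX_k
    states = []
    for u in u_seq:
        adx = matmul(Ad, dx)
        dx = [[adx[i][0] + Bd[i][0] * u] for i in range(len(dx))]
        states.append([x_star[i] + dx[i][0] for i in range(len(dx))])
    return states

# Hypothetical plant: double integrator, sampled every 0.1 s.
A = [[0.0, 1.0], [0.0, 0.0]]
B = [[0.0], [1.0]]
Ad, Bd = zoh(A, B, 0.1)
print("Ad =", Ad)
print("Bd =", Bd)
print(predict(Ad, Bd, [0.0, 0.0], [0.0, 0.0], [1.0, 1.0]))
```

For this plant the series terminates after two terms, so the result can be compared with the closed form $A_d = [[1, t_s], [0, 1]]$ and $B_d = [t_s^2/2, \; t_s]^T$.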
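For nonlinear fast dynamical systems, a prediction horizon between 15 and 30 is considered good. Generalizing the latter expressions we obtain the following:

$$X_{k+1} = X^* + A_d \Delta X_k + B_d u_k$$

$$X_{k+2} = X^* + A_d^2 (X_k - X^*) + A_d B_d u_k + B_d u_{k+1}$$

$$X_{k+3} = X^* + A_d^3 (X_k - X^*) + A_d^2 B_d u_k + A_d B_d u_{k+1} + B_d u_{k+2}$$

$$\vdots$$

$$X_{k+N} = X^* + A_d^N \Delta X_k + A_d^{N-1} B_d u_k + \cdots + B_d u_{k+N-1}$$

$$\begin{bmatrix} X_{k+1} \\ X_{k+2} \\ \vdots \\ X_{k+N} \end{bmatrix} = X^* + \begin{bmatrix} A_d \\ A_d^2 \\ \vdots \\ A_d^N \end{bmatrix} \Delta X_k + \begin{bmatrix} B_d & 0 & \cdots & 0 \\ A_d B_d & B_d & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ A_d^{N-1} B_d & A_d^{N-2} B_d & \cdots & B_d \end{bmatrix} \begin{bmatrix} u_k \\ u_{k+1} \\ \vdots \\ u_{k+N-1} \end{bmatrix}$$

Since we want our controller to track a given reference $Y$, we multiply each block of the stacked state vector $\tilde{X}(k)$ by the output matrix $C$, as follows.

$$\begin{bmatrix} Y_{k+1} \\ Y_{k+2} \\ \vdots \\ Y_{k+N} \end{bmatrix} = C \begin{bmatrix} X_{k+1} \\ X_{k+2} \\ \vdots \\ X_{k+N} \end{bmatrix}$$

To simplify programming, we use a selector matrix $\Pi_i^{(m,N)}$, which extracts the $i$-th block of size $m$ from a stacked vector of $N$ blocks, and we denote the matrix that accumulates the effect of the control inputs on the system as $\psi_i$.

$$\Pi_i^{(m,N)} = \begin{bmatrix} \bar{0} & \bar{0} & \cdots & I & \cdots & \bar{0} & \bar{0} \end{bmatrix}$$

$$\tilde{X}(k) = X^* + \phi\, \Delta X_k + \begin{bmatrix} B_d & 0 & \cdots & 0 \\ A_d B_d & B_d & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ A_d^{N-1} B_d & A_d^{N-2} B_d & \cdots & B_d \end{bmatrix} \tilde{u}(k)$$

$$X(k+i) = X^* + \phi_i \Delta X_k + \begin{bmatrix} A_d^{i-1} B_d & \cdots & A_d B_d & B_d \end{bmatrix} \begin{bmatrix} \Pi_1^{(n_u,N)} \\ \Pi_2^{(n_u,N)} \\ \vdots \\ \Pi_i^{(n_u,N)} \end{bmatrix} \tilde{u}(k)$$

The expression below will be used for predicting the future states of the system.

$$X(k+i) = X^* + \phi_i \Delta X_k + \psi_i \tilde{u}(k)$$

9. Cost function

Let us remember that the cost function is a weighted sum of the differences between the reference and the output of the plant, as well as the control effort minus the desired control. Since we want to minimize the control effort, the desired control will be 0. Also, the reference will be static, therefore we will treat it as a constant.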
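$$J = \sum_{i=1}^{N} \left| \Pi_i^{(n_y,N)} \tilde{Y} - \mathrm{ref} \right|_Q^2 + \left| \Pi_i^{(n_u,N)} \tilde{u}(k) \right|_R^2$$

$$J = \sum_{i=1}^{N} \left| C\, \Pi_i^{(n_x,N)} \tilde{X}(k) - \mathrm{ref} \right|_Q^2 + \left| \Pi_i^{(n_u,N)} \tilde{u}(k) \right|_R^2$$

$$J = \sum_{i=1}^{N} \left| C \left(X^* + \phi_i \Delta X_k + \psi_i \tilde{u}(k)\right) - \mathrm{ref} \right|_Q^2 + \left| \Pi_i^{(n_u,N)} \tilde{u}(k) \right|_R^2$$

$$J = \sum_{i=1}^{N} \left| C X^* + C \phi_i \Delta X_k + C \psi_i \tilde{u}(k) - \mathrm{ref} \right|_Q^2 + \left| \Pi_i^{(n_u,N)} \tilde{u}(k) \right|_R^2$$

Since $X^*$ and $\mathrm{ref}$ are constant during the calculation of the cost function, we can group them into a new variable $e = C X^* - \mathrm{ref}$, which reduces the complexity of the calculations.

$$J = \sum_{i=1}^{N} \left| C \phi_i \Delta X_k + C \psi_i \tilde{u}(k) + e \right|_Q^2 + \left| \Pi_i^{(n_u,N)} \tilde{u}(k) \right|_R^2$$

$$\begin{aligned}
J_i &= \Delta X_k^T \phi_i^T C^T Q C \phi_i \Delta X_k + \tilde{u}(k)^T \psi_i^T C^T Q C \psi_i \tilde{u}(k) + e^T Q e \\
&\quad + 2 \Delta X_k^T \phi_i^T C^T Q C \psi_i \tilde{u}(k) + 2 \Delta X_k^T \phi_i^T C^T Q e + 2 \tilde{u}(k)^T \psi_i^T C^T Q e \\
&\quad + \tilde{u}(k)^T \Pi_i^{(n_u,N)T} R\, \Pi_i^{(n_u,N)} \tilde{u}(k)
\end{aligned}$$

Now that we have expanded the cost function, we can group the terms to get a quadratic form of the expression as follows:

$$J_i = \frac{1}{2} u^T H u + F^T u$$

The latter is useful if we want to use QP solvers for constrained optimization. Dropping the terms that do not depend on $\tilde{u}(k)$, which do not affect the minimizer:

$$J = \frac{1}{2} \tilde{u}(k)^T H \tilde{u}(k) + \left[F_1 \Delta X_k + F_2\right]^T \tilde{u}(k)$$

$$H = 2 \sum_{i=1}^{N} \left[\psi_i^T C^T Q C \psi_i + \Pi_i^{(n_u,N)T} R\, \Pi_i^{(n_u,N)}\right]$$

$$F_1 = 2 \sum_{i=1}^{N} \left[\psi_i^T C^T Q C \phi_i\right]$$

$$F_2 = 2 \sum_{i=1}^{N} \left[\psi_i^T C^T Q e\right]$$

To obtain the optimal control vector, we take the gradient of the cost function and set it to 0. A zero gradient marks a stationary point (a peak or a valley); if the Hessian matrix $H$ is positive definite, the cost function is strictly convex and the stationary point is the global minimum.

$$\nabla J_u = \frac{\partial J}{\partial \tilde{u}(k)} = \tilde{u}(k)^T H + \left[F_1 \Delta X_k + F_2\right]^T = 0$$

Now we can take the transpose of both sides and solve for $\tilde{u}(k)$, assuming that $H$ is symmetric ($H = H^T$) and invertible, and writing $F = F_1 \Delta X_k + F_2$.

$$\left(\tilde{u}(k)^T H + F^T\right)^T = 0$$

$$H \tilde{u}(k) + F = 0$$

$$\tilde{u}(k) = -H^{-1} F$$

For strictly convex cost functions, the expression $-H^{-1} F$ gives the optimal control trajectory at each time step. This is not yet the control applied to the plant: the MPC methodology takes only the first value of this optimal control trajectory, as follows.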
$$\tilde{u}(k) = -H^{-1} F$$

$$u_{opt} = \Pi_1^{(n_u,N)} \tilde{u}(k)$$

With all the latter expressions in place, we can implement them in a MATLAB simulation as follows:

9.1 Continuous to discrete dynamics:

Figure 13. Continuous to Discrete Matrices

9.2 Main control loop:

The main control loop uses the LinMPC() function, which offers different optimizers for the control input. To simulate the discretization, the control is updated only when the current time step modulo the sampling time equals 0, and at the beginning of the simulation.

Figure 14. Simulation main loop

9.3 LinMPC:

This is a selection function that chooses between different optimization methods for the cost function. It uses the HFQ function, which calculates the H and F matrices, to obtain the analytical optimal control, but this is valid only for convex cost functions.

Figure 15. Linear MPC selection function

9.4 HFQ:

This function receives the discrete A and B matrices, which we call Phi and Gamma, the current state X, the penalization matrices Q and R, and the prediction horizon Np.

Figure 16. H and F constructor function

9.5 Selector Matrix PI:

Figure 17. Selection Matrix

9.6 PSI:

Matrix $\psi_i$ is implemented in the function PSI. It takes the previous $\psi_i$ value, the current selection matrix, the discrete transition matrix and the discrete control matrix, as well as the current iteration "i". The z value is used to concatenate the cumulative control inputs.

Figure 18. Control Accumulation Matrix

10. Gradient Descent function GD

Gradient descent is an optimization algorithm used to minimize a cost function by iteratively adjusting parameters in the direction of steepest descent, given by the negative gradient of the function. It starts with an initial guess and takes steps proportional to the gradient, with a step size defined by a learning rate. This process continues until the algorithm converges to a local or global minimum, depending on the function's shape.
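As a minimal sketch of this iteration, the Python code below runs fixed-step gradient descent on a small quadratic cost $J = \frac{1}{2} u^T H u + F^T u$, whose gradient is $H u + F$, and compares the result with the analytical solution $-H^{-1} F$. The function names, the 2x2 matrices and the learning rate are assumptions made for illustration; this is not the GD function shown in the paper's figures, and it omits the adaptive step size.

```python
import math

def grad(H, F, u):
    """Gradient of J(u) = 0.5 u^T H u + F^T u, i.e. H u + F."""
    return [sum(hij * uj for hij, uj in zip(row, u)) + fi
            for row, fi in zip(H, F)]

def gradient_descent(H, F, u0, alpha=0.2, max_iter=1000, tol=1e-8):
    """Fixed-step gradient descent; stops when the gradient norm falls below tol."""
    u = list(u0)
    for it in range(max_iter):
        g = grad(H, F, u)
        if math.sqrt(sum(gi * gi for gi in g)) < tol:
            return u, it
        u = [ui - alpha * gi for ui, gi in zip(u, g)]
    return u, max_iter

# Hypothetical positive-definite Hessian and linear term.
H = [[3.0, 1.0], [1.0, 2.0]]
F = [-5.0, -5.0]

u_gd, iters = gradient_descent(H, F, [0.0, 0.0])
print("gradient descent:", u_gd, "after", iters, "iterations")
print("analytical -H^-1 F: [1.0, 2.0]")   # since H [1, 2]^T = [5, 5]^T = -F
```

For a quadratic cost the iteration converges only if the learning rate is below $2 / \lambda_{max}(H)$, which is why the step size matters so much in practice.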
Gradient descent is widely used in machine learning for training models by minimizing error or loss functions.

Figure 19. Convex Function Gradient Descent

One of the issues with gradient descent is that a poorly chosen learning rate alpha can lead to oscillations or divergence, which takes us away from the minimum. One way to address this is an adaptive learning rate, tied to the gradient in such a way that the learning rate decreases as the gradient decreases, which reduces the oscillations. Our function implements this adaptive learning rate by limiting the norm of the gradient vector, which saturates the step in case the gradient is too big. Figure 20 shows the implementation of gradient descent for the optimization problem. It receives the maximum number of iterations, the H and F matrices, the learning rate alpha and a gamma coefficient which serves as the initial learning rate.

Figure 20. Gradient Descent Function

11. Nesterov gradient descent

Nesterov's accelerated gradient descent improves on momentum by making a prediction step before calculating the gradient, which provides a more accurate adjustment. Instead of updating based solely on the current position, it estimates the next position and calculates the gradient there, allowing for more informed and efficient progress toward the minimum. This method reduces overshooting and gives faster convergence in many scenarios. Figure 21 shows the function for Nesterov's Accelerated Gradient; it receives the same arguments as the gradient descent function above.

Figure 21. Nesterov's Accelerated Gradient Descent Function

12. Momentum gradient descent

Momentum gradient descent enhances standard gradient descent by incorporating a momentum term that accumulates the gradients of past iterations.
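The two accelerated variants differ only in where the gradient is evaluated, which the following Python sketch tries to make explicit on a small quadratic cost $J = \frac{1}{2} u^T H u + F^T u$. It is an illustration under assumptions, not the code of Figures 21 and 22: the function names, the 2x2 matrices, the learning rate `alpha` and the momentum coefficient `beta` are all hypothetical, and constant coefficients are used for simplicity.

```python
import math

def grad(H, F, u):
    """Gradient of J(u) = 0.5 u^T H u + F^T u, i.e. H u + F."""
    return [sum(hij * uj for hij, uj in zip(row, u)) + fi
            for row, fi in zip(H, F)]

def norm(v):
    return math.sqrt(sum(vi * vi for vi in v))

def momentum_gd(H, F, u0, alpha=0.2, beta=0.5, max_iter=1000, tol=1e-8):
    """Momentum: the velocity accumulates gradients taken at the current point."""
    u, v = list(u0), [0.0] * len(u0)
    for it in range(max_iter):
        g = grad(H, F, u)
        if norm(g) < tol:
            return u, it
        v = [beta * vi - alpha * gi for vi, gi in zip(v, g)]
        u = [ui + vi for ui, vi in zip(u, v)]
    return u, max_iter

def nesterov_gd(H, F, u0, alpha=0.2, beta=0.5, max_iter=1000, tol=1e-8):
    """Nesterov: the gradient is taken at the look-ahead point u + beta * v."""
    u, v = list(u0), [0.0] * len(u0)
    for it in range(max_iter):
        if norm(grad(H, F, u)) < tol:
            return u, it
        look_ahead = [ui + beta * vi for ui, vi in zip(u, v)]
        g = grad(H, F, look_ahead)
        v = [beta * vi - alpha * gi for vi, gi in zip(v, g)]
        u = [ui + vi for ui, vi in zip(u, v)]
    return u, max_iter

# Hypothetical positive-definite Hessian and linear term; minimizer is [1, 2].
H = [[3.0, 1.0], [1.0, 2.0]]
F = [-5.0, -5.0]

for name, solver in (("momentum", momentum_gd), ("nesterov", nesterov_gd)):
    u_opt, iters = solver(H, F, [0.0, 0.0])
    print(name, u_opt, "iterations:", iters)
```

Whether either variant needs fewer iterations than plain gradient descent depends on how `alpha` and `beta` are tuned for the problem at hand.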
The momentum term helps the algorithm build velocity in a consistent direction, reducing oscillations and speeding up convergence. The update combines a fraction of the previous step (momentum) with the current gradient, smoothing the trajectory and helping to overcome small local minima or plateaus.

Figure 22. Momentum Gradient Descent Function

13. Results

LQR

In Figure 23 we can observe that the tracking of the angles of both pendulums is smooth, with few oscillations. We can also observe a fast rise time, and the transition from transient to steady state also looks fast. We do observe some overshoot, but this is expected since the controller needs to compensate for the initial conditions.

Figure 23. LQR angle trajectory

As shown in Figure 24, the control effort has a maximum value of around 100 N or a little above (the precise value will be shown later). The system weighs 10 kg. If we take $T = Fd$, with $d$ the wheel radius (0.1 m for a nominal wheel diameter of 0.2 m), then the torque the motor needs to provide is around 10 N·m. This is quite high; however, there are BLDC motors capable of providing that amount of torque. We could increase the penalization matrix for the control, but for our purposes this is good enough. We also see a decaying control effort, which is good for reducing energy use.

Figure 24. LQR control effort

For the tracking of position and velocity in Figure 25, we see that the LQR can drive them to the desired state values. For all controllers, however, the position state seems to be the one over which we have the least control authority, even though we penalize its error much more heavily than that of the other states.

Figure 25. Position, Velocity and Angle tracking LQR

Gradient Descent

In Figure 26 the angle tracking is good; however, we do see some oscillations as time increases. This might be because the error becomes small enough that the gradient descent step size is too big to converge, so the optimizer itself could be oscillating. The response time is good: the pendulums are stabilized in under 5 seconds.
Figure 26. Angle tracking GD

The maximum control effort shown in Figure 27 is lower than that of the LQR; however, as time goes on it starts oscillating. We could try to add some damping to the control, for example a derivative term, to reduce the oscillations.

Figure 27. Control effort for GD

In Figure 28 we see the tracking of the position and velocity states. We also see oscillations in the velocity tracking. Most importantly, and this holds for all the gradient descent algorithms shown here, there is a large steady-state error in the position of the cart. We could use an integral term to reduce it.

Figure 28. Position, Velocity and Angle tracking GD

In Figure 29 we see that the gradient descent algorithm needs around 23 to 24 iterations on average, taking into account that the step size is proportional to the gradient to smooth out oscillations.

Figure 29. Iterations at optimization GD

Momentum Gradient Descent

Figure 30. Angle tracking MGD

Figure 31. Control effort MGD

Figure 32. Iterations at optimization MGD

Figure 33. Position, Velocity and Angle tracking MGD

Nesterov's Accelerated Gradient

Figure 34. Position, Velocity and Angle tracking NAG

Figure 35. Control effort NAG

Figure 36. Iterations at optimization MGD

Performance Metrics Overview:

To evaluate the performance of each optimization technique we compute the following criteria:

Rise Time: This is the time it takes for the signal to rise from 10% to 90% of its final value. It shows how quickly the system responds to a change. A quick rise time is typically desired, but a rise that is too fast can lead to overshoot and instability.

Settling Time: This is the time it takes for the system's response to remain within a certain range (typically within 2% of its final value). It's important because it shows how quickly the system stabilizes after a change.
Overshoot: The maximum amount by which the response exceeds its final steady-state value, expressed as a percentage. High overshoot can indicate instability or a control system that is too aggressive.

Steady-State Error: The difference between the desired final value (reference) and the actual steady-state value of the system. Ideally this error is zero, but for underactuated systems like the Double Inverted Pendulum on a Cart some error may remain.

Control Effort: The magnitude of the control signal (input) required to drive the system towards the reference. High control effort can be undesirable because it indicates high energy consumption or excessive forces.

Metrics

In the following figures we will see the metrics for the positional states.

Conclusion

In conclusion, each gradient descent algorithm has its pros and cons. Plain gradient descent with adaptive step size shows the best tracking and the fewest oscillations, while the number of iterations is about the same for all three methods. To obtain the most efficient optimization for each, all three would need to be tuned individually, including their penalization matrices. NAG and MGD appear to cancel out the damping provided by friction, since the system shows increased oscillations with them. The momentum and learning rate of each method could also be tuned further to improve performance. Overall, the double inverted pendulum was controlled and stabilized successfully. A real-life implementation would nevertheless require some modifications, such as including the moments of inertia in the model as well as 6DOF dynamics.
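The criteria defined in the Performance Metrics Overview can be computed from sampled signals roughly as follows. This is a sketch under stated assumptions, not the code used to produce the report's metrics: the function name `step_metrics`, the first-order test response, and the choice to report peak absolute input as control effort are all illustrative. The response is normalized by the step amplitude (final value minus initial value), so the 10%, 90%, and 2% thresholds are fractions of that amplitude.

```python
import math


def step_metrics(t, y, u, reference, band=0.02):
    """Compute step-response metrics from equal-length sampled lists.

    t: time, y: output, u: control input.
    Assumes y moves from y[0] to a different final value y[-1].
    """
    y0, y_final = y[0], y[-1]
    span = y_final - y0  # signed step amplitude (assumed nonzero)
    progress = [(yi - y0) / span for yi in y]  # 0 at start, 1 at final value

    # Rise time: first crossing of 10% to first crossing of 90%.
    t10 = next(ti for ti, p in zip(t, progress) if p >= 0.1)
    t90 = next(ti for ti, p in zip(t, progress) if p >= 0.9)

    # Settling time: first sample after the last excursion outside the band.
    last_outside = -1
    for i, p in enumerate(progress):
        if abs(p - 1.0) > band:
            last_outside = i
    settling_time = t[min(last_outside + 1, len(t) - 1)]

    return {
        "rise_time": t90 - t10,
        "settling_time": settling_time,
        "overshoot_pct": max(0.0, (max(progress) - 1.0) * 100.0),
        "steady_state_error": reference - y_final,
        "peak_effort": max(abs(ui) for ui in u),
    }


# Hypothetical first-order response y = 1 - exp(-t) with a decaying input.
dt = 0.01
t = [k * dt for k in range(1001)]
y = [1.0 - math.exp(-tk) for tk in t]
u = [-2.0 * math.exp(-tk) for tk in t]

metrics = step_metrics(t, y, u, reference=1.0)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

For states regulated to a zero reference, such as the pendulum angles, normalizing by the step amplitude avoids dividing by a final value of zero. The last sample is used as the final value, so the simulation must run long enough for the response to settle.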