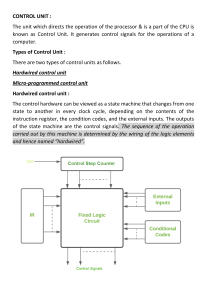

Understanding the Control Unit Functions and Importance in Computing Author: remko.online Year: 2025 Y Chapter 2: Understanding the Control Unit: Functions and Importance in Computing Key Functions of the Control Unit: Decoding and Execution The control unit (CU) is a pivotal component of a computer's central processing unit (CPU), orchestrating the operations of the processor and ensuring that instructions are executed in a systematic manner. Two of its primary functions are decoding and execution, which are essential for transforming high-level programming commands into actions that the computer can perform. Understanding these functions not only sheds light on how computers operate but also enhances our appreciation of the intricate processes that occur within a CPU. Decoding: Translating Instructions Decoding is the first step in the instruction cycle, where the control unit interprets the binary code of an instruction fetched from memory. Each instruction is represented in a specific format, typically consisting of an opcode (operation code) and operands. The opcode specifies the operation to be performed, while the operands provide the necessary data or addresses for the operation. For example, consider a simple instruction in assembly language: ADD R1, R2, R3 . In this case, the opcode is ADD , which tells the control unit to perform an addition operation. The operands R1 , R2 , and R3 indicate that the values in registers R2 and R3 should be added together, and the result should be stored in register R1. During the decoding phase, the control unit identifies the opcode and determines the type of operation to execute, as well as the locations of the operands. The decoding process involves several steps: 1. Instruction Fetching: The control unit retrieves the instruction from memory, using the program counter (PC) to keep track of the next instruction to be executed. 2. Instruction Interpretation: The CU interprets the fetched instruction, breaking it down into its opcode and operands. 3. Control Signal Generation: Based on the decoded instruction, the control unit generates control signals that direct other components of the CPU, such as the arithmetic logic unit (ALU) and registers, on how to proceed. Execution: Carrying Out the Instructions Once the instruction has been decoded, the next phase is execution. This is where the actual operation specified by the instruction is performed. The control unit coordinates the execution by sending appropriate signals to the ALU, which performs arithmetic and logical operations, and to the registers, which temporarily hold data. Continuing with our previous example, after decoding the ADD R1, R2, R3 instruction, the control unit will: 1. Activate the ALU: The CU sends a control signal to the ALU to perform the addition operation. 2. Transfer Data: The values from registers R2 and R3 are sent to the ALU. 3. Store the Result: Once the addition is complete, the result is sent back to register R1, as specified by the instruction. The execution phase can involve various types of operations, including arithmetic calculations (like addition, subtraction, multiplication), logical operations (such as AND, OR, NOT), and data movement (copying data from one location to another). Each of these operations requires the control unit to manage the flow of data and ensure that the correct components of the CPU are engaged. Practical Implications of Decoding and Execution The efficiency of the decoding and execution processes directly impacts the overall performance of a computer system. For instance, modern CPUs employ techniques such as pipelining, where multiple instructions are overlapped in execution. This means that while one instruction is being executed, another can be decoded, and yet another can be fetched from memory. Such optimizations significantly enhance processing speed and efficiency. Moreover, understanding these functions is crucial for software developers and engineers. When writing code, they must be aware of how their instructions will be interpreted and executed by the control unit. This knowledge can influence decisions regarding algorithm efficiency and resource management. In summary, the decoding and execution functions of the control unit are fundamental to the operation of a computer. They transform high-level instructions into actionable tasks, enabling the CPU to perform complex computations and manage data effectively. By grasping these concepts, we gain insight into the inner workings of computing systems and the critical role played by the control unit in ensuring smooth and efficient operation. For further reading on the architecture of CPUs and the role of the control unit, you can explore resources such as Computer Architecture: A Quantitative Approach by John L. Hennessy and David A. Patterson. Chapter 3: The Role of the Control Unit in Instruction Cycle: A Step-by-Step Guide The control unit (CU) is a critical component of a computer's central processing unit (CPU), orchestrating the execution of instructions in a systematic manner. To understand its role, we must first grasp the concept of the instruction cycle, which is the process through which a computer retrieves, decodes, and executes instructions. This chapter will delve into the step-bystep functions of the control unit during the instruction cycle, illustrating its importance in computing. The Instruction Cycle: An Overview The instruction cycle consists of three primary stages: fetch, decode, and execute. Each of these stages is essential for the proper functioning of a computer, and the control unit plays a pivotal role in managing these processes. 1. Fetch: In this initial stage, the control unit retrieves an instruction from memory. The instruction is located at a specific address, which is stored in the program counter (PC). The control unit sends a signal to the memory unit to fetch the instruction at the address indicated by the PC. Once the instruction is retrieved, the control unit increments the PC to point to the next instruction, preparing for the next cycle. Example: Imagine a simple program that adds two numbers. The first instruction might be to load the first number from memory. The control unit fetches this instruction, updates the program counter, and prepares to decode it. 2. Decode: After fetching the instruction, the control unit decodes it to understand what action is required. This involves interpreting the opcode (operation code) and identifying the operands (the data to be processed). The control unit uses a set of predefined rules to translate the binary instruction into a format that can be understood by the rest of the CPU. Example: Continuing with our addition program, the fetched instruction might be "LOAD A, 5", where "LOAD" is the opcode, and "A" and "5" are the operands. The control unit decodes this instruction to determine that it needs to load the value 5 into register A. 3. Execute: In the final stage, the control unit coordinates the execution of the instruction. It sends signals to the arithmetic logic unit (ALU) or other components to perform the required operation. The results of this operation may be stored back in memory or in a register, depending on the instruction. Example: If the next instruction is "ADD A, B", the control unit will instruct the ALU to add the values in registers A and B, and store the result in a designated register or memory location. The Control Unit's Functions in Detail The control unit's role in the instruction cycle is not merely passive; it actively manages the flow of data and instructions throughout the CPU. Here are some of its key functions: Timing and Control Signals: The control unit generates timing signals that synchronize the operations of the CPU components. These signals ensure that data is processed in the correct sequence and at the right time. For instance, it controls when to read from memory and when to write back results. Instruction Sequencing: The control unit determines the sequence in which instructions are executed. This is crucial for maintaining the logical flow of a program. If a branch instruction is encountered (e.g., an "if" statement), the control unit must adjust the program counter accordingly to jump to the correct instruction. Error Handling: The control unit also plays a role in detecting and handling errors during instruction execution. For example, if an invalid instruction is fetched, the control unit can trigger an exception or interrupt, allowing the system to respond appropriately. Micro-Operations: The control unit breaks down complex instructions into simpler micro-operations that can be executed in a step-by-step manner. This decomposition allows for more efficient processing and better resource management within the CPU. Practical Implications of the Control Unit's Role Understanding the control unit's functions is essential for anyone interested in computer architecture and programming. For instance, when optimizing code, programmers must be aware of how the control unit processes instructions. Efficient coding practices can minimize the number of instructions executed, thereby reducing the workload on the control unit and improving overall system performance. Moreover, the design of the control unit can significantly impact the performance of a CPU. Modern processors often employ techniques such as pipelining, where multiple instruction cycles overlap, allowing for faster execution. The control unit must manage this complexity, ensuring that instructions are executed in the correct order without conflicts. In summary, the control unit is the brain of the CPU, directing the flow of instructions and data throughout the instruction cycle. Its ability to fetch, decode, and execute instructions efficiently is fundamental to the performance of any computing system. Understanding its role not only enhances our knowledge of computer architecture but also informs better programming practices and system design. For further exploration of the control unit's functions and its impact on computing, consider reading about pipelining in modern CPUs and error handling mechanisms in computer systems. Chapter 4: Control Unit Architectures: Hardwired vs. Microprogrammed The control unit (CU) is a critical component of a computer's central processing unit (CPU), responsible for directing the operation of the processor and coordinating the activities of all other components. Understanding the architectures of control units—specifically, hardwired and microprogrammed designs— provides insight into how computers execute instructions and manage tasks efficiently. Hardwired Control Units A hardwired control unit uses fixed logic circuits to control the operations of the CPU. This architecture is designed using combinational logic circuits, which means that the control signals are generated directly from the input signals without the need for memory storage. The design is typically implemented using gates, flip-flops, and other digital components. Characteristics of Hardwired Control Units 1. Speed: Hardwired control units are generally faster than their microprogrammed counterparts because they do not require fetching control signals from memory. The control signals are generated in real-time based on the current state of the inputs. 2. Complexity: The design of hardwired control units can become complex as the number of instructions increases. Each instruction requires a specific set of control signals, leading to intricate logic circuits that can be challenging to design and maintain. 3. Flexibility: Once a hardwired control unit is designed and implemented, it is not easily modified. Any changes to the instruction set or control logic require a complete redesign of the hardware. Example of Hardwired Control Units A classic example of a hardwired control unit can be found in early microprocessors, such as the Intel 8085. This microprocessor utilized a hardwired control unit to execute its instruction set, allowing for rapid processing of commands. The control signals were generated based on the opcode of the instruction being executed, enabling the CPU to perform operations like data transfer, arithmetic calculations, and logical operations efficiently. Microprogrammed Control Units In contrast, a microprogrammed control unit uses a set of instructions stored in memory, known as microinstructions, to generate control signals. This architecture allows for greater flexibility and easier modification of the control logic. Characteristics of Microprogrammed Control Units 1. Flexibility: Microprogrammed control units can be easily modified by changing the microinstructions stored in memory. This adaptability makes it simpler to implement new instructions or modify existing ones without redesigning the hardware. 2. Complexity: While microprogrammed control units can simplify the design process, they may introduce additional complexity in terms of performance. Fetching microinstructions from memory can slow down the execution of instructions compared to hardwired designs. 3. Ease of Implementation: Microprogramming allows for a more straightforward implementation of complex instruction sets. The control logic can be defined in a high-level manner, making it easier for designers to understand and modify. Example of Microprogrammed Control Units A well-known example of a microprogrammed control unit is found in the Digital Equipment Corporation (DEC) PDP-11 series of computers. The PDP-11 utilized a microprogrammed control unit that allowed for a rich instruction set and the ability to easily implement new features. The microinstructions were stored in a control memory, enabling the system to execute complex operations with relative ease. Comparison of Hardwired and Microprogrammed Control Units When comparing hardwired and microprogrammed control units, several factors come into play: Performance: Hardwired control units typically offer better performance due to their speed, while microprogrammed units may experience delays due to memory access times. Design Complexity: Hardwired designs can become complex and difficult to manage, whereas microprogrammed designs allow for easier updates and modifications. Cost: Hardwired control units may be more cost-effective for simpler systems, while microprogrammed units can be more economical for complex systems that require frequent updates. In practical applications, the choice between hardwired and microprogrammed control units often depends on the specific requirements of the system being designed. For instance, embedded systems that require high-speed processing may favor hardwired designs, while general-purpose computers that need flexibility and adaptability may opt for microprogrammed architectures. Understanding these two architectures is essential for grasping how control units function within the broader context of computing. The choice of architecture can significantly impact the performance, flexibility, and complexity of a computer system, ultimately influencing how effectively it can execute tasks and respond to user needs. For further reading on control unit architectures, you can explore resources such as Computer Organization and Design by David Patterson and John Hennessy, which provides a comprehensive overview of these concepts. Chapter 5: Recent Developments in Control Unit Technology: Trends and Innovations The control unit (CU) is a pivotal component of a computer's central processing unit (CPU), responsible for directing the operation of the processor. It orchestrates the execution of instructions by managing the flow of data within the CPU and between the CPU and other components of the computer. As technology evolves, so too does the control unit, adapting to meet the demands of modern computing. This chapter explores recent developments in control unit technology, highlighting trends and innovations that are shaping the future of computing. 1. Microarchitecture Enhancements One of the most significant trends in control unit technology is the advancement of microarchitecture. Microarchitecture refers to the way a given instruction set architecture (ISA) is implemented in a particular processor. Recent innovations have focused on improving the efficiency and performance of control units through techniques such as pipelining and superscalar execution. Pipelining Pipelining is a technique that allows multiple instruction phases to be processed simultaneously. In a traditional non-pipelined architecture, each instruction must complete all its stages before the next one begins. This can lead to inefficiencies, as the CPU often has to wait for one instruction to finish before starting another. Pipelining breaks down instruction execution into discrete stages—fetch, decode, execute, and write-back— allowing the control unit to fetch a new instruction while the previous one is still being executed. For example, in a 5-stage pipeline, while one instruction is being executed, another can be decoded, and yet another can be fetched from memory. This overlapping of instruction execution significantly increases throughput and overall performance. Modern processors, such as Intel's Core series, utilize advanced pipelining techniques to achieve high levels of performance. Superscalar Architecture Superscalar architecture takes pipelining a step further by allowing multiple instructions to be issued and executed in parallel during a single clock cycle. This is made possible by having multiple execution units within the control unit, enabling it to handle several instructions simultaneously. For instance, a superscalar processor can execute two or more integer operations and a floating-point operation at the same time, effectively increasing the instruction throughput. 2. Adaptive Control Units Another notable trend is the development of adaptive control units that can dynamically adjust their operation based on workload and performance requirements. These control units utilize machine learning algorithms to analyze the execution patterns of programs and optimize resource allocation accordingly. Dynamic Voltage and Frequency Scaling (DVFS) One practical example of adaptive control is Dynamic Voltage and Frequency Scaling (DVFS). DVFS allows the control unit to adjust the voltage and frequency of the CPU based on the current workload. When the CPU is under heavy load, the control unit can increase the frequency to boost performance. Conversely, during lighter workloads, it can reduce the frequency and voltage to save power. This not only enhances performance but also improves energy efficiency, which is crucial in mobile and embedded systems. 3. Integration of AI and Machine Learning The integration of artificial intelligence (AI) and machine learning (ML) into control unit technology is another exciting development. AI algorithms can be employed to predict the next instructions that will be executed, allowing the control unit to pre-fetch data and instructions, thereby reducing latency. Predictive Caching For example, predictive caching uses historical data to anticipate which data will be needed next. By pre-loading this data into faster cache memory, the control unit can significantly reduce the time it takes to access data from slower main memory. This technique is particularly beneficial in applications that require real-time processing, such as video streaming and gaming. 4. Quantum Control Units As we look to the future, the emergence of quantum computing presents a new frontier for control unit technology. Quantum control units operate on the principles of quantum mechanics, utilizing qubits instead of traditional bits. This allows for vastly increased processing power and efficiency. Quantum Gate Operations In quantum computing, control units manage quantum gate operations, which manipulate qubits to perform calculations. These operations are fundamentally different from classical logic gates, as they can exist in multiple states simultaneously due to superposition. This capability enables quantum computers to solve complex problems much faster than classical computers. For instance, quantum control units are being explored for applications in cryptography, optimization problems, and drug discovery. 5. Enhanced Security Features With the increasing prevalence of cyber threats, recent developments in control unit technology have also focused on enhancing security features. Control units are now being designed with built-in security mechanisms to protect against various types of attacks, such as side-channel attacks and buffer overflows. Hardware Security Modules (HSM) One example of this is the implementation of Hardware Security Modules (HSM) within control units. HSMs are dedicated hardware components that manage digital keys and perform cryptographic operations. By integrating HSMs into the control unit, systems can ensure that sensitive data is processed securely, reducing the risk of unauthorized access and data breaches. In summary, the control unit is undergoing significant transformations driven by advancements in microarchitecture, adaptive technologies, AI integration, quantum computing, and enhanced security features. These innovations not only improve the performance and efficiency of computing systems but also pave the way for new applications and capabilities in the digital landscape. As we continue to explore the potential of control unit technology, it is clear that its evolution will play a pivotal role in shaping the future of computing. For further reading on control unit technology and its implications, you can explore resources such as Intel's Architecture Documentation and IBM's Quantum Computing Resources. Chapter 6: The Future of Control Units: Challenges and Opportunities As we delve into the future of control units in computing, it is essential to understand their pivotal role in the architecture of modern processors. Control units (CUs) are integral components that orchestrate the operations of a computer's central processing unit (CPU). They manage the flow of data within the CPU and between the CPU and other components, ensuring that instructions are executed in the correct sequence. However, as technology evolves, so too do the challenges and opportunities that lie ahead for control units. The Evolution of Control Units Historically, control units have undergone significant transformations. From the early days of simple hardwired control units, which used fixed logic circuits to manage operations, to the more sophisticated microprogrammed control units that utilize stored instructions to dictate behavior, the evolution has been remarkable. For instance, modern CPUs often employ a combination of both hardwired and microprogrammed techniques to optimize performance and flexibility. This evolution is driven by the increasing complexity of software applications and the demand for higher processing speeds. Challenges Ahead One of the primary challenges facing control units is the need to keep pace with the rapid advancements in technology. As we move towards more complex architectures, such as multi-core and many-core processors, control units must adapt to manage multiple instruction streams efficiently. This is particularly relevant in the context of parallel processing, where multiple operations are executed simultaneously. For example, in a multicore processor, each core may have its own control unit, which must coordinate with others to ensure that tasks are distributed effectively and that data dependencies are respected. Another significant challenge is energy efficiency. As computing devices become more powerful, they also consume more power, leading to increased heat generation and reduced battery life in portable devices. Control units must be designed to minimize power consumption while maintaining performance. Techniques such as dynamic voltage and frequency scaling (DVFS) allow control units to adjust their power usage based on workload, but implementing these techniques requires sophisticated control logic. Opportunities for Innovation Despite these challenges, the future of control units is ripe with opportunities for innovation. One promising area is the integration of artificial intelligence (AI) and machine learning (ML) into control unit design. By leveraging AI algorithms, control units can learn from usage patterns and optimize their operations in real-time. For instance, an AI-enhanced control unit could predict which instructions are likely to be executed next and pre-fetch them, significantly reducing latency and improving overall performance. Moreover, the rise of quantum computing presents a unique opportunity for control units. Quantum processors operate on fundamentally different principles than classical processors, and control units will need to evolve to manage quantum bits (qubits) and their complex interactions. This could lead to the development of entirely new architectures and control strategies that harness the power of quantum mechanics for unprecedented computational capabilities. Real-World Applications To illustrate the potential of future control units, consider the example of autonomous vehicles. These vehicles rely on complex algorithms to process vast amounts of data from sensors in real-time. Control units in these systems must manage multiple data streams, make split-second decisions, and communicate with other vehicles and infrastructure. The integration of advanced control units that utilize AI and machine learning can enhance the vehicle's ability to navigate safely and efficiently, adapting to changing conditions on the road. In the realm of cloud computing, control units also play a crucial role. As cloud services become more prevalent, the demand for efficient resource management increases. Control units that can dynamically allocate resources based on real-time demand can significantly improve the performance and cost-effectiveness of cloud services. For example, a control unit that monitors server loads and adjusts resource allocation accordingly can help prevent bottlenecks and ensure smooth operation. Conclusion The future of control units is characterized by both challenges and opportunities. As technology continues to advance, control units must evolve to meet the demands of increasingly complex computing environments. By embracing innovation and leveraging emerging technologies, control units can enhance their functionality and play a critical role in shaping the future of computing. The journey ahead is not without its hurdles, but the potential for growth and improvement is vast, promising a new era of efficiency and capability in computing systems. For further reading on the evolution of control units and their impact on computing, you can explore resources such as Computer Architecture: A Quantitative Approach by John L. Hennessy and David A. Patterson, which provides in-depth insights into the architecture of modern processors.