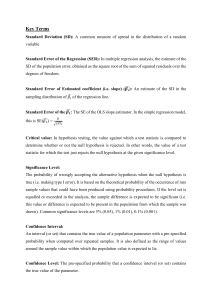

CHAPTER 1: DATA AND STATISTICS

Analytics: The scientific process of transforming data into insight for making better decisions.
Big data: A set of data that cannot be managed, processed, or analyzed with commonly available software in a reasonable amount of time. Big data are characterized by great volume (a large amount of data), high velocity (fast collection and processing), or wide variety (could include nontraditional data such as video, audio, and text).
Categorical data: Labels or names used to identify an attribute of each element. Categorical data use either the nominal or ordinal scale of measurement and may be nonnumeric or numeric.
Categorical variable: A variable with categorical data.
Census: A survey to collect data on the entire population.
Cross-sectional data: Data collected at the same or approximately the same point in time.
Data: The facts and figures collected, analyzed, and summarized for presentation and interpretation.
Data mining: The process of using procedures from statistics and computer science to extract useful information from extremely large databases.
Data set: All the data collected in a particular study.
Descriptive analytics: The set of analytical techniques that describe what has happened in the past.
Descriptive statistics: Tabular, graphical, and numerical summaries of data.
Elements: The entities on which data are collected.
Interval scale: The scale of measurement for a variable if the data demonstrate the properties of ordinal data and the interval between values is expressed in terms of a fixed unit of measure. Interval data are always numeric.
Nominal scale: The scale of measurement for a variable when the data are labels or names used to identify an attribute of an element. Nominal data may be nonnumeric or numeric.
Observation: The set of measurements obtained for a particular element.
Ordinal scale: The scale of measurement for a variable if the data exhibit the properties of nominal data and the order or rank of the data is meaningful. Ordinal data may be nonnumeric or numeric.
Population: The set of all elements of interest in a particular study.
Quantitative data: Numeric values that indicate how much or how many of something. Quantitative data are obtained using either the interval or ratio scale of measurement.
Quantitative variable: A variable with quantitative data.
Ratio scale: The scale of measurement for a variable if the data demonstrate all the properties of interval data and the ratio of two values is meaningful. Ratio data are always numeric.
Sample: A subset of the population.
Sample survey: A survey to collect data on a sample.
Statistical inference: The process of using data obtained from a sample to make estimates or test hypotheses about the characteristics of a population.
Statistics: The art and science of collecting, analyzing, presenting, and interpreting data.
Variable: A characteristic of interest for the elements.

CHAPTER 2: DESCRIPTIVE STATISTICS: TABULAR AND GRAPHICAL DISPLAYS

Bar chart: A graphical device for depicting categorical data that have been summarized in a frequency, relative frequency, or percent frequency distribution.
Class midpoint: The value halfway between the lower and upper class limits.
Crosstabulation: A tabular summary of data for two variables. The classes for one variable are represented by the rows; the classes for the other variable are represented by the columns.
Cumulative frequency distribution: A tabular summary of quantitative data showing the number of data values that are less than or equal to the upper class limit of each class.
Cumulative percent frequency distribution: A tabular summary of quantitative data showing the percentage of data values that are less than or equal to the upper class limit of each class.
Cumulative relative frequency distribution: A tabular summary of quantitative data showing the fraction or proportion of data values that are less than or equal to the upper class limit of each class.
Data visualization: A term used to describe the use of graphical displays to summarize and present information about a data set.
Dot plot: A graphical device that summarizes data by the number of dots above each data value on the horizontal axis.
Frequency distribution: A tabular summary of data showing the number (frequency) of observations in each of several nonoverlapping categories or classes.
Histogram: A graphical display of a frequency distribution, relative frequency distribution, or percent frequency distribution of quantitative data constructed by placing the class intervals on the horizontal axis and the frequencies, relative frequencies, or percent frequencies on the vertical axis.
Percent frequency distribution: A tabular summary of data showing the percentage of observations in each of several nonoverlapping classes.
Pie chart: A graphical device for presenting data summaries based on subdivision of a circle into sectors that correspond to the relative frequency for each class.
Relative frequency distribution: A tabular summary of data showing the fraction or proportion of observations in each of several nonoverlapping categories or classes.
Scatter diagram: A graphical display of the relationship between two quantitative variables. One variable is shown on the horizontal axis and the other variable is shown on the vertical axis.
Side-by-side bar chart: A graphical display for depicting multiple bar charts on the same display.
Stacked bar chart: A bar chart in which each bar is broken into rectangular segments of a different color showing the relative frequency of each class in a manner similar to a pie chart.
Stem-and-leaf display: A graphical display used to show simultaneously the rank order and shape of a distribution of data.
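
The frequency, relative frequency, percent frequency, and cumulative frequency distributions defined in this chapter can be sketched in a few lines of Python. This is only an illustration: the data values, the class limits, and the function names `frequency_table` and `cumulative_frequencies` are made up for the example.

```python
from collections import Counter

# Hypothetical categorical data (made-up soft drink purchases).
purchases = ["Coke", "Pepsi", "Coke", "Sprite", "Coke",
             "Pepsi", "Coke", "Sprite", "Pepsi", "Coke"]


def frequency_table(values):
    """Return rows of (class, frequency, relative frequency, percent frequency)."""
    counts = Counter(values)
    n = len(values)
    return [(label, f, f / n, 100 * f / n) for label, f in counts.most_common()]


def cumulative_frequencies(values, upper_limits):
    """Count the data values less than or equal to each upper class limit."""
    return [sum(1 for v in values if v <= limit) for limit in upper_limits]


table = frequency_table(purchases)
for label, freq, rel, pct in table:
    print(f"{label:<8}{freq:>4}{rel:>8.2f}{pct:>8.1f}%")

# Hypothetical quantitative data with assumed classes 10-14, 15-19, 20-24.
audit_days = [12, 14, 19, 18, 15, 15, 18, 17, 20, 22]
cumulative = cumulative_frequencies(audit_days, upper_limits=[14, 19, 24])
print(cumulative)  # [2, 8, 10]
```

The relative frequencies always sum to 1 and the last cumulative frequency equals the number of observations, which gives a quick check on any hand-built table.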

CHAPTER 3: DESCRIPTIVE STATISTICS: NUMERICAL MEASURES

Box plot: A graphical summary of data based on a five-number summary.
Chebyshev’s theorem: A theorem that can be used to make statements about the proportion of data values that must be within a specified number of standard deviations of the mean.
Coefficient of variation: A measure of relative variability computed by dividing the standard deviation by the mean and multiplying by 100.
Correlation coefficient: A measure of linear association between two variables that takes on values between −1 and +1. Values near +1 indicate a strong positive linear relationship; values near −1 indicate a strong negative linear relationship; and values near zero indicate the lack of a linear relationship.
Covariance: A measure of linear association between two variables. Positive values indicate a positive relationship; negative values indicate a negative relationship.
Empirical rule: A rule that can be used to compute the percentage of data values that must be within one, two, and three standard deviations of the mean for data that exhibit a bell-shaped distribution.
Five-number summary: A technique that uses five numbers to summarize the data: smallest value, first quartile, median, third quartile, and largest value.
Geometric mean: A measure of location that is calculated by finding the nth root of the product of n values.
Interquartile range (IQR): A measure of variability, defined to be the difference between the third and first quartiles.
Mean: A measure of central location computed by summing the data values and dividing by the number of observations.
Median: A measure of central location provided by the value in the middle when the data are arranged in ascending order.
Mode: A measure of location, defined as the value that occurs with greatest frequency.
Outlier: An unusually small or unusually large data value.
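
Several of the numerical measures in this chapter can be computed with Python's standard `statistics` module. The sketch below uses made-up data. Quartile conventions differ between textbooks and software, so the `"exclusive"` method used here is an assumption and may not match every package.

```python
import statistics

# Hypothetical sample (made-up values).
data = [3, 5, 5, 6, 8, 9, 11, 13]

mean = statistics.mean(data)            # sum of values / number of observations
median = statistics.median(data)        # middle value of the sorted data
mode = statistics.mode(data)            # value with the greatest frequency
variance = statistics.variance(data)    # sample variance (divides by n - 1)
s = statistics.stdev(data)              # positive square root of the variance
cv = s / mean * 100                     # coefficient of variation, in percent

# Quartiles are the 25th, 50th, and 75th percentiles.
q1, q2, q3 = statistics.quantiles(data, n=4, method="exclusive")
five_number = (min(data), q1, q2, q3, max(data))
iqr = q3 - q1                           # interquartile range
data_range = max(data) - min(data)      # largest value minus smallest value

# z-score: number of standard deviations each value lies from the mean.
z_scores = [(x - mean) / s for x in data]

print(mean, median, mode)   # 7.5 7.0 5
print(five_number)          # (3, 5.0, 7.0, 10.5, 13)
print(iqr, data_range)      # 5.5 10
```

The z-scores of any data set sum to zero, because the deviations about the mean do.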
Percentile: A value such that at least p percent of the observations are less than or equal to this value and at least (100 − p) percent of the observations are greater than or equal to this value. The 50th percentile is the median.
Quartiles: The 25th, 50th, and 75th percentiles, referred to as the first quartile, the second quartile (median), and third quartile, respectively. The quartiles can be used to divide a data set into four parts, with each part containing approximately 25% of the data.
Range: A measure of variability, defined to be the largest value minus the smallest value.
Skewness: A measure of the shape of a data distribution. Data skewed to the left result in negative skewness; a symmetric data distribution results in zero skewness; and data skewed to the right result in positive skewness.
Standard deviation: A measure of variability computed by taking the positive square root of the variance.
Variance: A measure of variability based on the squared deviations of the data values about the mean.
Weighted mean: The mean obtained by assigning each observation a weight that reflects its importance.
z-score: A value computed by dividing the deviation about the mean $(x_i - \bar{x})$ by the standard deviation $s$. A z-score is referred to as a standardized value and denotes the number of standard deviations $x_i$ is from the mean.

CHAPTER 4: SAMPLING AND SAMPLING DISTRIBUTION

Central limit theorem: A theorem that enables one to use the normal probability distribution to approximate the sampling distribution of $\bar{x}$ whenever the sample size is large.
Cluster sampling: A probability sampling method in which the population is first divided into clusters and then a simple random sample of the clusters is taken.
Consistency: A property of a point estimator that is present whenever larger sample sizes tend to provide point estimates closer to the population parameter.
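
The central limit theorem and consistency entries above can be illustrated with a small simulation. This is a sketch under stated assumptions: the population (uniform on 0 to 100), the sample sizes, the number of repetitions, and the function names are all chosen for illustration only.

```python
import random
import statistics

random.seed(42)  # fixed seed so the sketch is repeatable


def sample_mean(n):
    """Mean of one random sample of size n from a uniform(0, 100) population."""
    return statistics.mean([random.uniform(0, 100) for _ in range(n)])


def simulate_sample_means(n, repetitions=2000):
    """Approximate the sampling distribution of the sample mean by simulation."""
    return [sample_mean(n) for _ in range(repetitions)]


# Standard deviation of a uniform(0, 100) variable: (b - a) / sqrt(12).
population_sd = 100 / 12 ** 0.5

results = {}
for n in (5, 30, 100):
    means = simulate_sample_means(n)
    results[n] = (statistics.mean(means), statistics.stdev(means))
    # Columns: n, mean of sample means, their spread, and sigma / sqrt(n).
    print(n, round(results[n][0], 2), round(results[n][1], 2),
          round(population_sd / n ** 0.5, 2))
```

The spread of the simulated sample means shrinks as n grows and stays close to the population standard deviation divided by the square root of n, even though the population itself is not bell-shaped.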
Convenience sampling: A nonprobability method of sampling whereby elements are selected for the sample on the basis of convenience.
Finite population correction factor: The term $\sqrt{(N - n)/(N - 1)}$ that is used in the standard error formulas whenever a finite population, rather than an infinite population, is being sampled.
Frame: A listing of the elements the sample will be selected from.
Judgment sampling: A nonprobability method of sampling whereby elements are selected for the sample based on the judgment of the person doing the study.
Parameter: A numerical characteristic of a population, such as a population mean $\mu$, a population standard deviation $\sigma$, a population proportion $p$, and so on.
Point estimate: The value of a point estimator used in a particular instance as an estimate of a population parameter.
Point estimator: The sample statistic that provides the point estimate of the population parameter.
Random sample: A random sample from an infinite population is a sample selected such that the following conditions are satisfied: (1) each element selected comes from the same population; (2) each element is selected independently.
Sampling distribution: A probability distribution consisting of all possible values of a sample statistic.
Sample statistic: A sample characteristic, such as a sample mean $\bar{x}$, a sample standard deviation $s$, a sample proportion $\bar{p}$, and so on. The value of the sample statistic is used to estimate the value of the corresponding population parameter.
Sampling without replacement: Once an element has been included in the sample, it is removed from the population and cannot be selected a second time.
Sampling with replacement: Once an element has been included in the sample, it is returned to the population. A previously selected element can be selected again and therefore may appear in the sample more than once.
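
The difference between sampling without and with replacement, and the finite population correction factor, can be sketched as follows. The frame of 20 element IDs, the sample size, and the helper name `finite_population_correction` are assumptions made for this example.

```python
import math
import random

random.seed(7)  # fixed seed so the sketch is repeatable

# Hypothetical frame: a listing of the 20 element IDs the sample is drawn from.
frame = list(range(1, 21))

# Sampling without replacement: a selected element cannot be selected again.
without_replacement = random.sample(frame, k=5)

# Sampling with replacement: a selected element is returned and may repeat.
with_replacement = random.choices(frame, k=5)


def finite_population_correction(population_size, sample_size):
    """sqrt((N - n) / (N - 1)), applied to the standard error for finite populations."""
    return math.sqrt((population_size - sample_size) / (population_size - 1))


print(without_replacement)
print(with_replacement)
print(round(finite_population_correction(len(frame), 5), 4))
```

The correction factor is always at most 1, so it shrinks the standard error. It approaches 1 as the population becomes large relative to the sample.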
Simple random sample: A simple random sample of size $n$ from a finite population of size $N$ is a sample selected such that each possible sample of size $n$ has the same probability of being selected.
Standard error: The standard deviation of a point estimator.
Stratified random sampling: A probability sampling method in which the population is first divided into strata and a simple random sample is then taken from each stratum.
Systematic sampling: A probability sampling method in which we randomly select one of the first k elements and then select every kth element thereafter.
Target population: The population for which statistical inferences such as point estimates are made. It is important for the target population to correspond as closely as possible to the sampled population.
Unbiased: A property of a point estimator that is present when the expected value of the point estimator is equal to the population parameter it estimates.

CHAPTER 5: INTERVAL ESTIMATION (3/10)

Confidence coefficient: The confidence level expressed as a decimal value. For example, .95 is the confidence coefficient for a 95% confidence level.
Confidence interval: Another name for an interval estimate.
Confidence level: The confidence associated with an interval estimate. For example, if an interval estimation procedure provides intervals such that 95% of the intervals formed using the procedure will include the population parameter, the interval estimate is said to be constructed at the 95% confidence level.
Degrees of freedom: A parameter of the t distribution. When the t distribution is used in the computation of an interval estimate of a population mean, the appropriate t distribution has n − 1 degrees of freedom, where n is the size of the sample.
Interval estimate: An estimate of a population parameter that provides an interval believed to contain the value of the parameter. For the interval estimates in this chapter, it has the form: point estimate ± margin of error.
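
The form point estimate ± margin of error can be sketched for a population mean when the population standard deviation is treated as known. The sample values, the assumed sigma of 5, and the function name `z_interval` are hypothetical. The margin of error used here, z times sigma divided by the square root of n, is the standard result for this case rather than something stated in this glossary.

```python
from statistics import NormalDist, mean


def z_interval(sample, sigma, confidence=0.95):
    """Interval estimate of a population mean, assuming sigma is known."""
    n = len(sample)
    point_estimate = mean(sample)
    alpha = 1 - confidence
    z = NormalDist().inv_cdf(1 - alpha / 2)   # about 1.96 for 95% confidence
    margin_of_error = z * sigma / n ** 0.5    # z times the standard error
    return (point_estimate - margin_of_error,
            point_estimate + margin_of_error,
            margin_of_error)


# Hypothetical sample of 10 scores; sigma = 5 is an assumed known value.
scores = [82, 78, 90, 85, 75, 88, 92, 80, 86, 84]
lower, upper, moe = z_interval(scores, sigma=5, confidence=0.95)
print(round(lower, 2), round(upper, 2), round(moe, 2))
```

When sigma is unknown, the same structure applies with the sample standard deviation in place of sigma and a t value with n − 1 degrees of freedom in place of z. The standard library does not provide t quantiles, so that value would have to come from a table or a statistics package.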
Margin of error: The ± value added to and subtracted from a point estimate in order to develop an interval estimate of a population parameter.
t distribution: A family of probability distributions that can be used to develop an interval estimate of a population mean whenever the population standard deviation $\sigma$ is unknown and is estimated by the sample standard deviation $s$.
Sigma known: The case when historical data or other information provides a good value for the population standard deviation prior to taking a sample. The interval estimation procedure uses this known value of $\sigma$ in computing the margin of error.
Sigma unknown: The more common case when no good basis exists for estimating the population standard deviation prior to taking the sample. The interval estimation procedure uses the sample standard deviation $s$ in computing the margin of error.

CHAPTER 6: HYPOTHESIS TEST (3/10)

Alternative hypothesis: The hypothesis concluded to be true if the null hypothesis is rejected.
Critical value: A value that is compared with the test statistic (the actual value computed from the sample) to determine whether $H_0$ should be rejected.
Level of significance: The probability of making a Type I error when the null hypothesis is true as an equality. In short, the probability of a Type I (type 1) error.
Null hypothesis: The hypothesis tentatively assumed true in the hypothesis testing procedure.
One-tailed test: A hypothesis test in which rejection of the null hypothesis occurs for values of the test statistic in one tail of its sampling distribution.
p-value: A probability that provides a measure of the evidence against the null hypothesis given by the sample. Smaller p-values indicate more evidence against $H_0$. For a lower tail test, the p-value is the probability of obtaining a value for the test statistic as small as or smaller than that provided by the sample.
For an upper tail test, the p-value is the probability of obtaining a value for the test statistic as large as or larger than that provided by the sample. For a two-tailed test, the p-value is the probability of obtaining a value for the test statistic at least as unlikely as that provided by the sample.
Power: The probability of correctly rejecting $H_0$ when it is false.
Test statistic: A statistic whose value helps determine whether a null hypothesis should be rejected.
Two-tailed test: A hypothesis test in which rejection of the null hypothesis occurs for values of the test statistic in either tail of its sampling distribution.
Type I error: The error of rejecting $H_0$ when it is true.
Type II error: The error of accepting $H_0$ when it is false.

CHAPTER 7: REGRESSION

ANOVA table: The analysis of variance table used to summarize the computations associated with the F test for significance.
Coefficient of determination: A measure of the goodness of fit of the estimated regression equation. It can be interpreted as the proportion of the variability in the dependent variable $y$ that is explained by the estimated regression equation.
Dependent variable: The variable that is being predicted or explained. It is denoted by $y$.
Estimated regression equation: The estimate of the regression equation developed from sample data by using the least squares method.
Independent variable: The variable that is doing the predicting or explaining. It is denoted by $x$.
Least squares method: A procedure used to develop the estimated regression equation. The objective is to minimize $\sum (y_i - \hat{y}_i)^2$.
Mean square error: The unbiased estimate of the variance of the error term $\sigma^2$. It is denoted by MSE or $s^2$.
Normal probability plot: A graph of the standardized residuals plotted against values of the normal scores. This plot helps determine whether the assumption that the error term has a normal probability distribution appears to be valid.
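
The least squares method, coefficient of determination, and mean square error entries above can be sketched for the case of one independent variable. The data and function names are hypothetical. The closed-form slope and intercept are the standard least squares solution for a straight line, and dividing the sum of squared residuals by n − 2 is the usual convention for that case, not something stated in this glossary.

```python
def fit_least_squares(x, y):
    """Return (b0, b1) minimizing the sum of squared residuals for y = b0 + b1 * x."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    b1 = sxy / sxx
    b0 = y_bar - b1 * x_bar
    return b0, b1


def goodness_of_fit(x, y, b0, b1):
    """Return (SSE, coefficient of determination, mean square error)."""
    n = len(x)
    y_bar = sum(y) / n
    residuals = [yi - (b0 + b1 * xi) for xi, yi in zip(x, y)]
    sse = sum(e ** 2 for e in residuals)        # the quantity least squares minimizes
    sst = sum((yi - y_bar) ** 2 for yi in y)    # total variability in y
    r_squared = 1 - sse / sst                   # proportion of variability explained
    mse = sse / (n - 2)                         # estimate of the error variance
    return sse, r_squared, mse


# Hypothetical data.
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]
b0, b1 = fit_least_squares(x, y)
sse, r_squared, mse = goodness_of_fit(x, y, b0, b1)
print(round(b0, 2), round(b1, 2))                         # 2.2 0.6
print(round(sse, 2), round(r_squared, 2), round(mse, 2))  # 2.4 0.6 0.8
```

The square root of the mean square error computed here is the standard error of the estimate.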
Outlier: A data point or observation that does not fit the trend shown by the remaining data.
Prediction interval: The interval estimate of an individual value of $y$ for a given value of $x$.
Regression equation: The equation that describes how the mean or expected value of the dependent variable is related to the independent variable.
Regression model: The equation that describes how $y$ is related to $x$ and an error term.
Residual analysis: The analysis of the residuals used to determine whether the assumptions made about the regression model appear to be valid. Residual analysis is also used to identify outliers and influential observations.
Residual plot: Graphical representation of the residuals that can be used to determine whether the assumptions made about the regression model appear to be valid.
Scatter diagram: A graph of bivariate data in which the independent variable is on the horizontal axis and the dependent variable is on the vertical axis.
Simple linear regression: Regression analysis involving one independent variable and one dependent variable in which the relationship between the variables is approximated by a straight line.
Standard error of the estimate: The square root of the mean square error, denoted by $s$. It is the estimate of $\sigma$, the standard deviation of the error term $\epsilon$.
Standardized residual: The value obtained by dividing a residual by its standard deviation.
Adjusted multiple coefficient of determination: A measure of the goodness of fit of the estimated multiple regression equation that adjusts for the number of independent variables in the model and thus avoids overestimating the impact of adding more independent variables.
Categorical independent variable: An independent variable with categorical data.
Dummy variable: A variable used to model the effect of categorical independent variables.
A dummy variable may take only the value zero or one.
Estimated logistic regression equation: The estimate of the logistic regression equation based on sample data.
Estimated logit: An estimate of the logit based on sample data.
Estimated multiple regression equation: The estimate of the multiple regression equation based on sample data and the least squares method.
Least squares method: The method used to develop the estimated regression equation. It minimizes the sum of squared residuals (the deviations between the observed values of the dependent variable, $y_i$, and the predicted values of the dependent variable, $\hat{y}_i$).
Leverage: A measure of how far the values of the independent variables are from their mean values.
Multicollinearity: The term used to describe the correlation among the independent variables.
Multiple coefficient of determination: A measure of the goodness of fit of the estimated multiple regression equation. It can be interpreted as the proportion of the variability in the dependent variable that is explained by the estimated regression equation.
Multiple regression analysis: Regression analysis involving two or more independent variables.
Multiple regression equation: The mathematical equation relating the expected value or mean value of the dependent variable to the values of the independent variables.
Multiple regression model: The mathematical equation that describes how the dependent variable $y$ is related to the independent variables $x_1, x_2, \ldots, x_p$ and an error term $\epsilon$.

CHAPTER 8: TIME SERIES ANALYSIS AND FORECASTING

Additive decomposition model: In an additive decomposition model the actual time series value at time period t is obtained by adding the values of a trend component, a seasonal component, and an irregular component.
Cyclical pattern: A cyclical pattern exists if the time series plot shows an alternating sequence of points below and above the trend line lasting more than one year.
Deseasonalized time series: A time series from which the effect of season has been removed by dividing each original time series observation by the corresponding seasonal index.
Exponential smoothing: A forecasting method that uses a weighted average of past time series values as the forecast; it is a special case of the weighted moving averages method in which we select only one weight, the weight for the most recent observation.
Forecast error: The difference between the actual time series value and the forecast.
Horizontal pattern: A horizontal pattern exists when the data fluctuate around a constant mean.
Mean absolute error (MAE): The average of the absolute values of the forecast errors.
Mean absolute percentage error (MAPE): The average of the absolute values of the percentage forecast errors.
Mean squared error (MSE): The average of the squared forecast errors.
Moving averages: A forecasting method that uses the average of the most recent k data values in the time series as the forecast for the next period.
Multiplicative decomposition model: In a multiplicative decomposition model the actual time series value at time period t is obtained by multiplying the values of a trend component, a seasonal component, and an irregular component.
Seasonal pattern: A seasonal pattern exists if the time series plot exhibits a repeating pattern over successive periods. The successive periods are often one-year intervals, which is where the name seasonal pattern comes from.
Smoothing constant: A parameter of the exponential smoothing model that provides the weight given to the most recent time series value in the calculation of the forecast value.
Stationary time series: A time series whose statistical properties are independent of time. For a stationary time series the process generating the data has a constant mean and the variability of the time series is constant over time.
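
The moving averages, exponential smoothing, and forecast accuracy entries above can be sketched together. The series, the choice of k = 3 and a smoothing constant of 0.5, and the function names are hypothetical. Starting exponential smoothing with the first forecast equal to the first actual value is a common convention and is an assumption here.

```python
def moving_average_forecasts(series, k):
    """Forecast each period as the mean of the k values before it (None if unavailable)."""
    return [None if t < k else sum(series[t - k:t]) / k for t in range(len(series))]


def exponential_smoothing_forecasts(series, alpha):
    """Forecast = alpha * previous actual + (1 - alpha) * previous forecast."""
    forecasts = [None, series[0]]  # assumption: first forecast equals first actual value
    for t in range(2, len(series)):
        forecasts.append(alpha * series[t - 1] + (1 - alpha) * forecasts[t - 1])
    return forecasts[:len(series)]


def forecast_accuracy(series, forecasts):
    """Return (MAE, MSE, MAPE) over the periods that have a forecast."""
    pairs = [(a, f) for a, f in zip(series, forecasts) if f is not None]
    errors = [a - f for a, f in pairs]                 # forecast error = actual - forecast
    mae = sum(abs(e) for e in errors) / len(errors)
    mse = sum(e ** 2 for e in errors) / len(errors)
    mape = sum(abs(100 * (a - f) / a) for a, f in pairs) / len(pairs)
    return mae, mse, mape


# Hypothetical weekly sales.
sales = [10, 12, 11, 13, 12, 14]
ma = moving_average_forecasts(sales, k=3)
es = exponential_smoothing_forecasts(sales, alpha=0.5)
print(ma)   # [None, None, None, 11.0, 12.0, 12.0]
print(es)   # [None, 10, 11.0, 11.0, 12.0, 12.0]
print(forecast_accuracy(sales, ma))
print(forecast_accuracy(sales, es))
```

Comparing MAE, MSE, or MAPE across methods is only fair over the same set of periods. Here the moving average has forecasts for three periods and exponential smoothing for five.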
Time series: A sequence of observations on a variable measured at successive points in time or over successive periods of time.
Time series decomposition: A time series method that is used to separate or decompose a time series into seasonal and trend components.
Time series plot: A graphical presentation of the relationship between time and the time series variable. Time is shown on the horizontal axis and the time series values are shown on the vertical axis.
Trend pattern: A trend pattern exists if the time series plot shows gradual shifts or movements to relatively higher or lower values over a longer period of time.
Weighted moving averages: A forecasting method that involves selecting a different weight for the most recent k data values in the time series and then computing a weighted average of the values. The sum of the weights must equal one.
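
The weighted moving averages entry above can be sketched as a one-step forecast. The series, the weights, and the function name are hypothetical. Listing the weights from oldest to most recent is a choice made for this example.

```python
def weighted_moving_average(series, weights):
    """Forecast the next period from the most recent len(weights) values.

    Weights are listed from oldest to most recent and must sum to one.
    """
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must sum to one")
    k = len(weights)
    if len(series) < k:
        raise ValueError("series is shorter than the number of weights")
    recent = series[-k:]
    return sum(w * v for w, v in zip(weights, recent))


# Hypothetical weekly sales; the most recent value gets the largest weight.
sales = [10, 12, 11, 13, 12, 14]
forecast = weighted_moving_average(sales, weights=[1 / 6, 2 / 6, 3 / 6])
print(round(forecast, 2))   # 13.17
```

With equal weights of 1/k this reduces to the ordinary moving average forecast.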