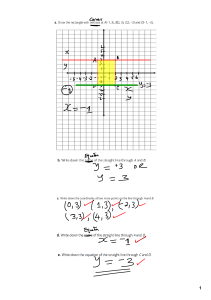

DETECTION AND DETERMINATION COORDINATES OF MOVING OBJECTS FROM A VIDEO

Mamaraufov Odil Abdikhamitovich, scientific researcher, the Department of Software of IT, Tashkent University of IT, E-mail: odil.mamaraufov@gmail.com

Abstract: This paper is devoted to the creation of an efficient algorithm for motion detection and object isolation based on background subtraction using a dynamic threshold and morphological processing. The background subtraction algorithm can also be used to detect multiple objects. To distinguish moving objects in video images of people, moving regions are found by comparing each image with a reference image. The main components of the paper are an analysis of image sectors containing a few hundred dynamic objects, algorithms for their separation and identification, and software development. The algorithms developed can also be used for other applications (real-time classification of objects, etc.).

Keywords: Video surveillance, motion detection, background subtraction, background.

Introduction. Relevant tasks today are the collection, analysis and processing of information on road safety, safety control, traffic on city streets and highways, and road accidents and their study. Also relevant are the problems of determining traffic speed on motorways, registering motor vehicles at intersections and checkpoints, monitoring car traffic, and dealing with frequent road accidents. It is therefore important to create and deploy video surveillance systems on roads and at intersections. A video surveillance system must resolve the contradiction between the quality of the generated image and the capacity of the existing communication channels and data storage hardware. Despite their high capacity, modern hard disks are not sufficient for storing large amounts of video for as long as the specifications require.
Traditionally, this contradiction is resolved by video compression, with a noticeable decrease in quality and loss of information. To improve the efficiency of video surveillance systems, it is necessary to develop video compression methods that preserve information about the object of interest, both for long-term storage and for real-time transmission of high-quality images over communication channels with limited bandwidth [3]. Processing video of a moving object involves two phases: adaptation to the current camera angle and tracking of the objects of interest. A fixed camera shooting a scene with a slowly changing background and moving objects is of great practical use in surveillance systems (tracking vehicles and people), security systems, etc.

Existing Work. There are currently many methods of motion detection, or, in other words, background subtraction methods. In addition to background subtraction, methods for preprocessing and post-processing of data have been implemented: the Gaussian filter, binarization and the median filter. The literature contains comparative analyses of video motion detection methods based on execution time, consumed resources and metrics such as precision, recall and F-measure. No background modeling algorithm can cope with all possible problems. A good background subtraction algorithm should be robust to lighting changes and should ignore non-stationary background objects such as swinging leaves, grass, rain, snow and the shadows of moving objects. The background model must also respond quickly to changes such as vehicles starting and stopping. In the practice of disclosing and investigating crimes, materials obtained by television monitoring systems, which record certain elements of how a crime is committed and of the offenders, are quite often used.
Section 11. Technical science

Analysis of video images can yield forensic information that helps to establish the spatial characteristics of the recorded objects and their group identity, and to carry out identification [4]. Existing habitoscopic techniques for forensic identification of a person by appearance [5] recorded in video images are based on portrait identification from static images [6]. In some cases these methods are powerless, for example, when the offender's face is not visible, is captured from an unfavorable angle, is hidden by a mask, or is disguised. Video recordings contain a considerable amount and variety of information related to the functional elements of a person's appearance (gait, facial expressions, articulation, gestures, etc.) [4]. These elements can be captured and studied only in dynamics, since static illustrations of a person's appearance do not convey these properties. Dynamic changes in a person's appearance are very informative and are characterized by individuality, dynamic stability and selective variability, which allows them to be used to solve forensic tasks. Recently, work has been underway in our country and abroad to create new biometric technologies and security systems that measure various human parameters and characteristics [5]. These include individual anatomical characteristics other than the face and fingerprints (the eye fundus, the iris, the shape of the palm, etc.), as well as physiological and behavioral properties (voice, signature, gait, etc.). Such systems are created to restrict access to information and to prevent intruders from entering protected areas and premises, but they can also be used in forensic science.
The modern capabilities of biometric technologies already meet the necessary requirements for identification reliability, ease of use and low equipment cost.

Methods of linear filtering images. When estimating the true signal at a point of the frame, filtering methods take into account a set (neighborhood) of neighboring pixels, relying on a certain similarity of the signal at these points. The concept of a neighborhood is fairly conventional: it may consist only of the closest neighbors in the frame, or it may contain many fairly distant points of the frame. In the latter case, distant and near points have quite different degrees of influence (weights) on the filter's decision at a given point of the frame. Thus, filtering is based on the rational use of data both from the working point and from its neighborhood. Filtering problems are solved using probabilistic models of the image and the noise together with statistical optimality criteria. This is due to the random nature of the interference and the desire to minimize the average difference between the processing result and the ideal signal. The variety of filtering methods and algorithms stems from the wide variety of mathematical models of signals and noise, as well as from the various optimality criteria. There is a whole class of methods, based on linear filtering of the original image, that attenuate noise and can also perform other operations (blurring, edge detection). Linear filtering applies a spatially invariant transformation to the pixel raster that emphasizes the necessary elements of the image and suppresses the influence of less important ones.
The filter is based on the linear convolution operation, which for the discrete case is written as in formula (1):

$$q[m,n] = f[m,n] \ast g[m,n], \qquad (1)$$

where q is the resulting raster obtained after convolution, m, n are the x and y coordinates at which the convolution is computed, and f, g are the input rasters. The raster f is the convolution kernel, a matrix of small dimension (usually no more than 5 × 5 elements), and g is the original image to be filtered. The convolution operation is written out in more detail in formula (2):

$$q[m,n] = \sum_{j=-u/2}^{u/2} \sum_{k=-v/2}^{v/2} f[j,k] \cdot g[m-j,\, n-k], \qquad (2)$$

where j, k are the horizontal and vertical counters in the calculation of the convolution, u, v are the linear sizes of the convolution kernel (width, height), and the remaining symbols are as in formula (1).

One special case of applying a linear filter to video images is smoothing by convolution. Smoothing evens out brightness jumps in the image and removes unwanted noise. A 3 × 3 convolution kernel is most often used for smoothing, since in most cases it achieves the desired effect at a relatively high but still acceptable computational cost. Kernels of dimension 5 × 5, 7 × 7, etc. are used very rarely because of their much higher cost. For example, a 5 × 5 kernel requires (5/3) · (5/3) = 25/9 ≈ 2.78 times more processing operations per image pixel than a 3 × 3 kernel. The kernel coefficients are chosen so that the transformation does not shift the original brightness of the image (for a smoothing kernel, the coefficients sum to 1). Even a convolution kernel of minimal dimension (3 × 3) noticeably distorts the image, because the color of each pixel affects its neighbors, and the larger the kernel, the stronger this effect.
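As an illustration of formula (2), here is a minimal pure-Python sketch of discrete 2-D convolution with a 3 × 3 averaging (smoothing) kernel. The function name `convolve2d`, the list-of-rows image representation and the clamp-to-edge border handling are assumptions made for the example; the text does not say how border pixels are treated. Because the averaging kernel's coefficients sum to 1, a region of constant brightness passes through unchanged.

```python
# 3 x 3 averaging kernel: coefficients sum to 1, so mean brightness is preserved.
BOX_3X3 = [[1 / 9] * 3 for _ in range(3)]


def convolve2d(image, kernel):
    """Discrete 2-D convolution following formula (2):

        q[m, n] = sum_j sum_k f[j, k] * g[m - j, n - k]

    image (g) and kernel (f) are lists of rows; the kernel has odd sizes.
    Indices that fall outside the image are clamped to the nearest border
    pixel (an assumption, not specified in the text).
    """
    rows, cols = len(image), len(image[0])
    hu, hv = len(kernel) // 2, len(kernel[0]) // 2   # u/2 and v/2
    out = [[0.0] * cols for _ in range(rows)]
    for m in range(rows):
        for n in range(cols):
            acc = 0.0
            for j in range(-hu, hu + 1):
                for k in range(-hv, hv + 1):
                    # Clamp m - j and n - k to the valid image range.
                    mm = min(max(m - j, 0), rows - 1)
                    nn = min(max(n - k, 0), cols - 1)
                    acc += kernel[j + hu][k + hv] * image[mm][nn]
            out[m][n] = acc
    return out
```

Each output pixel costs u · v multiply-adds (9 for a 3 × 3 kernel, 25 for 5 × 5), which is the source of the 25/9 ratio quoted above.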
In addition, the smaller the central coefficient of the convolution kernel (the one corresponding to the pixel being processed), the greater the distortion. This distortion shows up as smearing of small details, blurred edges and smoothed contrast transitions.

The second use of linear filtering in video processing that interests us is convolution with an edge-enhancing kernel (Edge Detect). Such a kernel highlights object contours and suppresses the other elements of the image. The main convolution kernels for this purpose are the Sobel edge detector, the Gauss edge detector, the Prewitt edge detector and the second-derivative filter. Their matrices are given in (4).

The median filter, unlike the smoothing filter, implements a non-linear noise reduction process. For impulse noise, for example, a median filter with a 3 × 3 window completely suppresses single impulses on a uniform background, as well as groups of two, three or four impulses. In general, to suppress impulse noise the window size should be at least twice the size of the interference group. An important advantage of the median filter is that it blurs object contours significantly less; its major drawback is the additional computational cost of calculating the median. Although the median (the middle element of the array) can in principle be found in O(n) time, additional tricks are needed to actually achieve linear complexity in practice, and in the worst case the complexity still reaches O(n²), particularly when the array is small (here it contains only 9 elements).

Brightness and Contrast Adjustments. We have considered several ways to remove noise from the original image.
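The 3 × 3 median filter described above can be sketched in a few lines of Python. The function name `median_filter3x3` and the choice to copy border pixels unchanged are assumptions for the example. The window is simply sorted, which is adequate for 9 elements; the sketch does not implement the linear-time selection tricks mentioned above.

```python
def median_filter3x3(image):
    """3 x 3 median filter for a grayscale image stored as a list of rows.

    Border pixels are copied unchanged (an assumption made for brevity).
    """
    rows, cols = len(image), len(image[0])
    out = [row[:] for row in image]
    for m in range(1, rows - 1):
        for n in range(1, cols - 1):
            # Collect the 9 values of the window centred on (m, n).
            window = [image[m + j][n + k] for j in (-1, 0, 1) for k in (-1, 0, 1)]
            window.sort()
            out[m][n] = window[4]  # middle element of 9 sorted values
    return out
```

A 2 × 2 group of impulses puts at most 4 outliers into any 3 × 3 window, so the 5th of the 9 sorted values is always a background value; this is why groups of up to four impulses are removed, while a straight step edge keeps its position.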
However, the preprocessing stage of a video usually does not end there. Noise removal minimizes the number of false positives that interference during image reception and transmission causes in a difference motion detector, but images of the same scene can still vary significantly. The reason is a change in the illumination level between the recording of different frames. The lighting level may change when artificial lighting is turned on or off, or when weather conditions change if the shooting is done outdoors. At such moments the element-wise difference between two adjacent frames reaches very high values, which causes a false alarm: the detector reports motion over the whole area where the lighting changed. To avoid such false alarms, the brightness level of the video must be adjusted at the preprocessing stage [4, 8]. The brightness of the pixels of the current frame is expressed through the pixels of the previous frame:

$$I_2(x,y) = a(x,y) \cdot I_1(x,y) + b(x,y), \qquad (3)$$

where $I_1(x,y)$ is the brightness of the pixel in the previous frame, $I_2(x,y)$ is the brightness of the pixel in the current frame, and $a(x,y)$, $b(x,y)$ are the coefficients of the linear transform. In general, a and b are arrays containing a different value for each pixel. In practice, this requirement is usually simplified and replaced by window processing: the coefficients a and b are calculated within a window of several tens of pixels and then used to adjust the pixels of the current frame that fall inside the window. The window is then moved to a new location, and so on until the entire frame has been traversed. It only remains to calculate the coefficients a and b.
Two methods are proposed in [8]. The first multiplies the pixel brightness values by a factor that brings the two frames to the same average brightness:

$$a(x,y) = \bar{I}_2 / \bar{I}_1, \qquad (4)$$

where $\bar{I}_1$ and $\bar{I}_2$ are the average brightness values in the previous and current frames, respectively. No offset is used in this case:

$$b(x,y) = 0. \qquad (5)$$

The second method matches neighboring frames in both average brightness and variance:

$$a(x,y) = \sigma_2 / \sigma_1, \qquad b(x,y) = \bar{I}_2 - \bar{I}_1 \, \sigma_2 / \sigma_1, \qquad (6)$$

where $\sigma_1$ and $\sigma_2$ are the standard deviations of brightness in the previous and current frames, respectively, and the remaining symbols are the same as in (4) and (5). Note that with sliding-window processing, the averages and standard deviations are calculated over the pixels inside the window.

A skewed histogram is corrected by linear contrast stretching, which converts the intensity of each pixel with respect to the minimum and maximum values in the histogram of the current frame:

$$u'[m,n] = (u[m,n] - c) \cdot \frac{b - a}{d - c} + a, \qquad (7)$$

where u[m, n] is the pixel of the original image with coordinates (m, n), u'[m, n] is the pixel of the output image with coordinates (m, n), a is the lowest possible intensity value, b is the highest possible intensity value, c is the minimum intensity value among the pixels of the frame, and d is the maximum intensity value among the pixels of the frame.
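A minimal whole-frame sketch of the two coefficient choices, (4)–(5) and (6), applied through the linear model (3), might look as follows. For brevity it computes the statistics over the entire frame rather than over a sliding window; a windowed version would call the same functions on each window. The function names are hypothetical, and the fallbacks for a zero mean or a zero standard deviation are assumptions not covered by the text.

```python
from statistics import fmean, pstdev


def brightness_coefficients(prev, cur, match_variance=True):
    """Return (a, b) for the linear model I2 = a * I1 + b, formula (3).

    prev, cur: frames (I1 and I2) as lists of rows of brightness values.
    match_variance=False -> formulas (4)-(5): a = mean2 / mean1, b = 0.
    match_variance=True  -> formula (6): a = sigma2 / sigma1,
                            b = mean2 - mean1 * sigma2 / sigma1.
    """
    p = [v for row in prev for v in row]
    c = [v for row in cur for v in row]
    mean1, mean2 = fmean(p), fmean(c)
    if not match_variance:
        # Assumed fallback: an all-black previous frame cannot be rescaled.
        return (mean2 / mean1 if mean1 else 1.0), 0.0
    sigma1, sigma2 = pstdev(p), pstdev(c)
    if sigma1 == 0:
        # Assumed fallback: a flat previous frame is only shifted in brightness.
        return 1.0, mean2 - mean1
    a = sigma2 / sigma1
    return a, mean2 - mean1 * a


def apply_coefficients(frame, a, b):
    """Apply I2 = a * I1 + b to every pixel of a frame."""
    return [[a * v + b for v in row] for row in frame]
```

With (6), the transformed previous frame takes on the mean and standard deviation of the current frame, so a subsequent frame difference is less sensitive to a global change in lighting.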
In a more robust variant, the minimum and maximum are replaced by lower and upper percentiles of the intensity distribution. All intensity values between the percentiles are then scaled, and the remaining values take the extreme values a or b:

$$u'[m,n] = \begin{cases} a, & u[m,n] \le p_{\mathrm{low}\%},\\ u_p[m,n], & p_{\mathrm{low}\%} < u[m,n] < p_{\mathrm{high}\%},\\ b, & u[m,n] \ge p_{\mathrm{high}\%}, \end{cases} \qquad u_p[m,n] = (u[m,n] - p_{\mathrm{low}\%}) \cdot \frac{b - a}{p_{\mathrm{high}\%} - p_{\mathrm{low}\%}} + a, \qquad (8)$$

where $p_{\mathrm{low}\%}$ is the lower percentile (here 1%), $p_{\mathrm{high}\%}$ is the upper percentile (here 99%), and the remaining symbols are as in formula (7). Instead of cutting off the top and bottom 1% of values, thresholds of 3%, 5%, etc. can be used. With this adjustment, noise in the tails of the distribution has little effect on the minimum and maximum used to scale the intensities, so the method is more robust to noise.

In addition to increasing contrast, when preparing video images for the difference motion detector it is important to bring them to a common form. This means transforming the original image so that its intensity histogram becomes uniform, i.e., all intensity values are equally probable [6]. This effect is achieved by mapping each intensity value through the cumulative distribution function of pixel intensities, scaled to the available range of intensity levels:

$$k = u[m,n], \qquad u'[m,n] = (b - a) \cdot n_k / N^2, \qquad (9)$$

where u[m, n] is the pixel of the original image with coordinates (m, n), u'[m, n] is the pixel of the output image with coordinates (m, n), a is the lowest possible intensity value, b is the highest possible intensity value, $n_k$ is the number of pixels in the original image with intensity less than or equal to k, and $N^2$ is the total number of pixels in the image.
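The percentile-based linear contrast (8) and the histogram equalization (9) can be sketched as follows for a grayscale frame stored as a list of rows. The nearest-rank percentile definition, the function names and the default range a = 0, b = 255 are assumptions for the example. Passing p_low = 0 and p_high = 100 makes `contrast_stretch` use the frame minimum and maximum, which reduces (8) to the plain linear contrast (7). Following (9) as written, `equalize` has no "+ a" term, so the output starts at 0 only when a = 0.

```python
import math
from collections import Counter


def percentile(values, p):
    """Nearest-rank percentile (0 <= p <= 100) of a non-empty sequence."""
    s = sorted(values)
    rank = max(1, math.ceil(p * len(s) / 100))
    return s[rank - 1]


def contrast_stretch(image, a=0, b=255, p_low=1, p_high=99):
    """Linear contrast with percentile clipping, formula (8)."""
    flat = [v for row in image for v in row]
    lo, hi = percentile(flat, p_low), percentile(flat, p_high)
    if hi == lo:
        # Nothing to stretch between identical percentiles.
        return [row[:] for row in image]

    def stretch(v):
        if v <= lo:
            return a
        if v >= hi:
            return b
        return (v - lo) * (b - a) / (hi - lo) + a

    return [[stretch(v) for v in row] for row in image]


def equalize(image, a=0, b=255):
    """Histogram equalization, formula (9): u' = (b - a) * n_k / total pixels."""
    flat = [v for row in image for v in row]
    total = len(flat)                  # N^2 in formula (9)
    counts = Counter(flat)
    cumulative, n_k = 0, {}
    for level in sorted(counts):
        cumulative += counts[level]
        n_k[level] = cumulative        # number of pixels with intensity <= level
    return [[round((b - a) * n_k[v] / total) for v in row] for row in image]
```

With the default 1% and 99% percentiles, a handful of extreme noise pixels falls outside [p_low, p_high] and is simply clipped to a or b instead of dictating the scale for the whole frame.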
After this processing, all frames of the video stream will be similar in illumination level (and the distribution of pixels over intensity values will be close to uniform), which prevents false alarms of the difference motion detector.

The method of the frame difference. Calculating the frame difference is a very common method of primary motion detection, after which, generally speaking, one can say whether there is motion in the stream of frames. Until recently, many motion detectors functioned exactly on this principle [4]. However, this approach gives a fairly rough estimate and inevitably produces false detector responses caused by noise in the recording equipment, changing lighting conditions, slight camera shake and so on. Therefore, the frames need to be preprocessed before the difference between them is calculated. The algorithm for computing the difference of two frames of a color video in RGB format is as follows:

1) The algorithm receives as input two video frames, which are byte sequences in RGB format.

2) The inter-frame differences are calculated for each pixel:

$$R_{d,i} = R_{1,i} - R_{2,i}, \qquad G_{d,i} = G_{1,i} - G_{2,i}, \qquad B_{d,i} = B_{1,i} - B_{2,i}, \qquad (10)$$

where $R_{d,i}$, $G_{d,i}$, $B_{d,i}$ are the red, green and blue components of the i-th pixel of the resulting raster, and $R_{1,i}$, $G_{1,i}$, $B_{1,i}$, $R_{2,i}$, $G_{2,i}$, $B_{2,i}$ are the red, green and blue components of the i-th pixel in the first and second frames.

3) For each pixel, the average of the three color components is computed:

$$z_i = (R_{d,i} + G_{d,i} + B_{d,i}) / 3. \qquad (11)$$

4) The average value is compared with a predetermined threshold, and the comparison produces a binary mask:

$$m_i = \begin{cases} 0, & z_i < T,\\ 1, & z_i \ge T, \end{cases} \qquad (12)$$

where $m_i$ is the i-th element of the mask and T is the comparison threshold, sometimes referred to simply as the threshold or the sensitivity.
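Steps 1)–4) can be sketched in Python as follows for two frames given as RGB byte sequences (three bytes per pixel). The function name and byte layout are assumptions for the example. One deliberate deviation: the sketch takes absolute values of the component differences, because with the signed differences of (10) a pixel that becomes darker yields a negative $z_i$ and could never reach a positive threshold T. The absolute value is an assumption, not something stated in the text.

```python
def frame_difference_mask(frame1, frame2, threshold):
    """Binary motion mask from two RGB frames, following formulas (10)-(12).

    frame1, frame2: bytes-like objects with 3 bytes (R, G, B) per pixel.
    Returns a list with one 0/1 element per pixel.
    """
    if len(frame1) != len(frame2) or len(frame1) % 3 != 0:
        raise ValueError("frames must be RGB byte sequences of equal length")
    mask = []
    for i in range(0, len(frame1), 3):
        # (10) in absolute value (assumption): per-component differences.
        rd = abs(frame1[i] - frame2[i])
        gd = abs(frame1[i + 1] - frame2[i + 1])
        bd = abs(frame1[i + 2] - frame2[i + 2])
        z = (rd + gd + bd) / 3                  # (11): mean of the three components
        mask.append(1 if z >= threshold else 0)  # (12): compare with T
    return mask
```

The loop visits each pixel exactly once, which is the single-pass O(n) behavior discussed below.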
Thus, the output of the algorithm is a binary mask, each element of which corresponds to the three color components of the corresponding pixel of the two source frames. Ones appear in the mask in areas where motion is possibly present, but at this stage some mask elements may be false alarms erroneously set to 1. The two input frames need not be consecutive frames of the stream: frames separated by a longer interval, for example frames 1 and 3, can be used as the two input frames. The greater this interval, the higher the detector's sensitivity to slowly moving objects, which shift only slightly from one frame to the next and could otherwise be discarded as image noise. The advantages of this method are its simplicity and its low demands on computing resources. The method was widely used in the past because developers lacked sufficient processing power. It is still widely used, especially in multichannel security systems where one computer must process the signals from multiple cameras. After all, the algorithm has complexity O(n) and runs in a single pass, which is very important for large rasters such as 640 × 480 or 768 × 576 pixels, the resolutions at which modern video cameras often work.

Results. Besides processing data in real time and extracting moving objects, the implemented algorithm has one very important feature: the program built on it is a full DirectShow transform filter. This means that, first, it is compatible with other DirectShow filters and can be included in a filter graph, and second, it can easily be used by any application that needs to detect motion in video data. To this end, application developers need only know the unique class identifier and the interfaces used by this motion detection filter.
Having obtained the interface, the program can communicate with the filter connected to the graph, setting its adjustable parameters and thus configuring it. The scheme of the algorithm, detailed to the level of processing procedures and their input/output parameters, is shown in Figure 1.

Figure 1. Stages of the modules

The algorithm consists of the following steps:

- Step 1. Saved K‑2 (frame K‑2)
- Step 2. Saved K‑1 (frame K‑1)
- Step 3. Saved K (current frame)
- Step 4. Ref. Frame K‑1 (base frame)
- Step 5. Ref. Frame K (updated base frame)
- Step 6. Mask_B (mask of the difference between the current frame and the base frame)
- Step 7. Rectangles (an array of rectangles bounding groups of connected minzon)
- Step 8. Objects K‑1 (an array of objects from the previous frame)
- Step 9. Objects K (an array of objects after processing the current frame)

The following notation is used for operations:

- Interpolate – frame filtering procedure;
- Minus – the difference between two frames;
- Morphology – filtering by operations of mathematical morphology;
- SearchRetangles – search for rectangles on the difference mask;
- ProcessObjects – search for new objects and tracking of old ones;
- PostProcessObjects – removal of unnecessary objects;
- SetRectAreaValue (rectangles, map) – marks the pixels belonging to the rectangular areas of the rectangles on the specified pixel map;
- UpdateRefFrame – updates the pixels of the base frame.

Figure 2. Software for detection and determination of the coordinates of moving objects on the video

Pressing the Algoritm button shows the preprocessing and moving-object detection algorithm (Figure 4). The software is developed in Delphi 7 and is installed on a computer connected to a surveillance camera. It captures images from the video in *.BMP format over a given time range and feeds the image sequences to the algorithm.
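The Morphology and SearchRetangles stages of Figure 1 are not specified in detail in the text. As a hedged illustration only, the sketch below applies a 3 × 3 morphological opening (erosion followed by dilation) to a binary difference mask, which removes isolated false-alarm pixels, and then returns the bounding rectangle of each 8-connected group of remaining pixels. The function names, the 3 × 3 structuring element, 8-connectivity, the border handling (only in-image neighbors are considered) and the (x_min, y_min, x_max, y_max) rectangle format are all assumptions; this is not the author's Delphi implementation.

```python
from collections import deque


def _apply_3x3(mask, keep):
    """Set a pixel to 1 if keep() holds for its in-image 3 x 3 neighborhood."""
    rows, cols = len(mask), len(mask[0])
    out = [[0] * cols for _ in range(rows)]
    for y in range(rows):
        for x in range(cols):
            neigh = [mask[y + dy][x + dx]
                     for dy in (-1, 0, 1) for dx in (-1, 0, 1)
                     if 0 <= y + dy < rows and 0 <= x + dx < cols]
            out[y][x] = 1 if keep(neigh) else 0
    return out


def erode(mask):
    return _apply_3x3(mask, all)   # stays 1 only if every neighbor is 1


def dilate(mask):
    return _apply_3x3(mask, any)   # becomes 1 if any neighbor is 1


def opening(mask):
    return dilate(erode(mask))


def search_rectangles(mask, min_pixels=1):
    """Bounding rectangles (x_min, y_min, x_max, y_max) of 8-connected regions."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    rects = []
    for y in range(rows):
        for x in range(cols):
            if not mask[y][x] or seen[y][x]:
                continue
            seen[y][x] = True
            queue = deque([(y, x)])
            x0 = x1 = x
            y0 = y1 = y
            count = 0
            while queue:  # breadth-first flood fill of one region
                cy, cx = queue.popleft()
                count += 1
                x0, x1 = min(x0, cx), max(x1, cx)
                y0, y1 = min(y0, cy), max(y1, cy)
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        ny, nx = cy + dy, cx + dx
                        if (0 <= ny < rows and 0 <= nx < cols
                                and mask[ny][nx] and not seen[ny][nx]):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
            if count >= min_pixels:
                rects.append((x0, y0, x1, y1))
    return rects
```

In this sketch, a single noisy pixel in the mask yields its own 1 × 1 rectangle unless the opening is applied first, which is one reason a morphology stage would precede the rectangle search.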
The program contains both the original versions of the functions that implement these operations and optimized versions, which can be used to speed up the detector if the processor is no lower than an Intel Pentium IV.

Discussion. This work reviewed methods of image processing and detection of moving objects in a stream of video frames. For a number of these methods, algorithms were implemented that allow the techniques to be applied in a moving-object detector, and a moving-object detector was developed on the basis of these algorithms. The detector is characterized by high detection quality, robustness to noise from recording equipment and to weather conditions, the ability to work in daylight and artificial light, and a processing speed of 8–10 frames per second for one video channel with a resolution of 640 × 480 pixels.

Figure 3. Formation of the report as a spreadsheet

Figure 4. Results of frame difference and median filtering

Modern technologies such as COM and DirectShow were used to implement the detector, and the bottlenecks of the algorithm were optimized with the MMX and SSE instruction sets to accelerate its operation. At all stages of development, individual parts of the algorithm, as well as the detector as a whole, were thoroughly tested.

Conclusion. Detecting and tracking moving objects are important topics in computer vision research. Classical detection and tracking methods for stationary cameras are not suitable for moving cameras, because the assumptions behind these two applications differ. In this work, algorithms were developed that can detect and track moving objects with a camera whose position is not fixed. The initial step of this research is to develop a new method for estimating camera motion parameters.

References:

1. Anam S., Uchino E., Suetake N. Image boundary detection using the modified level set method and a diffusion filter. Procedia Computer Science (17th International Conference in Knowledge Based and Intelligent Information and Engineering Systems), 2013; 22: 192–200.
2. Hussin R., Juhari M. R., Kang N. W., Ismail R. C., Kamarudin A. Digital image processing techniques for object detection from complex background image. Procedia Engineering, 2012; 41: 340–4.
3. Guyon C., Bouwmans T., Zahzah E. H. Robust principal component analysis for background subtraction: Systematic evaluation and comparative analysis, 2014.
4. Tareque M. H., Al Hasan A. S. Human lips-contour recognition and tracing. International Journal of Advanced Research in Artificial Intelligence, 2014; 3(1): 47–51.
5. Sujatha B., Santhanam T. Classical flexible lip model based relative weight finder for better lip reading utilizing multi aspect lip geometry. Journal of Computer Science, 2010; 6(10): 1065–9.
6. Sreenivas D. K., Reddy C. S., Sreenivasulu G. Contour approximation of image recognition by using curvature scale space and invariant-moment based method. International Journal of Advances in Engineering and Technology, 2014 May; 7(2): 359–71.
7. Yang C. et al. On-line kernel-based tracking in joint feature-spatial spaces. Demo at IEEE CVPR, 2004.
8. Zivkovic Z. Improved adaptive Gaussian mixture model for background subtraction. Proceedings of the 17th International Conference on Pattern Recognition (ICPR), IEEE, 2004: 28–31.