Statistical Thinking Tutorial: Parameter Estimation & Confidence Intervals

advertisement

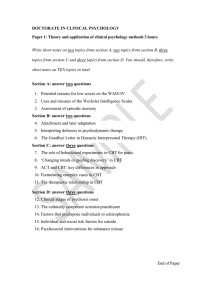

Statistical Thinking (ETC2420 / ETC5242) Tutorial – Week 3 Semester 2, 2024 library(tidyverse) library(kableExtra) library(gridExtra) library(broom) library(float) library(ggthemes) theme_set(theme_minimal(base_size = 12)) 1 Introduction This week we explore estimating parameters of interest, focusing on population means or comparisons of means between groups. We will practise working with real data, including creating appropriate visualisations and interpreting the results. The key statistical tool we use is a confidence interval. We will practise calculating these “by hand” as well as with the t.test() function. We also explore scenarios with both “paired” and “unpaired” data. To record your work, you should either create a new R Markdown file or download the R Markdown version of these notes, in which you can add your own notes and responses to each of the exercises. 2 Michaelson’s Speed of Light Data 2.1 Setting up The morley data frame contains observations from experiments undertaken by Albert Michelson in 1879 that aimed to measure the speed of light.1 There were a total of 100 measurements (Speed), coming from five different experiments (Expt), each repeated 20 times (Run). We are interested to see how the results from these experiments undertaken more than 140 years ago compare to our current knowledge. According to Wikipedia, the speed of light in a vacuum is 299,792,458 metres per second.2 Note that the Speed variable in the morley data frame represents the recorded speed in kilometres per second (km/s) after subtracting 299,000 km/s. Therefore, the value from Wikipedia corresponds to µW = 792.458 on that scale. Let µ represent the population average measurement from the Michelson’s experimental setup. In other words, if we could repeat the experimental runs identically a large number of times, the average measurement across them would be µ. For the purpose of today’s tutorial, assume that all of experiments and runs within 1 Michelson A (1882). Experimental Determination of the Velocity of Light Made at the U.S. Naval Academy, Annapolis. Astronomic Papers 1:135–138. U.S. Nautical Almanac Office. 2 https://en.wikipedia.org/wiki/Speed_of_light, accessed on 1 August 2024. 1 the experiments, are independent and were run in an identical manner. Therefore, we will treat the 100 distinct Speed values as a random sample from this population. The morley data frame should already be available in your R session (it is part of the datasets package, which is installed by default with a standard R installation). Let’s convert it to a tibble so we can use the tidyverse packages to work with it: light <- as_tibble(morley) 2.2 Data visualisation The data are continuous, so we could visualise them with a histogram. We’ll leave this as an exercise for you (see below). Instead, we demonstrate how to draw an estimated density plot (smoothed histogram) of the Speed variable (ignoring the Experiment and Run variables). We also add vertical lines to show some important values: red for the sample mean, blue for µW . light %>% ggplot(aes(x = Speed, y = after_stat(density))) + geom_density(fill = "cornsilk") + geom_vline(xintercept = mean(light$Speed), colour = "red") + annotate("text", x = mean(light$Speed), y = 0.0005, hjust = 0, label = "Sample mean", color = "red") + geom_vline(xintercept = 792.458, color = "blue") + annotate("text", x = 792.458, y = 0.0005, hjust = 1, label = "Wikipedia mean", color = "blue") + xlab("Speed (km/s, above 299,000)") 2 density 0.004 0.002 Wikipedia mean Sample mean 0.000 600 700 800 900 1000 Speed (km/s, above 299,000) Exercises 1–2 1. Describe these data. What conclusions can you draw from the plot? The density plots suggests that the data are multimodal (thus, probably not described well by a normal distribution). The data from the experiments seem to overestimate the speed of light. From the plot, we cannot easily determine if this difference is just due to sampling variation or a systematic difference (but later when we calculate the CI for y we’ll see that it is implausible that the difference is due only to sampling variation) 2. Draw a histogram of these data. 2.3 Summarise the data Now let’s produce a summary table, based on all 100 observations, containing the average speed (“mean”), the median speed (“median”), the standard deviation of the speed (“SD”) and the interquartile range (“IQR”). light_summary <- light %>% summarise(n = n(), mean = mean(Speed), median = median(Speed), SD = sd(Speed), IQR = IQR(Speed)) light_summary %>% kable() %>% kable_styling(latex_options = "hold_position") n mean median SD IQR 100 852.4 850 79.01055 85 In the above code, the first command creates the summaries, while the second one prints them out in a table. Both of these can be customised in many ways. For example, the SD statistic is displayed with far too many significant figures.3 We can specify that we want fewer by using the digits argument in kable(): 3 For a detailed discussion about significant figures and statistics, see the very final section in these notes. 3 light_summary %>% kable(digits = 1) %>% kable_styling(latex_options = "hold_position") n mean median SD IQR 100 852.4 850 79 85 Notice how in the above we have saved the summaries in a new tibble called light_summary. This was useful when we wanted to use this later multiple times, as we have here to demonstrate different ways to format the output. Explanation of these summary statistics: • The sample mean is the average of all of the measurements. • The sample median is a value for which half of the Speed values are larger and half of the Speed values are lower. Both the mean and the median are a measure of a “central” or “average” value of a distribution. In statistical jargon, we often refer to this concept as the location of the distribution. • The sample standard deviation (SD) is the square root of the sample variance, and is a measure of how “spread out” the values are. In statistical jargon, we refer to the concept of the “spread” of a distribution data as its dispersion. This can be quantified in different ways. One benefit of the standard deviation is that it is in the same units as the original observations. • The sample interquartile range (IQR) is another measure of dispersion, and is in the units of the original data. It represents the spread of the middle 50% of the data. For these data, the mean of the sample is a bit larger than the speed of light value from Wikipedia. The SD is small relative to the mean, and the IQR is also small. This suggests that the data are not too spread out. The median is also very close to the mean, so the data do not seem overly skewed. 2.4 Estimation Let’s use these data to estimate µ. We already have a point estimate from above; using the sample mean we have µ̂ = 852. To explicitly show the uncertainty of this estimate, it is better to calculate and report a confidence interval. Let’s do that, using the t.test() command as we saw in the lectures: t.test(x = morley$Speed)$conf.int ## [1] 836.7226 868.0774 ## attr(,"conf.level") ## [1] 0.95 Our 95% confidence interval for µ is (837, 868). How does this estimate compare to the value from Wikipedia? Notice that the confidence interval is substantially different to µW , which was about 792. Therefore, it is implausible that µ (the value that the experiments are centered around) is the same as µW . Michaelson’s experiments were generating values on average that were systematically higher than the true speed of light. 4 The fact that µ and µW clearly differ probably reflects some combination of weaknesses in the design of the experiment or the equipment that was available at the time, possible errors in the way the experiment was conducted, or errors in how the data were measured and processed. Exercises 3–6 3. Calculate the 95% confidence interval without using t.test(). # Step 1: Calculate the sample mean mean_x <- mean(x = morley$Speed) # Step 2: Calculate the sample standard deviation sd_x <- sd(x = morley$Speed) # Step 3: Determine the critical t-value for 95% confidence n <- length(x = morley$Speed) alpha <- 0.05 t_value <- qt(1 - alpha/2, df = n-1) # Step 4: Calculate the margin of error MOE <- t_value * sd_x / sqrt(n) # Step 5: Compute the confidence interval lower_bound <- mean_x - MOE upper_bound <- mean_x + MOE confidence_interval <- c(lower_bound, upper_bound) head(confidence_interval) ## [1] 836.7226 868.0774 light_summary$mean + c(-1,1) * qt(0.975, light_summary$n- 1) * light_summary$SD/sqrt(light_summary$n) ## [1] 836.7226 868.0774 4. Which of the following quantities are parameters and which are statistics? • µ • µW • µ̂ 5. If we were to repeat Michaelson’s experiments to get another 100 observations and used these to calculate a new 95% confidence interval, what is the probability that it would contain µ? What about µW ? Before actually doing the extra exeriment, we know that such a CI would have a probability of the 0.95 of containing u. This is a consequence of how we define and contruct We don’t know the exact probability that it would have uw. However, given that 6. For the confidence interval you calculated above, what is the probability that it contains µ? New LWI - New UCI: FIXED µ: FIXED Chance: Either 0 or 100% , no probability in here 5 3 Cognitive behavioural therapy: Does the treatment have an effect? Cognitive behavioural therapy (CBT) is a psychological treatment technique that aims to help a person change their thoughts (cognition) and their behavioural patterns. By learning to replace negative thoughts with more positive ones, and to correspondingly modify negative behaviours with the aim of improving feelings of anxiety and/or depression. Although CBT has been one of the most important treatments for anxiety and depression over many decades now, new methods for delivering the CBT treatment are regularly sought to try to improve the effectiveness of the general technique. A recent study was undertaken to assess such a new CBT delivery method. In total, 60 people were recruited to voluntarily participate in the study, all participants having had a recent clinically confirmed episode of anxiety or depression, or both. Each study participant was asked to complete a certain psychological assessment on two occasions, once before the new CBT delivery treatment method was applied, and once at the end of the treatment. As higher scores on the assessment are associated with an increase in anxiety and depression, it is hoped that the individual scores will be reduced following the new CBT treatment, compared with the corresponding scores obtained at the start of the study. Each participant’s scores on these two assessments are contained in the file CBT.csv. Each row of this file corresponds to one of the sixty (60) subjects who participated in the CBT delivery method experiment. Column 1 corresponds to the individual participant’s case number (case), while the values stored in columns 2 and 3 of each row (score1 and score2) relate to the assessment scores for the participant corresponding to the row case number, with score1 the score of the assessment completed before the start of CBT treatment and score2 the score of the assessment completed following the end of CBT treatment. In this example, we are interested in whether this CBT delivery method leads to a change in anxiety and depression. The simplest such change would be a difference in the scores measured in the study. Let µ1 be the population average assessment score before the treatment, and µ2 be the score after the treatment. Let their difference be δ = µ2 − µ1 . We want to estimate δ. Notice that we have paired data here. That means we will be able to take differences in the scores on a per-subject basis when estimating δ. 3.1 Setting up Start by downloading the CBT.csv file and import it into R as tibble: CBT <- read_csv("CBT.csv") view(CBT) 3.2 Data visualisation The following exercises will take you through producing two different visualisations of these data, positioned side-by-side. You might need to look up information on some of the necessary R functions if they are unfamiliar to you. Exercises 7–9 7. To begin with, add a new column to CBT that calculates the difference between the two scores for each subject. Specifically, call the new column Diff and define it as score2 minus score1. (Hint: use the mutate() function.) 6 CBT <- CBT %>% mutate(Diff = score2 - score1) CBT ## # A tibble: 60 x 4 ## case score1 score2 Diff ## <dbl> <dbl> <dbl> <dbl> ## 1 1 26.4 29.8 3.41 ## 2 2 32.2 30.7 -1.55 ## 3 3 28.5 13.3 -15.2 ## 4 4 23.6 28.2 4.65 ## 5 5 30.4 31.6 1.24 ## 6 6 28.2 20.8 -7.44 ## 7 7 31.6 20.8 -10.8 ## 8 8 44.6 32.9 -11.8 ## 9 9 34.1 30.7 -3.41 ## 10 10 30.4 24.5 -5.89 ## # i 50 more rows 8. For the first plot, we want an estimated density plot of these differences, similar in style to the plot we made for the speed of light data in the previous section. Create such a plot. Include a vertical red line showing the sample mean (of Diff) and a vertical black line at zero. 9. For the second plot, we want to display the distributions of the two scores side-by-side. To do that easily in the tidyverse, it will help if we first restructure the tibble so the scores are all in a single column; this is known as a “long” format (in contrast to a “wide” format, where such values are in separate columns). The pivot_longer() function can do this for us, as follows: CBT_longer <- CBT %>% pivot_longer(cols = 2:3, names_to = "assess", values_to = "score") CBT_longer ## # A tibble: 120 x 4 ## case Diff assess score ## <dbl> <dbl> <chr> <dbl> ## 1 1 3.41 score1 26.4 ## 2 1 3.41 score2 29.8 ## 3 2 -1.55 score1 32.2 ## 4 2 -1.55 score2 30.7 ## 5 3 -15.2 score1 28.5 ## 6 3 -15.2 score2 13.3 ## 7 4 4.65 score1 23.6 ## 8 4 4.65 score2 28.2 ## 9 5 1.24 score1 30.4 ## 10 5 1.24 score2 31.6 ## # i 110 more rows This gives us a new tibble, with the scores together in a single column called score, and a new column called assess that specifies the type of each score (either score1 or score2). Using this new tibble, create sideby-side violin plots to compare the distribution of the two scores. (Hint: use the geom_violin() function as part of your plotting commands.) Suppose that we saved these two plots into objects called p2density and p2violin respectively. We can display these plots side-by-side with the following command: 7 grid.arrange(p2density, p2violin, ncol = 2) 3.3 Further analyses and estimation Exercises 10–12 10. Produce a summary tibble, based on all 60 observations of Diff, that displays the number of observations n, and the sample mean, median, standard deviation and interquartile range. 11. Calculate a 95% confidence interval for Diff. 12. What insights can you draw your plots and data analyses? 4 Birth weight Load the birthwt data from the MASS package into your R session (install the package if you do not have it). library(MASS) data(birthwt) Take a look at these data and review the R help page for them. For today we will focus only on the variables named bwt and smoke. Exercises 13–16 13. Briefly explain what these two variables represent. 14. Produce at least one appropriate visualisation of the bwt variable, in relation to the smoking status of the mother during pregnancy. You are not required to produce exactly the same type of plots as above, rather produce what you think is of interest. (Have a go on your own to begin with. After that, have a look at Section 6.4 of the R Graphics Cookbook for some more ideas.) 15. Are the two groups independent samples? Explain why or why not. 16. Produce a summary tibble that displays the number of observations where the mother smoked (call it n1), and the sample mean birth weight of babies born to these mothers (call it mean1), along with the corresponding standard deviation (call it SD1). Display these in a table. Repeat this for the birth weights of babies born to mothers who did not smoke (with similar variable names but with a “0” instead of a “1”). Hint: you might like to use the filter() function. 4.1 Comparison of means Let µ1 and µ0 be respectively the population mean birth weights of babies whose mothers smoke, or do not smoke, during pregnancy. Let their difference be δ = µ1 − µ0 . Exercises 17–18 17. Calculate an approximate 95% confidence interval for δ. 18. Interpret the output in the context of the setting. 8 5 A note on significant figures In any final reports, we should only represent our data with enough significant figures as is warranted by the precision of those data. Using any more than this is misleading, and is referred to as false precision or spurious precision. For example, if we measure the width of a phone with a ruler that has tick marks at every millimeter (mm), then we should only report our measurement to the nearest mm. If we said that the phone was 4.3671 mm wide, no one should believe the last few digits. Similarly, when we report any statistics, we should be mindful that they also have an inherent precision. Remember that all statistics have a sampling distribution, and we can think of the variation in that distribution as being how precise we can “measure” that statistic. We can estimate this precision by calculating the standard error for that statistic; typically we do this when we are using the statistic as an estimator. Therefore, when we report statistics, we should take care to not overstate the precision. For example, suppose we are estimating θ and for a given sample we get θ̂ = 12.107348 and se(θ̂) = 3.11203. Is that how we should write it? Is anyone really going to believe that the third decimal place in θ is 7, given these data? Given the standard error, it’s quite plausible that the true value is not even close to 12, let alone worrying about the smaller decimal places. For this reason, it is good practice to round these values to many fewer digits when we display them in any final reports. In this case, we could write θ̂ = 12.1 and se(θ̂) = 3.1. You probably already do this type of simplification naturally in many commonplace contexts. For example, would you say that the number of people in the world is 8.1257 billion? More likely you would say “around 8.1 billion”, reflecting the fact that no one has actually counted this precisely. All of this only applies when we are reporting or communicating the final results. If are storing or reporting any intermediate values that need to be fed into further calculations, then we should retain as much precision as possible to avoid accumulating rounding errors. You can read more at: https://en.wikipedia.org/wiki/False_precision https://en.wikipedia.org/wiki/Significant_figures 9