NEW

EDITION

MACHINE

LEARNING

ATO Z -i

MASTERY FROM A TO Z WITH CODE

PRACTICAL EXAMPLES,

ALGORITHMS, AND APPLICATION.

VAN SHI KA

INDEX

Introduction to machine learning

1.

•

•

•

2.

Data in Machine Learning: The

backbone of insights

•

•

•

•

•

3.

In a nutshell, machine learning

Key point

features of machine learning

Type of data

Data splitting

Data preprocessing

Data advantages

Data disadvantages

Machine Learning: Pioneering

Innovations in Diverse Fields

4.

Work?

•

How Does Machine Learning

How machine learning engineers typically work

5.

Selecting a machine learning

algorithm

6.

•

•

•

7.

•

•

•

8.

•

Three sets

Training set

Validation set

Test set

Types of machine learning

Supervised learning

Unsupervised learning

Reinforcement learning

Supervised learning

Classification

•

Regression

9.

•

•

•

•

•

•

•

•

•

•

•

Classification

Introduction to Classification

Type of classification

Common classification algorithms

Type of learners in classification algorithm

Evaluating classification models

How classification works

Applications of classification algorithm

Implementation

Understanding classification in data mining

Steps in building a classification model

Importance of classification in data mining

10.

Explanation

11.

Classification & Regression

12.

Regression

•

•

•

•

•

Linear regression

Cost function for linear regression(squared error)

Gradient descent or stochastic gradient descent

Batch gradient descent

Batch SGD vs Minibatch SGD vs SGD

• Explain Briefly Batch Gradient Descent,

Stochastic Gradient Descent, and Mini-batch

Gradient Descent?

• List the Pros and Cons of Each.

• Polynomial Regression

• Overfitting and Underfitting

13.

Type of Regression

• Linear regression

14.

Cost function for Linear

regression

•

Type of Cost function in linear regression

15.

Naive Bayes

16.

Prior probabilities

17.

Evaluating your Naive Bayes

Classifiers

• Type of naive bayes classifiers

• Example

18.

•

•

Gradient Descent

Implementation

How does the gradient descent algorithm work

19.

Stochastic Gradient Descent

20.

Batch Gradient Descent

21.

Mini-Batch Gradient Descent

•

Key differences

22.

•

Polynomial Regression

How does it work

23.

Overfitting

• Why does overfitting occur?

•

How can you detect overfitting?

24.

Underfitting

• Why does underfitting occur?

• How can you detect underfitting?

25.

•

Unsupervised learning

How unsupervised learning works

26.

27.

Clustering algorithms

Anomaly detection algorithm

28.

Dimensionality Reduction

29.

What is Predictive Modeling?

30.

Why Dimensionality Reduction

important in machine learning &

Predictive Modeling?

31.

Two components of

Dimensionality Reduction

•

•

Feature selection

Feature extraction

32.

•

•

Decision tree

Gini Index

Information Gain

33.

Gini Index

34.

Information Gain

35.

Logistic regression

•

•

•

Type of logistic regression

How does logistic regression work?

Logistic regression equation

36.

Neural Networks

37.

Neural Networks: How They

Mimic The Brain

Introduction

to Machine Learning

~ It gives computers the ability to learn from data and

become more humans like in their behavior.

~ ML is based on statistical techniques and algorithms

to find patterns in data and make predictions.

~ Unlike traditional programming, ML models learn

from input data and generate their own programs.

~ Good quality data is essential for training ML models.

~ Different algorithms are used based on the type of

data and the task at hand.

In a nutshell, Machine Learning is

•

•

AI Subset: It's a part of Artificial Intelligence.

Automated Learning: Machines learn and

improve with minimum human intervention.

• Data~Driven: Models are trained on data to

find patterns and make decisions.

• No Explicit Programming: Unlike

traditional programming, ML models generate

their own programs.

Key Points

~ ML allows computers to learn from data and make

decisions like humans.

~ It's a subset of AI and relies on statistical techniques

and algorithms.

~ Input data and outputs are used to train ML models

during the learning phase.

~ ML requires good quality data for effective training.

~ Different algorithms are used depending on the data

and the task.

Remember: Machine Learning enables computers to

learn and adapt, making it an exciting and powerful

technology!

Features of machine learning

• Machine learning and data have a closely intertwined

relationship. Machine learning algorithms are designed

to learn and make predictions based on patterns

present in the data. The basic idea is that machine

learning models are trained on historical data to extract

patterns and relationships. Once trained, these models

can be used to make predictions or decisions on new,

unseen data.

• . By analyzing large volumes of data, organizations

can gain a deeper understanding of trends, customer

behaviors, market dynamics, and more..

• They can handle complex and unstructured data

types, such as images, text, and sensor data, which

may be challenging for traditional programming

approaches.

For example, in finance, machine learning models can

predict stock prices or detect fraud based on historical

transaction data. In healthcare, machine learning models

can predict disease outbreaks or assist in diagnosing

medical conditions based on patient data and medical

records.

• . By analyzing customer interactions, purchase history,

and browsing patterns, machine learning models can

create personalized experiences for customers.

Data in Machine Learning:

The

Backbone of

Insights

~ Crucial Component: Data forms the foundation of Machine

Learning, providing observations or measurements used to

train ML models.

~ Quality and Quantity: The performance of ML models

heavily relies on the quality and quantity of available data

for training and testing.

~ Various Forms: Data can be numerical, categorical, or

time~series, sourced from databases, spreadsheets, or APIs.

~ Learning Patterns: ML algorithms use data to learn

patterns and relationships between input variables and

target outputs for prediction and classification tasks.

Types of Data

1. Labeled Data: Includes a target variable for

prediction.

2. Unlabeled Data: Lacks a target variable.

3. Numeric Data: Represented by measurable values

(e.g., age,

income).

4. Categorical Data: Represents categories (e.g.,

gender, fruit type).

5. Ordinal Data: Categorical data with ordered

categories (e.g., clothing sizes, customer satisfaction scale).

Data Splitting

~ Training Data: Used to train the model on input~output

pairs.

~ Validation Data: Used to optimize model

hyperparameters during training.

~ Testing Data: Evaluates the model's performance on

unseen data after training.

Data Preprocessing

~ Cleaning and Normalizing: Preparing data for analysis

by handling missing values and scaling features.

~ Feature Selection/Engineering: Selecting relevant

features or creating new ones to improve model

performance.

Data Advantages

~ Improved Accuracy: More data allows ML models to

learn complex relationships, leading to better predictions.

~ Automation: ML automates decision~making and

repetitive tasks efficiently.

~ Personalization: ML enables personalized experiences

for users, increasing satisfaction.

~ Cost Savings: Automation reduces manual labor and

increases efficiency, leading to cost savings.

Data Disadvantages

~ Bias: Biased data can result in biased predictions and

classifications.

~ Privacy Concerns: Data collection raises privacy and

security risks.

~ Quality Impact: Poor data quality leads to inaccurate

model predictions.

~ Lack of Interpretability: Some ML models are complex

and hard to interpret, making decision understanding

difficult.

Machine Learning: Pioneering Innovations

in Diverse Fields

APPLICATIONS :

1. Image Recognition:

- Evolving from basic cat-dog classification to

sophisticated face recognition.

- Revolutionizing healthcare with disease recognition and

accurate diagnosis.

2. Speech Recognition:

- Empowering smart systems like Alexa and Siri for

seamless interactions.

- Enabling convenient voice-based Google searches and

virtual assistants.

3. Recommender Systems:

- Personalizing services based on user preferences and

search history.

- Examples: YouTube video recommendations,

personalized Netflix movie suggestions.

4. Fraud Detection:

- Efficiently identifying and preventing fraudulent

transactions and activities.

- Providing real-time notifications for suspicious user

behavior.

5. Self-Driving Cars:

- Enabling cars to navigate autonomously without human

intervention.

- Tesla cars as prominent examples of successful

autonomous driving technology.

6. Medical Diagnosis:

- Achieving high accuracy in disease classification and

diagnosis.

- Utilizing machine learning models for detecting human

and plant diseases.

7. Stock Market Trading:

- Predicting future price trends and market values through

time series forecasting.

- Assisting traders with intelligent systems for data-driven

decision-making.

8. Virtual Try On:

- Providing virtual simulation for trying on products like

glasses or accessories.

- Utilizing facial recognition to accurately place virtual

items on users' faces.

How Machine Learning

Engineers Typically Work:

1. Use of Libraries: Machine learning engineers often

leverage existing libraries and frameworks rather than

implementing algorithms from scratch.

2. Open Source Libraries: Many machine

learning libraries are open source, making them

accessible to a wide range of developers and

researchers.

3. Efficiency and Stability: Libraries are

developed and maintained to ensure stability and

efficiency in implementing complex algorithms.

4. Algorithm Selection: Engineers choose

libraries based on the specific problem they are trying

to solve. For example, they might use scikit-learn for

traditional machine learning tasks.

5. Training Models: To train a machine learning

model, engineers typically follow a systematic process:

def train(x, y):

from sklearn.linear_model import LinearRegression

model = LinearRegression().fit(x,y)

return model

model = train(x,y)

x_new = 23.0

y_new = model.predict(x_new)

print(y_new)

•

Import the necessary library or module (e.g.,

from sklearn.linear_model import

LinearRegression ).

•

Instantiate a model object (e.g., model =

LinearRegression() ).

•

Train the model using labeled data (e.g.,

model.fit(x, y) ).

•

Return the trained model (e.g., return model ).

6. Making Predictions: Once the model is

trained, it can be used to make predictions:

•

Provide new input data (e.g., x_new = 23.0 ).

•

Use the trained model to predict the output

(e.g., y_new = model.predict(x_new) ).

7. Output: Engineers often print or use the

predicted values for further analysis or decision­

making.

8. Iterative Process: Machine learning is often

an iterative process. Engineers may adjust

hyperparameters, try different algorithms, or fine-tune

the model based on performance evaluation.

9. Data Handling: Data preparation, cleaning,

and feature engineering are crucial steps before

training a machine learning model.

10. Evaluation: Engineers evaluate model

performance using various metrics and techniques to

ensure it meets the desired criteria.

11. Deployment: In real-world applications,

models are deployed to production environments, often

using platforms like cloud services or APIs.

12. Monitoring: Engineers monitor the deployed

models for performance, drift, and potential issues,

ensuring they remain effective over time.

In the example you provided, the engineer is using scikit-

learn, a popular machine learning library in Python, to train

a linear regression model. They follow a systematic

approach to load the library, create and train the model, and

make predictions. This is a common workflow for machine

learning engineers when working with established libraries

to solve real-world problems.

Selecting a machine learning

algorithm

1. Explainability:

•

Consider whether your model needs to be

explainable to a non-technical audience.

•

Some accurate algorithms, like neural networks,

can be "black boxes," making it challenging to

understand and explain their predictions.

•

Simpler algorithms such as kNN, linear

regression, or decision trees offer more

transparency in how predictions are made.

2. In-memory vs. Out-of-memory:

•

Determine if your dataset can fit into the

memory (RAM) of your server or computer.

•

If it fits in memory, you have a broader range of

algorithms to choose from.

•

If not, consider incremental learning algorithms

that can handle data in smaller chunks.

3. Number of Features and Examples:

•

Assess the number of training examples and

features in your dataset.

•

Some algorithms, like neural networks and

gradient boosting, can handle large datasets with

millions of features.

•

Others, like SVM, may perform well with more

modest capacity.

4. Categorical vs. Numerical Features:

•

Identify if your data consists of categorical

features, numerical features, or a mix.

•

Certain algorithms require numeric input,

necessitating techniques like one-hot encoding for

categorical data.

5. Nonlinearity of the Data:

•

Determine whether your data exhibits linear

separability or can be effectively modeled with

linear techniques.

•

Linear models like SVM with linear kernels,

logistic regression, or linear regression are suitable

for linear data.

•

Complex, nonlinear data may require deep

neural networks or ensemble algorithms.

6. Training Speed:

•

Consider the time allowance for training your

model.

•

Some algorithms, like neural networks, are

slower to train, while simpler ones like logistic

regression or decision trees are faster.

•

Parallel processing can significantly speed up

certain algorithms like random forests.

7. Prediction Speed:

•

Evaluate the speed requirements for generating

predictions, especially if the model will be used in

production.

•

Algorithms like SVMs, linear regression, or

logistic regression are fast for prediction.

•

Others, like kNN or deep neural networks, can

be slower.

8. Validation Set Testing:

•

If unsure about the best algorithm, it's common

to test multiple algorithms on a validation set to

assess their performance.

•

The choice of algorithm can be guided by

empirical testing and validation results.

These considerations help machine learning engineers make

informed decisions when selecting the most suitable

algorithm for a specific problem and dataset. The choice of

algorithm should align with the problem's requirements,

data characteristics, and computational constraints.

Three sets (Training set, Validation set,

and Test set)

Models learn the task

Which model

is the best?

How good

is this

model truly?

Certainly, here are the important key points about the use

of three sets (training set, validation set, and test set) in

machine learning:

1. Three Sets of Labeled Examples:

•

In practical machine learning, data analysts

typically work with three subsets of labeled

examples: training set, validation set, and test set.

•

These sets are used for different purposes in

the model development process.

2. Data Splitting:

•

After obtaining an annotated dataset, the first step is

to shuffle the examples randomly.

•

The dataset is then divided into three subsets:

training, validation, and test.

3. Set Sizes:

•

The training set is usually the largest, used for

building the machine learning model.

•

The validation and test sets are smaller and roughly

of similar size.

•

The model is not allowed to use examples from the

validation and test sets during training, which is why

they are often called "hold-out sets."

4. Proportion of Split:

There is no fixed or optimal proportion for splitting

•

the dataset into these three subsets.

In the past, a common rule of thumb was 70% for

•

training, 15% for validation, and 15% for testing.

In the age of big data, it may be reasonable to

•

allocate 95% for training and 2.5% each for validation

and testing.

5. Purpose of Validation Set:

•

The validation set serves two main purposes:

5.1.

It helps choose the appropriate learning algorithm.

5.2.

It assists in finding the best values for

hyperparameters.

6. Purpose of Test Set:

•

The test set is used to assess the model's

performance objectively.

•

It ensures that the model performs well on data

it hasn't seen during training.

7. Preventing Overfitting:

•

The use of validation and test sets helps prevent

overfitting, where a model becomes too specialized in

predicting the training data but fails on new, unseen

data.

8. Model Generalization:

•

The ultimate goal is to build a model that generalizes

well to new, unseen examples.

•

Performance on the validation and test sets provides

insights into the model's ability to generalize.

Using these three sets helps ensure that the machine

learning model is robust, performs well on unseen data, and

is suitable for practical use, rather than just memorizing

training examples.

Types of Machine Learning

1. Supervised Learning:

- Introduction: Supervised learning involves training a

model on labeled data, where the target variable is known.

- Learning Process: The model learns from input-output

pairs to make predictions on new, unseen data.

- Common Algorithms: Linear Regression, Decision Trees,

Support Vector Machines (SVM), Neural Networks.

2. Unsupervised Learning:

- Introduction: Unsupervised learning deals with unlabeled

data, where the model explores patterns and relationships

within the data on its own.

- Learning Process: The model identifies hidden structures

or clusters in the data without any explicit guidance.

Common Algorithms: K-Means Clustering, Hierarchical

Clustering,PrincipalComponentAnalysis(PCA).

Unsupervised Learning

Input

Output

3. Reinforcement Learning:

- Introduction: Reinforcement learning involves an agent

interacting with an environment to achieve a goal.

- Learning Process: The agent takes actions, receives

feedback (rewards/punishments), and adjusts its strategy to

maximize rewards.

- Applications: Game playing, Robotics, Autonomous

vehicles.

Supervised Learning

Here's an example of

supervised learning using

Python code with the scikitlearn library and a simple

linear regression algorithm:

#

Import the necessary libraries

from sklearn import linear.model

import numpy as np

#

Sample input data (features)

X = np.array([[l], [2], [3], [4], [5]])

#

Corresponding output data (labels)

y = np.array([2, 4, 5, 4, 5])

#

Create a linear regression model

model = linear_model.LinearRegression()

#

Fit the model to the data (training)

model.fit(X, y)

#

Make predictions

X.new = np.array([[6]])

prediction = model.predict(X.new)

#

Print the prediction

Introduction to Classification

- Classification is the process of categorizing data or objects

into predefined classes or categories based on their features

or attributes.

- It falls under supervised machine learning, where an

algorithm is trained on labeled data to predict the class or

category of new, unseen data.

Types of Classification

1. Binary Classification:

- Involves classifying data into two distinct classes or

categories.

- Example: Determining whether a person has a certain

disease or not.

2. Multiclass Classification:

- Involves classifying data into multiple classes or

categories.

- Example: Identifying the species of a flower based on its

characteristics.

Common Classification Algorithms

- Linear Classifiers: Create a straight decision boundary

between classes.

Examples Logistic Regression and Support Vector Machines (SVM).

- Non-linear Classifiers: Create complex decision

boundaries between classes. Examples K-Nearest Neighbors and Decision Trees.

Types of Learners in Classification Algorithms

- Slow Learners: Make predictions based on stored training

data. Examples include k-nearest neighbors.

- Fast Learners: Create models during training and use

them for predictions. Examples include decision trees and

support vector machines.

Evaluating Classification Models

- Classification Accuracy: Measures how many instances

are correctly classified out of the total instances.

- Confusion Matrix: Shows true positives, true negatives,

false positives, and false negatives for each class.

- Precision and Recall: Useful for imbalanced datasets,

measuring true positive rate and true negative rate.

- F1-Score: Harmonic mean of precision and recall for

imbalanced datasets.

- ROC Curve and AUC: Analyze classifier performance at

different thresholds.

- Cross-validation: Obtaining a reliable estimate of model

performance.

How Classification Works

1. Understanding the Problem: Define class labels and

their relationship with input data.

2. Data Preparation: Clean and preprocess data, split into

training and test sets.

3. Feature Extraction: Select relevant features from the

data.

4. Model Selection: Choose an appropriate classification

model.

5. Model Training: Train the model on the labeled training

data.

6. Model Evaluation: Assess the model's performance on

a validation set.

7. Fine-tuning the Model: Adjust model parameters for

better performance.

8. Model Deployment: Apply the trained model to make

predictions on new data.

Applications of Classification Algorithms

- Email spam filtering

- Credit risk assessment

- Medical diagnosis

- Image classification

- Sentiment analysis

- Fraud detection

- Quality control

- Recommendation systems

IMPLEMENTATION - Don’t worry the code explained

line by line .

# Importing the required libraries

import numpy as np

import pandas as pd

from sklearn.model_selection import train_test_split

from sklearn.metrics import accuracy_score

from sklearn import datasets

from sklearn import svm

from sklearn.tree import DecisionTreeClassifier

from sklearn.naive_bayes import GaussianNB

# import the iris dataset

iris = datasets.load_iris()

X = iris.data

y = iris.target

# splitting X and y into training and testing sets

X_train, X_test, y_train, y_test = train_test_split(

X, y, test_size=0.3, random_state=1)

1. # GAUSSIAN NAIVE BAYES

gnb = GaussianNB()

# train the model

gnb.fit(X_train, y_train)

# make predictions

gnb_pred = gnb.predict(X_test)

# print the accuracy

print("Accuracy of Gaussian Naive Bayes: ",

accuracy_score(y_test, gnb_pred))

2. # DECISION TREE CLASSIFIER

dt = DecisionTreeClassifier(random_state=0)

# train the model

dt.fit(X_train, y_train)

# make predictions

dt_pred = dt.predict(X_test)

# print the accuracy

print("Accuracy of Decision Tree Classifier: ",

accuracy_score(y_test, dt_pred))

3.# SUPPORT VECTOR MACHINE

svm_clf = svm.SVC(kernel = 'linear') -- # Linear Kernel

# train the model

svm_clf.fit(X_train, y_train)

# make predictions using svm

svm_clf_pred = svm_clf.predict(X_test)

# print the accuracy

print("Accuracy of Support Vector Machine: ",

accuracy_score(y_test, svm_clf_pred))

Output:

Accuracy of Gaussian Naive Bayes:

0.9333333333333333

Accuracy of Decision Tree Classifier:

Accuracy of Support Vector Machine:

0.9555555555555556

1.0

Understanding Classification in Data Mining

Definition of Data Mining: Data mining is the process of

analyzing and exploring large datasets to discover patterns

and gain insights from the data. It involves sorting and

identifying relationships in the data to solve problems and

perform data analysis.

Introduction to Classification: Classification is a data

mining task that involves assigning class labels to instances

in a dataset based on their features. The goal of

classification is to build a model that can accurately predict

the class labels of new instances based on their features.

Steps in Building a Classification Model:

1. Data Collection: Collect relevant data for the problem

at hand from various sources like surveys, questionnaires,

and databases.

2. Data Preprocessing: Handle missing values, deal with

outliers, and transform the data into a suitable format for

analysis.

3. Feature Selection: Identify the most relevant attributes

in the dataset for classification.

4. Model Selection: Choose the appropriate classification

algorithm, such as decision trees, support vector machines,

or neural networks.

5. Model Training: Use the selected algorithm to learn

patterns in the data from the training set.

6. Model Evaluation: Assess the performance of the

trained model on a validation set and a test set to ensure it

generalises well.

Importance of Classification in Data Mining:

- Classification is widely used in various domains, including

email filtering, sentiment analysis, and medical diagnosis.

- It helps in making informed decisions by categorizing data

into meaningful classes.

- By predicting outcomes, it assists in identifying risks and

opportunities.

Independent

m* Variables

Classification

Model

rataonrirai

outpX^ble

Figure 2: Classification of vegetables and groceries

#Everything explained in detail 1-14

Supervised Learning Types:

Supervised learning, a cornerstone of machine learning,

finds widespread utility across diverse domains such as

finance, healthcare, and house-price. It represents a

category of machine learning wherein algorithms are trained

using labelled data to foresee outcomes based on input

data.

In supervised learning, the algorithm forges a link between

input and output data. This relationship is discerned from

labelled datasets, containing pairs of input-output examples.

The algorithm endeavors to comprehend the connections

between input and output, equipping it to make precise

forecasts on fresh, unencountered data.

Labeled datasets in supervised learning encompass input

features coupled with corresponding output labels. Input

features encapsulate data attributes that inform predictions,

while output labels signify the desired results the algorithm

aims to predict.

Supervised learning diverges into two principal classes:

regression and classification. In regression, the algorithm

grapples with predicting continuous outcomes, be it

estimating the value of a house or gauging the temperature

of a city. In contrast, classification tackles categorical

predictions, like discerning whether a customer is likely to

embrace a product or abstain.

A cardinal benefit of supervised learning is its capacity to

craft intricate models proficient in making accurate

predictions on novel data. Nonetheless, this proficiency

demands an abundant supply of accurately labelled training

data. Additionally, the caliber and inclusiveness of the

training data wield a substantial influence on the model's

precision.

Supervised learning further fragments into two

branches:

1. Regression: Inferring Continuity

- Linear Regression:

- Polynomial Regression:

- Decision Trees for Regression:

2. Classification:

- Logistic Regression:

- Decision Trees for Classification:

- Support Vector Machines (SVM) for Classification:

■

■

Aspect

Classification

Regression

Purpose

Predicts categorical outcomes

Predicts continuous numeric

outcomes

Output

Discrete classes or labels

Continuous values

Example

Email spam detection

House price prediction

Target

Class labels (e.g., spam or not spam)

Numeric values (e.g., temperature)

Algorithms

Logistic Regression, Decision Trees,

Linear Regression, Polynomial

Support Vector Machines, Neural

Regression, Decision Trees,

Networks, etc.

Support Vector Machines, etc.

Accuracy, Precision, Recall, F1-score

Mean Squared Error, R-squared, etc.

Visualization

Confusion Matrix, ROC Curve, etc.

Scatter Plots, Residual Plots, etc.

Use Cases

Image recognition, Sentiment

Stock market prediction,

Evaluation

Metrics

Analysis

Medical diagnosis, Customer churn,

Population growth estimation,

Fraud detection, etc.

Sales forecasting, etc.

Task

Algorithm

Explanation

Example

Classification

Decision Tree

Hierarchical structure to

Predicting whether an

classify data based on

email is spam or not

features

Classification

Classification

Classification

Regression

Regression

Regression

Random Forest

Ensemble of decision trees

Identifying handwritten

Classifier

for improved classification

digits (0-9)

K - Nearest

Classifies based on majority

Identifying customer

Neighbors

class among k-nearest

segments for targeted

neighbors

marketing

Support Vector

Creates optimal decision

Detecting fraudulent credit

Machine

boundary to separate classes

card transactions

Linear

Predicts continuous values

Estimating house prices

Regression

using linear relationship

based on features

Polynomial

Fits curves to data for more

Modeling stock market

Regression

complex relationships

price trends

Decision Trees

Uses tree structure to predict

Forecasting future

for Regression

continuous values

temperature based on

weather data

Regression

Random Forest

Ensemble of decision trees

Predicting energy

Regressor

for improved regression

consumption in a building

#explanation

1. Machine Learning:

Machine learning is the process of enabling machines to

learn from data and improve their performance over time

without being explicitly programmed.

- Example: An email spam filter that learns to identify

spam messages based on patterns in the text content.

2. Supervised Learning:

Supervised learning is a type of machine learning where

the algorithm learns from labeled data, which includes input

features and corresponding output labels.

- Example: Training a model to predict housing prices

using historical data where each data point includes

features like square footage and location along with the

actual sale price.

3. Labeled Datasets:

Labeled datasets consist of input data points along with

the correct corresponding output labels, which serve as the

ground truth for training the model.

- Example: A dataset containing images of cats and dogs

along with labels indicating whether each image contains a

cat or a dog.

4. Regression:

Regression is a type of supervised learning where the goal

is to predict continuous numerical values based on input

features.

- Example: Predicting a person's annual income based on

factors such as education level, work experience, and

location.

5. Classification:

Classification is a type of supervised learning where the

goal is to categorize input data into predefined classes or

categories.

- Example: Classifying emails as either spam or not spam

based on the words and phrases contained in the email

content.

6. Linear Regression:

Linear regression is a regression algorithm that aims to

find a linear relationship between input features and the

predicted output.

- Example: Predicting the price of a used car based on its

mileage and age using a straight-line equation.

7. Logistic Regression:

Logistic regression is a classification algorithm that

predicts the probability of a binary outcome (e.g., true/false

or 0/1).

- Example: Determining whether a customer will churn

(cancel their subscription) from a service based on factors

like usage history and customer satisfaction.

8. Decision Trees:

Decision trees are hierarchical structures that make

decisions based on the values of input features, leading to

different outcomes.

- Example: Predicting whether a flight will be delayed

based on factors like departure time, airline, and weather

conditions.

9. Random Forests:

Random forests are an ensemble of multiple decision

trees that work together to make more accurate predictions.

- Example: Classifying customer preferences for a product

based on multiple factors like age, income, and purchase

history using a collection of decision trees.

10. Support Vector Machines (SVM):

SVM is a powerful algorithm used for both classification

and regression tasks that finds the optimal hyperplane to

separate data points into different classes.

- Example: Classifying whether a given email is a

phishing attempt or not based on features like sender

address, subject, and content using a support vector

machine.

11. Enabling Machines to Learn:

This refers to the process of allowing machines to

improve their performance by analyzing and understanding

patterns in data.

- Example: Teaching a self-driving car to navigate through

traffic by learning from the behavior of human drivers.

12. Input Features:

Input features are the attributes or characteristics of the

data that are used to make predictions.

- Example: In a weather prediction model, input features

could include temperature, humidity, and wind speed.

13. Output Labels:

Output labels are the desired outcomes or targets that

the algorithm aims to predict.

- Example: For a medical diagnosis model, the output

label could indicate whether a patient has a specific disease

or not.

14. Training Data and Testing Data:

Training data is used to teach the model, while testing

data is used to evaluate the model's performance.

- Example: Training a language translation model using

pairs of sentences in two languages, and then testing its

accuracy on new, unseen sentences.

(Note: The examples provided are for illustrative purposes

and may not reflect real-world accuracy or complexity.)

Classification and Regression

1.classification:

• Objective: Classification is a supervised learning task

where the goal is to assign predefined labels or

categories to input data based on its features. It's used

for tasks like spam detection, image recognition,

sentiment analysis, and more.

• output : The output of a classification model is a

discrete class label or category. It's typically

represented as a single value, such as "spam" or "not

spam," "cat," or "dog."

• Algorithms: Common classification algorithms include

logistic regression, decision trees, random forests,

support vector machines (SVM), k-nearest neighbors

(KNN), and deep neural networks.

• Evaluation : Classification models are evaluated using

metrics like accuracy, precision, recall, F1-score, and

ROC-AUC, depending on the nature of the problem and

the balance between classes.

• Loss Function : Cross-entropy is a commonly used loss

function for training classification models. It measures

the dissimilarity between predicted class probabilities

and actual labels.

• Example Application : Spam email detection, image

classification (e.g., identifying objects in images),

sentiment analysis (classifying text as positive,

negative, or neutral).

Here's an example of classification using

Python code with the scikit-learn library and a

simple decision tree classifier:

#

Import the necessary libraries

from sklearn import tree

import numpy as np

#

Sample input data (features)

X = np.array([[i, 2], [2, 3], [3, 4], [4, 5], [5, 6]])

#

Corresponding class labels

y = np.array([0, 1, 0, 1, 0])

#

# Example binary classification labels

Create a decision tree classifier model

model = tree.DecisionlreeClassifierO

#

Fit the model to the data (training)

model.fit(X, y)

#

Make predictions for new data

X.new = np.array([[3, 3]])

prediction = model.predict(X.new)

# Print the prediction

if prediction == 0:

print("The input belongs to Class 0")

else:

print("The input belongs to Class 1")

2.Regression:

• Objective : Regression is also a supervised

learning task, but its goal is to predict a

continuous numeric output or target variable. It's

used for tasks like predicting stock prices, house

prices, temperature, and more.

• Output : The output of a regression model is a

continuous value. For example, in predicting house

prices, the output might be a price in dollars.

• Algorithms : Common regression algorithms

include linear regression, polynomial regression,

Salary

decision trees, support vector regression (SVR),

and various neural network architectures.

• Evaluation : Regression models are evaluated

using metrics like mean squared error (MSE),

mean absolute error (MAE), root mean squared

error (RMSE), and R-squared (coefficient of

determination).

• Loss Function : Mean squared error (MSE) is a

widely used loss function for training regression

models. It measures the average squared

difference between predicted and actual values.

• Example Application : Predicting house prices

based on features like square footage, number of

bedrooms, and location; forecasting stock prices;

estimating temperature based on historical data.

Here's an example of regression using Python code

with the scikit-learn library and a simple linear

regressionalgorithm:______________________

# Import the necessary libraries

from sklearn import linear_model

import numpy as np

# Sample input data (features)

X = np.array([[l], [2], [3], L4], [5]])

# Corresponding output data (numeric values)

y = np.array([2, 4, 5, 4, 5])

# Create a linear regression model

model = linear_model.LinearRegression()

# Fit the model to the data (training)

model.fit(X, y)

# Make predictions for new data points

X_new = np.arrayf[[6]])

prediction = model.predict(X_new)

# Print the prediction

print("Prediction for X_new:", prediction)

Regression

• Linear Regression

• Cost Function for Linear Regression (Squared Error)

• Gradient Descent or Stochastic Gradient Descent

• Batch Gradient Descent

• Batch SGD Vs Minibatch SGD Vs SGD

• Explain Briefly Batch Gradient Descent, Stochastic

Gradient Descent, and Mini-batch Gradient Descent?

List the Pros and Cons of Each.

• Polynomial Regression

• Overfitting and Underfitting

# In supervised learning, the model is trained on a labeled

dataset, where each data point is associated with a label.

The goal of supervised learning is to learn a mapping from

input data to output labels.

There are several types of classifiers that can be used in

supervised learning. Some of the most common classifiers

include:

1. Naive Bayes classifier

2. Linear Regression

3. Neural Networks

Type of regression

Linear

Regression

?

Polynomial Regression

Sup port Vector

Regression

Types

of

Regression

Decision tree

Regression

Random Forest

Regression

Ridge Regression

7

Lasso Regression

Logistic

Regression

❖ LINEAR REGRESSION:

• Cost Function for linear regression(squared

error)

• Gradient descent or stochastic gradient

descent

• Adam algorithm (adaptive moment

estimation)

• Feature scaling

• Batch gradient descent

1.linear regression:

linear regression is a way to

understand and predict how one variable is influenced by

another by finding the best-fitting straight line through the

data points. It's a fundamental tool in statistics and data

analysis.

•

Linear regression is a statistical method used to find a

relationship between two variables: one is the

"independent variable" (often called the predictor), and

the other is the "dependent variable" (often called the

outcome).

Some key point are:

1. Basic Idea: Linear regression helps us understand

how changes in one variable are related to changes

in another. It's like trying to find a line that best fits

the data points on a graph.

2. Line of Best Fit: The goal is to find a straight line

that comes as close as possible to all the data

points. This line is the "line of best fit."

3. Equation: The equation of this line is in the form: Y

= mx + b, where:

• Y is the outcome you want to predict.

• x is the predictor variable.

• m is the slope of the line (how steep it is).

• b is the intercept (where the line hits the Y-axis).

Slope (m): The slope tells you how much Y is expected to

change for a one-unit change in x. If it's positive, as x goes

up, Y goes up; if it's negative, as x goes up, Y goes down.

4. Prediction: Linear regression can be used for prediction.

If you have a new value of x, you can plug it into the

equation to predict what Y is likely to be.

5. Errors: Linear regression accounts for errors or the

differences between the predicted values and the actual

values. The goal is to minimize these errors, making the line

a good fit for the data.

6. R-Squared: This is a number between 0 and 1 that tells

you how well the line fits the data. A higher R-squared

means a better fit.

7. Assumptions: Linear regression assumes that the

relationship between the variables is linear (forming a

straight line), and it assumes that the errors are normally

distributed.

# The equation for a simple linear regression model is:

X+E

*

Y=bo+b1

Let's break down this equation in detail:

• Y: This represents the dependent variable

• X: This represents the independent variable

• b0: This is the y-intercept, also known as the constant

term. It represents the value of Y when X is equal to 0.

In other words, it's the predicted value of Y when there

is no effect of X.

• b1: This is the slope of the line. It represents the

change in Y for a one-unit change in X.

• e (epsilon): This represents the error term or residual.

Linear Regression

linear regression represented by the equation h0(x)

= 00 + 01x1 + 02x2:

1. Hypothesis

Function (h0(x)): The

hypothesis function

represents the linear

relationship between the

input features (x1 and x2)

and the predicted output.

2.

00, 01, and 02:

These are the parameters

(coefficients) of the linear

regression model. 00 is the

intercept (bias term), 01

represents the weight for

the first feature (x1), and

02 represents the weight

for the second feature (x2).

These parameters are

learned during the training

process to minimize the

prediction error.

3. x1 and x2: These are

the input features or

independent variables. In a

linear regression model,

you have multiple features,

but in this equation, we

focus on x1 and x2.

4. Prediction: The

equation allows you to

make predictions for a

given set of input features

(x1 and x2). You plug these

features into the equation,

and the result h0(x)

represents the predicted

output.

5. Linear Relationship:

Linear regression assumes

a linear relationship

between the features and

the output. This means that

the predicted output is a

linear combination of the

features, and the model

tries to find the best linear

fit to the data.

6. Training: During the

training phase, the model

adjusts the parameters (00,

01, and 02) to minimize the

difference between the

predicted values (h0(x))

and the actual target

values in the training

dataset. This process is

typically done using a cost

function and optimization

techniques like gradient

descent.

7. Bias Term

(Intercept): 00 represents

the bias term or intercept.

It accounts for the constant

offset in the prediction,

even when the input

features are zero.

8. Gradient Descent:

Gradient descent is often

used to find the optimal

values of 90, 91, and 92 by

iteratively updating them in

the direction that reduces

the cost (prediction error).

9. Cost Function: The

cost function quantifies

how well the model's

predictions match the

actual target values. The

goal is to minimize this cost

function during training.

10. Least Squares: In

the context of linear

regression, the method of

least squares is commonly

used to find the optimal

parameters (90, 91, 92) by

minimizing the sum of

squared differences

between predicted and

actual values.

In summary, linear regression is a simple but powerful

algorithm for modeling and predicting continuous numeric

values. It relies on finding the best-fit linear relationship

between input features and the output, represented by 90,

91, and 92.

Cost Function for Linear Regression

(Squared Error)

Cost Function

In order to implement linear regression, we need to

first define the cost function.

• The cost function tells us how well the model is

doing, so we can improve it further.

•

Just a bit of context and recap before we dive into what

the cost function is:

Model: ( f ) function created by our learning

algorithm represented as f(x)=wx+b for linear

regression.

• w,b here are called parameters, or coefficients,

or weights. These terms are used interchangeably.

• Depending on what the values of w,b are our

function changes.

•

Lets break down what each of these terms mean here in the

formula above.

It takes the prediction y^(y cap) and compares it to

the target y by taking y^-y ,This difference is called

error, aka how far off our prediction is from the target.

• Next, we will compute the square of this error. We will

do this because we will want to compute this value

from different training examples in the training set.

• Finally, we want to measure the error across the entire

training set. Thus, we will sum up the squared error.

• To build a cost function that doesn’t automatically get

bigger as the training set size gets larger by

convention, we will compute the average squared error

instead of the total squared error, and we do that by

dividing by m like this.

•

The last part remaining here is that by convention, the

cost function that is used in ML divides by 2 times m .

This extra division by 2 is to make sure our later

calculations look neater, but the cost function is still

effective if this step is disregarded.

• J(w,b) is the cost function and is also called the

squared error cost function since we are taking the

squared error of these terms.

• The squared error cost function is by far the most

commonly used cost function for linear regression, and

for all regression problems at large.

• Goal: Find the parameters w or w,b that result in the

smallest possible value for the cost function J

•

* We will use batch gradient descent here; gradient descent

and its variations are used to train, not just linear

regression, but other more common models in AI.

Types of a cost function in linear

regression

In linear regression, different cost functions are used to

measure how well a model's predictions match the actual

data. Here are some common types of cost functions in

linear regression:

• Mean Error (ME): This cost function calculates the

average of all errors by simply finding the difference

between the predicted values (Y') and the actual values

(Y). Since errors can be positive or negative, they may

cancel each other out, resulting in an average error of

zero. It's not often recommended but forms the basis

for other cost functions.

• Mean Squared Error (MSE): MSE is a widely used cost

function. It measures the average of the squared

differences between predicted and actual values. By

squaring the errors, negative errors don't cancel out

positive ones. The formula for MSE is:

Where:

Yi: Actual value

Yi: Predicted value from the regression model

N: Number of data points

Salary of Employee

• Mean Absolute Error (MAE): MAE measures the

average absolute difference between predicted and

actual values. It considers all individual variances

equally and is useful for understanding the magnitude

of errors without regard to their direction. The formula

for MAE is:

Where:

Yi: Actual value

Yi: Predicted value from the regression model

N: Number of data points

• Root Mean Squared Error (RMSE): RMSE is the square

root of the mean of the squared errors. It's a measure

of the error between observed (actual) values and

predicted values. The formula for RMSE is:

RMSE =

(Si - Oi)2

Where:

Yi: Actual value

Yi: Predicted value from the regression model

N: Number of data points

In simpler terms, these cost functions help quantify how well

a regression model is performing. MSE and RMSE give more

weight to larger errors, while MAE treats all errors equally.

Choose the one that best suits your problem and the

importance of different errors in your analysis.

Here's a Python code example of how to

implement the cost function for linear

regression using squared error:

import numpy as np

# Define the actual target values (ground truth)

actual.values = np.array([2, 4, 5, 4, 5])

# Define the predicted values from your linear regression model

predicted_values = np.array([1.8, 3.9, 4.8, 4.2, 5.1])

# Calculate the squared error for each data point

squared.errors = (actual.values - predicted.values) ** 2

# Calculate the mean squared error (MSE)

mse = np.mean(squared.errors)

print("Mean Squared Error (MSE):", mse)

In this code:

actual_values represents the actual target values or

ground truth for your dataset.

• predicted_values represents the predicted values

generated by your linear regression model.

• squared_errors calculates the squared difference

between each actual and predicted value.

• mse computes the mean squared error by taking the

average of all the squared errors.

•

The mean squared error is a common metric used to assess

the performance of a linear regression model. Lower values

of MSE indicate a better fit of the model to the data,

meaning that the model's predictions are closer to the

actual target values.

Naive Bayes

Naive Bayes is a simple yet effective classification algorithm

that leverages Bayes' theorem and the assumption of

conditional independence among features. It is widely used

in text-related tasks and serves as a valuable tool for many

classification problems, especially when data is high­

dimensional and computational efficiency is crucial.

1. Probabilistic Classification: Naive Bayes is a

probabilistic classification algorithm that

assigns class labels to instances based on the

calculated probabilities. It estimates the

probability that a given instance belongs to

each class and selects the class with the

highest probability.

2. Bayes' Theorem: Naive Bayes is based on

Bayes' theorem, a fundamental concept in

probability theory. Bayes' theorem allows us to

update our beliefs about the probability of a

particular event based on new evidence or

information.

3. Independence Assumption:

• The "Naive" in Naive Bayes comes from the

assumption of independence among features or

attributes.

• It assumes that all features are conditionally

independent given the class label.

• This simplifying assumption makes calculations

tractable and efficient, though it may not hold true in

all real-world scenarios.

4. Formula:

• The Naive Bayes classification formula can be written

as:

P(y | X) = (P(X | y) * P(y)) / P(X)

Where:

• P(y | X) is the posterior probability of class y given

features X.

• P(X | y) is the likelihood of observing features X given

class y.

• P(y) is the prior probability of class y.

• P(X) is the evidence, the probability of observing

features X across all classes.

5. Classification Process:

• To classify a new data point:

Calculate the prior probabilities P(y) for each

class in the training data.

2. Estimate the likelihoods P(X | y) for each

feature in each class.

3. Apply Bayes' Theorem to compute the

posterior probabilities P(y | X) for each class.

4. Select the class with the highest posterior

probability as the predicted class.

1.

6. Conditional Independence: The "naive"

assumption in Naive Bayes is that the features used for

classification are conditionally independent, meaning

that the presence or absence of a particular feature is

assumed to be independent of the presence or absence

of other features, given the class label. This simplifies

the calculation of probabilities.

7. Feature Vector: Input data is represented as a

feature vector, where each feature corresponds to

some attribute or characteristic of the data. These

features are used to make predictions.

8. Types of Naive Bayes:

• Multinomial Naive Bayes: Commonly used for text

classification tasks, where features represent the

frequency of words or tokens in documents.

• Gaussian Naive Bayes: Suitable for continuous data

where features are assumed to follow a Gaussian

(normal) distribution.

• Bernoulli Naive Bayes: Appropriate for binary data,

where features are either present (1) or absent (0),

often used in spam detection.

9. Parameter Estimation: Naive Bayes uses statistics

from the training data to estimate probabilities. These

statistics include prior probabilities (the probability of

each class) and conditional probabilities (the

probability of each feature given the class).

10. Text Classification: Naive Bayes is particularly

popular in text classification tasks, such as spam email

detection, sentiment analysis, and document

categorization, due to its simplicity and effectiveness

with high-dimensional data.

11. Training and Prediction: Naive Bayes is

computationally efficient and has a relatively fast

training and prediction process, making it suitable for

large datasets.

12. Independence Violation: While Naive Bayes

assumes conditional independence among features,

this assumption is not always true in real-world data. In

practice, it may still perform well, but it's essential to

be aware of this limitation.

13. Applications: Besides text classification, Naive

Bayes is also used in applications like spam filtering,

document categorization, recommendation systems,

and medical diagnosis.

Prior probabilities

• Prior probabilities are like starting points in figuring

out if an email is "spam" or "not spam." They are like

initial guesses about the chances of an email being

spam before we look at the email's content. These

initial guesses are important because they affect our

final decision.

• To make this decision, we use a formula that combines

our initial guess (prior probability) with the evidence

from the email's content (class-conditional probability).

It's like giving more importance to what's in the email

while also considering what we originally thought.

• This formula helps us calculate the most likely label,

either "spam" or "not spam." The final equation for this

process can look like this:

Final Decision = Prior Probability x Class­

Conditional Probability

Or in a more commonly used way:

Final Decision=log(Prior Probability)+log(Class-Conditional

Probability)

In simple terms, it's about combining our initial hunch with

the email's content to decide if it's spam or not. This is a

basic idea behind a technique called Naive Bayesian

classification.

Evaluating your Naive Bayes

classifier

A confusion matrix is like a chart that helps you see how

well your classifier is doing. It compares the actual values

with what your classifier predicted. The rows in this chart

usually show the actual values, while the columns show the

predicted values.

Confusion matrix

Predicted classes

For example, if you were trying to predict numbers from 0 to

9 in images, you'd have a big chart with 10 rows and 10

columns. Each cell in the chart tells you how many times

your classifier got it right or got it wrong. So, if you want to

know how often your classifier mixed up the number 4 with

the number 9, you'd just look at the cell where the 4th row

and 9th column meet.

Confusion matrix example

Predictions

Types of Naive Bayes

classifiers

1. Gaussian Naive Bayes (GaussianNB): This one

is great when you have data that follows a normal,

bell-shaped curve, like continuous numbers. It

figures things out by calculating the average and

spread of each category.

2. Multinomial Naive Bayes (MultinomialNB):

When you're dealing with data that's counted and

falls into discrete categories, like word frequencies

in text (common in things like spam detection or

text classification), this type of Naive Bayes is your

friend.

3. Bernoulli Naive Bayes (BernoulliNB): If your

data is really simple, like just having two options,

such as "yes" or "no," or "1" or "0," then

BernoulliNB is handy. It's often used in problems

with binary outcomes.

Example

Certainly! Let's simplify the explanation of Bayes' formula

and its application with an example:

• Imagine we have an object, let's call it "Object X," and

we want to classify it into different classes, like "Class

1," "Class 2," and so on (up to "Class K").

• Now, we have some information about Object X, which

we'll represent as "n." This information could be

anything relevant to our classification task.

Bayes' Formula helps us calculate the probability that Object

X belongs to a specific class, let's say "Class i," given this

information "n." In simple terms, we want to know how likely

it is that Object X is in Class i based on what we know.

Here's how we do it step by step:

1. Prior Probability (P(ci)): First, we estimate how

likely each class is without considering any

information ("n"). If we don't have any specific

information, we might assume that all classes are

equally likely (so each class has a 1/K chance,

where K is the number of classes).

2. Likelihood (P(n|ci)): Next, we determine how

likely we would see the information "n" if Object X

truly belongs to Class i. This part depends on the

specific problem and data we have.

3. Evidence (P(n)): To complete the equation, we

calculate the overall probability of observing the

information "n" regardless of the class. This

involves considering the likelihood of "n" for each

class and the prior probability of each class.

Once we have these probabilities, we can use Bayes'

Formula to find the probability of Object X being in Class i

given the information "n":

P(ci|n) = P(n|ci) * P(ci) / P(n)

So, in practical terms, we're assessing how well the

information "n" matches with each class and combining it

with our prior belief about the classes to make a more

informed decision.

Keep in mind that the actual calculations will depend on the

specific problem and data, but this formula provides a

structured way to estimate the probability of class

membership based on available information.

Gradient Descent

• Objective: Gradient descent is a method used to find

the optimal values of parameters (in this case, 'w' and

'b') that minimize a cost function. It's commonly

applied in machine learning to train models like linear

regression.

• Cost Function: In linear regression, we aim to

minimize the mean squared error (MSE) between

predicted and actual values.

• Initialization: We start with initial values for 'w' and

'b,' often set to 0.

• Training Data: We use a dataset with features (in

this case, spending on radio ads) and corresponding

target values (sales units).

• Partial Derivatives: Gradient descent involves

calculating the partial derivatives of the cost function

with respect to 'w' and 'b.'

• Chain Rule: The chain rule is applied to find these

derivatives efficiently.

• Learning Rate (a): A hyperparameter that controls

the step size in each iteration. It influences the

convergence speed and stability.

• Update Rule: We iteratively update 'w' and 'b' using

the computed partial derivatives and the learning rate.

• Epochs: One pass through the entire training dataset

is called an epoch. We repeat this process for multiple

epochs until 'w' and 'b' converge to stable values.

• Convergence: We stop when 'w' and 'b' stop

changing significantly or when a predefined number of

epochs is reached

A convex function can be visualized as a gracefully

rolling landscape, featuring a solitary serene valley at

its heart, where a global minimum resides in splendid

isolation. On the flip side, a non-convex function

resembles a rugged terrain, with numerous hidden

valleys and peaks, making it unwise to apply the

gradient descent algorithm. Doing so might lead to an

entrapment at the first enticing valley encountered,

missing the possibility of reaching a more optimal,

remote low point.

In this context, gradient descent helps us find the best 'w'

and 'b' values to create a linear regression model that

predicts sales units based on radio advertising spending. It

iteratively adjusts these parameters to minimize prediction

errors, as measured by the MSE, and it continues this

process until the model is sufficiently accurate.

# Gradient Descent (GD) is an optimization algorithm used

to minimize a cost or loss function by iteratively updating

model parameters based on the gradients of the cost

function.

★ w: Represents the weight or slope of the linear

regression model.

★ b: Represents the bias or intercept of the linear

regression model.

★ J(w, b): Denotes the cost function, which measures

how well the model fits the data.

★ a (alpha): Represents the learning rate, a

hyperparameter controlling the step size in the

optimization process.

★ Objective: We aim to find the values of w and b that

minimize the cost function J(w, b). For linear regression,

a common cost function is the Mean Squared Error

(MSE):

J(w, b) = (1/2m) * Z(yi - (wxi + b))2

Where:

★ m: The number of training examples.

# Here are some important key points about Gradient

Descent along with Python code to illustrate the

concept:

• Objective: GD aims to find the values of model

parameters that minimize a cost function (often

denoted as J or L).

• Gradient Calculation: It computes the gradient of

the cost function with respect to each parameter. This

gradient represents the direction of the steepest

increase in the cost function.

• Parameter Update: GD updates the model

parameters in the opposite direction of the gradient to

reduce the cost.

Code: parameter = parameter - learning_rate *

gradient

• Iteration: GD repeats the gradient calculation and

parameter update process for a fixed number of

iterations or until convergence (when the change in the

cost function becomes very small).

Code: import numpy as np

# Generate synthetic data

np.random.seed(0)

X = 2 * np.random.rand(100, 1)

y = 4 + 3 * X + np.random.randn(100, 1)

# Initialize model parameters

theta = np.random.randn(2, 1) # Random initialization

learning_rate = 0.1

iterations = 1000

# Perform Gradient Descent

for iteration in range(iterations):

gradients = -2 * X.T.dot(y - X.dot(theta)) # Calculate

gradient

theta -= learning_rate * gradients # Update parameters

# The 'theta' values after convergence represent the

optimized model parameters

print("Optimized theta:", theta)

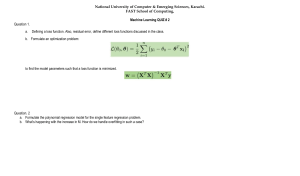

* * HOW DOES THE GRADIENT DESCENT ALGOTHM

WORK:

Hypothesis:

he(x) — 90 + 9xx

Parameters:

0o, #i

m

Cost Function:

2

(M3^) - J/w)

J(#o,0i) =

i=l

Goal:

minimize J(0o,0i)

00,01

Cost Function (J): A mathematical function that

quantifies how far off the model's predictions are

from the actual target values. The goal is to minimize

this function.

Model Parameters (0): These are the variables that

the algorithm adjusts to minimize the cost function.

In the context of linear regression, for example, 0

represents the weights and biases of the model.

Here's a simple Python code example of

gradient descent for a univariate linear

regression problem:

import numpy as np

# Sample data

X = np.array([l, 2, 3, 4, 5])

y = np.array([2, 4, 5, 4, 5])

# Initial guess for parameters

thetaO = 0.0

thetal = 0.0

# Learning rate

alpha = 0.01

# Number of iterations

num.iterations = 1OOO

# Gradient Descent

for _ in range(num_iterations):

# Calculate predictions

predictions = thetaO + thetal * X

#

Calculate predictions

predictions = thetaO + thetal * X

#

Calculate the gradient of the cost function

gradient.thetaO = np.mean(predictions - y)

gradient.thetal = np.mean((predictions - y) * X)

#

Update parameters using the learning rate and gradients

thetaO -= alpha * gradient.thetaO

thetal -= alpha * gradient.thetal

#

Final parameter values

print("Optimal thetaO:", thetaO)

print("Optimal thetal:", thetal)

Stochastic Gradient Descent

Stochastic Gradient Descent introduces randomness

and processes data in smaller batches, leading to

faster convergence and improved generalization.

However, it requires careful tuning of

hyperparameters and may exhibit more erratic

behavior compared to standard Gradient Descent.

1. Batch Size:

• SGD: It processes a single randomly selected

training example or a small random subset (mini­

batch) of training examples in each iteration.

• Batch Gradient Descent: It processes the entire

training dataset in each iteration.

2. Learning Rate:

• SGD: Often uses a smaller learning rate

Batch Gradient Descent

Uses the entire training dataset for gradient calculations.

Pros:

Stable convergence to the minimum.

Suitable for small to moderately sized datasets.

Cons:

Slow convergence, especially with large datasets.

Stochastic Gradient Descent (SGD):

Selects a random training instance for each iteration to

compute the gradient.

Pros:

• Faster training, especially with large datasets.

• Potential to escape local minima due to its

randomness.

Cons:

• Less stable convergence, as it oscillates around the

minimum.

• Final parameters may not be optimal due to

randomness.

• Requires tuning of the learning rate and a welldesigned training schedule.

Mini-batch Gradient Descent:

Computes gradients on randomly selected subsets (mini­

batches) of the training data.

Pros:

• Balances stability and efficiency.

• Utilizes hardware optimizations, especially with GPUs.

• Helps escape local minima better than Batch GD.

Cons:

• May still struggle to escape local minima compared to

SGD.

• The choice of mini-batch size can impact convergence

and may require tuning.

Key Differences:

• Batch GD uses the entire dataset, SGD uses single

instances, and Mini-batch GD uses small random

subsets.

• Batch GD provides stable convergence but is slow,

while SGD is fast but less stable. Mini-batch GD

balances speed and stability.

Batch GD can't handle large datasets, while SGD and Mini­

batch GD are suitable for such cases.

SGD requires tuning of learning rate and schedules for

effective convergence.

Mini-batch GD can benefit from hardware optimizations and

is widely used in deep learning.

Polynomial Regression

1. Polynomial Regression is a type of regression

analysis used in machine learning and statistics to

model the relationship between a dependent

variable (target) and one or more independent

variables (predictors) when the relationship is not

linear but follows a polynomial form. Here are the

key points to understand about Polynomial

Regression:

2. Polynomial Equation: In Polynomial Regression, the

relationship between the variables is represented

by a polynomial equation of a certain degree. The

equation takes the form:

Y=p0+p1X+p2X + p3X +... + PnX^n

Where:

•

•

•

Y: dependent variable.

X: independent variable.

p0, pl, p2, ..., pn are the coefficients of the

polynomial terms.

•

n is the degree of the polynomial, which determines

the number of terms.

3. Degree of the Polynomial: The degree of the

polynomial determines the complexity of the

model. A higher degree allows the model to fit the

data more closely but can lead to overfitting, where

the model captures noise in the data rather than

the underlying pattern. Choosing the right degree is

a crucial step in Polynomial Regression.

4. Linear vs. Non-linear Relationships: Polynomial

Regression is used when the relationship between

the variables is not linear, meaning that a straight

line (as in simple linear regression) does not

adequately capture the pattern in the data.

5. Overfitting: As mentioned earlier, high-degree

polynomials can lead to overfitting. Overfitting

occurs when the model fits the training data

extremely well but fails to generalize to new,

unseen data.

6. Model Evaluation: Just like linear regression,

Polynomial Regression models are evaluated using

metrics like Mean Squared Error (MSE), R-squared,

or others to assess how well they fit the data.

7. Visualization: Plotting the data points along with the

polynomial curve can be a useful way to

understand how well the model fits the data and

whether it captures the underlying trend accurately.

8. Applications: Polynomial Regression can be applied

in various fields, including finance, economics,

physics, biology, and engineering, where

relationships between variables are often nonlinear.

How Does it Work?

In Python, you can easily find the relationship between data

points and create a regression line without delving into

complex math. Let's break it down with a practical example:

Imagine you've recorded data from 18 cars passing a

tollbooth. You've noted the speed of each car and the time

of day (in hours) when they passed.

On a chart, the x-axis (horizontal) represents the

hours of the day.

• The y-axis (vertical) shows the speed of the cars.

•

This setup allows you to visually analyze and find patterns in

the data, like how speed changes throughout the day. With

Python, you can use specific methods to do this analysis and

draw a regression line without needing to handle the

mathematical formulas yourself.

Example

Start by drawing a scatter plot:

import matplotlib.pyplot as pit