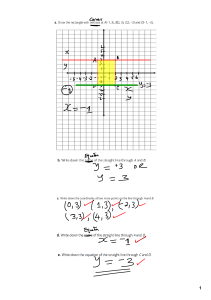

Data Mining and Machine Learning (proctored course) (CS 4407) written 4. K-NEAREST NEIGHBORS EXERCISE – ASSIGNMENT UNIT 4 Imaging objects in classes A and B having two numeric attributes/properties that we map to X and Y Cartesian coordinates so that we can plot class instances (cases) as points on a 2-D chart. In other words, our cases are represented as points with X and Y coordinates (p(X,Y)). Our simple classes A and B will have 3 object instances (cases) each. Class A will include points with coordinates (0,0), (1,1), and (2,2). Class B will include points with coordinates (6,6), (5.5, 7), and (6.5, 5). In R, we can write down the above arrangement as follows: # Class A training object instances (cases) A1=c(0,0) A2=c(1,1) A3=c(2,2) # Class B training objects instances (cases) B1=c(6,6) B2=c(5.5,7) B3=c(6.5,5) How are the classification training objects for class A and class B arranged on a chart? Here is how: The knn() function is housed in the class package and is invoked as follows: knn(train, test, cl, k) where train is a matrix or a data frame of training (classification) cases. test is a matrix or a data frame of test case(s) (one or more rows) cl is a vector of classification labels (with the number of elements matching the number of classes in the training data set) k is an integer value of closest cases (the k in the k-Nearest Neighbor Algorithm); normally, it is a small, odd integer number. Now, when we try out classification of a test object (with properties expressed as X and Y coordinates), the kNN algorithm will use the Euclidean distance metric calculated for every row (case) in the training matrix to find the closest one for k=1 and the majority of closest ones for k > 1 (where k should be an odd number). • Construct the cl parameter (the vector of classification labels). To use the knn() method, we must provide the test case(s), which correspond to a point roughly in the midpoint of the distance between A and B. Assume test=c(4,4) We now have everything in place to execute knn(). Let's try it again with k = 1 (classification based on closeness to a single neighbor). We will utilize the summary() method to provide more informative summaries of test object categorization results, which can operate polymorphically on its input parameter depending on its type. In our scenario, the result of the knn() function is used as the input argument to the summary() function. Calculate summary() function of knn() and show the results. So which means the test case belongs to the B class. Now define. test=c(3.5, 3.5) Visually, this test case point looks to be closer to the cluster of the A class cases (points) • Calculate summary() function of knn() and show the results • Which class does the test case now belong to? The test case belongs to the A class. Let's boost the number of nearest neighbors who vote during the categorization phase. The test case belongs to the B class. The last part is to build a matrix of test cases containing four points: (4,4) - should be classified as B (3,3) - should be classified as A (5,6) - should be classified as B (7,7) - should be classified as B As a result, we should expect three examples (points) from the B class and one from the A class in our test batch. Create test as a two-column matrix holding our test spots' X and Y coordinates. Run the preceding knn summary command once more: And I get an error Reference: James, G., Witten, D., Hastie, T., & Tibshirani, R. (2013). An Introduction to Statistical Learning with Applications in R. New York, NY: Springer. Read Chapter 4 available at https://www.stat.berkeley.edu/users/rabbee/s154/ISLR_First_Printing.pdf Skand, K. (2017, October 8). kNN(k-Nearest Neighbor) algorithm in R. Retrieved from https://rstudio-pubsstatic.s3.amazonaws.com/316172_a857ca788d1441f8be1bcd1e31f0e875.html King, W. B. Logistic Regression Tutorial. This tutorial demonstrates the use of Logistic Regression as a classifier algorithm. http://ww2.coastal.edu/kingw/statistics/R-tutorials/logistic.html