CS 439

Final Exam Review

Spring 2024

5/8/2024

12:00-3:00 PM

Hill 114 - Lecture Hall

Logistics

The final exam will be given in person.

You are allowed up to one page of notes; both sides are OK.

Write your NetID on the notes and hand them in after the test.

Exam Composition

• Open ended questions. Short responses.

• Graduating seniors must perform significantly better

if other indicators are low

• 6 questions – multiple parts

• 3-hour exam

• The material will mostly come from the post-midterm topics:

• Linear Regression

• Gradient descent

• Logistic Regression

• Feature Engineering

• Classification and regularization

• Unsupervised Learning

• Deep Learning

• Clustering

• Recommender systems

What general skills will be tested?

• SVD, PCA, interpretations, applications

• Linear regression, cost functions, gradient descent

• Logistic regression, non-linearity with sigmoid, ReLU

• Interpretation of linear models

• Bias-variance tradeoffs, regularization

• Choosing a cost function – L1, L2, Huber

• Maximum likelihood estimators

• How to minimize the cost function and find optimal

parameters using differentiation/gradient descent?

• Memorization versus generalization

• Foundations of neural networks

• Computing general functions using NNs

• Recommender systems

• AND MORE …..

PCA

Capturing Variance:

Each principal component is associated with an eigenvalue, representing the variance captured

by that component.

The first principal component captures the most variance, followed by the second, and so on.

By keeping only the top few principal components, we can retain most of the important

information in the data while discarding redundant or irrelevant information.

Orthogonality:

Principal components are mutually orthogonal, meaning they are perpendicular to each other.

This property ensures that the information captured by each component is uncorrelated with the others.

Geometric Interpretation:

Geometrically, principal components represent the axes of a new coordinate system.

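The centered data points are projected onto these axes. Keeping all components is a rotation that preserves the distances between points exactly; keeping only the top few preserves them approximately.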

This allows us to visualize the data in a more meaningful way, focusing on the directions that

are most relevant.
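The PCA properties above can be checked on a small example. The sketch below is a minimal illustration for 2-D data only, using the closed-form eigen-decomposition of the 2x2 covariance matrix; the data points and the function name `pca_2d` are hypothetical and not from the lecture.

```python
import math

def pca_2d(points):
    """PCA for 2-D points via the eigen-decomposition of the 2x2 covariance matrix."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    centered = [(x - mean_x, y - mean_y) for x, y in points]
    # Entries of the sample covariance matrix [[sxx, sxy], [sxy, syy]].
    sxx = sum(x * x for x, _ in centered) / (n - 1)
    syy = sum(y * y for _, y in centered) / (n - 1)
    sxy = sum(x * y for x, y in centered) / (n - 1)
    # Closed-form eigenvalues of a symmetric 2x2 matrix, largest first.
    half_trace = (sxx + syy) / 2
    radius = math.hypot((sxx - syy) / 2, sxy)
    eigenvalues = (half_trace + radius, half_trace - radius)
    # Angle of the direction of maximum variance (first principal component).
    angle = 0.5 * math.atan2(2 * sxy, sxx - syy)
    pc1 = (math.cos(angle), math.sin(angle))
    pc2 = (-math.sin(angle), math.cos(angle))  # perpendicular to pc1
    return eigenvalues, (pc1, pc2), centered

points = [(2.0, 1.9), (0.5, 0.7), (3.1, 3.0), (1.2, 0.9), (2.4, 2.6)]  # hypothetical data
eigenvalues, (pc1, pc2), centered = pca_2d(points)

# Fraction of total variance captured by the first component.
explained = eigenvalues[0] / sum(eigenvalues)
# 1-D representation: project each centered point onto pc1.
scores = [x * pc1[0] + y * pc1[1] for x, y in centered]
print(f"variance explained by PC1: {explained:.3f}")
```

The variance of `scores` equals the first eigenvalue, which is the sense in which an eigenvalue "represents the variance captured" by its component.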

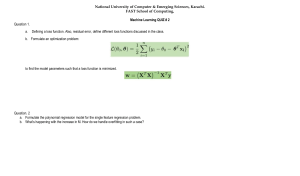

MLE

Find the MLE of the parameter p of a Bernoulli distribution, where the likelihood of a single observation x is L(p|x) = p^x * (1-p)^(1-x)
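For n independent observations the log-likelihood is the sum of x_i log p + (1 - x_i) log(1 - p); setting its derivative to zero gives p̂ = (sum of x_i) / n, the sample mean. The sketch below checks this numerically on a hypothetical sample by maximizing the log-likelihood over a grid of p values.

```python
import math

def bernoulli_log_likelihood(p, xs):
    """Log-likelihood of i.i.d. Bernoulli(p) observations xs (each 0 or 1)."""
    return sum(x * math.log(p) + (1 - x) * math.log(1 - p) for x in xs)

xs = [1, 0, 1, 1, 0, 1, 1, 0, 1, 1]  # hypothetical sample: 7 ones out of 10

# Numerical maximization over a grid of candidate p values in (0, 1).
grid = [i / 1000 for i in range(1, 1000)]
p_hat_grid = max(grid, key=lambda p: bernoulli_log_likelihood(p, xs))

# Closed-form MLE: the sample mean.
p_hat_closed = sum(xs) / len(xs)

print(p_hat_grid, p_hat_closed)
```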

Linear Regression

The Concept

Key ideas in regression

Regression analyzes the relationship between two types of variables:

Dependent variable (Y): The variable you are trying to predict or explain.

Independent variable (X): The variable you believe influences the dependent variable.

Linear regression: Models a linear relationship between the independent and

dependent variables.

Non-linear regression: Models a non-linear relationship, requiring more

complex models and interpretations.

The coefficient of an independent variable indicates its directional impact on

the dependent variable.

The magnitude of the coefficient is the expected change in Y per unit change in X, so magnitudes are comparable across variables only when the features share a scale.

Regression models provide insights into relationships, but they cannot prove

causation.

Results should be interpreted within the context of the data and limitations

of the model.

Extending Linear Regression beyond one variable

Developing Notation for Multivariate Linear Models

Linear Model

Linear in the Parameters

Feature Functions

Squared Loss

Loss Minimization

Gradient Descent for Multivariate Linear Regression

Here alpha is the learning rate, and convergence is declared when the change in error from one iteration to the next is less than some threshold.
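A minimal sketch of batch gradient descent for multivariate linear regression with the stopping rule described above. It assumes the squared-error cost E(Θ) = (1/2m) Σ (Θ·x − y)² and a leading 1 in each row of X for the intercept; the data, function names, and default values are hypothetical.

```python
def predict(theta, x):
    """Linear hypothesis: dot product of parameters and features."""
    return sum(t * xi for t, xi in zip(theta, x))

def squared_error(theta, X, y):
    m = len(X)
    return sum((predict(theta, xi) - yi) ** 2 for xi, yi in zip(X, y)) / (2 * m)

def gradient_descent(X, y, alpha=0.1, threshold=1e-10, max_iters=100000):
    m, n = len(X), len(X[0])
    theta = [0.0] * n
    prev_error = squared_error(theta, X, y)
    for iteration in range(1, max_iters + 1):
        residuals = [predict(theta, xi) - yi for xi, yi in zip(X, y)]
        # Partial derivative of the cost with respect to each theta_j.
        grad = [sum(r * xi[j] for r, xi in zip(residuals, X)) / m for j in range(n)]
        # Simultaneous update of all parameters.
        theta = [t - alpha * g for t, g in zip(theta, grad)]
        error = squared_error(theta, X, y)
        # Converged when the error barely changes between iterations.
        if abs(prev_error - error) < threshold:
            return theta, error, iteration
        prev_error = error
    return theta, prev_error, max_iters

# Hypothetical data generated from y = 1 + 2x; the first column is the bias feature.
X = [[1, 0], [1, 1], [1, 2], [1, 3]]
y = [1, 3, 5, 7]
theta, error, iterations = gradient_descent(X, y)
print(theta, error, iterations)
```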

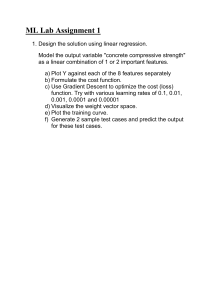

The effect of Learning Rate & convergence

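| alpha | sq_error | iterations | Θ0 | Θ1 | accuracy |
|---|---|---|---|---|---|
| 0.01 | 2.88153 | 71 | 0.29013 | 0.53484 | 10^-4 |
|  | 2.88080 | 92 | 0.29423 | 0.52744 | 10^-5 |
|  | 2.88072 | 114 | 0.29559 | 0.52497 | 10^-6 |
| 0.011 | 2.88147 | 65 | 0.29036 | 0.53444 | 10^-4 |
| 0.001 | 2.8901 | 493 | 0.27558 | 0.56117 | 10^-4 |
| 0.1 | NaN | NaN | NaN | NaN | 10^-4 |
| 0.005 | 2.88251 | 129 | 0.28721 | 0.54014 | 10^-4 |
| 0.02 | NaN | NaN | NaN | NaN | 10^-4 |
| 0.009 | 2.88162 | 78 | 0.28980 | 0.53544 | 10^-4 |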

Reference: Regression Lab

• If alpha is too small, then the

convergence may be slow

• If alpha is too large, error may

not decrease on every iteration

and may not converge

• When choosing alpha, try

• 0.001, 0.01, 0.1, 1

• 0.003, 0.03, 0.3, etc.
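The effect of alpha can be seen on a toy problem. The sketch below is an assumption-laden illustration, not the Regression Lab setup: it minimizes E(Θ) = Θ² (gradient 2Θ) from Θ = 1 and records the error after each step for several learning rates.

```python
def error_trace(alpha, steps=20, theta=1.0):
    """Run gradient descent on E(theta) = theta**2 and return the error after each step."""
    errors = []
    for _ in range(steps):
        theta -= alpha * 2 * theta  # gradient of theta**2 is 2*theta
        errors.append(theta ** 2)
    return errors

traces = {alpha: error_trace(alpha) for alpha in (0.001, 0.01, 0.1, 1.0, 1.1)}

for alpha, errors in traces.items():
    print(f"alpha={alpha}: first error={errors[0]:.4g}, last error={errors[-1]:.4g}")
```

On this toy cost, small values shrink the error slowly, alpha = 0.1 shrinks it quickly, alpha = 1.0 bounces between Θ = 1 and Θ = -1 so the error never decreases, and alpha = 1.1 makes the error grow on every step.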

Question

E(Θ) – The error for given Θ

Which of the following graphs shows a converging

gradient descent algorithm?

[Figure: four plots of E(Θ) against Θ; three are labeled "bad" and one is labeled "good".]

Feature Engineering

Feature Engineering (continued)

Linear in the Parameters

Feature Functions

For Example:

Features:

How to decide on new features

Often a data scientist must design “new” features to get better models

Rules of Design

• Understand and capture domain knowledge

• Feature Extraction: Transform

• One-Hot Encoding

• Introduce polynomial features

• Encoding Cyclical Features

• Dimensionality Reduction

• Feature Importance Analysis

• Improve the expressiveness and complexity of the data
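Two of the design rules above, polynomial features and cyclical encoding, can be sketched in a few lines. The function names and the hour-of-day example are hypothetical; the model stays linear in the parameters because only the inputs are transformed.

```python
import math

def polynomial_features(x, degree):
    """Expand a scalar x into [x, x^2, ..., x^degree]."""
    return [x ** d for d in range(1, degree + 1)]

def encode_cyclical(value, period):
    """Map a cyclical value (e.g. hour of day) onto the unit circle."""
    angle = 2 * math.pi * value / period
    return math.sin(angle), math.cos(angle)

def euclidean(p, q):
    return math.hypot(p[0] - q[0], p[1] - q[1])

print(polynomial_features(2, 3))

# Raw hours 23 and 0 are 23 apart, but on the circle they are neighbours,
# exactly as close as hours 0 and 1.
late, midnight, one = (encode_cyclical(h, 24) for h in (23, 0, 1))
print(euclidean(late, midnight), euclidean(midnight, one))
```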

Encoding Categorical Data

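• Categorical Data

One-hot encoding:

| state | AL | … | CA | … | NY | … | WA | … | WY |
|---|---|---|---|---|---|---|---|---|---|
| NY | 0 | … | 0 | … | 1 | … | 0 | … | 0 |
| WA | 0 | … | 0 | … | 0 | … | 1 | … | 0 |
| CA | 0 | … | 1 | … | 0 | … | 0 | … | 0 |

• Text Data

• Bag-of-words & n-gram models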
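A minimal sketch of one-hot encoding for the state example. The category list here contains only the five states named on the slide (the slide elides the rest), and the helper name `one_hot` is hypothetical.

```python
def one_hot(values, categories):
    """Return one row per value, with a 1 in the column of its category."""
    column = {category: i for i, category in enumerate(categories)}
    rows = []
    for value in values:
        row = [0] * len(categories)
        row[column[value]] = 1
        rows.append(row)
    return rows

categories = ["AL", "CA", "NY", "WA", "WY"]  # abbreviated category list (assumption)
encoded = one_hot(["NY", "WA", "CA"], categories)
for state, row in zip(["NY", "WA", "CA"], encoded):
    print(state, row)
```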

The Feature Matrix

Logistic Regression Model

Notation

Hypothesis output and interpretation

• Let hΘ(x) be the probability: P(y=1|x,Θ)

• Probability that y=1 given x,Θ

• Example:

• suppose x = [1, 0.7, 0.5]

• Compute hΘ(x) for some Θ, say hΘ(x) = 0.8

• “predict” that there is an 80% chance that the patient has a malignant tumor.

• P(y=0|x,Θ) = 1 - P(y=1|x,Θ)
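The example above can be reproduced with a concrete Θ. The parameter values below are an assumption chosen so that the output comes out near 0.8; the slide does not specify Θ.

```python
import math

def hypothesis(theta, x):
    """Logistic hypothesis: P(y=1 | x, theta) = sigmoid(theta . x)."""
    z = sum(t * xi for t, xi in zip(theta, x))
    return 1.0 / (1.0 + math.exp(-z))

x = [1, 0.7, 0.5]          # feature vector from the slide (first entry is the bias feature)
theta = [-1.0, 2.0, 2.0]   # hypothetical parameters

p_malignant = hypothesis(theta, x)   # P(y=1 | x, theta), about 0.80
p_benign = 1 - p_malignant           # P(y=0 | x, theta)
label = 1 if p_malignant >= 0.5 else 0

print(round(p_malignant, 3), round(p_benign, 3), label)
```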

Issues with a linear hypothesis function

• In logistic regression, we prefer outputs between 0 and 1 (why?)

• Then we can decide: if the value is < 0.5, the label is more likely to be 0; otherwise it is more likely to be 1

• A linear hypothesis can lead to outputs outside of the range (0, 1)

• Solution?

• Introduce a non-linear transformation to the hypothesis function

• Next: The Sigmoid function

Non-linearizing the hypothesis function

0 ≤ g(hΘ(x)) = g(Θ^T x) ≤ 1

What are the properties of this function?


ReLU function
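A short sketch of the two non-linearities, with checks of the sigmoid properties asked about above: g(0) = 0.5, g(-z) = 1 - g(z), outputs bounded between 0 and 1, and monotonically increasing. The branch on the sign of z is an implementation choice to avoid overflow for very negative inputs.

```python
import math

def sigmoid(z):
    """g(z) = 1 / (1 + e^(-z)), computed without overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

def relu(z):
    """ReLU(z) = max(0, z): zero for negative inputs, identity otherwise."""
    return max(0.0, z)

for z in (-5.0, -1.0, 0.0, 1.0, 5.0):
    print(f"z={z:+.1f}  sigmoid={sigmoid(z):.4f}  relu={relu(z):.1f}")
```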

Regularization

• A quadratic model could fit the training set well

• might generalize well to new examples

• But a higher order model may fit the training set

“perfectly”

• might not generalize well to new examples

The idea

The solution:

• use the higher order model, but penalize the

higher order parameters a “lot”

• Optimization problem

• Minimize {low order model + λ*(high order

terms) }

• λ, chosen to be a large value, is called the regularization parameter

Regularization

idea

• The degree of the polynomial acts as a natural

measure of the “complexity” of the model

• higher degree polynomials are more complex

(can fit any finite data set exactly)

• fitting the models requires extremely large

coefficients on these polynomials

• Regularization is the notion of keeping weights

small

The Regularization Function R(θ)

R(θ)

Goal: Penalize model complexity

• More features → overfitting …

• How can we control overfitting through θ?

• Proposal: set weights = 0 to remove features

Regularized Loss Minimization

R(θ)

Question: Should we penalize Θ0 as well?
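A minimal sketch of regularized loss minimization, assuming an L2 penalty R(θ) = Σ θ_j² and the cost (1/2m)[Σ (θ·x − y)² + λ R(θ)]. Following common practice, the intercept Θ0 is left out of the penalty here; that choice, the data, and the function names are assumptions for illustration.

```python
def predict(theta, row):
    return sum(t * v for t, v in zip(theta, row))

def fit_regularized(X, y, lam, alpha=0.1, iters=5000):
    """Gradient descent on squared loss plus an L2 penalty that skips theta[0]."""
    m, n = len(X), len(X[0])
    theta = [0.0] * n
    for _ in range(iters):
        residuals = [predict(theta, row) - yi for row, yi in zip(X, y)]
        updated = []
        for j in range(n):
            grad = sum(r * row[j] for r, row in zip(residuals, X)) / m
            if j > 0:  # do not penalize the intercept
                grad += lam * theta[j] / m
            updated.append(theta[j] - alpha * grad)
        theta = updated
    return theta

def penalty(theta):
    return sum(t ** 2 for t in theta[1:])

# Hypothetical data with quadratic features [1, x, x^2].
xs = [-1.0, -0.5, 0.0, 0.5, 1.0]
X = [[1.0, x, x ** 2] for x in xs]
y = [1.1, 0.4, 0.1, 0.6, 0.9]

theta_plain = fit_regularized(X, y, lam=0.0)
theta_reg = fit_regularized(X, y, lam=10.0)
print(penalty(theta_plain), penalty(theta_reg))
```

With λ = 10 the non-intercept weights come out smaller than with λ = 0, which is the "keep weights small" effect described above.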

Unsupervised learning

K-means clustering

Unlabeled data

Finding labels

K-means

Algorithm

Question: What is the asymptotic complexity of this algorithm?

Example of k-means

Consider the eight 2D points in a grid given by (0,0), (0,1), (1,0), (-1,1), (1,2), (-2,1), (2,2), (3,-1).
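A minimal sketch of k-means on these eight points. The slide does not state k or the initial centroids, so k = 2 and the starting centroids (0,0) and (0,1) are assumptions; a different start can give a different clustering. Each iteration costs on the order of n·k distance computations for n points and k clusters.

```python
def squared_distance(p, q):
    return (p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2

def k_means(points, initial_centroids, max_iters=100):
    centroids = list(initial_centroids)
    labels = None
    for _ in range(max_iters):
        # Assignment step: each point goes to its nearest centroid.
        new_labels = [
            min(range(len(centroids)), key=lambda c: squared_distance(p, centroids[c]))
            for p in points
        ]
        if new_labels == labels:
            break  # assignments stopped changing: converged
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for c in range(len(centroids)):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:  # keep the old centroid if a cluster is empty
                centroids[c] = (
                    sum(x for x, _ in members) / len(members),
                    sum(y for _, y in members) / len(members),
                )
    return labels, centroids

points = [(0, 0), (0, 1), (1, 0), (-1, 1), (1, 2), (-2, 1), (2, 2), (3, -1)]
labels, centroids = k_means(points, [(0, 0), (0, 1)])  # assumed starting centroids
print(labels)
print(centroids)
```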

Hierarchical Clustering

example

• Cluster (0,0), (0,1), (1,0), (-1,1), (1,2), (-2,1), (2,2), (3,-1) into 2 clusters based on Manhattan distance. What is the complexity of the algorithm?
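A minimal sketch of agglomerative (bottom-up) clustering for this example. The slide does not name a linkage rule, so single linkage (distance between the closest pair of points) is assumed here; complete or average linkage may merge differently. This naive version rescans all point pairs before every merge, so it does roughly n³ work for n points.

```python
def manhattan(p, q):
    return abs(p[0] - q[0]) + abs(p[1] - q[1])

def single_linkage(points, num_clusters):
    """Repeatedly merge the two closest clusters until num_clusters remain."""
    clusters = [[p] for p in points]  # start with every point in its own cluster
    while len(clusters) > num_clusters:
        best = None
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                # Single linkage: distance between the closest pair of members.
                d = min(manhattan(p, q) for p in clusters[a] for q in clusters[b])
                if best is None or d < best[0]:
                    best = (d, a, b)
        _, a, b = best
        clusters[a] = clusters[a] + clusters[b]
        del clusters[b]
    return clusters

points = [(0, 0), (0, 1), (1, 0), (-1, 1), (1, 2), (-2, 1), (2, 2), (3, -1)]
clusters = single_linkage(points, 2)
for cluster in clusters:
    print(cluster)
```

Under single linkage the point (3,-1) ends up alone, because its nearest neighbour is 3 away while every other merge happens at distance 1 or 2.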

Neural Networks for Deep

Learning (DL)


Feature Learning

Hypothesis function

Set of non-linear features

ML Challenge: good performance ~ “good” features

DL Objective: algorithm will automatically “learn” the features


Architecture of a basic neural network (NN)

Question: What is the purpose of the hidden layer? Answer: to learn new features.


Question: how many total parameters need to be optimized in this network (considering bias)?
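For a fully connected network, each layer contributes (inputs + 1) × outputs parameters, the +1 being the bias. The sketch below counts them for a hypothetical 3-4-1 architecture; the network in the slide's figure is not recoverable here, so substitute its layer sizes.

```python
def count_parameters(layer_sizes):
    """Total weights and biases in a fully connected network."""
    return sum(
        (n_in + 1) * n_out  # one weight per input plus one bias, for each output unit
        for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
    )

layer_sizes = [3, 4, 1]  # hypothetical: 3 inputs, 4 hidden units, 1 output
total = count_parameters(layer_sizes)
print(total)  # (3+1)*4 + (4+1)*1 = 21
```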

Example

Consider one layer and

write equations to

produce the hypothesis
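For one layer, each unit j computes a_j = g(b_j + Σ_i w_ji x_i), where g is the activation. The sketch below writes that out with a sigmoid activation; the weights, biases, and inputs are hypothetical values, not taken from the figure.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def layer_forward(weights, biases, inputs):
    """Compute a_j = sigmoid(b_j + sum_i w_ji * x_i) for every unit j in the layer."""
    return [
        sigmoid(b + sum(w * x for w, x in zip(row, inputs)))
        for row, b in zip(weights, biases)
    ]

inputs = [0.5, -1.0, 2.0]            # hypothetical input vector x
hidden_weights = [[0.1, 0.2, 0.3],   # one row of weights per hidden unit
                  [-0.4, 0.5, 0.6]]
hidden_biases = [0.0, 0.1]
output_weights = [[1.0, -1.0]]
output_biases = [0.2]

hidden = layer_forward(hidden_weights, hidden_biases, inputs)
hypothesis = layer_forward(output_weights, output_biases, hidden)[0]
print(hidden, hypothesis)
```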


Computing OR function

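[Figure: a single unit with bias input 1 and inputs x1, x2, weighted by Θ0, Θ1, Θ2, producing h(Θ, x). The truth-table column for h(Θ, x) reads 0, 1, 1, 1.]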


NAND Function
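A single sigmoid unit h(Θ, x) = g(Θ0 + Θ1·x1 + Θ2·x2) can compute both OR and NAND with suitable weights. The weight values below are one workable choice, assumed for illustration; any weights with the same sign pattern and large enough magnitude would do.

```python
import math

def unit(theta, x1, x2):
    """Single sigmoid unit with bias weight theta[0]."""
    z = theta[0] + theta[1] * x1 + theta[2] * x2
    return 1.0 / (1.0 + math.exp(-z))

OR_THETA = [-10.0, 20.0, 20.0]     # assumed weights: fires if either input is 1
NAND_THETA = [30.0, -20.0, -20.0]  # assumed weights: off only when both inputs are 1

inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
or_table = [round(unit(OR_THETA, a, b)) for a, b in inputs]
nand_table = [round(unit(NAND_THETA, a, b)) for a, b in inputs]

print("x1 x2 OR NAND")
for (a, b), o, n in zip(inputs, or_table, nand_table):
    print(a, b, o, n)
```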

Gradient descent vs stochastic gradient descent
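The difference is in how much data each update uses: batch gradient descent averages the gradient over all examples before each step, while stochastic gradient descent (SGD) updates after every single example. The sketch below fits y = Θ0 + Θ1·x both ways on hypothetical noise-free data; the learning rates, iteration counts, and seed are assumptions.

```python
import random

def predict(theta, x):
    return theta[0] + theta[1] * x

def batch_gd(xs, ys, alpha=0.1, iters=2000):
    theta = [0.0, 0.0]
    m = len(xs)
    for _ in range(iters):
        # One update per pass, using the gradient averaged over all examples.
        residuals = [predict(theta, x) - y for x, y in zip(xs, ys)]
        g0 = sum(residuals) / m
        g1 = sum(r * x for r, x in zip(residuals, xs)) / m
        theta = [theta[0] - alpha * g0, theta[1] - alpha * g1]
    return theta

def sgd(xs, ys, alpha=0.05, epochs=2000, seed=0):
    rng = random.Random(seed)
    theta = [0.0, 0.0]
    order = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(order)  # visit the examples in a random order each epoch
        for i in order:
            # One update per example, using only that example's gradient.
            r = predict(theta, xs[i]) - ys[i]
            theta = [theta[0] - alpha * r, theta[1] - alpha * r * xs[i]]
    return theta

xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]  # hypothetical data from y = 1 + 2x

theta_batch = batch_gd(xs, ys)
theta_sgd = sgd(xs, ys)
print(theta_batch, theta_sgd)
```

On noisy data SGD would hover around the minimum rather than settle exactly, which is why its learning rate is often decreased over time.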


Recommender Systems

Definition (collaborative filtering)

A recommender system that makes recommendations based “solely” on the preferences that other users have expressed for those items

• “x bought y”

Highly Sparse matrix

Challenge: Fill in the missing entries

[Figure: sparse ratings matrix; the row axis is labeled "user".]

User-user approach

Restrict sum to only k

users “most similar”

• Hypothesis: h(Θ, i, j)

• Prediction problem :

If there are no similar users to i, the prediction for i falls back to user i's own average rating

Find the difference

between the other user

and the mean

People have similar

ranges in relative rating

Similarity metric

• Pearson correlation

• Cosine similarity

Sum over items where both

have entries
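A minimal sketch of the user-user approach described by the notes above: start from user i's mean rating, add the similarity-weighted deviations of the k most similar users from their own means, and fall back to the mean when no similar user exists. The ratings matrix is hypothetical, `None` marks a missing entry, and computing the Pearson means over co-rated items only is an assumption (variants exist).

```python
import math

def mean_rating(row):
    rated = [r for r in row if r is not None]
    return sum(rated) / len(rated)

def pearson(row_a, row_b):
    """Pearson correlation over items that both users have rated."""
    common = [(a, b) for a, b in zip(row_a, row_b) if a is not None and b is not None]
    if len(common) < 2:
        return 0.0
    mean_a = sum(a for a, _ in common) / len(common)
    mean_b = sum(b for _, b in common) / len(common)
    num = sum((a - mean_a) * (b - mean_b) for a, b in common)
    den = math.sqrt(sum((a - mean_a) ** 2 for a, _ in common)
                    * sum((b - mean_b) ** 2 for _, b in common))
    return num / den if den else 0.0

def predict(ratings, user, item, k=2):
    base = mean_rating(ratings[user])
    neighbors = []
    for other, row in enumerate(ratings):
        if other != user and row[item] is not None:
            weight = pearson(ratings[user], row)
            # Deviation of the other user's rating from that user's own mean.
            neighbors.append((weight, row[item] - mean_rating(row)))
    # Restrict the sum to the k users with the strongest similarity.
    neighbors.sort(key=lambda pair: abs(pair[0]), reverse=True)
    top = neighbors[:k]
    norm = sum(abs(w) for w, _ in top)
    if norm == 0:
        return base  # no similar users: fall back to the user's own average
    return base + sum(w * dev for w, dev in top) / norm

ratings = [            # hypothetical user-item matrix (rows are users)
    [5, 4, None, 1],
    [4, 5, 2, 1],
    [1, 2, 5, 4],
]
prediction = predict(ratings, user=0, item=2)
print(round(prediction, 2))
```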

Quiz

Consider the following user-item rating matrix.

What would be the prediction (user-user

method using Pearson correlation) for the

missing point?

X(4,2) = 4 + 1·(2 − 3.5)/|4 − 5| = 2.5

Here we are using the absolute distance as the weight W(i,k).

We can use Pearson correlation or cosine similarity as well.

Remember