Week 14 - Recursions

COMP5180 - Algorithms, Correctness and Efficiency

In this lecture

• Reduction

• Divide and conquer

• Recursion

• Factorial

• Exponentiation

• Fibonacci numbers

• Iteration via recursion

• Tower of Hanoi

Reduction

• Solving a problem by using an already existing solution to another

problem

Example: Finding the minimum of an array

• We want to write a program that takes an array of integers as an input

and outputs the smallest number from that array

• E.g. if the input is [26, 53, 14, 87, 34]

• The output should be 14

• We already have a program that sorts an integer array

Example: Finding the minimum of an array

[Diagram: Sort takes arr = [4,2,3,1] and returns sortedArr = [1,2,3,4]]

[Diagram: Minimum passes its input arr to Sort and outputs the first element of sortedArr as min. For arr = [26,53,14,87,34] the output is min = 14; for arr = [4,7,3,8,2], Sort returns sortedArr = [2,3,4,7,8] and the output is min = 2]

Example: Finding the minimum of an array

Program Minimum:

input array arr

sortedArr = Sort(arr)

min = sortedArr[0]

output min
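The Minimum program above can be sketched in Python as follows. This is only an illustration of the reduction: the built-in sorted() plays the role of the black-box Sort, and the function name minimum is chosen here for the example. It assumes a non-empty input array.

```python
def minimum(arr):
    """Return the smallest element by reducing the problem to sorting."""
    sorted_arr = sorted(arr)   # the "black box" Sort (Python's built-in sorted)
    return sorted_arr[0]       # the first element of a sorted array is the minimum


print(minimum([26, 53, 14, 87, 34]))
```

The outer function adds only O(1) work, so its running time is whatever the sort costs.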

Reduction

• Solving a problem by using an already existing solution to another

problem

• Accept the existing solution as a black box – we don’t care what’s

inside as long as it works correctly

• Correctness of the outer solution does not depend on how exactly

the inner solution works

Reduction: analysing the Minimum example

• Correctness:

• If Sort works correctly, Minimum will work correctly too

• Complexity:

• No pre-processing is required for array before it is passed to Sort, basically O(1)

• The complexity of running the Sort program

• Selecting the first element of an array is a simple operation, basically O(1)

• The complexity of Minimum depends on the complexity of Sort

• If Sort is a quicksort, then it’s O(n*log(n)) on average (O(n^2) in the worst case); if it’s bogosort, then the expected time is O(n * n!)

Reduction: more general approach

• Correctness:

• If the inner program works correctly, outer program should work correctly too

• Complexity:

• Complexity of Pre-processing the input to the inner program

• The complexity of running the inner program

• Number of times the inner program is used

• Complexity of post-processing the output from the inner program

• Complexity of whatever else the outer program does

An example from my research

• I want to write a program that runs an experiment that evaluates a

new decision tree algorithm using a raw dataset that I have

• I want to write a program that reads a dataset, pre-processes it,

separates it into a training set and a test set, initialises experiment

parameters, creates a new decision tree model, constructs it, prunes

it, tests it, saves the results to a file

• Long, complicated, but…

Divide and conquer

dataset = readDataset()

preProcess(dataset)

trainingSet,testSet = makeTrainingAndTestSets(dataset)

parameters = initialiseExperimentParameters()

decisionTreeModel = makeNewDecisionTreeModel()

construct(decisionTreeModel, trainingSet, parameters)

prune(decisionTreeModel)

testResults = test(decisionTreeModel, testSet)

save(testResults)

Recursion

• Self-reduction

• Using a solution to the problem as part of itself

• Commonly used in programming to solve a big problem that can be

divided into multiple smaller problems which are similar to the big

problem

Example of recursion being applicable

Examples of recursive solutions

• Factorial

• Exponentiation

• Fibonacci numbers

• Iteration via recursion

• Tower of Hanoi

Factorial!

• A mathematical function applicable to integers >= 0

• Uses the ! operator

• Represents a product of all numbers from 1 to the integer to which it

is applied

• E.g. 5! = 1*2*3*4*5 = 120

Factorial

• 0! = 1 (by definition)

• 1! = 1 = 1

• 2! = 1*2 = 2

• 3! = 1*2*3 = 6

• 4! = 1*2*3*4 = 24

• 5! = 1*2*3*4*5 = 120

• 6! = 1*2*3*4*5*6 = 720

•…

Factorial as a recursive function

• We can see that in order to get from, say, 4! to 5!, we need to multiply it by 5

• To then get to 6! we multiply by 6, to get to 7! we multiply that by 7, and so on

• And we can define this pattern recursively

Factorial as a recursive function

• n! = (n-1)! * n

• This definition defines the factorial of an integer number n as the

factorial of the previous integer number multiplied by n

• Is this correct?

Factorial

• 0! = 1 (by definition)

• 1! = 1 = 1

• 2! = 1*2 = 2

• 3! = 1*2*3 = 6

• 4! = 1*2*3*4 = 24

• 5! = 1*2*3*4*5 = 120

• 6! = 1*2*3*4*5*6 = 720

•…

Factorial as a recursive function

• If we calculate 3! step by step:

• 3! = 2! * 3

• 2! = 1! * 2

• 1! = 0! * 1

• 0! = (-1)! * 0

• ???

Termination condition

• If we want a recursive program to eventually arrive at a solution, we

need to tell it when to stop making new recursive calls

• We call this a Termination Condition

• There can be multiple termination conditions in the same recursive

program


Factorial as a recursive function

• If n = 0, then n! = 1

• Otherwise, n! = (n-1)! * n

Factorial as a recursive function

Function factorial(n)

if n = 0

return 1

else

return factorial(n-1) * n

end
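A direct Python translation of the pseudocode above might look like this. The check for negative input is an addition made for this sketch; the slides only define the factorial for integers >= 0.

```python
def factorial(n):
    """Recursive factorial: n! = (n-1)! * n, with 0! = 1."""
    if n < 0:
        # Not part of the pseudocode: without this guard the recursion
        # would never reach the termination condition.
        raise ValueError("factorial is only defined for n >= 0")
    if n == 0:                       # termination condition
        return 1
    return factorial(n - 1) * n      # recursive call on a smaller problem


print(factorial(5))
```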

Another example: Fibonacci numbers

• Fibonacci numbers are a sequence of integer numbers

• Starts with 1, 1

• Each number after that is a sum of the two previous numbers in the

sequence

Fibonacci numbers

• f(1) = 1 (by definition)

• f(2) = 1 (by definition)

• f(3) = 1 + 1 = 2

• f(4) = 1 + 2 = 3

• f(5) = 2 + 3 = 5

• f(6) = 3 + 5 = 8

• f(7) = 5 + 8 = 13

• f(8) = 8 + 13 = 21

•…

Fibonacci numbers as a recursive function

• Each number is a sum of the two previous numbers

• f(n) = f(n-1) + f(n-2)

• What is the termination condition?

• If n = 1 or n = 2 then f(n) = 1

Fibonacci numbers as a recursive function

Function fibonacci(n)

if n = 1 or n = 2

return 1

else

return fibonacci(n-1) + fibonacci(n-2)

end
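The same function as a Python sketch. The guard for n < 1 is an assumption added here, since the sequence on the slides starts at f(1).

```python
def fibonacci(n):
    """Recursive Fibonacci with f(1) = f(2) = 1."""
    if n < 1:
        # Added for this sketch: the slides only define f(n) for n >= 1.
        raise ValueError("n must be at least 1")
    if n == 1 or n == 2:             # two termination conditions
        return 1
    return fibonacci(n - 1) + fibonacci(n - 2)


print([fibonacci(i) for i in range(1, 9)])
```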

Another example: Exponentiation

• Exponentiation is a mathematical operation, commonly referred to as

“power”

• E.g. 2^3 is referred to as “two to the power of 3” and equals

• 2^3 = 2 * 2 * 2 = 8

• 5^16 = 5 * 5 * 5 * 5 * 5 * 5 * 5 * 5 * 5 * 5 * 5 * 5 * 5 * 5 * 5 * 5 = a lot

Exponentiation as a recursive function

• We can generalise exponentiation as a function with two parameters:

• n is the number that exponentiation is being applied to

• e is an exponent, the power to which n is being taken

• n^e

• exp(n,e)

Exponentiation as a recursive function

• We can see that n^e is equal to n * n^(e-1)

• exp(n, e) = n * exp(n, e-1)

• What is the termination condition?

• By definition, n^0 is 1, and negative powers won’t be integers anymore

• So e = 0 is a good termination condition

Exponentiation as a recursive function

Function exp(n,e)

if e = 0

return 1

else

return n * exp(n, e-1)

end

But is this the best we can do?

We can do better…

• 16 * 16 = 256

• 2^4 * 2^4 = 2^8

• n^(e/2) * n^(e/2) = n^e (for even e)

• exp(n,e) = exp(n,e/2) * exp(n,e/2)

• exp(2,8) = exp(2,4) * exp(2,4)
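One way to turn the halving idea into code is sketched below. Two details are assumptions of this sketch rather than something stated on the slide: the half power is computed once and reused (calling exp(n, e/2) twice would bring the number of multiplications back to roughly e), and odd exponents get one extra factor of n.

```python
def exp(n, e):
    """Compute n**e for an integer e >= 0 by repeated halving of the exponent."""
    if e == 0:                  # termination condition: n^0 = 1
        return 1
    half = exp(n, e // 2)       # one recursive call, result reused below
    if e % 2 == 0:
        return half * half      # n^e = n^(e/2) * n^(e/2)
    return n * half * half      # odd e: n^e = n * n^((e-1)/2) * n^((e-1)/2)


print(exp(2, 8))
```

With this structure the recursion is only about log2(e) levels deep.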

Recursion as iteration

• Let’s consider a task of iterating through an array

• We usually use loops

for(int i = 0; i < arr.length; i++)

System.out.println(arr[i]);

• But we can also use recursion (very common in functional languages)

Recursion as iteration

• Let’s write a function that takes an array arr as a parameter and prints

out its elements

• We need to recursively follow from one array element to another

until we reach the end of the array

• At every step of the recursion we need to print the current element

Divide and conquer

Function printArray

if we have reached the final element, stop

print the current element

go to the next element

end

What do we need?

• A function that will recursively call itself

• Some way for a function to keep track of the array that it is iterating

through

• Some way to know at which point in the array it currently is

• A print statement

Iterating over an array and printing elements

Function printArray(arr, n)

if n >= length(arr)

stop

print(arr[n])

printArray(arr, n+1)

end
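A Python sketch of the index-based version follows. The emit parameter is a hypothetical addition so that the output can be redirected (for example into a list); by default it is the normal print. The termination test stops as soon as n is past the last valid index of a 0-indexed array.

```python
def print_array(arr, n=0, emit=print):
    """Print arr[n], arr[n+1], ... recursively instead of with a loop."""
    if n >= len(arr):               # termination: n is past the last index
        return
    emit(arr[n])                    # work done at the current step
    print_array(arr, n + 1, emit)   # "go to the next element"


print_array([1, 2, 3, 4, 5])
```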

Iterating over an array and printing elements

Function printArray(arr, n)
    if n >= length(arr)
        stop
    print(arr[n])
    printArray(arr, n+1)
end

Function printArray(arr)
    printArray(arr, 0)
end

arr = [1,2,3,4,5]
printArray(arr, 0)

Using only one function and one parameter

Function printArray(arr)

if arr is empty

stop

print(arr[0])

remainder = removeFirstElement(arr)

printArray(remainder)

end
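The one-parameter version can be sketched in Python with slicing, where arr[1:] stands in for removeFirstElement. As before, emit is a hypothetical parameter added only so the output can be captured. Note that each slice copies the remainder of the list, so this form does more work overall than the index-based one.

```python
def print_array(arr, emit=print):
    """Print the first element, then recurse on the rest of the list."""
    if not arr:                  # termination: the list is empty
        return
    emit(arr[0])                 # print the current (first) element
    remainder = arr[1:]          # copy of the list without its first element
    print_array(remainder, emit)


print_array([1, 2, 3, 4, 5])
```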

Using only one function and one parameter

Tracing printArray([1,2,3,4,5]):

• arr is not empty

• print 1

• remainder = removeFirstElement(arr) = [2,3,4,5]

• printArray([2,3,4,5])

Example: erlang

printList([]) -> ok.

printList([H|T]) ->

io:format("~p~n", [H]),

printList(T).

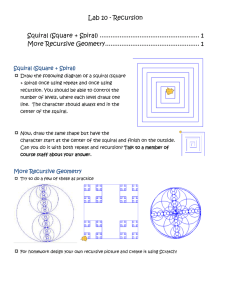

Tower of Hanoi

• A famous puzzle with many variations

• Classic variation is about a stack of disks of different sizes and three pegs

• The disks are stacked in a pyramid on the first peg, objective is to move all

disks to the third peg

• You can only move one disk at a time, from the top of one peg to the top of

another, you are not allowed to place a larger disk on top of a smaller disk

Tower of Hanoi

Tower of Hanoi

• Can be solved using a recursive algorithm, try and figure out how

• More about Tower of Hanoi in the upcoming lectures

Thank you

Week 16 – More Recursions

COMP5180 - Algorithms, Correctness and Efficiency

In the previous lecture: Reduction

[Diagram: Minimum passes its input arr to Sort and outputs the first element of sortedArr as min]

In the previous lecture: Divide and conquer

dataset = readDataset()

preProcess(dataset)

trainingSet,testSet = makeTrainingAndTestSets(dataset)

parameters = initialiseExperimentParameters()

decisionTreeModel = makeNewDecisionTreeModel()

construct(decisionTreeModel, trainingSet, parameters)

prune(decisionTreeModel)

testResults = test(decisionTreeModel, testSet)

save(testResults)

In the previous lecture: Recursive solutions

Function factorial(n)

if n = 0

return 1

else

return factorial(n-1) * n

end


In the previous lecture: Termination condition

Function factorial(n)

if n = 0

return 1

else

return factorial(n-1) * n

end

In this lecture

• More examples

• Iteration via recursion

• Tower of Hanoi

• Recursion trees

• Solving recursions

• Improving recursive solutions


Tower of Hanoi

• A famous puzzle with many variations

• Classic variation is about a stack of disks of different sizes and three pegs

• The disks are stacked in a pyramid on the first peg, objective is to move all

disks to the third peg

• You can only move one disk at a time, from the top of one peg to the top of

another, you are not allowed to place a larger disk on top of a smaller disk

Tower of Hanoi

Tower of Hanoi

• Can be solved using a recursive algorithm, let’s figure out how

Let’s play some Tower of Hanoi

• https://www.mathsisfun.com/games/towerofhanoi.html

Intuition

• We need to move the whole pyramid from peg 1 to peg 3

• Smallest disk is easy to move

• Larger disks are harder to move because we have to move everything smaller out of the way first

• Largest disk is the hardest one to move

An algorithm

• Start with pyramid on peg 1

• Move everything except the bottom disk out of the way

• Move the bottom disk to peg 3

• Move everything else to peg 3

Parameters of the problem

• We need to know:

• How many disks are we moving (height of the pyramid)

• From which peg

• To which peg

Pseudocode

Function TowerOfHanoi(height, from, to)

move everything else from peg #from to a free peg

move disk #height from peg #from to peg #to

move everything else to peg #to

end

Pseudocode

Function TowerOfHanoi(4, 1, 3)
    move everything else from peg 1 to a free peg
    move disk 4 from peg 1 to peg 3
    move everything else to peg 3
end

So what do we do with this?

Function TowerOfHanoi(height, from, to)

move everything else from peg #from to a free peg

move disk #height from peg #from to peg #to

move everything else to peg #to

end

This is easy

• move disk #height from peg #from to peg #to

These are harder

• move everything else from peg #from to a free peg

• move everything else to peg #to

What actually happens during the hard stages?

• Let’s first look at the process of moving everything out of the way

before moving the biggest disk

Let’s see it in practice first

• https://www.mathsisfun.com/games/towerofhanoi.html

Is there any difference?

So…

• This means that getting everything out of the way is the same as

moving a smaller pyramid of disks to a free peg

• Does it also work for moving everything on top of the biggest disk?

Yes it does

Recursion

• So, the two “move everything else” steps of the pseudocode are just smaller Tower of Hanoi problems

• We can solve those recursively

Back to pseudocode

Function TowerOfHanoi(height, from, to)

move everything else from peg #from to a free peg

move disk #height from peg #from to peg #to

move everything else to peg #to

end

Rewriting the pseudocode

Function TowerOfHanoi(height, from, to)

free = determine free peg somehow

TowerOfHanoi(height – 1, from, free)

move disk #height from peg #from to peg #to

TowerOfHanoi(height – 1, free, to)

end


Looks good, right? Anything missing?

Function TowerOfHanoi(height, from, to)

free = determine free peg somehow

TowerOfHanoi(height – 1, from, free)

move disk #height from peg #from to peg #to

TowerOfHanoi(height – 1, free, to)

end

Termination condition

Function TowerOfHanoi(height, from, to)
    if height = 1
        move disk #1 from peg #from to peg #to
        stop
    free = the peg that is neither #from nor #to
    TowerOfHanoi(height - 1, from, free)
    move disk #height from peg #from to peg #to
    TowerOfHanoi(height - 1, free, to)
end
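A possible Python version of the finished pseudocode is sketched below. Several choices here are assumptions made for the example: the pegs are numbered 1, 2 and 3 (so the free peg is 6 - from - to), the moves are collected in a list instead of being performed, and the parameter names src and dst replace from and to because from is a reserved word in Python. The function expects height >= 1.

```python
def tower_of_hanoi(height, src, dst, moves=None):
    """Return the moves (disk, from_peg, to_peg) that solve the puzzle."""
    if moves is None:
        moves = []
    if height == 1:                      # termination: just move the disk
        moves.append((1, src, dst))
        return moves
    free = 6 - src - dst                 # peg numbers 1 + 2 + 3 add up to 6
    tower_of_hanoi(height - 1, src, free, moves)   # clear the way
    moves.append((height, src, dst))               # move the largest disk
    tower_of_hanoi(height - 1, free, dst, moves)   # put the rest back on top
    return moves


for disk, a, b in tower_of_hanoi(3, 1, 3):
    print(f"move disk {disk} from peg {a} to peg {b}")
```

Counting the entries of the returned list gives the number of moves, which doubles (plus one) with each extra disk.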

Tower of Hanoi

• We have now looked at the recursive Tower of Hanoi

• Coding it is relatively easy – pseudocode covers most of it

• Things become more interesting when we have > 3 pegs

• We’ll look at it again soon

Recursion trees

• Let’s consider a recursive implementation of Fibonacci

• f(1) = 1, f(2) = 1

• f(n) = f(n-1) + f(n-2)

• How do we calculate f(3)?

Recursion trees: f(3)

f(3)
    f(2) = 1
    f(1) = 1

What about f(4)?

f(4)
    f(3)
        f(2) = 1
        f(1) = 1
    f(2) = 1

What about f(5)?

f(5)
    f(4)
        f(3)
            f(2) = 1
            f(1) = 1
        f(2) = 1
    f(3)
        f(2) = 1
        f(1) = 1

What about f(6)?

f(6)
    f(5)
        f(4)
            f(3)
                f(2) = 1
                f(1) = 1
            f(2) = 1
        f(3)
            f(2) = 1
            f(1) = 1
    f(4)
        f(3)
            f(2) = 1
            f(1) = 1
        f(2) = 1
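To see how quickly these trees grow, the sketch below counts the nodes of the recursion tree, i.e. how many calls the naive recursive Fibonacci makes. The function name fib_calls and the idea of returning a pair are choices made for this example only.

```python
def fib_calls(n):
    """Return (f(n), number of calls in the recursion tree) for n >= 1."""
    if n == 1 or n == 2:
        return 1, 1                      # a leaf: one call, no recursion
    left, left_calls = fib_calls(n - 1)
    right, right_calls = fib_calls(n - 2)
    # This call itself plus every call made in the two subtrees.
    return left + right, left_calls + right_calls + 1


for n in range(3, 7):
    print(n, fib_calls(n))
```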

Week 16 – Recursion trees

COMP5180 - Algorithms, Correctness and Efficiency

In the previous lectures

• Reduction

• Divide and Conquer

• Recursions

• Various examples, e.g. Factorial, Exponentiation, Tower of Hanoi

In this lecture

• General structure of recursive problems

• Divide and Conquer

• Recursion trees

• Solving recursions

So what does a recursive solution look like?

• Takes some input

• Checks termination condition

• Makes a recursive call (or several)

• Maybe does pre-processing

• Maybe does post-processing

• Maybe does something at the current iteration

• Maybe returns something

Let’s consider a general implementation:

Function myFunction(input size n)
    myFunction(input size n/c)
    myFunction(input size n/c)
    myFunction(input size n/c)
    …                               (r recursive calls in total)
end

General implementation

• Function with input size n

• Makes r recursive calls

• Each call uses input size n/c

Example: searching a tree

• Let’s write a method that takes a binary tree as input

• Tree has n nodes

• Searches the tree recursively for a specific element

• Returns true if it finds it, returns false if it doesn’t

• Starts with root node, recursively checks both child nodes

[Figure: complete tree with N = 16 nodes (height = 4)]

Example: searching a tree

Function searchTree(node, element)

if node is the element then return true

if node is a leaf then return false

leftFound = searchTree(left child, element)

rightFound = searchTree(right child, element)

return leftFound OR rightFound

end
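A Python sketch of the tree search follows. The Node class is a hypothetical minimal tree representation introduced for this example. The sketch deviates from the pseudocode in two labelled ways: it tests for a missing child (None) rather than for a leaf, so nodes with a single child are handled, and Python's or skips the right subtree once the element has been found on the left.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    """Hypothetical binary tree node used only for this example."""
    value: int
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def search_tree(node, element):
    """Return True if element occurs anywhere in the tree rooted at node."""
    if node is None:                 # termination: ran off the tree
        return False
    if node.value == element:        # termination: found it
        return True
    # Two recursive calls, one per child; results combined with OR.
    return search_tree(node.left, element) or search_tree(node.right, element)


tree = Node(1, Node(2, Node(4), Node(5)), Node(3))
print(search_tree(tree, 5), search_tree(tree, 9))
```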

Analysing the pseudocode

Function searchTree(node, element)                    Input: tree of n nodes
    if node is the element then return true           Termination conditions
    if node is a leaf then return false
    leftFound = searchTree(left child, element)       2 recursive calls,
    rightFound = searchTree(right child, element)     each of size n/2
    return leftFound OR rightFound                    Post-processing and return statement
end

General implementation for treeSearch

• Function with input size n: the input is a tree of n nodes

• Makes r recursive calls: 2 recursive calls, so r = 2

• Each call uses input size n/c: each of size n/2, so c = 2

Divide and Conquer

• A general framework for algorithm design

1. Divide

2. Delegate

3. Combine

1. Divide

• Divide the problem into smaller sub-problems

• Includes:

• Pre-processing of input

• Checking termination conditions

2. Delegate

• Delegate each sub-problem to a separate function call

• Can be a different function

• Can be a recursive call to the same function

• Includes

• Making recursive calls

• Calling helper functions

3. Combine

• Combine the sub-problem solutions into a final result

• Includes

• Post-processing

• Outputting results

Divide and Conquer framework

1. Divide

• Divide the problem into sub-problems

• Pre-process inputs

2. Delegate

• Make recursive calls

• Call helper functions

3. Combine

• Combine results of function calls

• Output results

Analysing the recursive solutions

• It is useful to estimate the computational complexity of recursive

solutions

• We have a method for doing that

Example: Factorial

• n! = n * (n - 1)!

• Problem: n!; sub-problem: (n - 1)!

• T(n) = T(n-1) + O(1)

• Problem time: T(n); sub-problem time: T(n-1); step time: O(1)

Example: Exponentiation

• Calculating n^e

• Recursive formula:

• If e is even then n^e = n^(e/2) * n^(e/2)

• If e is odd then n^e = n * n^((e-1)/2) * n^((e-1)/2)

• Problem: n^e; sub-problem: the half power, computed once and used twice

• T(e) = T(e/2) + O(1)

• Problem time: T(e); sub-problem time: T(e/2); step time: O(1)

Example: Fibonacci numbers

• Calculating f(n)

• f(n) = f(n-1) + f(n-2)

• Problem: f(n); sub-problems: f(n-1) and f(n-2)

• T(n) = T(n-1) + T(n-2) + O(1)

• Problem time: T(n); sub-problem times: T(n-1) and T(n-2); step time: O(1)

Example: Tower of Hanoi

• Solving Tower of Hanoi for n disks

• Problem: TowerOfHanoi(height, from, to)

• Sub-problems: TowerOfHanoi(height-1, from, free) and TowerOfHanoi(height-1, free, to)

• Step: move disk from peg #from to peg #to

• T(height) = 2*T(height-1) + O(1)

• Problem time: T(height); sub-problem times: 2*T(height-1); step time: O(1)

Examples so far:

• Factorial

T(n) = T(n-1) + O(1)

• Exponentiation

T(e) = T(e/2) + O(1)

• Fibonacci

T(n) = T(n-1) + T(n-2) + O(1)

• Tower of Hanoi

T(height) = 2*T(height-1) + O(1)

Recursion trees

• A way to visualise recursions and their complexities

• Looks like a tree graph where

• Each node is a recursive call

• Each level of height is a level of recursion

Example from previous lecture

f(6)
    f(5)
        f(4)
            f(3)
                f(2) = 1
                f(1) = 1
            f(2) = 1
        f(3)
            f(2) = 1
            f(1) = 1
    f(4)
        f(3)
            f(2) = 1
            f(1) = 1
        f(2) = 1

We can generalise this

Recursion tree

• An algorithm spends O(f(n)) time on non-recursive work

• Algorithm makes r recursive calls

• At level d, the number of nodes is r^d

• Divides input size by c at every recursive call

• Termination condition is reached at some level L

• It is reached when input cannot be divided anymore, e.g. size 1

• n/c^L = 1

• Therefore, L = log_c(n)
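The depth formula can be checked by simply counting divisions. The helper below is a hypothetical name written for this illustration; it uses integer division and assumes c >= 2, so for inputs that are exact powers of c it returns exactly log_c(n).

```python
def recursion_depth(n, c):
    """Count how many times n can be divided by c before reaching size 1."""
    depth = 0
    while n > 1:
        n //= c          # one level deeper: the input shrinks by a factor of c
        depth += 1
    return depth


print(recursion_depth(16, 2), recursion_depth(27, 3))
```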

More in the next lecture

• Thank you

Week 17 - Mergesort

COMP5180 - Algorithms, Correctness and Efficiency

Mergesort

• A sorting algorithm

• Takes array as an input, returns the same array but sorted

• Very simple recursive algorithm:

1. Sort left half

2. Sort right half

3. Merge into a sorted array

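Mergesort example

Input:  [5 2 8 6 7 1 3 4]

Split:  [5 2 8 6] [7 1 3 4]

Split:  [5 2] [8 6] [7 1] [3 4]

Split:  [5] [2] [8] [6] [7] [1] [3] [4]

Merge:  [2 5] [6 8] [1 7] [3 4]

Merge:  [2 5 6 8] [1 3 4 7]

Merge:  [1 2 3 4 5 6 7 8]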

Mergesort algorithm

function mergeSort(arr)
    n = length(arr)
    if n <= 1
        return arr
    m = n/2
    leftHalf = mergeSort(arr[0-m])
    rightHalf = mergeSort(arr[m-n])
    sortedArr = merge(leftHalf, rightHalf)
    return sortedArr
end
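The recursive structure can be sketched in Python as below. Two things are assumptions of this sketch: the standard-library heapq.merge is used as a stand-in for the merge step, and the recursion stops on arrays of length 0 or 1, which are already sorted. Unlike "the same array but sorted", this version returns a new list.

```python
from heapq import merge  # standard-library merge of already sorted inputs


def merge_sort(arr):
    """Sort a list by sorting both halves recursively and merging them."""
    n = len(arr)
    if n <= 1:                           # termination: nothing left to split
        return list(arr)
    m = n // 2                           # split point
    left_half = merge_sort(arr[:m])      # elements 0 .. m-1
    right_half = merge_sort(arr[m:])     # elements m .. n-1
    return list(merge(left_half, right_half))


print(merge_sort([5, 2, 8, 6, 7, 1, 3, 4]))
```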

Mergesort reduction

[Diagram: mergeSort splits arr into arr[0-m] and arr[m-n], passes each half to a recursive mergeSort call to obtain sortedArr[0-m] and sortedArr[m-n], and passes both to merge, which returns sortedArr[0-n]]

Overview

• Select a number m

• Run two mergesorts

• Merge their outputs

• Return the result

Correctness of Mergesort

• We assume the recursive mergesort calls are correct on the smaller halves

• Correctness of selecting m

• m = n/2

• Correctness of merge

• How do we merge two arrays?

Merge

• A function that takes two arrays as parameters

• Assumes both arrays are sorted

• Returns a sorted array consisting of the elements of both input arrays

Merge example

merge( 2 5 6 8 , 1 3 4 7 )

a = 2 5 6 8

b = 1 3 4 7

c = new array of size ???

length(a) + length(b) = 4 + 4 = 8

Merge example

merge( 2 5 6 8 , 1 3 4 7 )

i      = 0 1 2 3
a[ i ] = 2 5 6 8

j      = 0 1 2 3
b[ j ] = 1 3 4 7

k      = 0 1 2 3 4 5 6 7
c[ k ] = 0 0 0 0 0 0 0 0

What’s the first number we need to select?

Start with i=0, j=0, k=0. At each step, compare a[i] with b[j] and copy the smaller one into c[k]. If we have used a[i], then i needs to increase; if we have used b[j], then j needs to increase. We also assigned c[k], so k needs to increase.

k  i  j  a[i]  b[j]  comparison            c after the step
0  0  0  2     1     1 is smaller than 2   1 0 0 0 0 0 0 0
1  0  1  2     3     2 is smaller than 3   1 2 0 0 0 0 0 0
2  1  1  5     3     3 is smaller than 5   1 2 3 0 0 0 0 0
3  1  2  5     4     4 is smaller than 5   1 2 3 4 0 0 0 0
4  1  3  5     7     5 is smaller than 7   1 2 3 4 5 0 0 0
5  2  3  6     7     6 is smaller than 7   1 2 3 4 5 6 0 0
6  3  3  8     7     7 is smaller than 8   1 2 3 4 5 6 7 0
7  3  4  8     -     j is out of bounds    1 2 3 4 5 6 7 8

After the last step i=4, j=4 and k=8, so there are no more elements to add.

return c

So what now?

The merge is complete

function merge(a, b)

c = new array of size length(a) + length(b)

keep running until there are no more elements to add

pick the smallest next element from a and b

put that element in c

move indexes

end

function merge(a, b)
    c = new array [length(a) + length(b)]
    i = 0, j = 0, k = 0
    while i < length(a) OR j < length(b)
        if j >= length(b) OR (i < length(a) AND a[i] < b[j])
            c[k] = a[i], i++, k++
        else
            c[k] = b[j], j++, k++
    return c
end
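The merge walked through above can be sketched in Python as follows. The handling of the out-of-bounds cases is one possible way to answer the "???" on the slide, based on the trace where j runs out of bounds and the remaining element of a is copied; it is not necessarily the intended answer.

```python
def merge(a, b):
    """Merge two sorted lists into one sorted list."""
    c = [0] * (len(a) + len(b))
    i = j = k = 0
    while i < len(a) or j < len(b):
        # Take from a if b is used up, or if both still have elements
        # and a's next element is the smaller one.
        if j >= len(b) or (i < len(a) and a[i] < b[j]):
            c[k] = a[i]
            i += 1
        else:
            c[k] = b[j]
            j += 1
        k += 1
    return c


print(merge([2, 5, 6, 8], [1, 3, 4, 7]))
```

Every pass through the loop fills exactly one position of c, so the loop runs length(a) + length(b) times.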

Mergesort correctness

• If merge is correct, then mergeSort is correct

Mergesort recurrence

• To mergesort an array of size n, which takes T(n), we need to:

• Mergesort the left half: T(n/2)

• Mergesort the right half: T(n/2)

• Merge the results: O(n)

• So T(n) = 2*T(n/2) + O(n)

From the previous lecture

• T(n) = r * T(n/c) + f(n)

• n is size of input

• r is number of recursive calls made by a recursive function

• c is a constant by which the size of the input is divided at each level

• f(n) is time spent on non-recursive stuff

• L is the lowest level of recursive tree, L = log_c(n)

Mergesort recurrence

• T(n) = 2*T(n/2) + O(n)

• r = 2, because mergesort makes 2 recursive calls

• c = 2, because mergesort divides input in half

• f(n) = O(n), because complexity of merging results is O(n)

• L = log_c(n) = log_2(n), because after log_2(n) divisions, the arrays will reach size 1

Solving mergesort recurrence

• T(n) = 2*T(n/2) + O(n)

• T(n) = O(n) + 2*O(n/2) + 4*O(n/4) + 8*O(n/8) + … + 2^(log_2(n)) * O(n / 2^(log_2(n)))

• Every term of this sum is O(n)

• This is a recurrence of “equal” type

• So T(n) = L * f(n)

• L = log_2(n) and f(n) = O(n)

• So T(n) = log_2(n) * O(n) = O(n*log_2(n))
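As a sanity check, the recurrence can be evaluated numerically. The sketch below assumes concrete costs that the slides leave open: the merge step costs exactly n, T(1) = 1, and n is a power of two. Under those assumptions the value works out to n*log_2(n) + n, which is O(n*log_2(n)).

```python
import math


def T(n):
    """Evaluate T(n) = 2*T(n/2) + n with T(1) = 1, for n a power of two."""
    if n == 1:
        return 1
    return 2 * T(n // 2) + n


for n in (2, 8, 64, 1024):
    # Second and third columns agree for powers of two.
    print(n, T(n), n * math.log2(n) + n)
```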


Thank you

Week 17 – Solving recursions

COMP5180 - Algorithms, Correctness and Efficiency

In the previous lecture

• Generalised recursive solution

• Recurrences

• Recursion trees

• With some examples

Recurrences

• It takes T(n) time to solve a recursive problem with input size n

• We can define T(n) recursively too

• To calculate the time it would take to solve the recursive problem, we need to add the time it would take to make the recursive calls to the time it would take to do all of the non-recursive work

Recurrences: definitions

• A recursive function gets called with input of size n

• A recursive function makes r recursive calls

• For each recursive call it uses input of size n/c

• It also spends f(n) time on non-recursive processing

Example from previous lecture

Function searchTree(node, element)                    Input: tree of n nodes
    if node is the element then return true           Termination conditions
    if node is a leaf then return false
    leftFound = searchTree(left child, element)       2 recursive calls,
    rightFound = searchTree(right child, element)     each of size n/2
    return leftFound OR rightFound                    Post-processing and return statement
end

Recursion trees

In this lecture

• Solving recursions

• Examples

So, we have made these definitions:

• A recursive function gets called with input of size n

• A recursive function makes r recursive calls

• For each recursive call it uses input of size n/c

• It also spends f(n) time on non-recursive processing

• T(n) = r * T(n/c) + f(n) is a recurrence formula for a recursive function

How deep is the recursion tree?

• Let’s define the lowest level of the tree as level L

• It will be reached when the input cannot be divided anymore, effectively when the input size becomes 1

• So, n/c^L = 1

• n = c^L

• Therefore: L = log_c(n)

What can we do with those definitions?

• T(n) = r * T(n/c) + f(n)

• n is size of input

• r is number of recursive calls made by a recursive function

• c is a constant by which the size of the input is divided at each level

• f(n) is time spent on non-recursive stuff

• L is the lowest level of recursive tree, L = log_c(n)

Examples of recurrences from previous lecture

• Factorial

T(n) = T(n-1) + O(1)

• Exponentiation

T(e) = T(e/2) + O(1)

• Fibonacci

T(n) = T(n-1) + T(n-2) + O(1)

• Tower of Hanoi

T(height) = 2*T(height-1) + O(1)

But these are all recursively defined

• Factorial

T(n) = T(n-1) + O(1)

• Exponentiation

T(e) = T(e/2) + O(1)

• Fibonacci

T(n) = T(n-1) + T(n-2) + O(1)

• Tower of Hanoi

T(height) = 2*T(height-1) + O(1)

Solving recurrences

• We can “solve” a recurrence formula and turn it into a non-recursive

estimation of time complexity of a recursive function

• Basically O-notation for recursive functions

Time complexity of a recursive function

T(n) = f(n) + r * f(n/c) + r^2 * f(n/c^2) + … + r^L * f(n/c^L)

(One term per level of the recursion tree: level d has r^d calls, each doing f(n/c^d) non-recursive work.)

This seems correct, what now?

• It’s a long expression

• Which term is the most important here?

Recursion trees

Three types

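T(n) = f(n) + r * f(n/c) + r^2 * f(n/c^2) + ... + r^L * f(n/c^L)

• Decreasing: the terms shrink from level to level, so the first term dominates

• T(n) = O(f(n))

• Equal or almost equal: every level costs about the same

• T(n) = O(L * f(n))

• Increasing: the terms grow from level to level, so the last term dominates

• T(n) = O(r^L * f(1)) = O(r^L)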

Example: decreasing recurrence

• T(n) = T(n/2) + O(n)

• T(n) = f(n) + f(n/2) + f(n/4) + f(n/8) + …

• T(n) ≈ f(n), since f(n) + f(n/2) + f(n/4) + … ≤ 2 * f(n) when f(n) = O(n)

• T(n) = O(n)

Example: equal or almost equal

• T(n) = T(n-1) + O(1)

• T(n) = f(n) + f(n-1) + f(n-2) + f(n-3) …… + f(1)

• T(n) = L * f(n)

• L = n in this example

• T(n) = n * O(1) = O(n)

Example: increasing

• T(n) = 3*T(n/2) + O(1)

• T(n) = f(n) + 3*f(n/2) + 9*f(n/4) + 27*f(n/8) + 81*f(n/16) + … + 3^L * f(n/2^L)

• T(n) = O(r^L)

• L = log_c(n) = log_2(n)

• T(n) = O(3^(log_2(n))) = O(n^(log_2(3))) ≈ O(n^1.58)

Determining time complexity of recursions

1. Determine the number of recursive calls r

2. Determine the reduction in input size at each recursive call c

3. Determine the step time f(n)

4. Determine which type of recurrence it is and solve it:

• Decreasing: T(n) = O(f(n))

• Equal: T(n) = O(L * f(n)), where L = n if the input shrinks by 1 per call, or L = log_c(n) if it is divided by c

• Increasing: L = log_c(n); T(n) = O(r^L) = O(r^(log_c(n)))
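The steps above can be tried out numerically. The following is a minimal Python sketch, not part of the original slides: the helper names level_costs and classify are hypothetical, and comparing only the first and last level is a crude shortcut that assumes the level costs change monotonically. The "equal" example T(n) = 2*T(n/2) + O(n) is an assumed illustration that does not appear in the lecture.

```python
def level_costs(n, r, c, f):
    """Cost of each recursion-tree level for T(n) = r * T(n/c) + f(n).

    Level i contains r**i calls, each on an input of size n / c**i.
    """
    costs = []
    level = 0
    size = n
    while size >= 1:                      # stop once the input cannot be divided further
        costs.append((r ** level) * f(size))
        size = size / c
        level += 1
    return costs


def classify(costs):
    """Crude classification: compare the first level with the last one."""
    if costs[0] > costs[-1]:
        return "decreasing"
    if costs[0] < costs[-1]:
        return "increasing"
    return "equal"


decreasing = level_costs(1024, r=1, c=2, f=lambda m: m)   # T(n) = T(n/2) + O(n)
equal = level_costs(1024, r=2, c=2, f=lambda m: m)        # assumed: T(n) = 2*T(n/2) + O(n)
increasing = level_costs(1024, r=3, c=2, f=lambda m: 1)   # T(n) = 3*T(n/2) + O(1)

for name, costs in [("decreasing", decreasing), ("equal", equal), ("increasing", increasing)]:
    print(name, classify(costs), "levels:", len(costs), "total:", sum(costs))
```

For n = 1024 and c = 2 the tree has log_2(1024) + 1 = 11 levels; in the increasing case the last level alone costs 3^10, which is the r^L term from the formula.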

Recursion trees

Thank you

• See you in the next lecture

Week 18 – Backtracking

COMP5180 - Algorithms, Correctness and Efficiency

In this lecture

• Backtracking

• N Queens problem

• Sum of Subset problem

Let’s consider a problem

• We have a chess board

• 8x8

• How many queens can we place

• So that they can’t attack each other?

N Queens

• So how many queens can we place?

• What’s the theoretical limit?

N Queens algorithm

• Let’s implement an algorithm for solving this problem

• Since there can only be one queen per row, let it iterate through the rows

and try to place a queen at the first available spot in each row

[Figures: queens placed on the board row by row, each at the first available spot]

N Queens

• So we found a possible solution

• This one has 5 queens

• But maybe we can do better

• Let’s go back a step

[Figures: going back one step and trying an alternative placement]

Better

• We have managed to achieve a

better solution

• This time with 7 queens

• We achieved it by arriving at a

possible solution with 5 queens, and

taking a step back to try an

alternative move

Backtracking

• A technique for recursive solutions that allows us to recursively try

different solutions

• Most applicable in search algorithms and optimisation problems

• Can be applied to non-recursive solutions too, sometimes

N Queens with backtracking

• Let’s write a method that solves N Queens

• It takes a grid as input and returns a number

• It tries to add a queen to this grid

• If it can, it adds the queen to the grid and recursively runs itself on the new grid

• If it can’t, it just returns the number of queens on the grid

Backtracking

• So at each step we need to:

• Check if there are any available moves

• If there are available moves:

• Iterate through those moves

• For each move, recursively solve the N Queens problem on the resulting grid

• Select the highest result

• If there are no available moves:

• Just return the number of queens on current grid

Recursive formula

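NQueens(grid with no free spaces) = countQueens(grid)

NQueens(grid with free spaces) = max( NQueens(grid + queen on 1st free space), NQueens(grid + queen on 2nd free space), ... )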

Function NQueens(grid)
    find all free spaces on grid
    count how many queens are on grid
    if there are no free spaces
        return the number of queens
    if there are free spaces
        iterate through those spaces
            placing queen on grid
            and running NQueens on that grid
            update n whenever a recursive call
            returns a higher value
        return n
end

N Queens with backtracking

Function NQueens(grid)
    freeSpaces = findFreeSpaces(grid)
    n = countQueens(grid)
    if notEmpty(freeSpaces)
        for each freeSpace in freeSpaces
            nextGrid = addQueen(grid, freeSpace)
            nextGridQueens = NQueens(nextGrid)
            if (nextGridQueens > n)
                n = nextGridQueens
    return n
end
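The pseudocode above could be written in Python roughly as follows. This is a sketch under assumptions: the grid is represented as a frozenset of (row, column) queen positions plus a board size, which the slides do not specify, and find_free_spaces is a hypothetical helper standing in for findFreeSpaces. Because it tries every free space at every level, it is only practical for small boards.

```python
def find_free_spaces(queens, size):
    """Return every square that holds no queen and is not attacked by one."""
    free = []
    for row in range(size):
        for col in range(size):
            attacked = any(
                row == r or col == c or abs(row - r) == abs(col - c)
                for r, c in queens
            )
            if not attacked:
                free.append((row, col))
    return free


def n_queens(queens, size):
    """Largest number of non-attacking queens reachable from this grid."""
    n = len(queens)                                   # countQueens(grid)
    for space in find_free_spaces(queens, size):
        next_grid = queens | {space}                  # addQueen(grid, freeSpace)
        n = max(n, n_queens(next_grid, size))         # keep the best recursive result
    return n


print(n_queens(frozenset(), 4))
```

When the loop finds no free spaces it simply does not run, so the function falls through to returning the current queen count, which matches the base case in the pseudocode.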

Backtracking

• So instead of directly using the result of each recursive call, we

compare it to results of other recursive calls, and then select the best

one

• After the recursive call is done, the execution backtracks to the level

above and makes decisions there

Let’s look at another classic problem

• We have a set of numbers

• E.g. {6, 13, 25, 12, 71, 33, 5}

• And we want to find if a certain sum k can be achieved by adding up

some of these numbers

• E.g. if k = 51

• A subset {6, 12, 33} would satisfy this condition

Sum of subset algorithm

• Let’s implement an algorithm for this problem

• It will take an array of integers as input representing the set, and an

integer k representing the target sum

• It will produce a binary value as output:

• true if there is a subset that adds up to k

• false if there is no such subset

Recursive formula

• We need to derive a recursive formula for the sum of subset algorithm

• Input size is the length of the input array

• We can reduce the input size at each iteration by removing one of the

numbers from the array; this will represent selecting that number for

the addition

• Sum needs to be adjusted too…

Reduction

• We can reduce the problem of solving the sum of subset problem

• To solving several smaller problems where each one represents

selecting one of the numbers

• If we have set of numbers {4, 3, 5} and we aim to get sum of 8

• After selecting number 4, we now need to solve the problem

where the set is {3, 5} and the target sum is 8 – 4 = 4

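Set = {4, 3, 5}, K = 8
    select 4: Set = {3, 5}, K = 4
        select 3: Set = {5}, K = 1
            select 5: Set = {}, K = -4
        select 5: Set = {3}, K = -1
            select 3: Set = {}, K = -4
    select 3: Set = {4, 5}, K = 5
        select 4: Set = {5}, K = 1
            select 5: Set = {}, K = -4
        select 5: Set = {4}, K = 0
    select 5: Set = {3, 4}, K = 3
        select 3: Set = {4}, K = 0
        select 4: Set = {3}, K = -1
            select 3: Set = {}, K = -4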

Recursive formula

sumOfSubset(Set = *, K = 0) = true

sumOfSubset(Set = {}, K ≠ 0) = false

sumOfSubset(Set = {*}, K ≠ 0) = OR for i = 0..n of sumOfSubset(Set – set[i], K – set[i])

Pseudocode

Function sumOfSubset(set, k)
    if k = 0
        return true
    if empty(set)
        return false
    sumFound = false
    for i = 0 to length(set) - 1
        sumFound = sumFound OR sumOfSubset(set - set[i], k - set[i])
    return sumFound
end
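A possible Python rendering of this pseudocode is sketched below. It assumes the set is passed as a list of integers and uses an early return in place of the sumFound variable, which gives the same result; the name sum_of_subset is just a Python-style spelling of the function above.

```python
def sum_of_subset(numbers, k):
    """Return True if some subset of numbers adds up to k."""
    if k == 0:
        return True
    if not numbers:
        return False
    for i in range(len(numbers)):
        # Selecting numbers[i]: remove it from the set and reduce the target.
        remaining = numbers[:i] + numbers[i + 1:]
        if sum_of_subset(remaining, k - numbers[i]):
            return True        # backtrack with a positive result
    return False


print(sum_of_subset([6, 13, 25, 12, 71, 33, 5], 51))
print(sum_of_subset([4, 3, 5], 8))
```

Both calls correspond to the examples in the slides: 6 + 12 + 33 = 51, and 3 + 5 = 8.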

Backtracking

• After checking whether the sum is achievable using the set of

numbers, the sumOfSubset function passes the result back to the call

on the previous recursion layer

• That layer can then check if any of its recursive calls returned true and

return true if they did

Backtracking: general approach

• When a function makes some recursive calls, it can analyse their

results and pick the best one

• Each recursive call evaluates one decision

• And, recursively, the next possible decisions

• It can be used to find best sequences of moves, best combinations of

options, optimal paths, etc

Thank you

Week 18 – Memoisation &

Dynamic Programming

COMP5180 - Algorithms, Correctness and Efficiency

Let’s consider an example

• Fibonacci function

• f(1) = 1

• f(2) = 1

• f(n) = f(n-1) + f(n-2)

f(6)
    f(5)
        f(4)
            f(3)
                f(2) = 1
                f(1) = 1
            f(2) = 1
        f(3)
            f(2) = 1
            f(1) = 1
    f(4)
        f(3)
            f(2) = 1
            f(1) = 1
        f(2) = 1

Time complexity of Fibonacci

• f(n) = f(n-1) + f(n-2)

• T(n) = T(n-1) + T(n-2) + O(1)

• T(n-2) ≤ T(n-1), so we can bound T(n-2) by T(n-1)

• T(n) ≤ 2*T(n-1) + O(1)

Solving Fibonacci recurrence

• T(n) = 2*T(n-1) + O(1)

• r = 2

• The input shrinks by 1 at each level (subtraction rather than division by c)

• L = n

• f(n) = O(1)

• T(n) = f(n) + 2*f(n-1) + 4*f(n-2) + 8*f(n-3) + … + 2^L * f(n-L)

• Increasing recurrence

• T(n) = O(r^L) = O(2^n)
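To see this growth in practice, one option is to count the calls made by the plain recursive definition. The sketch below is an illustration only; the function name fibonacci_calls and the choice to return a (value, calls) pair are assumptions made for this example.

```python
def fibonacci_calls(n):
    """Return (f(n), number of calls made) for the plain recursive definition."""
    if n == 1 or n == 2:
        return 1, 1
    a, calls_a = fibonacci_calls(n - 1)
    b, calls_b = fibonacci_calls(n - 2)
    return a + b, calls_a + calls_b + 1


for n in (6, 10, 20):
    value, calls = fibonacci_calls(n)
    print(n, value, calls, 2 ** n)
```

The call count stays below 2^n, consistent with the O(2^n) upper bound, and it grows quickly as n increases.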

Recursive Fibonacci

• f(n) = f(n-1) + f(n-2)

• Time complexity O(2^n)

• Can we do better?

• Any ideas?

[Figure: recursion tree for f(6); f(4) is computed twice, f(3) three times and f(2) five times]

Some ideas

• Height of the tree can be big

• Big height means lots of computations

• Maybe we can reduce height?

What if we make f(3) = 2?

[Figure: recursion tree for f(6) in which every call to f(3) returns 2 immediately]

What if we make f(4) = 3?

[Figure: recursion tree for f(6) in which every call to f(4) returns 3 and every call to f(3) returns 2 immediately]

What if we make f(5) = 5?

[Figure: recursion tree for f(6) in which f(5), f(4) and f(3) return 5, 3 and 2 immediately]

Extra termination conditions

• We can add new termination conditions to allow for faster

calculations

• If-statements with pre-calculated results

• Can help reduce tree depth

Introducing new termination conditions

• Calculating f(n) for small values of n is quick

• So we need to focus on improving the calculations for large values of n

• Add periodic stopping points to limit the depth of the tree

Example

• f(n) = f(n-1) + f(n-2), f(1) = 1, f(2) = 1

• f(10) = 55, f(11) = 89

• f(20) = 6765, f(21) = 10946

• f(30) = 832040, f(31) = 1346269

• And so on
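The pre-calculated values above can be used as extra termination conditions. A minimal Python sketch, assuming the checkpoints are kept in a dictionary called KNOWN (a hypothetical name) rather than written as separate if-statements:

```python
# Pre-calculated results used as extra termination conditions.
KNOWN = {
    1: 1, 2: 1,
    10: 55, 11: 89,
    20: 6765, 21: 10946,
    30: 832040, 31: 1346269,
}


def fibonacci(n):
    """Recursive Fibonacci for n >= 1 that stops early at any known value."""
    if n in KNOWN:
        return KNOWN[n]
    return fibonacci(n - 1) + fibonacci(n - 2)


print(fibonacci(25))
```

Storing two consecutive values at each checkpoint matters: both branches f(n-1) and f(n-2) are then guaranteed to hit a stored value within about ten levels.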

Adding new termination conditions

• Advantages:

• Faster computations for large values of n

• Easy to implement

• Disadvantages:

• Limited effect

• Still O(2^n)

• Have to check all termination conditions at every call

[Figure: the full recursion tree for f(6), without any extra termination conditions]

Maybe change the recursion formula?

• f(n) = f(n-1) + f(n-2)

• We can express f(n-1) as

• f(n-2) + f(n-3)

• So f(n) = f(n-2) + f(n-3) + f(n-2)

• f(n) = 2*f(n-2) + f(n-3)

Even further?

• f(n) = 2*f(n-2) + f(n-3)

• Express f(n-2) as f(n-3) + f(n-4)

• f(n) = 3*f(n-3) + 2*f(n-4)

How far can the reduction go?

• f(n) = 3*f(n-3) + 2*f(n-4)

• f(n) = 5*f(n-4) + 3*f(n-5)

• f(n) = 8*f(n-5) + 5*f(n-6)

• f(n) = 13*f(n-6) + 8*f(n-7)

• f(n) = 21*f(n-7) + 13*f(n-8)
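As an illustration, one of these rewritten formulas can be turned into code directly. The sketch below uses f(n) = 3*f(n-3) + 2*f(n-4); the function names are hypothetical, and the four base cases f(1) to f(4) are an assumption needed so that the recursion never reaches n < 1. An iterative version is included only to check the results.

```python
def fib_iterative(n):
    """Reference implementation: f(1) = f(2) = 1, computed with a loop."""
    a, b = 1, 1
    for _ in range(n - 1):
        a, b = b, a + b
    return a


def fib_skip3(n):
    """Fibonacci via f(n) = 3*f(n-3) + 2*f(n-4), skipping recursion levels."""
    base = {1: 1, 2: 1, 3: 2, 4: 3}       # more base cases are needed now
    if n in base:
        return base[n]
    return 3 * fib_skip3(n - 3) + 2 * fib_skip3(n - 4)


print([fib_skip3(n) for n in range(1, 11)])
```

Each call now jumps three or four steps down instead of one or two, so the tree is shallower, but there are still two recursive calls per node.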

As far as we want

[Figures: recursion trees for f(n) expressed through f(n-1) and f(n-2), then f(n-2) and f(n-3), then f(n-3) and f(n-4), then f(n-4) and f(n-5)]

Further reduction

• Advantages:

• Better recursive formula

• Affects all values of n

• L gets smaller since some levels of recursion are skipped

• Disadvantages:

• Still O(2^n)

• Recursive formulas have to be added manually

The main issue

• Function f(n) is defined recursively as f(n-1) + f(n-2)

• This leads to overlaps, same results being calculated multiple times

[Figure: recursion tree for f(6); the subtrees for f(4), f(3) and f(2) appear more than once]

Solution

• Keep track of previous results

• When the same results are needed, just use old ones instead of

calculating new ones

Memoisation

• Keeping track of the results of previous function calls

• When a function is called with a new input, calculate and save the

result

• When a function is called with a previously calculated input, just

return the previously calculated result

Memoisation: general approach

• Create a data structure that can store previous results

• Preferably indexed by input

• When a function is called – search the data structure for those inputs

• If found – return the result stored in the data structure

• If not found – run the function normally

Example: fibonacci(7)

[Figure: call tree for fibonacci(7); the repeated calls to f(5), f(4) and f(3) are answered from the table of stored results]

n       1   2   3   4   5   6   7
f(n)    1   1   2   3   5   8   13

function fibonacci(n)
    if n = 1 OR n = 2
        return 1
    if previouslyCalculated[n]
        return previousResult[n]
    result = fibonacci(n-1) + fibonacci(n-2)
    previouslyCalculated[n] = true
    previousResult[n] = result
    return result
end
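In Python the same idea might look like the sketch below. It assumes a single dictionary, previous_results, in place of the two arrays previouslyCalculated and previousResult; a key being present plays the role of the "previously calculated" flag.

```python
previous_results = {}      # maps n to the stored value of f(n)


def fibonacci(n):
    """Memoised Fibonacci for n >= 1."""
    if n == 1 or n == 2:
        return 1
    if n in previous_results:              # previously calculated input
        return previous_results[n]
    result = fibonacci(n - 1) + fibonacci(n - 2)
    previous_results[n] = result           # save the new result
    return result


print(fibonacci(7))
print(fibonacci(50))
```

A dictionary lookup is a constant-time operation on average, so searching for old results stays cheap in this particular example.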

What is the complexity?

[Figure: call tree for fibonacci(7) with the table of stored results; each of f(3) to f(7) is computed only once]

Memoisation

• Advantages:

• No redundant calls

• O(n)

• Usually easy to implement

• Disadvantages:

• Storing results takes up memory

• Searching for old results can be time-consuming in big tasks

Recap

• If your recursive solution is inefficient, you can:

• Hard-code intermediate results

• Change the recursion formula

• Store previous results to avoid redundancy (Memoisation)

Thank you

Week 20 - Quicksort

COMP5180 - Algorithms, Correctness and Efficiency

In this lecture

• Quicksort

• Correctness of quicksort

• Efficiency of quicksort

• Variations of quicksort

Quicksort

• A sorting algorithm

• Published in 1961

• Has a simple recursive structure:

• Select an element (pivot)

• Partition the input around the pivot

• Quicksort the left part

• Quicksort the right part

Quicksort example

[Figure: quicksort trace on an eight-element array, showing the pivot, the partitioned parts, and the recursive calls on each part]

Quicksort algorithm overview

• Select a pivot

• Partition the array around the pivot

• Recursively sort the left part

• Recursively sort the right part

Quicksort reduction

quickSort(arr):

arr -> partition -> partitionedArr, p

partitionedArr[0-p] -> quickSort -> sortedArr[0-p]

partitionedArr[p-n] -> quickSort -> sortedArr[p-n]

sortedArr[0-p] followed by sortedArr[p-n] -> sortedArr

Correctness of Quicksort

• The recursive calls are assumed to

be correct

• Quicksort correctness depends on

the correctness of the partition

Partition

• A way to divide the array into two parts

• Around a certain element called pivot

• In such a way that all elements on the left are smaller and all elements

on the right are larger

Selecting the pivot element

• If nothing is known about the array, we can just select first one by

default

• Original implementation selected last one by default

• Ideally we want to select the median element of the array, but it’s

usually impractical to search for it

• Many varieties of quicksort exist, with different pivot selections

Partition example

i      = 0 1 2 3 4 5 6 7

arr[i] = 5 3 8 6 7 1 4 2

Correctness of Quicksort

• If partition is correct, then the Quicksort is correct

How to implement a Quicksort

• Implement a partition function that partitions the array

function partition(arr, lo, hi)
    pivot = arr[hi]
    i = lo - 1
    for j = lo -> hi - 1
        if arr[j] <= pivot then
            i = i + 1
            swap arr[i] and arr[j]
    i = i + 1
    swap arr[i] and arr[hi]
    return i
end

How to implement a Quicksort

• Implement a partition function that partitions the array

• Implement the swap function that swaps array values

function swap(arr, i, j)
    exchange = arr[i]
    arr[i] = arr[j]
    arr[j] = exchange
end

How to implement a Quicksort

• Implement a partition function that partitions the array

• Implement the swap function that swaps array values

• Implement the quicksort function

function quicksort(arr, lo, hi)
    if lo >= hi
        return
    pivot = partition(arr, lo, hi)
    quicksort(arr, lo, pivot-1)
    quicksort(arr, pivot+1, hi)
end
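Put together, the three functions could look like this in Python. This is a sketch that follows the pseudocode above, with the last element as pivot; Python's tuple assignment is used in place of a separate swap function, and the base case is written as lo >= hi so that empty ranges (for example when the pivot lands at lo) also terminate.

```python
def partition(arr, lo, hi):
    """Partition arr[lo..hi] around the pivot arr[hi]; return the pivot's final index."""
    pivot = arr[hi]
    i = lo - 1
    for j in range(lo, hi):                 # j = lo .. hi - 1
        if arr[j] <= pivot:
            i += 1
            arr[i], arr[j] = arr[j], arr[i]
    i += 1
    arr[i], arr[hi] = arr[hi], arr[i]       # move the pivot between the two parts
    return i


def quicksort(arr, lo, hi):
    """Sort arr[lo..hi] in place."""
    if lo >= hi:                            # zero or one element: nothing to do
        return
    p = partition(arr, lo, hi)
    quicksort(arr, lo, p - 1)
    quicksort(arr, p + 1, hi)


data = [5, 3, 8, 6, 7, 1, 4, 2]
quicksort(data, 0, len(data) - 1)
print(data)
```

The input is the array from the partition example slide.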

Complexity of Quicksort

• Quicksort divides the problem of sorting an array into two smaller

sorting problems

• Sizes of those problems can vary

• If the pivot ends up at index p, the sub arrays have sizes p and n – p – 1

Week 20 – Complexity classes

COMP5180 - Algorithms, Correctness and Efficiency

Final lecture

In this lecture

• Circuit satisfiability

• Decision problems

• P vs NP

• How to prove P vs NP

Circuit satisfiability

• A common problem

• Analysing a circuit

• Trying to find if a particular logical formula is satisfiable

Common logic gates

Circuit satisfiability example

Circuit Satisfiability problem

• Given a boolean circuit:

• Is there a set of inputs that makes the circuit output True?

• Or, does the circuit always output False?

Solving circuit satisfiability problem

• Easy solution: Brute force

• Just evaluate the formula for every value combination

• For n variables, there are 2^n combinations

• Evaluating the formula is usually easy, maybe around O(n)

• So you can probably solve it in O(n * 2^n)
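The brute-force approach can be sketched in a few lines of Python. The representation is an assumption made for this example: the circuit is modelled as an ordinary Python function of n boolean arguments, and brute_force_sat is a hypothetical name.

```python
from itertools import product


def brute_force_sat(circuit, n):
    """Try all 2^n input combinations; return a satisfying one, or None."""
    for inputs in product([False, True], repeat=n):
        if circuit(*inputs):
            return inputs
    return None


# Example circuit: (a AND b) AND (NOT c)
print(brute_force_sat(lambda a, b, c: (a and b) and not c, 3))

# A circuit that always outputs False: a AND (NOT a)
print(brute_force_sat(lambda a: a and not a, 1))
```

The loop body runs up to 2^n times, and each run evaluates the circuit once, which is where the O(n * 2^n) estimate comes from.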

Can we do better?

• Not known

• Nobody has actually formally proved that we can’t beat brute force

• Maybe, there is a clever algorithm that just hasn’t been discovered yet

P vs NP

• Running time of an efficient algorithm should be bounded by a

polynomial function of its input size

• T(n) = O(n^c) where n is the input size and c is some constant power

• T(n) = O(1): polynomial

• T(n) = O(n): polynomial

• T(n) = O(n^2): polynomial

• T(n) = O(n^3): polynomial

• T(n) = O(2^n): not polynomial

P vs NP

[Figure: growth of O(1), O(n), O(n^2) and O(2^n)]

Decision problems

• Decision problems are problems that can give a yes or no answer for a

particular question

• Search problems

• Path finding problems

• Satisfiability problems

• Etc.

P vs NP

• P is a class of all decision problems that can be solved in

polynomial time:

• Comparing two numbers

• Evaluating a set of conditions

• Binary search on an array

• Determining if a number is prime (shown in 2002)

• Etc.

P vs NP

• NP is a set of all decision problems for which if the answer is yes, the

corresponding solution (proof) can be verified in polynomial time

• Satisfiability problem

• Sum of subset problem

• Binary search on an array

• Determining if a number is prime
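To make "verified in polynomial time" concrete, here is a sketch of a verifier for the sum of subset problem. The certificate format (a list of distinct indices into the input array) and the name verify_subset_sum are assumptions made for this example.

```python
def verify_subset_sum(numbers, k, certificate):
    """Check a proposed solution: do the chosen positions add up to k?

    certificate is a list of distinct indices into numbers.
    The check takes time linear in the size of the input.
    """
    if len(set(certificate)) != len(certificate):
        return False                       # an element was used twice
    if any(i < 0 or i >= len(numbers) for i in certificate):
        return False                       # index outside the array
    return sum(numbers[i] for i in certificate) == k


numbers = [6, 13, 25, 12, 71, 33, 5]
print(verify_subset_sum(numbers, 51, [0, 3, 5]))   # 6 + 12 + 33
```

Finding the certificate may take exponential time with the backtracking search, but checking one that has been handed to us is cheap; that asymmetry is what the definition of NP captures.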

P vs NP

• co-NP is a set of all decision problems for which if the answer is no,

the corresponding solution (proof) can be verified in polynomial time

• Which ones are co-NP here?

• Satisfiability problem

• Sum of subset problem

• Binary search on an array

• Determining if a number is prime

P vs NP

• EXP is a set of all decision problems that can be solved in exponential

time, e.g. O(2^n)

• Satisfiability problem

• Most path-finding algorithms

• Sum of subset

• Tower of Hanoi

• All P problems

P vs NP

• Some problems can have interesting properties:

• Circuit satisfiability is probably not a P problem, it has not been

proven to be solvable in polynomial time, but has not been proven

otherwise either

• At the same time, it is definitely an NP problem, as its solution can be

evaluated in polynomial time

• Also, it has not yet been proven that it is a co-NP problem; the brute-force approach still takes

O(2^n) evaluations to make sure that a circuit is unsatisfiable

P vs NP

• P is a set of decision problems solvable in polynomial time

• NP is a set of all decision problems for which a positive solution can

be evaluated in polynomial time

• Is P = NP?

• This is the single most important unanswered question in theoretical

computer science

How to prove P vs NP

• Most researchers believe that P ≠ NP

• There are a few useful definitions

How to prove P vs NP

• P ⊆ NP

• Because we can check the solution for a polynomial problem by

computing the answer again, which can be done in polynomial time

• P ⊆ co-NP

• Because we can verify that a polynomial decision problem has no

solutions in polynomial time

• Some problems are both NP and co-NP

• All polynomial classes are contained within the EXP class

How to prove P vs NP

[Figure: diagram of the classes P, NP, co-NP and EXP, with Circuit Satisfiability marked]

Some problems can be reduced to other

problems

• Let’s consider two problems: problem A and problem B

• Let’s say it is possible to reduce the solution for problem A to solving

problem B

• E.g. A is a problem of finding the minimum of an array and B is a

problem of sorting an array

Recall the example

Reductions

• What this means is that if problem A can be reduced to problem B

• In polynomial time, i.e. the reduction itself introduces no exponential work

• Then, problem B is at least as hard as problem A

• But may be harder

• In this particular example, A is O(n) and B is O(n*log n)
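The minimum/sort example can be written out as a short Python sketch. The function names are hypothetical; Python's built-in sorted plays the role of the black-box solution to problem B.

```python
def minimum_via_sort(arr):
    """Problem A solved by reduction to problem B (sorting): O(n * log n)."""
    return sorted(arr)[0]


def minimum_direct(arr):
    """Problem A solved directly with a single scan: O(n)."""
    smallest = arr[0]
    for value in arr[1:]:
        if value < smallest:
            smallest = value
    return smallest


arr = [26, 53, 14, 87, 34]
print(minimum_via_sort(arr), minimum_direct(arr))
```

Both give the same answer, but the direct scan is cheaper: the reduction shows that sorting is at least as hard as finding the minimum, not that the two are equally hard.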

Definitions

• NP-hard is a class of problems which are at least as hard as the

hardest problems in NP

• Includes problems that are in NP but are not known to be in P:

• Traveling salesman problem

• Sum of subset problem

• Includes problems that are not in NP

• Tower of Hanoi problem

• Includes undecidable problems

NP-hard

Definitions

• NP-hard is a class of problems which are at least as hard as the

hardest problems in NP

• NP-complete is a class of decision problems which contains the

hardest problems in NP

• i.e. all NP-hard problems that are also in NP

NP-complete

Evaluating the class of a new problem

• How can we check if a problem belongs to a particular class?

Determining complexity class of a problem

• P

• Create a polynomial-time solution to the problem

• NP

• Create a polynomial-time evaluator for a positive solution

• co-NP

• Create a polynomial-time evaluator for a negative solution

What about NP-hard and NP-complete?

NP-hard

• Proof by enumeration

• Reduce every known NP-problem to the new problem

• Reduce a known NP-hard problem to the new problem

• If a known NP-hard problem can be reduced to the new problem in polynomial time, then the new problem has to be at

least NP-hard

NP-hard

NP-complete?

• Show that a problem is in NP

• Show that it’s NP-hard

• If both can be proven, the problem is NP-complete

Recap

• Problems vary in complexity

• Polynomial algorithms are efficient

• Exponential algorithms are not efficient

• Solutions for NP problems can be evaluated in polynomial time

• We don’t know if P = NP

• $1,000,000 for whoever finds out

Thank you

From last week

• Motivation for why O-notation is important

• O-notation for classifying inputs

• Objections…

• Formalising

• Examples

• Dealing with earlier objections

O(f(n)) = { g | ∃c. ∃n0. c > 0 ∧ n0 > 0 ∧ ∀n. n ≥ n0 → 0 ≤ g(n) ≤ c · f(n) }

• Manipulating and Computing with O-notation

• Alternatives to (Big) O-notation

finishing week 12 O-notation


Remember: bubble sort pseudocode

for i = 0 to N - 2
    for j = 0 to N - 2
        if (A(j) > A(j + 1))
            temp = A(j)
            A(j) = A(j + 1)
            A(j + 1) = temp
        end-if
    end-for
end-for

Annotations: the nested for-loops are handled by multiplication, the statements inside them by addition.

For the body of this for-loop, T = 1 + 1 + 1 + 1 = 4 (if we assume the time T_i for each line here = 1 and we assume the worst case scenario)

• addition: if you have a sequence of statements S_1; S_2; where the “time” needed to run statement S_i is T_i, then the time for the sequence is T_1 + T_2.

• multiplication: if you have a for-loop for(int i=0;i<N;i++) S; and the cost for a single loop iteration is T then the overall cost for the loop is N × T.

Polynomial emerges: N × N × 4 = 4N^2

(in practice, we usually ignore the constant ‘4’, and refer to bubble sort as O(N^2) - see later)

Manipulating O-notation

• to describe the O-notation characteristic of a growth function f we

often want the simplest growth function g, such that O(f)=O(g)

O(f(n)) = { g | ∃c. ∃n0. c > 0 ∧ n0 > 0 ∧ ∀n. n ≥ n0 → 0 ≤ g(n) ≤ c · f(n) }

• this involves:

• ordinary algebraic manipulation

• eliminating constant factors

• eliminating slower-growing summands

• (summands = terms summed together in the function)

Some ordinary algebraic laws (reminder)

• a^n · b^n = (a · b)^n

• (a^m)^n = a^(m·n)

• a^m · a^n = a^(m+n)

• a^(n+1) = a · a^n

• log_a(b · c) = log_a(b) + log_a(c)

• log_a(b^c) = c · log_a(b)

• log_a(x) = log_b(x) · log_a(b)

Egs. eliminating constant factors

NB The · represents multiplication

• O(3x^2) = O(x^2)

• because if g is bounded by c · 3x^2 it is also bounded by (3 · c) · x^2

• O(log_a(n)) = O(log_b(n))

• because [algebraic rule] log_a(n) = log_b(n) · log_a(b) and log_a(b) is a constant factor (hence

why we often just say log(n) in O-notation)

• similarly, O(3^(n+1)) = O(3^n)

• because [algebraic rule] 3^(n+1) = 3 · 3^n, and 3 is a constant factor

• however, O(x^(n+1)) ≠ O(x^n)

• because we can use the same algebraic rule for x^(n+1) = x · x^n but x is not a constant factor

Eliminating slower growing summands

Generally, polynomials can be reduced to the term with largest degree e.g.:

O(5n^2 + 8n) = O(n^2)

• if g ∈ O(f) then O(g + f) = O(f)

• e.g. 8n ∈ O(n^2) as 0 ≤ 8n ≤ 5 · n^2 - so O(8n + 5n^2) = O(n^2)

This works because for sufficiently large n, 5n^2 > 8n

• E.g. n = 2 (20 > 16)

Where do all of these operations come from?

program analysis!

• sequences of statements: cost is the maximum sum of the costs of all the statements that

could be executed

• E.g. cost of {stA; stB } = O(a) + O(b) = O(a + b)

• E.g. cost of { if (cond) stA; else stB; } = O(c) + max(O(a), O(b))

(where stA costs O(a), stB costs O(b) and checking the condition costs O(c))

• loops: running stA k times is k times as expensive: cost = k × O(a) = O(k × a)

• nested loops -> polynomials

• divide&conquer searches: cost is logarithmic; split n inputs into k parts of size n/k, take one part

& split into k parts of size n/k^2 etc. . . = log_k(n) cost

• exhaustive trial-and-error searches have worst-case exponential cost

• exhaustively checking all possibilities for n variables – GP (a, ar, ar^2, ar^3, …, ar^n)

• Method calls: cost of its method body (+c for passing params/results)

• for recursive methods we identify a function T defined recursively to capture the recursive method calls (or

ideally, try to calculate/guess a non-recursively-defined version of T that satisfies the recurrence equations)

(Example, bubblesort loop)

for (int i=0; i<K; i++) {
    for (int j=1; j<M; j++) {
        if (a[j]<a[j-1]) {
            int aux=a[j];
            a[j]=a[j-1];
            a[j-1]=aux;
        }
    }
}

See the recording I put up last week, or refer back to the week 9 example in the maths lecture

Variations on big-O

• O(f) ["big O"] gives a class of growth functions for which c · f is an upper

bound

• O(f) = { g | ∃c. ∃n0. c > 0 ∧ n0 > 0 ∧ ∀n. n ≥ n0 → 0 ≤ g(n) ≤ c · f(n) }

• (we already saw this)

• there are various other notations around, e.g.

• Ω(f) is the dual (c · f is a lower bound), or g ∈ Ω(f) ⟷ f ∈ O(g);

• Θ(f) has this as both upper and lower bound, i.e. Θ(f) = O(f) ∩ Ω(f)

• while O(f) provides an upper bound, there is also:

• o(f) ["little o"] for providing a strict upper bound:

• o(f) = { g | ∀c. c > 0 → ∃n0. n0 > 0 ∧ ∀n. n ≥ n0 → 0 ≤ g(n) < c · f(n) }

e.g. Big-O vs little-o:

• 2x^2 = O(x^2)

• 2x^2 ≠ o(x^2)

• 2x = o(x^2)

Final reflections: How problems increase

Growth of common functions (log is the natural logarithm):

N       log(N)   NlogN      N^2        2^N            N!
1       0.00     0.00       1          2              1
2       0.69     1.39       4          4              2
3       1.10     3.30       9          8              6
4       1.39     5.55       16         16             24
5       1.61     8.05       25         32             120
10      2.30     23.03      100        1024           3628800
50      3.91     195.60     2500       1.1259E+15     3.04141E+64
100     4.61     460.52     10000      1.26765E+30    9.3326E+157
200     5.30     1059.66    40000      1.60694E+60    > 1E+308 (overflow)
1000    6.91     6907.76    1000000    1.0715E+301    > 1E+308 (overflow)

• Lifetime of the universe is about 4E+10 years (40 billion years) = approx

1E+18 seconds. At 5 Peta (5E+15) FLOPS we get 5E+33 instructions per

universe lifetime

• With a graph of 200 nodes, an algorithm taking exactly exponential time (2^200 ≈ 1.6E+60 steps)

means we need about 3E+26 universe lifetimes to solve the problem.

Now onto graphs…
