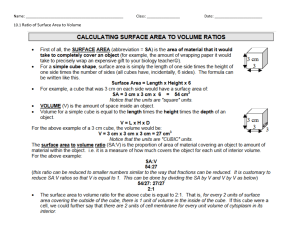

Module 7: Power

“The combination of some data and an aching desire for an answer does not ensure that a reasonable answer can be extracted from a given body of data.” - John Tukey

Review
This module will apply what we’ve learned about:
● Hypothesis testing (Module 6)
● Using Confidence Intervals (Module 5)
● Type I and Type II errors (Module 6)

7.1 Review of Type I and Type II errors
7.2 The width of a Confidence Interval
7.3 Defining Statistical Power
7.4 Power Analysis
7.5 Power in Jamovi
7.6 Why do we care about power?

Section 7.1: Review of Type I and Type II errors

Review of Type I and Type II errors
● Remember the breast cancer example from the last module. We tested $H_0: \mu_1 - \mu_2 = 0$.
● We hope that we will reject $H_0$ when $H_0$ is false, and fail to reject (FTR) $H_0$ when $H_0$ is true.
● Let’s review each type of error and how to avoid it.

Type I Errors
● If we reject $H_0$ when $H_0$ is true, we have committed a Type I error.
● In our example, we imagine the CI excluding 0, even though 0 was the true difference in means ($\mu_1 = \mu_2$, so $H_0$ is true and there is no difference).
[Figure label: $\mu_1 - \mu_2 = 0$]
● The probability of a Type I error is just $\alpha$, which we set before the test begins. In STAT 301/307, we have been using $\alpha = 0.05$.
$$\alpha = P(\text{Type I Error}) = P(\text{Reject } H_0 \mid H_0 \text{ is true})$$
(the probability of rejecting $H_0$, given that $H_0$ is true)
● If we wanted fewer Type I errors, we could make $\alpha$ smaller.

Type II Errors
● If we fail to reject $H_0$ when $H_0$ is false, we have committed a Type II error.
● In our example, if the breast cancer CI included 0 even though the true value of $\mu_1 - \mu_2$ is some number different from zero ($\mu_1 \neq \mu_2$, so $H_0$ is false and there is a difference), we would commit a Type II error.
[Figure label: $\mu_1 - \mu_2 \neq 0$]
Q: How can we make Type II errors less likely?
A: By making the confidence interval narrower. Then we are including a smaller range of “wrong” values.
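The claim that the Type I error rate equals $\alpha$ can be checked with a small simulation. This is only a sketch under stated assumptions: it uses a two-sided z-test with a known standard deviation (a simplification of the tests used in this course), and the function name, group size, and seed are hypothetical choices made for illustration.

```python
import random
from statistics import NormalDist, mean


def type_one_error_rate(n=30, sigma=1.0, alpha=0.05, trials=5000, seed=1):
    """Estimate how often a two-sided z-test rejects H0 when H0 is TRUE.

    Assumption (not from the slides): sigma is known, so a z-test is used.
    Both groups are drawn from the same population, so mu1 - mu2 = 0.
    """
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    # Standard error of the difference between two independent sample means.
    se = sigma * (2 / n) ** 0.5
    rejections = 0
    for _ in range(trials):
        group1 = [rng.gauss(0, sigma) for _ in range(n)]
        group2 = [rng.gauss(0, sigma) for _ in range(n)]
        z = (mean(group1) - mean(group2)) / se
        if abs(z) > z_crit:
            rejections += 1  # rejecting a true null: a Type I error
    return rejections / trials


rate = type_one_error_rate()
print(f"Estimated Type I error rate: {rate:.3f}")
```

The estimated rate should land close to the chosen $\alpha$; rerunning with a smaller `alpha` should give fewer false rejections.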
Type I and Type II error trade off
● We can reduce Type I errors by lowering $\alpha$, but doing this would increase Type II errors.
● This is because lowering $\alpha$ makes it harder to reject $H_0$, which is good when $H_0$ is true but bad when $H_0$ is false.
● Similarly, we can reduce Type II errors by increasing $\alpha$, but doing this would increase Type I errors. (Why is this?)

iClicker: Types of Errors
Rejecting a true null hypothesis is what type of error?
A. Type I
B. Type II
C. Type III
D. It is not an error
E. I don’t know

Section 7.2: The width of a Confidence Interval

What affects the width of a CI?
Of the four terms in this CI formula for one mean, which ones affect the width when they increase, and how?
$$\text{CI for } \mu = \bar{X} \pm (\text{critical value}) \cdot \frac{s}{\sqrt{n}}$$

| Term | Affects width? | How? |
| --- | --- | --- |
| $\bar{X}$ | no | n/a |
| critical value | yes | widens |
| $s$ | yes | widens |
| $n$ | yes | narrows |

● The width of the CI is determined by the size of the margin of error, $(\text{critical value}) \cdot \frac{s}{\sqrt{n}}$:
1. If the critical value is smaller, the CI will be narrower.
2. If the standard deviation is smaller, the CI will be narrower.
3. If the sample size is larger, the CI will be narrower.
(For more information, see Module 5 Confidence Intervals)

How can we change the width of a CI?
$$\text{CI for } \mu = \bar{X} \pm (\text{critical value}) \cdot \frac{s}{\sqrt{n}}$$
1. We can make the critical value smaller by increasing $\alpha$, but at the cost of more Type I errors. This isn’t a great option.
2. We can’t control the standard deviation. It is what it is.
3. We can make the sample size larger by just taking more measurements. This is the way to control the width of the confidence interval.

Choosing sample size for a certain CI width
We know how to use $s$, $n$, and the critical value to find the margin of error of a confidence interval. Just use the CI formula!
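As a minimal sketch of that forward calculation, the margin of error can be computed directly from the critical value, $s$, and $n$. The function names and the numbers in the usage lines are hypothetical and chosen only to make the arithmetic easy to follow.

```python
import math


def margin_of_error(critical_value, s, n):
    """Margin of error for a one-mean CI: critical value * s / sqrt(n)."""
    return critical_value * s / math.sqrt(n)


def confidence_interval(x_bar, critical_value, s, n):
    """Return (lower, upper) for x_bar +/- margin of error."""
    me = margin_of_error(critical_value, s, n)
    return (x_bar - me, x_bar + me)


# Hypothetical values: s = 10, critical value = 2.
print(margin_of_error(2, 10, 25))    # 2 * 10 / 5  = 4.0
print(margin_of_error(2, 10, 100))   # 2 * 10 / 10 = 2.0
print(confidence_interval(50, 2, 10, 100))
```

Because $n$ sits under a square root, quadrupling the sample size only halves the margin of error.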
[Diagram: $n$, critical value, $s$ → $ME$]
But how can we go the other way?
[Diagram: $ME$ → $n$?]

In other words, if I want to collect data to make a 95% confidence interval with a margin of error no bigger than $M$, how much data do I need to collect?
This question is asked before any data is collected!

Choosing a sample size for a certain CI width
● If the margin of error should be no bigger than $M$, then
$$(\text{critical value}) \cdot \frac{s}{\sqrt{n}} \le M \quad\Longrightarrow\quad n \ge \left(\frac{(\text{critical value}) \cdot s}{M}\right)^2$$
● As usual with a 95% confidence interval, we can use critical value ≈ 2.
● If $n$ satisfies the inequality on the right, then our CI will be no wider than desired.

However, there’s still a problem! What do we put in for $s$? Remember, we’re doing this before we collect any data.

A few options for $s$
● Use past studies. Have other studies been done on similar populations? Use standard deviations from those studies.
● Use reasonable guesses or expert opinions. Think about how much your variable ought to vary. Make up a distribution and find its standard deviation. Run this by someone with experience collecting data on this variable.
● Run a (small) pilot study for purposes of gathering information about $s$.
● There’s no single “correct” way to do this.

Example: 95% CI for favorability rating
Suppose we plan to give a survey to American adults about their view on intense cardiovascular exercise (running, swimming, biking). The question wording will be:
Rate your general inclination to participate in cardiovascular exercise on a daily basis:
1 = Never exercise
2 = Partake once/week
3 = Partake 2-3 times/week
4 = Partake 4-6 times/week
5 = Partake daily

We will make a confidence interval for the population mean participation rating. Suppose we want the confidence interval to be no more than 0.5 units wide. What is our desired margin of error?
If the confidence interval is to be no wider than 0.5 units, then the margin of error (MOE) can be no larger than half of that, 0.25 units.

iClicker Numeric: 95% CI for favorability rating
Enter a reasonable value for the standard deviation. There is no single correct answer here, but you should be able to justify your choice.

Example: 95% CI for favorability rating
Now calculate the required sample size, using
$$n \ge \left(\frac{(\text{critical value}) \cdot s}{M}\right)^2$$
We will use a critical value of 2. Let’s use a standard deviation of 1.6, and $M$, the desired MOE, is 0.25.
$$n \ge \left(\frac{2 \cdot 1.6}{0.25}\right)^2 = 12.8^2 = 163.84$$
So, a sample size of 164 (rounding up to a whole number) or larger will produce an approximate 95% CI no wider than 0.5 units (assuming a standard deviation of 1.6).

Section 7.3: Defining Statistical Power

Probability of a Type II error
● The probability of a Type II error is not set by the researcher. It has to be calculated based on the design of the study.
● We denote the probability of a Type II error as $\beta$:
$$\beta = P(\text{Type II Error}) = P(\text{FTR } H_0 \mid H_0 \text{ is false})$$
(the probability of failing to reject $H_0$, given that $H_0$ is false)

Power
● Statisticians usually care about the complementary probability, the probability of not committing a Type II error. We call this power.
$$\text{Power} = P(\text{Reject } H_0 \mid H_0 \text{ is false})$$
or
$$\text{Power} = 1 - P(\text{Type II Error}) = 1 - \beta$$

Calculating Power
● Power calculation is complicated and difficult, and the exact formula is beyond the scope of this class. We will not calculate power by hand.
● We WILL cover the things that affect power, the kinds of questions that are asked in power analysis, and how to use software to answer those questions.

What determines power?
Power is determined by sample size and effect size.
[Diagram: Power, Sample Size, Effect Size]

Sample Size and Power
As sample size increases, power increases.
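A rough simulation can make this relationship concrete. The sketch below is not how power is computed in this course: it assumes a two-sided z-test with a known standard deviation of 1 and a true difference in means of half a standard deviation, and the function name, trial count, and seed are hypothetical.

```python
import random
from statistics import NormalDist, mean


def simulated_power(n_per_group, true_diff, alpha=0.05, trials=2000, seed=7):
    """Fraction of simulated studies that reject H0 when H0 is FALSE.

    Assumptions (illustrative only): both groups have standard deviation 1,
    which is treated as known, so a two-sided z-test is used.
    """
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    se = (2 / n_per_group) ** 0.5  # SE of the difference in means, sigma = 1
    rejections = 0
    for _ in range(trials):
        control = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
        treated = [rng.gauss(true_diff, 1.0) for _ in range(n_per_group)]
        z = (mean(treated) - mean(control)) / se
        if abs(z) > z_crit:
            rejections += 1  # correctly rejecting a false null
    return rejections / trials


for n in (10, 50, 100):
    print(n, simulated_power(n, true_diff=0.5))
```

With the true difference held fixed, the rejection rate should climb as the per-group sample size grows.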
We’ve already seen that a larger sample size makes a confidence interval narrower, reducing the chance of a Type II error. So, increasing sample size must increase power (the chance of NOT committing a Type II error).

Effect Size and Power
But what is effect size, and how does it affect power?

“Effect size”
● Suppose we compute a difference in means between a treatment group and a control group. A large difference in means could be interpreted as a large “effect” for the treatment.
● However, a seemingly large difference in means might not seem large if the data are highly dispersed.
● Example: if we are comparing one-mile running times among elite athletes, a difference of 10 seconds may be considered large. In contrast, among casual joggers, it may be considered small.
● Effect size is a measure of importance for a calculated statistic.

Quantifying effect size: Cohen’s d
● For a two-sample test, a popular measure of effect size is Cohen’s d:
$$\delta = \frac{|\mu_1 - \mu_2|}{\sigma_p}$$
● Here $\mu_1$ and $\mu_2$ are the population means for groups one and two, and $\sigma_p$ is the combined (or “pooled”) standard deviation for both groups. Don’t worry about how $\sigma_p$ is computed.
● So, Cohen’s d tells us how far apart two means are from one another, in terms of the number of standard deviations.
● The corresponding sample statistic is:
$$d = \frac{|\bar{X}_1 - \bar{X}_2|}{s_p}$$

Example: Cohen’s d
● Suppose we want to convert a 10 second difference in mile running times into a Cohen’s d effect size. We have:
$$\delta = \frac{|\mu_1 - \mu_2|}{\sigma_p} = \frac{10}{\sigma_p}$$
● Now we need a value for the standard deviation. This could make a big difference! Compare $\sigma_p = 5$ to $\sigma_p = 30$:
$$\delta = \frac{10}{5} = 2 \quad \text{vs.} \quad \delta = \frac{10}{30} \approx 0.33$$

Visualizing Cohen’s d
[Figures: visualizations of Cohen’s d]

Effect Size and Power
As effect size increases, power increases.
Larger effects are easier to detect reliably. It’s possible that a small effect could slip through the cracks, but it’s unlikely that a large one would.
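For readers who want to compute the sample version of Cohen’s d from this section, here is a minimal sketch. The slides deliberately skip how the pooled standard deviation is computed; the formula below is the usual equal-variance pooled estimate and is an assumption added here, as are the function names and the toy data.

```python
import math
from statistics import mean, variance


def cohens_d_from_values(mean_difference, pooled_sd):
    """Cohen's d when the mean difference and pooled SD are already known."""
    return abs(mean_difference) / pooled_sd


def cohens_d(sample1, sample2):
    """Sample Cohen's d using the standard pooled standard deviation.

    Assumption (not from the slides): s_p is computed as
    sqrt(((n1 - 1) * s1^2 + (n2 - 1) * s2^2) / (n1 + n2 - 2)).
    """
    n1, n2 = len(sample1), len(sample2)
    pooled_var = ((n1 - 1) * variance(sample1)
                  + (n2 - 1) * variance(sample2)) / (n1 + n2 - 2)
    return abs(mean(sample1) - mean(sample2)) / math.sqrt(pooled_var)


# The running-time example: the same 10 second difference, two spreads.
print(cohens_d_from_values(10, 5))             # elite athletes: 2.0
print(round(cohens_d_from_values(10, 30), 2))  # casual joggers: 0.33

# Hypothetical toy data, for illustration only.
print(cohens_d([1, 2, 3, 4, 5], [3, 4, 5, 6, 7]))
```

The first two calls reproduce the running-time example: the same raw difference is a large effect when the data are tightly clustered and a small one when they are widely spread.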
Effect Size and Power
We might miss this: [figure]
But it would be hard to miss this: [figure]

Sample size and effect size
Sample size and effect size are implicitly related because they both affect power.
● If we want to detect a smaller difference, that lowers power. So, we can compensate by increasing sample size.
● If our sample size is limited (for example by budget), that lowers power. So, we will only be able to reliably detect larger differences.
● If we get a new source of funding to increase our sample size, that raises power. Consequently, we will be able to reliably detect smaller differences.

iClicker: Effect Size
Which effect size is larger?
A) [figure]
B) [figure]

Section 7.4: Power Analysis

Power Analysis Questions
Power, sample size, and effect size are linked: we can use any two of these pieces to find the third. This leads to three types of power analysis questions we can ask. Like with finding sample size for a confidence interval, we ask these questions before collecting any data.

Power Analysis #1: computing power
“If we have $n$ data points, and we want to detect a difference with effect size $\delta$, what is the power of the test?”
KNOWN: Sample Size, Effect Size. UNKNOWN: Power.

Example: Equine Cancer – Compute Power
The VTH has 20 horses with cancer whose owners have volunteered them for an experimental radiation treatment trial. They give 10 horses the treatment and leave 10 as a control and measure the tumor size for each group. They are only interested in determining if there is at least a medium difference ($\delta = 0.5$) in average tumor size between the groups. What is the power of this test?
We know the sample size (limited by horse availability) and the effect size (minimum necessary for clinical success), and want to find the power.

Power Analysis #2: computing sample size
“If we want to detect effects of size $d$ with power $p$, how many samples do we need to collect?”
KNOWN: Power, Effect Size. UNKNOWN: Sample Size.

Example: Equine Cancer – Compute Sample Size
The VTH wants to recruit horses with cancer for a trial of its experimental radiation treatment. It knows that it wants a test that can detect medium differences in tumor size ($\delta = 0.5$) fairly reliably ($\text{Power} = 0.7$). How many horses does it need to recruit?
We know the effect size (medium differences in tumor size) and the desired power (70%, decent power), and we want to find the required sample size.

Power Analysis #3: computing effect size
“If we have $n$ observations, what size effect could we detect with power $p$?”
KNOWN: Sample Size, Power. UNKNOWN: Effect Size.

Example: Equine Cancer – Compute Effect Size
The VTH has 20 horses with cancer whose owners have volunteered them for an experimental radiation treatment trial. They give 10 horses the treatment, leave 10 as a control, and measure the tumor size. What is the smallest effect size that can be detected with 70% power?
We know the sample size (limited by the number of available horses) and the desired power (70%), and we want to find the effect size that we could detect.

Section 7.5: Power in Jamovi

Power in Jamovi
● Jamovi will perform power analysis; however, we must install a new module. To do this, click the + Module button in the upper right corner. Then click ‘jamovi library’. Scroll until you see the ‘jpower’ module and click install.
● Now that ‘jpower’ is installed, go to Analyses / jpower / Independent Samples T-Test.
● Notice that we don’t need any data to do this!!
● We will always use alpha = 0.05, Relative size of group 2 to group 1 = 1, and Tails = “two-tailed.”

Power in Jamovi - Calculate values
● If we want to use this Jamovi tool to calculate power using Cohen’s d, we just need to change ‘Calculate:’ to ‘Power’.
● Example: finding power when d = 0.8 and the total sample size is n = 100.
● There are more examples like this in the Module 7 Class Example.
Note: The Power box is grayed out, which indicates that it is the value being calculated, so you cannot edit it. Also, since the total sample size is 100, you MUST enter half that amount (50) into N for group 1.

Example: Equine Cancer – Compute Power
We had $n = 20$ (10 per group), $\delta = 0.5$, and we wanted to know power.
Result: 18.5% power (that’s pretty low)

Example: Equine Cancer – Compute Sample Size
We had $\delta = 0.5$, $\text{Power} = 0.7$, and wanted to know $n$.
Result: We need at least 51 + 51 = 102 horses.

Example: Equine Cancer – Compute Effect Size
We had $n = 20$ (10 per group), $\text{Power} = 0.7$, and we wanted the effect size ($\delta$).
Result: We can only detect very large differences, $d \ge 1.17$.

iClicker Numeric Answer: Calculate Sample Size
Using the output below, what sample size is required for an effect size of 0.5 and 90% power? Enter one number.
[Figure: jpower output]

Section 7.6: Why do we care about power?

Why We Care About Power: Efficiency
Determining sample size is often a battle between two forces:
Budget: Each additional research subject costs money.
Results: Each additional research subject increases power and lowers the probability of Type II errors.
Image credits: publicdomainpictures.net; maxpixel.net (Creative Commons)

Efficiency and power
● If power is too low, we will be very likely to commit a Type II error.
● What’s the point of conducting a hypothesis test if we would have little chance of detecting a result, even if one existed?
● With low power, it is tough to know what to make of a “FTR $H_0$” result. Was it because the null hypothesis is really true, or because power is low?
● Conducting a test with low power is a waste of time and money, which is generally considered a bad thing to do.

Efficiency and Power
You may be asked something like the following questions:
● “We have a budget of $x$ dollars, so we can collect $n$ measurements. What’s the probability of a Type II error with that much data? If there’s only a small difference between the samples, would we be able to tell?”
● “The NSF needs us to have 80% power to receive funding. How much data do we need to collect?”
You may be the person in the room who knows the most statistics, so you’ll have to answer these questions.

Why We Care About Power: Publication Bias
● Some journals will only publish a paper with “statistically significant” results. This is called publication bias.
● Publication bias, when combined with low power studies, can result in overestimates of effect sizes in published papers.

Low power ⇒ “Significant” results are biased
● Lower power corresponds to wider confidence intervals.
● In this example, we assume a population mean difference of 3.
[Figure: two CIs plotted against the null hypothesized value (0) and the assumed true value (3). High power, narrow CI, zero outside CI ⇒ Reject $H_0$. Low power, wide CI, zero inside CI ⇒ FTR $H_0$.]

Low power ⇒ “Significant” results are biased
● Now consider only wide, “low power” CIs.
● A wide CI may be centered close to the true value being estimated but include zero. The estimate would be accurate, but not statistically significant.
● In order to have a “significant” result, we need to overestimate the effect.
[Figure: two wide CIs. One is centered far above the true mean difference, with zero just barely outside the CI ⇒ Reject $H_0$. The other is centered on the true mean difference, with zero inside the CI ⇒ FTR $H_0$.]
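The overestimation described above can be reproduced with a short simulation. This is a sketch under assumptions that are not in the slides: a two-sided z-test with known standard deviation, a true mean difference of 3 (matching the example), a hypothetical standard deviation of 10, and 20 subjects per group, which together give low power.

```python
import random
from statistics import NormalDist, mean


def significance_filter(true_diff=3.0, sigma=10.0, n_per_group=20,
                        alpha=0.05, trials=4000, seed=3):
    """Compare the average estimate over ALL studies with the average over
    only the studies that reached statistical significance.

    Assumptions (illustrative only): known sigma, two-sided z-test.
    """
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    se = sigma * (2 / n_per_group) ** 0.5
    all_estimates, significant_estimates = [], []
    for _ in range(trials):
        treated = [rng.gauss(true_diff, sigma) for _ in range(n_per_group)]
        control = [rng.gauss(0.0, sigma) for _ in range(n_per_group)]
        estimate = mean(treated) - mean(control)
        all_estimates.append(estimate)
        if abs(estimate / se) > z_crit:
            significant_estimates.append(estimate)  # would be "publishable"
    power = len(significant_estimates) / trials
    return mean(all_estimates), mean(significant_estimates), power


mean_all, mean_significant, power = significance_filter()
print(f"power ~ {power:.2f}")
print(f"mean of all estimates         ~ {mean_all:.2f}")
print(f"mean of significant estimates ~ {mean_significant:.2f}")
```

Averaged over every simulated study, the estimate should sit near the true difference of 3, while the average over only the significant studies should be noticeably larger, because a wide CI can exclude zero only when its center is far from zero.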
Same idea, using sampling distributions
● Left histogram: sampling distribution of Cohen’s d, when population d = 0.5 and power is low (0.27).
● Right histogram: same thing, but after removing all d statistics that do not achieve statistical significance.
● The mean of the distribution on the right is larger than the mean of the distribution on the left. So “significant” results are biased upward.

Why We Care About Power: Ethics
“The ethical statistician strives to avoid the use of excessive or inadequate numbers of research subjects by making informed recommendations for study size.”
Ethical Guidelines for Statistical Practice (2018), American Statistical Association (emphasis added)

Power and ethics
● If sample size is too small, power is low. Our research subjects will have gone through our study for nothing, since we are unlikely to get a good estimate of what we want to measure. Is this ethical if the study involves risk to the subjects? (Like a drug trial?)
● If sample size is too large, power is very high. We made a whole lot of research subjects participate when a smaller number would have been enough. Is this ethical if the study involves risk to our subjects?

Module 7 summary
● Hypothesis tests result in either rejecting $H_0$ (the null hypothesis) or failing to reject $H_0$.
● Rejecting a true null is a Type I error. Failing to reject a false null is a Type II error.
● Power is the probability that you do NOT commit a Type II error when $H_0$ is false.
● In other words, power is the probability that you reject $H_0$ when $H_0$ is false: $\text{power} = P(\text{reject } H_0 \mid H_0 \text{ is false})$
● We want power to be as high as possible.
● Power is determined by effect size and sample size:
o When effect size is larger, power is larger.
o When sample size is larger, power is larger.

Module 7 Summary (cont.)
● Power analysis involves using two of these three values to calculate the third: power, effect size, sample size.
● Low power is bad for two reasons:
o It means you’re likely to make a Type II error when $H_0$ is false.
o It means that you have to over-estimate the true effect size in order to reject $H_0$ and get “statistical significance.”
● There is a practical trade off between power and expense. Larger sample sizes give more power, but they are more expensive and could possibly be put to better use on different studies.
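To see how any two of power, effect size, and sample size pin down the third, the sketch below uses a normal (z) approximation to the two-sample test with equal group sizes. It is not the method jpower uses, which is based on the t distribution, so its answers will be close to but not identical to the Jamovi results in Section 7.5. All function names are hypothetical.

```python
import math
from statistics import NormalDist

Z = NormalDist()  # standard normal distribution


def approx_power(n_per_group, delta, alpha=0.05):
    """Approximate power of a two-sided, two-sample test (normal approximation)."""
    z_crit = Z.inv_cdf(1 - alpha / 2)
    shift = delta * math.sqrt(n_per_group / 2)
    return Z.cdf(shift - z_crit) + Z.cdf(-shift - z_crit)


def approx_n_per_group(delta, power, alpha=0.05):
    """Approximate per-group sample size (ignores the negligible opposite tail)."""
    z_crit = Z.inv_cdf(1 - alpha / 2)
    z_power = Z.inv_cdf(power)
    return math.ceil(2 * ((z_crit + z_power) / delta) ** 2)


def approx_detectable_delta(n_per_group, power, alpha=0.05):
    """Approximate smallest effect size detectable with the given power."""
    z_crit = Z.inv_cdf(1 - alpha / 2)
    z_power = Z.inv_cdf(power)
    return (z_crit + z_power) * math.sqrt(2 / n_per_group)


# The three equine cancer questions, under the normal approximation.
print(round(approx_power(10, 0.5), 3))             # question 1: power
print(approx_n_per_group(0.5, 0.7))                # question 2: n per group
print(round(approx_detectable_delta(10, 0.7), 2))  # question 3: effect size
```

Under this approximation the three answers come out to roughly 0.20 power, 50 horses per group, and a detectable effect size of about 1.11, compared with 18.5%, 51 per group, and 1.17 from jpower. The approximation is slightly optimistic because it ignores the extra uncertainty from estimating the standard deviation in small samples.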