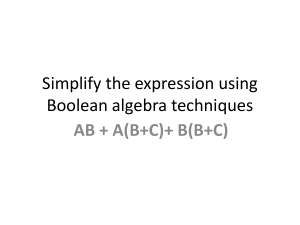

Top 10 Mathematic Ideas Every Programmer Should Master

The Ultimate Guide to Math for Programmers: 10 Concepts You Need to Know

CodeCircuit · Published in JavaScript in Plain English · 14 min read · Apr 23

Photo by Jeswin Thomas on Unsplash

As the world becomes more technologically advanced, programming has become a crucial skill that many people want to learn. However, there is a common misconception that programming doesn't require any math knowledge. That is not entirely true. Although you can program without much math, learning math makes programming easier to understand, and it unlocks the secrets of the physical universe. It isn't magic; it's math.

Developers often avoid learning math because it looks scary, but they fail to realize that math is the foundation of programming, from simple code to complicated programs such as computer graphics and neural networks. Understanding math concepts is what separates a true engineer from a novice.

We are no math geniuses. In fact, we are the opposite, which makes us uniquely qualified to teach the ten essential math concepts that every programmer needs to understand.

1. Numeral Systems

Throughout history, humans have used various methods to count things, but most of them have been based on the number ten, because we have ten fingers to count with. In base 10, a digit's value depends on its place. In the number 423, the 4 represents four times one hundred (the hundreds place), the 2 represents two times ten (the tens place), and the 3 represents three times one (the ones place).

Computers, however, work in base 2, also known as binary. Place value works the same way in base 2 as in base 10. Because there are only two symbols to work with (0 and 1), each place is worth two times the place to its right.
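To make place value concrete, here is a small illustrative Python sketch. The function name `digits_to_value` is our own and is not a standard API. It rebuilds a number from its digits in any base by multiplying each digit by its place value. The last two lines cross-check the result against Python's built-in `int(text, base)` and `bin()`.

```python
def digits_to_value(digits: list[int], base: int) -> int:
    """Sum each digit times its place value (base ** position, counting from the right)."""
    total = 0
    for position, digit in enumerate(reversed(digits)):
        total += digit * base ** position
    return total


# Base 10: 4*100 + 2*10 + 3*1
print(digits_to_value([4, 2, 3], 10))    # 423

# Base 2: 1*8 + 0*4 + 1*2 + 1*1
print(digits_to_value([1, 0, 1, 1], 2))  # 11

# Python's built-ins agree
print(int("1011", 2))                     # 11
print(bin(423))                           # 0b110100111
```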
The first few place values in base 2 are 1, 2, 4, 8, 16, 32, 64, 128, and so on; each one is double the previous one. Understanding base 2 is crucial for understanding other numeral systems, like hexadecimal, which is base 16. Hexadecimal uses the digits 0 through 9 along with the letters A through F, so A in hexadecimal represents ten in base 10. Hexadecimal is commonly used to write binary values more concisely, because each hexadecimal digit corresponds to exactly four bits.

Base64 takes this idea further. It is an encoding scheme that uses 64 symbols, so each character carries six bits. The standard alphabet is A to Z, a to z, 0 to 9, plus "+" and "/". This lets developers convey binary data, such as images, as text strings. Its applications include email attachments and encoding content for the web.

Computers work in base 2, yet people still need numbers shown in base 10 to read them comfortably. That is why the numbers in applications and interfaces are displayed in base 10. To display a number in binary, it must first be converted to base 2.

2. Boolean Algebra

Boolean algebra is a fundamental construct in computer science, and you use it almost every time you write code. It is a variant of algebra that deals with binary variables that can have only two possible values: true or false. In this section, we will look at the basic elements of Boolean algebra and how they are used in code.

Boolean algebra has three basic operators: conjunction, disjunction, and negation. The conjunction operator (AND) combines two or more Boolean values, and the result is true only if all of the inputs are true. The disjunction operator (OR) also combines two or more Boolean values, but the result is true if any of the inputs is true.
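Python spells these operators `and` and `or`. This minimal sketch builds and prints a truth table for the two operators just described. The helper name `truth_table` is our own, chosen for illustration.

```python
from itertools import product


def truth_table() -> list[tuple[bool, bool, bool, bool]]:
    """Return (a, b, a AND b, a OR b) for every combination of two Boolean inputs."""
    return [(a, b, a and b, a or b) for a, b in product([False, True], repeat=2)]


print(f"{'a':<6} {'b':<6} {'a AND b':<8} a OR b")
for a, b, conj, disj in truth_table():
    print(f"{a!s:<6} {b!s:<6} {conj!s:<8} {disj!s}")
```

AND is true only in the last row, where both inputs are true. OR is false only in the first row, where both inputs are false.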
The negation operator (NOT) reverses a Boolean value: the result is true if the input is false, and false if the input is true.

To make Boolean algebra more relatable, imagine that you are trying to find a significant other. We can describe you with two Boolean variables, "affluent" and "attractive".

- If you are both affluent AND attractive, you will most likely find a partner.
- If you are neither affluent NOR attractive, you may have to pay a model to be your significant other.
- If you are either affluent OR attractive, you can still find someone, but your options will be more limited.

We can express this logic in code with if statements, or visualize it with Venn diagrams.

A Venn diagram is a graphical representation of sets of elements. In this scenario, it can show the various combinations of Boolean values. Truth tables are another way to represent Boolean logic: a truth table lists every possible combination of input values alongside the resulting output.

3. Floating-Point Numbers in Computer Science

When working with numbers in computer science, one of the most common representations is the floating-point number. Each value can occupy only a limited amount of storage, so floating-point numbers let programmers trade precision against range. If you are unfamiliar with them, you might wonder how a computer can produce nonsensical results, such as adding 0.1 and 0.2 and getting 0.30000000000000004. The explanation starts in science, where numbers can vary enormously in magnitude depending on the scale of the measurement.
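A quick Python check reproduces the 0.1 + 0.2 surprise. It also shows three common ways to cope with it: comparing with a tolerance, rounding, and switching to a decimal type. All of these use standard-library calls (`math.isclose`, `round`, `decimal.Decimal`). Which one fits depends on the task.

```python
import math
from decimal import Decimal

total = 0.1 + 0.2
print(total)                     # 0.30000000000000004
print(total == 0.3)              # False

# Compare with a tolerance instead of exact equality.
print(math.isclose(total, 0.3))  # True

# Or round to a fixed number of decimal places.
print(round(total, 2))           # 0.3

# Or use a decimal type when exact base-10 arithmetic matters (e.g. money).
print(Decimal("0.1") + Decimal("0.2"))  # 0.3
```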
To avoid writing such numbers out in full, scientists use scientific notation. It expresses a number as a value multiplied by a power of ten, for example 6.02 × 10^23. Computers store floating-point numbers in a similar way, within a fixed number of bits. Single-precision floating-point numbers use 32 bits. Double-precision numbers, the default in programming languages like Python and JavaScript, use 64 bits. They are called "floating-point" numbers because the number of digits before or after the decimal point is not fixed; the point "floats" depending on the exponent.

Floating-point numbers are a widely used numerical format in programming. However, some values, such as 0.1 or 0.01, cannot be represented exactly in binary, just as 1/3 cannot be written exactly in decimal. As a result, operations on floating-point numbers can produce small inaccuracies known as rounding errors.

To avoid these inaccuracies, programmers must understand the limitations of floating-point numbers and adjust their code accordingly. This may mean any of the following:

- changing the order of operations
- using a data type with higher or exact decimal precision
- rounding the results, or comparing them with a tolerance instead of exact equality

Acknowledging and addressing these issues is essential for producing accurate, reliable results in computational tasks.

4. Logarithms

Logarithms are a fundamental concept in algebra, usually taught in high school math courses. To understand them, picture an actual log, as in a long piece of wood. The log starts at 16 feet long, and you keep sawing it in half until it reaches a length of 1 foot. If you plot the number of cuts needed against the starting length, the curve rises ever more slowly rather than forming a straight line.
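The sawing process can be simulated directly. In this sketch, the helper `count_halvings` is hypothetical. It halves a length until it reaches a target of 1 foot and counts the cuts, and the count matches the base-2 logarithm from Python's `math.log2`.

```python
import math


def count_halvings(length: float, target: float = 1.0) -> int:
    """Count how many times `length` must be halved to get down to `target` or below."""
    cuts = 0
    while length > target:
        length /= 2
        cuts += 1
    return cuts


print(count_halvings(16))    # 4  (16 -> 8 -> 4 -> 2 -> 1)
print(math.log2(16))         # 4.0
print(2 ** 4)                # 16, the original length

# The count grows slowly: a log 64 times longer needs only 6 more cuts.
print(count_halvings(1024))  # 10
```

For lengths that are not powers of two, the number of cuts is the logarithm rounded up.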
In computer science, this gradually flattening curve describes the behavior of several algorithms, including binary search. The intriguing part is this: if you know how many times the log was cut, raising 2 to the power of that number gives you the original length of the log. Halving 16 feet down to 1 foot takes four cuts (16, 8, 4, 2, 1), and 2^4 = 16, the original length. This is known as exponentiation.

The logarithm is the exact inverse of exponentiation. Suppose we don't know the exponent, but we do know the original length of the log. We want to find how many times it must be cut in half to reach 1 foot. Taking the base-2 logarithm of the original length gives the answer: log2(16) = 4. A logarithm with base 2 is called a binary logarithm. In mathematics more generally, the common logarithm, which uses base 10, is more prevalent.

Logarithms have applications well beyond mathematics, including science, engineering, and computer science. They help solve problems involving growth rates, sound levels, and earthquake magnitudes, among others. In finance, they are used to work with interest rates and compound growth.

5. Set Theory

Set theory may seem unfamiliar to those new to computer science or programming. In essence, however, it is the study of collections of distinct, unordered objects. This section looks at how set theory works and the role it plays in computer science and programming.

Relational databases are a prime example of set theory in action. In the relational model, a table is a set of unique rows, and tables can be combined in many ways that correspond to set operations. For instance, if you want to select the records that match in both tables, you use an inner join, which corresponds to an intersection.
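Python's built-in `set` type supports these operations directly. In this sketch, two sets of hypothetical customer IDs stand in for the join keys of two tables. The sketch shows the intersection behind an inner join, plus the union and difference that outer and left joins build on.

```python
# Hypothetical join keys: customer IDs present in two tables.
customers = {1, 2, 3, 4}
orders = {3, 4, 5}

# Inner join ~ intersection: keys present in both tables.
print(sorted(customers & orders))   # [3, 4]

# Full outer join ~ union: keys present in either table.
print(sorted(customers | orders))   # [1, 2, 3, 4, 5]

# Left join ~ intersection plus the left-only difference, i.e. the whole left set.
left_only = customers - orders
print(sorted(left_only))            # [1, 2]
print(((customers & orders) | left_only) == customers)  # True
```

A real join combines the columns of matching rows. These set operations model only which keys end up in the result.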
Conversely, if you want all the records from both tables, matched or not, you use a full outer join, which corresponds to a union. Left and right joins combine these ideas. A left join merges the intersection with the difference between the two sets. The result is a set containing every element of the left set, whether or not it has a match in the right set.

Keep in mind that these structures have a finite number of elements, which puts them within discrete math. In contrast, continuous math, such as geometry and calculus, deals with real numbers and approximations.

Set theory plays a vital role in computer science and programming because it underpins how data is organized and manipulated. With an understanding of set theory, you can reason about operations on collections of data, including filtering, combining, and aggregating them.

6. Linear Algebra

Linear algebra is a branch of mathematics that is essential for understanding computer graphics and deep neural networks. To get started, you need three key terms: scalar, vector, and matrix.

- A scalar is a single number.
- A vector is a list of numbers, comparable to a one-dimensional array.
- A matrix is a grid, or two-dimensional array, of numbers arranged in rows and columns.

What makes vectors and matrices interesting is their ability to represent points, directions, and transformations. Vectors can represent points and directions in three-dimensional space, while matrices can represent transformations applied to those vectors. Consider this: when you move a player around in a video game, the lighting, shadows, and graphics all change as if by magic. But it's not magic; it's linear algebra being computed on your GPU.

Linear transformations can get complex, so let's look at a specific scenario. Consider a 2D vector that currently sits at (2, 3), representing a single point in an image.
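In plain Python, with no libraries, moving that point from (2, 3) to (4, 6) can be sketched as one matrix-vector multiplication. The helper `mat_vec` is illustrative; in practice you would usually use a numerical library. Multiplying the point by a matrix with 2 on its diagonal scales both coordinates by 2.

```python
Matrix = list[list[float]]
Vector = list[float]


def mat_vec(matrix: Matrix, vector: Vector) -> Vector:
    """Multiply a matrix by a column vector: each output entry is one row dotted with the vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


scalar = 2                 # a scalar: a single number
point = [2, 3]             # a vector: the point (2, 3)
scale = [[scalar, 0],      # a matrix that scales x and y by 2
         [0, scalar]]

print(mat_vec(scale, point))  # [4, 6]
```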
We could apply scaling, rotation, shear, and other linear transformations to it, as well as translation. Suppose we want to move the point to (4, 6). That is a scaling by a factor of 2. We write the scaling factors as a matrix, write the point as a column vector, and multiply the two. Matrix multiplication moves the point to its desired location.

Linear algebra also shows up in other areas, such as cryptography. Deep neural networks depend on vectors to represent features, and matrix multiplication is how data propagates between the layers of the network. The math behind these transformations is relatively simple, but the computing power needed to apply it to massive amounts of data is staggering. Studying math is integral to understanding the magic that computers perform.

7. Combinatorics

Combinatorics is the art of counting and understanding patterns. It deals with the combinations and permutations of sets. It is used in a variety of applications, from app algorithms to global databases. In this section, we will explore combinatorics and its relevance in various fields.

Combinatorics is all about counting things, especially when combining sets into combinations or permutations. For instance, in an app like Tinder, you might need to count all the possible combinations of matches. That count could feed a more complex algorithm that decides what to show the end user. Similarly, if you're building a globally distributed database, you need to figure out how many database partitions you'll need around the world. Ultimately, it's all about understanding how patterns emerge.

The Fibonacci sequence is an excellent example of how combinatorics works. Each term is the sum of the two before it: F(n) = F(n-1) + F(n-2), with F(0) = 0 and F(1) = 1.
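Here is a minimal iterative sketch of that recurrence. It avoids the repeated work of a naive recursive version. The function name `fibonacci` is our own. The last line uses the standard-library `math.comb` for the kind of counting mentioned above: the number of possible pairs among a hypothetical 1,000 users.

```python
import math


def fibonacci(count: int) -> list[int]:
    """Return the first `count` Fibonacci numbers, starting from F(0) = 0."""
    sequence = []
    a, b = 0, 1
    for _ in range(count):
        sequence.append(a)
        a, b = b, a + b  # each new term is the sum of the previous two
    return sequence


print(fibonacci(10))        # [0, 1, 1, 2, 3, 5, 8, 13, 21, 34]

# Combinations: how many distinct pairs can 1,000 users form?
print(math.comb(1000, 2))   # 499500
```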
Writing a function that generates the sequence is a great exercise for grasping how combinatorics works. Engineers at Google use the same kind of logic to render tiles on tools like Google Maps. Fibonacci numbers also appear in nature, for example in the spiral of a nautilus shell and the arrangement of sunflower seeds.

Combinatorics is closely related to graph theory, the study of graphs: mathematical models of pairwise relationships between objects. Graph theory is widely used in computer science, telecommunications, and social network analysis.

8. Statistics

AI is a field that relies heavily on statistics. It deals with developing algorithms and models that learn from data and make predictions or decisions based on it. In essence, machine learning is a sophisticated way of performing statistical analysis. This section explains why statistics matters in artificial intelligence. It also outlines some fundamental statistical concepts for anyone seeking a career in AI.

When you interact with an AI chatbot like ChatGPT, the system generates its response using a statistical model. The model estimates which words are most likely to follow your prompt.

You need a clear grasp of the measures of central tendency: mean, median, and mode. Standard deviation is also crucial, because it measures how close to or far from the mean the values in a data set tend to be.

Linear and logistic regression are two important statistical methods that are commonly applied in AI. Linear regression predicts continuous values, such as a monetary gain or loss. It assumes a linear relationship between the input and output variables, and the goal is to find the line that best fits the data set.
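Python's standard `statistics` module covers the measures above. This sketch applies it to a small, made-up data set of hours studied and exam scores. The `best_fit_line` helper is our own least-squares implementation, written out so the formula is visible. On Python 3.10+, `statistics.linear_regression` gives the same slope and intercept.

```python
import statistics

# Hypothetical data: hours studied (x) and exam scores (y).
hours = [1, 2, 3, 4, 5]
scores = [52, 55, 61, 64, 68]

print(statistics.mean(scores))         # average value
print(statistics.median(scores))       # 61 (middle value)
print(statistics.mode([1, 2, 2, 3]))   # 2 (most common value)
print(statistics.pstdev(scores))       # population standard deviation (spread)


def best_fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least squares: slope = cov(x, y) / var(x); the line passes through the means."""
    x_bar, y_bar = statistics.mean(xs), statistics.mean(ys)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar


slope, intercept = best_fit_line(hours, scores)
print(slope, intercept)  # roughly 4.1 and 47.7
```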
Logistic regression, on the other hand, suits classification problems, such as distinguishing images of hot dogs from images of anything else. Here the relationship between the input and output variables is not linear. Instead, a sigmoid function maps the inputs to the probability that a Boolean outcome is true.

9. Complexity Theory

Complexity theory helps us understand how much time and memory an algorithm needs, and it uses Big O notation to express that complexity. For time complexity, Big O describes how the number of steps needed to complete a task grows with the size of the input.

- Reading a single element from an array by its index is O(1), or constant time, which is fast and simple.
- Looping over an array is O(n), where n is the array's length.
- If, on each iteration of that loop, we loop over the same array again, we get O(n²), which is far less efficient.
- More sophisticated algorithms, such as binary search, halve the search space on each iteration, which results in logarithmic time complexity, O(log n).

Understanding how complexity is measured is essential for technical interviews and a necessary consideration in general.

When studying complexity theory, it is essential to understand time complexity, which describes the number of steps an algorithm needs to complete a task. The more steps an algorithm takes, the more time it consumes. Space complexity similarly describes how much memory it uses. It is therefore crucial to know how to measure complexity in both time and memory.

Big O notation is most often used to express time complexity. It is a mathematical notation that describes an upper bound on a function's growth rate. O(1) denotes constant time complexity: the algorithm takes the same amount of time regardless of input size.
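To see these growth rates side by side, here is an illustrative Python sketch. Its helper functions are hypothetical, and they count the steps each approach takes on a sorted list of 1,024 numbers. The exact counts are specific to this example; what matters is how they scale.

```python
def linear_steps(items: list[int]) -> int:
    """O(n): one step per element."""
    steps = 0
    for _ in items:
        steps += 1
    return steps


def quadratic_steps(items: list[int]) -> int:
    """O(n^2): for each element, loop over the whole list again."""
    steps = 0
    for _ in items:
        for _ in items:
            steps += 1
    return steps


def binary_search_steps(sorted_items: list[int], target: int) -> int:
    """O(log n): halve the search range each iteration; return the iterations used."""
    low, high = 0, len(sorted_items) - 1
    steps = 0
    while low <= high:
        steps += 1
        mid = (low + high) // 2
        if sorted_items[mid] == target:
            break
        if sorted_items[mid] < target:
            low = mid + 1   # target is in the upper half
        else:
            high = mid - 1  # target is in the lower half
    return steps


data = list(range(1024))
print(data[0])                          # O(1): a single indexed read
print(linear_steps(data))               # 1024
print(quadratic_steps(data))            # 1048576
print(binary_search_steps(data, 1023))  # 11
```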
In contrast, O(n) denotes linear time complexity: the algorithm's running time grows in proportion to the input size. O(n²) denotes quadratic time complexity: the running time grows with the square of the input size, so doubling the input roughly quadruples the work. Logarithmic algorithms such as binary search grow very slowly as the input gets larger. It is vital to understand the tradeoffs between the time complexities of different algorithms so that you can select the most efficient one for a given task.

10. Graph Theory

When it comes to data structures, a graph is one of the most powerful and versatile tools available. Graphs represent complex relationships between objects or concepts and can be found everywhere, from social networks to transportation systems. In this section, we'll take a closer look at what a graph is and how it can be used in programming and data analysis.

At its most basic level, a graph is composed of two kinds of elements: nodes (also known as vertices) and edges. Nodes are the individual elements of the graph, while edges are the connections between them. For example, in a social network, a person might be a node, and the relationship between two people might be an edge.

If you love your mom, and your mom loves you back, the relationship goes both ways, which makes it an example of an undirected graph. If you love your OnlyFans girlfriend but she doesn't love you back, the relationship only goes one way, which makes it a directed graph. Edges can also be weighted, meaning that some relationships carry more weight than others.
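A common way to store a graph in code is an adjacency list, mapping each node to its neighbors. In this sketch, `build_graph` is a hypothetical helper that stores each edge's weight alongside the neighbor. It adds the reverse edge only when the graph is undirected. The node names and travel times are made up for illustration.

```python
Graph = dict[str, dict[str, float]]


def build_graph(edges: list[tuple[str, str, float]], directed: bool = False) -> Graph:
    """Build an adjacency list mapping each node to {neighbor: edge weight}."""
    graph: Graph = {}
    for source, target, weight in edges:
        graph.setdefault(source, {})[target] = weight
        graph.setdefault(target, {})        # make sure every node appears as a key
        if not directed:
            graph[target][source] = weight  # undirected: add the reverse edge too
    return graph


# Undirected: the relationship goes both ways.
family = build_graph([("you", "mom", 1)])
print("you" in family["mom"])        # True

# Directed: the relationship only goes one way.
crush = build_graph([("you", "girlfriend", 1)], directed=True)
print("you" in crush["girlfriend"])  # False

# Weighted: hypothetical travel times in minutes between places.
roads = build_graph([("home", "office", 15), ("home", "gym", 5), ("gym", "office", 7)])
print(roads["home"])                 # {'office': 15, 'gym': 5}
```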
For example, in a transportation network, some roads might be faster or more efficient than others, and those differences can be represented with weighted edges.

Determining whether a graph is cyclic or acyclic is also significant in graph theory. If you can follow edges from a node and arrive back at that same node, the graph is cyclic; otherwise, it is acyclic. This distinction matters because it affects which algorithms can be used to analyze or manipulate the graph.

As a software developer, you will often build graphs from scratch. What's even more important, however, is knowing how to traverse them. Traversal is the process of exploring a graph to locate particular nodes or relationships. Many algorithms can be used for graph traversal. One of the best known is Dijkstra's algorithm, which finds the shortest (lowest total weight) path through a graph whose edge weights are non-negative.
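Here is a compact sketch of Dijkstra's algorithm using Python's standard `heapq` priority queue. The graph reuses a made-up road network with travel times in minutes. The function returns the shortest distance from the start node to every reachable node; it does not reconstruct the paths themselves.

```python
import heapq

Graph = dict[str, dict[str, float]]


def dijkstra(graph: Graph, start: str) -> dict[str, float]:
    """Shortest distance from `start` to every reachable node (weights must be non-negative)."""
    distances = {start: 0.0}
    queue = [(0.0, start)]  # min-heap of (distance so far, node)
    while queue:
        dist, node = heapq.heappop(queue)
        if dist > distances.get(node, float("inf")):
            continue  # stale entry: a shorter path to this node was already found
        for neighbor, weight in graph.get(node, {}).items():
            new_dist = dist + weight
            if new_dist < distances.get(neighbor, float("inf")):
                distances[neighbor] = new_dist
                heapq.heappush(queue, (new_dist, neighbor))
    return distances


# Hypothetical road network; weights are travel times in minutes.
roads: Graph = {
    "home": {"office": 15, "gym": 5},
    "gym": {"home": 5, "office": 7},
    "office": {"home": 15, "gym": 7},
}
print(dijkstra(roads, "home"))  # {'home': 0.0, 'office': 12.0, 'gym': 5.0}
```

Going home, then gym, then office (5 + 7 = 12 minutes) beats the direct 15-minute road. The algorithm finds this by always expanding the closest unsettled node first.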