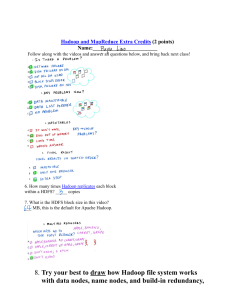

Hadoop and Hive

Contents

Hadoop

Hive and its features

Hive Architecture

Hadoop

Hadoop is an open-source framework for storing and processing Big Data in a distributed environment. Its two core modules are MapReduce and the Hadoop Distributed File System (HDFS).

• MapReduce: It is a parallel programming model for processing large amounts of structured, semi-structured, and unstructured data on large clusters of commodity hardware.

• HDFS: The Hadoop Distributed File System is the part of the Hadoop framework used to store the datasets across the cluster.

The Hadoop ecosystem also contains sub-projects (tools) such as Sqoop, Pig, and Hive that complement the core Hadoop modules.

• Sqoop: It is used to import data from relational databases (RDBMS) into HDFS and to export data from HDFS back to an RDBMS.

• Pig: It is a procedural language platform used to develop scripts for MapReduce operations.

• Hive: It is a platform used to develop SQL-type scripts to do MapReduce operations.

Introduction

• Hive is a data warehouse infrastructure tool to process structured data in Hadoop. It resides on top of Hadoop to summarize Big Data and makes querying and analyzing easy.

• The approach to structured data analysis is to store the data in tables and pass queries to analyze it.

• Hive was initially developed by Facebook; later the Apache Software Foundation took it up and developed it further as an open-source project under the name Apache Hive.


Hive is used by many companies. For example, Amazon uses it in Amazon Elastic MapReduce (EMR).

Hive is not:

• A relational database

• Designed for OnLine Transaction Processing (OLTP)

• A language for real-time queries and row-level updates

Features of Hive

• It stores the schema in a database (the Metastore) and the processed data in HDFS.

• It is designed for OnLine Analytical Processing (OLAP).

• It provides an SQL-like query language called HiveQL or HQL.

• It is familiar to SQL users, fast, and scalable.

Architecture of Hive:

The main units of the Hive architecture and their operations are:

• User Interface: Hive is data warehouse infrastructure software that enables interaction between the user and HDFS. The user interfaces that Hive supports are the Hive Web UI, the Hive command line, and Hive HDInsight (on Windows Server).

• Meta Store: Hive chooses respective database servers to store the schema or metadata of tables, databases, columns in a table, their data types, and the HDFS mapping.

• HiveQL Process Engine: HiveQL is similar to SQL and is used to query the schema information in the Metastore. It replaces the traditional approach of writing a MapReduce program: instead of writing the MapReduce program in Java, we can write a query for the MapReduce job and process it.

• Execution Engine: The execution engine processes the query and generates the same results that the equivalent MapReduce job would.

• HDFS or HBase: The Hadoop Distributed File System or HBase are the data storage techniques used to store the data in the file system.

Working of Apache Hive

Contents

Working of Hive

Hive CLI

Hive Working

The steps by which Hive processes a query are:

1. Execute Query: The Hive interface, such as the command line or Web UI, sends the query to the Driver (any database driver such as JDBC, ODBC, etc.) to execute.

2. Get Plan: The driver asks the query compiler to parse the query and check its syntax, the query plan, and the requirements of the query.

3. Get Metadata: The compiler sends a metadata request to the Metastore (any database).

4. Send Metadata: The Metastore sends the metadata as a response to the compiler.

5. Send Plan: The compiler checks the requirements and sends the plan back to the driver. At this point, the parsing and compiling of the query is complete.

6. Execute Plan: The driver sends the execution plan to the execution engine.

7. Execute Job: Internally, the job is executed as a MapReduce job. The execution engine sends the job to the JobTracker, which is on the Name node, and the JobTracker assigns the job to TaskTrackers, which are on the Data nodes. Here, the query executes the MapReduce job.

7.1. Metadata Ops: Meanwhile, during execution, the execution engine can execute metadata operations with the Metastore.

8. Fetch Result: The execution engine receives the results from the Data nodes.

9. Send Results: The execution engine sends those resulting values to the driver.

10. Send Results: The driver sends the results to the Hive interfaces.
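Step 7 says that, internally, Hive runs the compiled plan as a MapReduce job. As a rough illustration only (not the code Hive actually generates), the Python sketch below shows how a query such as SELECT destination, COUNT(*) FROM employee GROUP BY destination could be split into a map phase that emits the grouping key, a shuffle that collects values by key, and a reduce phase that counts them. The function names and sample rows are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple

# Hypothetical row layout: (eid, name, salary, destination)
Row = Tuple[int, str, str, str]


def map_phase(rows: Iterable[Row]) -> Iterator[Tuple[str, int]]:
    """Map: emit (destination, 1) for every row; destination is the GROUP BY key."""
    for _eid, _name, _salary, destination in rows:
        yield destination, 1


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle: collect all values emitted for the same key."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return dict(groups)


def reduce_phase(groups: Dict[str, List[int]]) -> Dict[str, int]:
    """Reduce: sum the 1s per key, which is what COUNT(*) returns per group."""
    return {key: sum(values) for key, values in groups.items()}


def group_by_count(rows: Iterable[Row]) -> Dict[str, int]:
    """SELECT destination, COUNT(*) FROM employee GROUP BY destination."""
    return reduce_phase(shuffle(map_phase(rows)))


if __name__ == "__main__":
    sample = [
        (1201, "Asha", "45000", "Technical manager"),
        (1202, "Ben", "40000", "Proofreader"),
        (1203, "Chen", "30000", "Technical writer"),
        (1204, "Dana", "40000", "Proofreader"),
    ]
    print(group_by_count(sample))
```

On a real cluster the map and reduce phases run as separate tasks on different nodes; chaining the three functions here only shows the order of the phases.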

Hive CLI

• DDL:

  • create table / drop table / rename table

  • alter table add column

• Browsing:

  • show tables

  • describe table

  • cat table

• Loading data

• Queries

Create Database Statement

CREATE (DATABASE|SCHEMA) [IF NOT EXISTS] <database name>;

hive> CREATE DATABASE IF NOT EXISTS userdb;

or

hive> CREATE SCHEMA userdb;

hive> SHOW DATABASES;

hive> DROP DATABASE IF EXISTS userdb;


hive> CREATE TABLE IF NOT EXISTS employee (eid int, name String, salary String, destination String)

> COMMENT 'Employee details'

> ROW FORMAT DELIMITED

> FIELDS TERMINATED BY '\t'

> LINES TERMINATED BY '\n'

> STORED AS TEXTFILE;

hive> LOAD DATA LOCAL INPATH '/home/user/sample.txt' INTO TABLE employee;
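The ROW FORMAT DELIMITED clause tells Hive how to cut the text file into rows (on '\n') and columns (on '\t') when the data is read. The Python sketch below imitates that step for the employee table, under the assumption that, roughly as in Hive's default text format, missing trailing fields and values that cannot be parsed as int become NULL (None here) and extra fields are ignored. The parse_employee_file helper and the sample lines are hypothetical, and salary stays a string because the table declares it as String.

```python
from typing import List, Optional, Tuple

# Column order taken from the CREATE TABLE employee statement.
COLUMNS = ("eid", "name", "salary", "destination")
Row = Tuple[Optional[int], Optional[str], Optional[str], Optional[str]]


def to_int(text: Optional[str]) -> Optional[int]:
    """Convert the int column; missing or unparseable values become None (NULL)."""
    if text is None:
        return None
    try:
        return int(text)
    except ValueError:
        return None


def parse_employee_file(text: str) -> List[Row]:
    """Split text into rows on newlines and into columns on tabs."""
    lines = text.split("\n")          # LINES TERMINATED BY '\n'
    if lines and lines[-1] == "":
        lines.pop()                   # a final newline just ends the last row
    rows: List[Row] = []
    for line in lines:
        fields = line.split("\t")     # FIELDS TERMINATED BY '\t'
        # Pad missing trailing columns with None and ignore any extra columns.
        padded = (fields + [None] * len(COLUMNS))[: len(COLUMNS)]
        rows.append((to_int(padded[0]), padded[1], padded[2], padded[3]))
    return rows
```

For example, parse_employee_file("1201\tAsha\t45000\tTechnical manager\n") yields one row whose eid is the integer 1201.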


Apache Pig & its Architecture

Contents

Introduction

Features of Pig

Pig Architecture

Introduction

• Apache Pig is a tool/platform used to analyze large sets of data by representing them as data flows. We can perform all the data manipulation operations in Hadoop using Apache Pig.

• Pig provides a high-level language known as Pig Latin. This language provides various operators, and programmers can also develop their own functions for reading, writing, and processing data.

• Pig was built to make programming MapReduce applications easier. Before Pig, Java was the main way to process the data stored on HDFS.


• Using the Pig Latin scripting language, operations like ETL (Extract, Transform and Load) can be performed.

• Pig is an abstraction over MapReduce. In other words, all Pig scripts are internally converted into Map and Reduce tasks to get the task done.

• Using Pig Latin, programmers can perform MapReduce tasks easily without having to type complex code in Java.

• For example, an operation that would require you to type 200 lines of code (LoC) in Java can often be done by typing as few as 10 LoC in Apache Pig.
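To make the data-flow idea concrete, the Python sketch below walks through what a short Pig Latin script would do, one operator per function. The Pig Latin statements in the comments use standard operators (LOAD, FILTER, GROUP, FOREACH ... GENERATE), but the file name, field names, and Python helpers are illustrative assumptions; real Pig would compile such a script into MapReduce jobs rather than run it in a single process.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical record layout: (visitor, url, secs)
Visit = Tuple[str, str, int]


def load(text: str) -> List[Visit]:
    # visits = LOAD 'visits.txt' USING PigStorage('\t')
    #          AS (visitor:chararray, url:chararray, secs:int);
    records: List[Visit] = []
    for line in text.splitlines():
        if line:
            visitor, url, secs = line.split("\t")
            records.append((visitor, url, int(secs)))
    return records


def filter_long(visits: List[Visit], min_secs: int = 60) -> List[Visit]:
    # long_visits = FILTER visits BY secs > 60;
    return [v for v in visits if v[2] > min_secs]


def group_by_visitor(visits: List[Visit]) -> Dict[str, List[Visit]]:
    # by_visitor = GROUP long_visits BY visitor;
    groups: Dict[str, List[Visit]] = defaultdict(list)
    for v in visits:
        groups[v[0]].append(v)
    return dict(groups)


def count_per_group(groups: Dict[str, List[Visit]]) -> Dict[str, int]:
    # counts = FOREACH by_visitor GENERATE group, COUNT(long_visits);
    return {visitor: len(vs) for visitor, vs in groups.items()}
```

Usage: count_per_group(group_by_visitor(filter_long(load(text)))) runs the four steps in the same order as the script.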


Features of Pig

• Ease of programming − Pig Latin is similar to SQL, and it is easy to write a Pig script if you are good at SQL.

• Rich set of operators − It provides many operators to perform operations like join, sort, filter, etc.

• UDFs − Pig provides the facility to create User-defined Functions in other programming languages such as Java and invoke or embed them in Pig scripts.

• Handles all kinds of data − Apache Pig analyzes all kinds of data, both structured and unstructured. It stores the results in HDFS.

Pig Architecture

Parser

Initially, the Pig scripts are handled by the Parser. It checks the syntax of the script, does type checking, and performs other miscellaneous checks. The output of the parser is a DAG (directed acyclic graph), which represents the Pig Latin statements and logical operators.

Optimizer

The logical plan (DAG) is passed to the logical optimizer, which carries out the logical optimization.

Compiler

The compiler compiles the optimized logical plan into a series of MapReduce jobs.

Execution engine

Finally, the MapReduce jobs are submitted to Hadoop in a sorted order, and they are executed on Hadoop to produce the desired results.
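The sorted order is, in effect, a dependency order: a job can only start after the jobs that produce its input have finished. Assuming the compiled plan is represented as a DAG of jobs, a topological sort yields one valid submission order. The sketch below uses Python's graphlib module (Python 3.9 or later); the job names are hypothetical.

```python
from graphlib import CycleError, TopologicalSorter
from typing import Dict, List, Set

# Hypothetical compiled plan: each job maps to the jobs whose output it reads.
PLAN: Dict[str, Set[str]] = {
    "load_filter_users": set(),
    "load_filter_visits": set(),
    "join_users_visits": {"load_filter_users", "load_filter_visits"},
    "aggregate_by_user": {"join_users_visits"},
}


def submission_order(plan: Dict[str, Set[str]]) -> List[str]:
    """Return a job order in which every job follows all of its inputs."""
    return list(TopologicalSorter(plan).static_order())


def is_acyclic(plan: Dict[str, Set[str]]) -> bool:
    """A logical plan must be a DAG; a cycle makes any ordering impossible."""
    try:
        submission_order(plan)
        return True
    except CycleError:
        return False
```

The two independent load-and-filter jobs may come out in either order; only the dependencies are guaranteed.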


Apache HBase

Contents

Introduction

Column Families

HBase Architecture

Introduction

• HBase is an Apache open-source project whose goal is to provide storage for Hadoop distributed computing.

• HBase is a distributed, column-oriented data store built on top of HDFS.

• Data is logically organized into tables, rows, and columns.

• It provides real-time read/write access to data in a Hadoop cluster.

• HBase is horizontally scalable.


HBase: Keys and Column Families

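In HBase, each row is identified by a row key, columns are grouped into column families that are declared when the table is created, and a cell is addressed by row key, family:qualifier, and timestamp, so it can hold several versions. The in-memory Python class below is only a sketch of that addressing scheme; it does not talk to HBase, and the class name, the counter used as a timestamp, and the version limit are assumptions.

```python
import itertools
from typing import Dict, Iterator, List, Optional, Tuple


class ToyHBaseTable:
    """In-memory model of HBase's data model; not an HBase client.

    A cell is addressed by (row key, "family:qualifier", timestamp).
    Column families are fixed when the table is created; qualifiers are not.
    """

    def __init__(self, families: List[str], max_versions: int = 3):
        self.families = set(families)
        self.max_versions = max_versions
        # row key -> column -> [(timestamp, value), ...], newest first
        self.rows: Dict[str, Dict[str, List[Tuple[int, str]]]] = {}
        self._clock = itertools.count(1)  # stand-in for real cell timestamps

    def put(self, row: str, column: str, value: str) -> None:
        family = column.split(":", 1)[0]
        if family not in self.families:
            raise KeyError(f"unknown column family: {family}")
        versions = self.rows.setdefault(row, {}).setdefault(column, [])
        versions.insert(0, (next(self._clock), value))
        del versions[self.max_versions:]  # drop versions beyond the limit

    def get(self, row: str, column: str) -> Optional[str]:
        """Return the newest version of a cell, or None if the cell is empty."""
        versions = self.rows.get(row, {}).get(column)
        return versions[0][1] if versions else None

    def scan(self) -> Iterator[Tuple[str, Dict[str, str]]]:
        """Yield rows in row-key order with the newest value of each column.

        HBase compares raw bytes; for ASCII keys that is the same order.
        """
        for key in sorted(self.rows):
            yield key, {col: vs[0][1] for col, vs in self.rows[key].items()}
```

Note that new qualifiers such as "stats:visits" can be added freely, while an unknown family raises an error, mirroring the fixed-family design.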

• The HBaseMaster

  • One master

• The HRegionServer

  • Many region servers

• The HBase client

• Region: A subset of a table’s rows, like horizontal range partitioning. The partitioning into regions is done automatically.

• RegionServer (many slaves): Manages data regions and serves data for reads and writes (using a log).

• Master: Responsible for coordinating the slaves. It assigns regions, detects failures, and performs admin functions.
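Because each region holds a contiguous, sorted range of row keys, finding the region that serves a given row is a search over the regions' start keys. The Python sketch below does that with the bisect module. The region boundaries and server names are hypothetical; a real HBase client looks this information up in the hbase:meta table and caches it.

```python
import bisect
from typing import List, Tuple

# Hypothetical regions of one table, sorted by start key. A region serves every
# row key k with start_key <= k < next region's start_key; the first region
# starts at the empty key, so no row key falls before it.
REGIONS: List[Tuple[str, str]] = [
    ("", "regionserver-1"),
    ("g", "regionserver-2"),
    ("p", "regionserver-3"),
]


def locate(row_key: str, regions: List[Tuple[str, str]] = REGIONS) -> str:
    """Return the RegionServer hosting the region whose key range contains row_key."""
    start_keys = [start for start, _server in regions]
    index = bisect.bisect_right(start_keys, row_key) - 1
    return regions[index][1]
```

When a region grows too large and is split, the table simply gains another start key, and the same lookup keeps working.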


MapReduce

Contents

Introduction

Example

Introduction

• MapReduce is the heart of Hadoop®. It is the programming paradigm that allows for massive scalability across thousands of servers in a Hadoop cluster.

• MapReduce is a programming model and an associated implementation for processing and generating large data sets with a parallel, distributed algorithm on a cluster.

• The MapReduce software framework provides an abstraction layer over the data flow and control flow of user programs, and hides the implementation of all data flow steps such as data partitioning, mapping, synchronization, communication, and scheduling.


• JobTracker provides connectivity between Hadoop and your application.

• JobTracker splits the job into smaller tasks (“Map”) and sends them to the TaskTracker process on each node.

• JobTracker is a master component, responsible for the overall execution of a MapReduce job. There is a single JobTracker per Hadoop cluster.

• TaskTracker reports back to the JobTracker node on job progress, sends data (“Reduce”), or requests new jobs.
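The classic illustration of this model is counting words. The Python sketch below runs the steps in a single process: each input split is mapped independently, the intermediate (word, 1) pairs are partitioned among a fixed number of reducers by hashing the key, and each reducer sums the counts it receives. The number of reducers and the use of zlib.crc32 as the partitioning hash are assumptions made to keep the sketch deterministic; Hadoop's default partitioner hashes the key with its own hash function instead.

```python
import zlib
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_task(split: str) -> Iterator[Tuple[str, int]]:
    """Map: emit (word, 1) for every word in one input split."""
    for word in split.lower().split():
        yield word, 1


def partition(key: str, num_reducers: int) -> int:
    """Pick a reducer for a key; every occurrence of a key goes to the same one."""
    return zlib.crc32(key.encode("utf-8")) % num_reducers


def reduce_task(pairs: Iterable[Tuple[str, int]]) -> Dict[str, int]:
    """Reduce: sum the counts for each word routed to this reducer."""
    totals: Dict[str, int] = defaultdict(int)
    for word, count in pairs:
        totals[word] += count
    return dict(totals)


def word_count(splits: List[str], num_reducers: int = 2) -> Dict[str, int]:
    # Shuffle: route every intermediate pair to its reducer's bucket.
    buckets: List[List[Tuple[str, int]]] = [[] for _ in range(num_reducers)]
    for split in splits:                    # each split would be its own map task
        for word, one in map_task(split):
            buckets[partition(word, num_reducers)].append((word, one))
    result: Dict[str, int] = {}
    for bucket in buckets:                  # each bucket would be its own reduce task
        result.update(reduce_task(bucket))  # keys never overlap across buckets
    return result
```

Because the partitioner sends every copy of a word to the same bucket, the reducers can work independently and their outputs never conflict.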


HDFS

Contents

Introduction

Architecture

Introduction

• HDFS is a Java-based file system that provides distributed, redundant, scalable, and reliable data storage.

• It was designed to span large clusters of commodity servers.

• HDFS has demonstrated production scalability of up to 200 PB of storage and a single cluster of 4500 servers, supporting close to a billion files and blocks.

• HDFS has two essential daemons called NameNode and DataNode.


HDFS Architecture


NameNode:

• HDFS breaks large files into smaller blocks, and the NameNode keeps track of all the blocks. The NameNode stores metadata for the files, like the directory structure of a typical file system. The NameNode uses rack_id to identify DataNode racks.

• A rack is a collection of DataNodes in a cluster. The NameNode handles the creation of more replica blocks, when necessary, after a DataNode failure.

DataNode:

• There are multiple DataNodes per cluster. Each DataNode notifies the NameNode of the blocks it holds, and sends messages (heartbeats) to the NameNode to confirm the connectivity between the DataNode and the NameNode.
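The NameNode's block bookkeeping can be sketched in a few lines of Python. The example below splits a file size into fixed-size blocks and places three replicas of each block in the spirit of HDFS's default rack-aware policy: one replica on one rack and the other two on different nodes of a second rack. The 128 MB block size and replication factor of 3 are common defaults (the dfs.blocksize and dfs.replication settings), but the cluster layout and the round-robin choice of nodes are simplifying assumptions, not the NameNode's actual algorithm. The last function shows which blocks would need new replicas after a DataNode fails.

```python
from typing import Dict, List, Tuple

BLOCK_SIZE = 128 * 1024 * 1024  # bytes; a common dfs.blocksize default


def block_sizes(file_size: int, block_size: int = BLOCK_SIZE) -> List[int]:
    """Split a file into full blocks plus one smaller final block if needed."""
    full, rest = divmod(file_size, block_size)
    return [block_size] * full + ([rest] if rest else [])


def place_replicas(num_blocks: int, racks: Dict[str, List[str]]) -> List[Tuple[str, str, str]]:
    """Place 3 replicas per block: one on a first rack, two on another rack.

    racks maps a rack_id to its DataNodes. The sketch assumes at least two
    racks with at least two DataNodes each, and rotates racks and nodes
    round-robin across blocks (a simplification of the NameNode's choice).
    """
    rack_ids = sorted(racks)
    placements = []
    for b in range(num_blocks):
        first_rack = racks[rack_ids[b % len(rack_ids)]]
        second_rack = racks[rack_ids[(b + 1) % len(rack_ids)]]
        first = first_rack[b % len(first_rack)]
        second = second_rack[b % len(second_rack)]
        third = second_rack[(b + 1) % len(second_rack)]  # same rack, different node
        placements.append((first, second, third))
    return placements


def under_replicated(placements: List[Tuple[str, str, str]], failed_node: str) -> List[int]:
    """Indices of blocks that lost a replica when failed_node went down."""
    return [i for i, nodes in enumerate(placements) if failed_node in nodes]
```

Spreading the replicas over two racks means a whole rack can fail without losing any block, while keeping two replicas on one rack limits cross-rack traffic.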


Contents

R Studio GUI

Steps or Procedure

Introduction

R is free, open-source software and a programming language developed in 1995 at the University of Auckland as an environment for statistical computing and graphics.

RStudio allows the user to run R in a more user-friendly environment. RStudio is an integrated development environment (IDE) that allows you to interact with R more readily. It is open-source (i.e. free) and available at http://www.rstudio.com/


RStudio screen


RStudio Windows and Description

Workspace tab (1)

The workspace tab stores any object, value, function, or anything else you create during your R session. In the example below, if you click on the dotted squares you can see the data on a screen to the left.


Workspace tab (2)

Here is another example of how the workspace looks when more objects are added. Notice that the data frame house.pets is formed from different individual values or vectors. Click on the dotted square to look at the dataset in spreadsheet form.

History Tab

The history tab keeps a record of all previous commands. It helps when testing and running processes. Here you can either save the whole list or select the commands you want and send them to an R script to keep track of your work.

In this example, we select all and click on the “To Source” icon; a window on the left will open with the list of commands. Make sure to save the ‘untitled1’ file as an *.R script.

Changing the working directory


If you have different projects, you can change the working directory for that session as shown above, or you can type:

# Shows the working directory (wd)

getwd()

# Changes the wd

setwd("C:/myfolder/data")

For more information, see the following document:

http://dss.princeton.edu/training/RStata.pdf

Setting a default working directory


Every time you open RStudio, it goes to a default directory. You can change the default to a folder where you keep your data files, so you do not have to change it every time. In the menu, go to Tools > Options.

R script (1)

The usual RStudio screen has four windows:

1. Console.

2. Workspace and history.

3. Files, plots, packages and help.

4. The R script(s) and data view.

The R script is where you keep a record of your work. For Stata users this is like the do-file, for SPSS users it is like the syntax file, and for SAS users it is like the SAS program.

R script (2)

To create a new R script you can either go to File -> New -> R Script, click on the icon with the “+” sign and select “R Script”, or simply press Ctrl+Shift+N. Make sure to save the script.

Here you can type R commands and run them. Just leave the cursor anywhere on the line where the command is and press Ctrl-R or click on the ‘Run’ icon above. Output will appear in the console below.

Packages tab

The Packages tab shows the list of add-ons included in the installation of RStudio. If a package is checked, it is loaded into R; if not, any command related to that package won’t work, and you will need to select it. You can also install other add-ons by clicking on the ‘Install Packages’ icon. Another way to activate a package is by typing, for example, library(foreign). This will automatically check the foreign package (it helps bring in data from proprietary formats like Stata, SAS, or SPSS).

Installing a package


We are going to install the package rgl (useful to plot 3D images). It does not come with the original R install. Click on “Install Packages”, write the name in the pop-up window, and click on “Install”.

Plots tab (1)

The Plots tab will display the graphs. The one shown here is created by the command on line 7 in the script above.

See the next slide to see what happens when you have more than one graph.

Plots tab (2)

Here there is a second graph (see line 11 above). If you want to see the first one, click on the left-arrow icon.

Plots tab (3) – Graphs export

To export the graph, click on “Export”, where you can save the file as an image (PNG, JPG, etc.) or as a PDF; these options are useful when you only want to share the graph or use it in a LaTeX document. Probably the easiest way to export a graph is to copy it to the clipboard and then paste it directly into your Word document.

When copying to the clipboard, make sure to select ‘Metafile’, then paste the graph into your Word document.

3D graphs

3D graphs will display on a separate screen (see line 15 above). You won’t be able to save it, but after moving it around, once you find the angle you want, you can take a screenshot and paste it into your Word document.


Resources

For R related tutorials and/or resources see the following links:

http://dss.princeton.edu/training/

http://libguides.princeton.edu/dss

DATA TYPES

Generally, while programming in any programming language, you need to use various variables to store various information. Variables are nothing but reserved memory locations to store values. This means that when you create a variable, you reserve some space in memory.

You may like to store information of various data types like character, wide character, integer, floating point, double floating point, Boolean, etc. Based on the data type of a variable, the operating system allocates memory and decides what can be stored in the reserved memory.

In contrast to other programming languages like C and Java, in R variables are not declared as some data type. The variables are assigned R-objects, and the data type of the R-object becomes the data type of the variable. There are many types of R-objects. The frequently used ones are:

Vectors

Lists

Matrices

Arrays

Factors

Data Frames

The simplest of these objects is the vector object, and there are six data types of these atomic vectors, also termed the six classes of vectors. The other R-objects are built upon the atomic vectors.

The six data types of atomic vectors are listed below, each with example values and code that verifies its class.

Logical (example values: TRUE, FALSE)

v <- TRUE
print(class(v))

It produces the following result −

[1] "logical"

Numeric (example values: 12.3, 5, 999)

v <- 23.5
print(class(v))

It produces the following result −

[1] "numeric"

Integer (example values: 2L, 34L, 0L)

v <- 2L
print(class(v))

It produces the following result −

[1] "integer"

Complex (example value: 3 + 2i)

v <- 2+5i
print(class(v))

It produces the following result −

[1] "complex"

Character (example values: 'a', "good", "TRUE", '23.4')

v <- "TRUE"
print(class(v))

It produces the following result −

[1] "character"

Raw (example: "Hello" is stored as 48 65 6c 6c 6f)

v <- charToRaw("Hello")
print(class(v))

It produces the following result −

[1] "raw"

Vectors

When you want to create a vector with more than one element, use the c() function, which combines the elements into a vector.

# Create a vector.

apple <- c('red','green',"yellow")

print(apple)

# Get the class of the vector.

print(class(apple))

When we execute the above code, it produces the following result −

[1] "red"    "green"  "yellow"
[1] "character"

Lists

A list is an R-object that can contain elements of many different types, such as vectors, functions, and even another list.

# Create a list.

list1 <- list(c(2,5,3),21.3,sin)

# Print the list.

print(list1)

When we execute the above code, it produces the following result −

[[1]]

[1] 2 5 3

[[2]]

[1] 21.3

[[3]]

function (x)  .Primitive("sin")

Matrices

A matrix is a two-dimensional rectangular data set. It can be created using a vector input to the matrix function.

# Create a matrix.

M = matrix(c('a','a','b','c','b','a'), nrow = 2, ncol = 3, byrow = TRUE)

print(M)

When we execute the above code, it produces the following result −

[,1] [,2] [,3]

[1,] "a" "a" "b"

[2,] "c" "b" "a"

Arrays

While matrices are confined to two dimensions, arrays can have any number of dimensions. The array function takes a dim attribute, which creates the required number of dimensions. In the example below, we create an array with two elements, each of which is a 3x3 matrix.

# Create an array.

a <- array(c('green','yellow'),dim = c(3,3,2))

print(a)

When we execute the above code, it produces the following result −

, , 1

     [,1]     [,2]     [,3]
[1,] "green"  "yellow" "green"
[2,] "yellow" "green"  "yellow"
[3,] "green"  "yellow" "green"

, , 2

     [,1]     [,2]     [,3]
[1,] "yellow" "green"  "yellow"
[2,] "green"  "yellow" "green"
[3,] "yellow" "green"  "yellow"

Factors

Factors are R-objects created from a vector. A factor stores the vector along with the distinct values of its elements as labels. The labels are always character, irrespective of whether the input vector is numeric, character, Boolean, etc. Factors are useful in statistical modelling.

Factors are created using the factor() function. The nlevels() function gives the count of levels.

# Create a vector.
apple_colors <- c('green','green','yellow','red','red','red','green')

# Create a factor object.

factor_apple <- factor(apple_colors)

# Print the factor.

print(factor_apple)

print(nlevels(factor_apple))

When we execute the above code, it produces the following result −

[1] green  green  yellow red    red    red    green
Levels: green red yellow
[1] 3

Data Frames

Data frames are tabular data objects. Unlike a matrix, in a data frame each column can contain a different mode of data: the first column can be numeric, the second column character, and the third column logical. A data frame is a list of vectors of equal length.

Data Frames are created using the data.frame() function.

# Create the data frame.

BMI <- data.frame(

gender = c("Male", "Male","Female"),

height = c(152, 171.5, 165),

weight = c(81,93, 78),

Age = c(42,38,26)

)

print(BMI)

When we execute the above code, it produces the following result −

  gender height weight Age
1   Male  152.0     81  42
2   Male  171.5     93  38
3 Female  165.0     78  26

Descriptive Analysis

In descriptive analysis, we describe our data with the help of various representative methods such as charts, graphs, tables, and spreadsheets (e.g. Excel files). We summarize the data and present it in a meaningful way so that it can be easily understood. It is most often performed on small data sets, and its findings about the current data can help us anticipate future trends. Some measures that are used to describe a data set are measures of central tendency and measures of variability or dispersion.

Measure of central tendency

It represents the whole data set by a single value, giving the location of its central point. There are three main measures of central tendency:

Mean

Median

Mode

Measure of variability

A measure of variability describes the spread of the data, i.e. how widely the data is distributed. The most common variability measures are:

Range

Variance

Standard Deviation

The R functions for these analyses are:

• Mean: mean()

• Median: median()

• Mode: mfv() [modeest package]

• Range of values (minimum and maximum): range()

• Minimum: min()

• Maximum: max()

• Variance: var()

• Standard deviation: sd()

• Sample quantiles: quantile()

• Interquartile range: IQR()

• Generic summary function: summary()
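For readers who also work in Python, most of the R functions above have close counterparts in the standard statistics module. The sketch below is an illustrative translation, not part of the R workflow: statistics.variance() and statistics.stdev() use the n - 1 denominator like R's var() and sd(), and statistics.quantiles() with method="inclusive" interpolates like R's default quantile() (type 7). Unlike mfv(), statistics.mode() returns only the first mode when there is a tie.

```python
import statistics
from typing import Dict, Sequence


def describe(x: Sequence[float]) -> Dict[str, object]:
    """Python counterparts of the R functions listed above (needs len(x) >= 2)."""
    q1, q2, q3 = statistics.quantiles(x, n=4, method="inclusive")  # like quantile()
    return {
        "mean": statistics.mean(x),        # mean()
        "median": statistics.median(x),    # median()
        "mode": statistics.mode(x),        # mfv() [modeest], first mode only
        "range": (min(x), max(x)),         # range(), min(), max()
        "var": statistics.variance(x),     # var(), n - 1 denominator
        "sd": statistics.stdev(x),         # sd(), n - 1 denominator
        "quartiles": (q1, q2, q3),         # quantile() at 25%, 50%, 75%
        "iqr": q3 - q1,                    # IQR()
    }
```

For the sample [2, 4, 4, 4, 5, 5, 7, 9], the quartiles come out as 4.0, 4.5, and 5.5, the same values R's quantile() gives for that vector.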

stat.desc() function

The function stat.desc() [in the pastecs package] provides other useful statistics, including:

the median

the mean

the standard error on the mean (SE.mean)

the confidence interval of the mean (CI.mean) at the p level (default is 0.95)

the variance (var)

the standard deviation (std.dev)

and the variation coefficient (coef.var) defined as the standard deviation divided by the mean.
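The extra statistics that stat.desc() reports follow directly from the definitions above. The Python sketch computes them for a numeric sample. It assumes CI.mean is the half-width of the interval (the amount added to and subtracted from the mean), and it takes the Student t critical value as an argument because the Python standard library has no t distribution. For 8 values at p = 0.95, that value is about 2.365 (7 degrees of freedom).

```python
import math
import statistics
from typing import Dict, Sequence


def stat_desc_extras(x: Sequence[float], t_crit: float) -> Dict[str, float]:
    """SE.mean, CI.mean and coef.var for a sample x (needs len(x) >= 2).

    t_crit is the two-sided Student t critical value with len(x) - 1 degrees
    of freedom for the chosen p level; it is passed in because the standard
    library has no t distribution.
    """
    mean = statistics.mean(x)
    sd = statistics.stdev(x)             # n - 1 denominator, like R's sd()
    se_mean = sd / math.sqrt(len(x))     # standard error of the mean
    return {
        "SE.mean": se_mean,
        "CI.mean": t_crit * se_mean,     # assumed half-width: mean +/- CI.mean
        "coef.var": sd / mean,           # standard deviation divided by the mean
    }
```

For example, stat_desc_extras([2, 4, 4, 4, 5, 5, 7, 9], t_crit=2.365) gives a standard error of about 0.756 and a coefficient of variation of about 0.428.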

In the video lecture, these functions are discussed with the help of examples and an implementation.

Explorative Analysis in R

Exploratory data analysis (EDA) is not based on a set of rules or formulas. Exploratory data analysis refers to the critical process of performing initial investigations on data so as to discover patterns, spot anomalies, test hypotheses, and check assumptions with the help of summary statistics and graphical representations.

Exploratory data analysis is an approach to analyzing data sets to summarize their main characteristics, often using statistical graphics and other data visualization methods.

EDA is an iterative cycle. You:

1. Generate questions about your data.

2. Search for answers by visualising, transforming, and modelling your data.

3. Use what you learn to refine your questions and/or generate new questions.

Variation

Variation is the tendency of the values of a variable to change from measurement to measurement. You can see variation easily in real life; if you measure any continuous variable twice, you will get two different results. This is true even if you measure quantities that are constant, like the speed of light. Each of your measurements will include a small amount of error that varies from measurement to measurement. Categorical variables can also vary if you measure across different subjects (e.g. the eye colors of different people), or different times (e.g. the energy levels of an electron at different moments).

Every variable has its own pattern of variation, which can reveal interesting information. The best way to understand that pattern is to visualise the distribution of the variable’s values.

Visualising distributions

How you visualise the distribution of a variable will depend on whether the variable is categorical or continuous. A variable is categorical if it can only take one of a small set of values. In R, categorical variables are usually saved as factors or character vectors. To examine the distribution of a categorical variable, use a bar chart:

library(ggplot2)

ggplot(data = diamonds) + geom_bar(mapping = aes(x = cut))

The height of the bars displays how many observations occurred with each x value. You can compute these values manually with dplyr::count():

dplyr::count(diamonds, cut)

A variable is continuous if it can take any of an infinite set of ordered values. Numbers and date-times are two examples of continuous variables. To examine the distribution of a continuous variable, use a histogram:

ggplot(data = diamonds) +

geom_histogram(mapping = aes(x = carat), binwidth = 0.5)

A histogram divides the x-axis into equally spaced bins and then uses the height of a bar to display the number of observations that fall in each bin. In the graph above, the tallest bar shows that almost 30,000 observations have a carat value between 0.25 and 0.75, which are the left and right edges of the bar.
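The binning step behind a histogram can be written out directly. The Python sketch below counts values into equal-width bins whose edges sit at boundary + k × binwidth. By default it puts the edges at odd multiples of half the bin width (0.25, 0.75, ... for binwidth = 0.5), which matches the bar edges described above, but the boundary default, the half-open [left, right) bins, and the sample values are assumptions rather than ggplot2's exact rules. Empty bins are simply left out of the result.

```python
import math
from collections import Counter
from typing import Dict, Iterable, Optional, Tuple


def histogram_counts(
    values: Iterable[float], binwidth: float, boundary: Optional[float] = None
) -> Dict[Tuple[float, float], int]:
    """Count values into equal-width bins [left, left + binwidth).

    Bin edges are boundary + k * binwidth for integer k; by default the
    boundary is binwidth / 2 (an assumption chosen to match the example).
    """
    if boundary is None:
        boundary = binwidth / 2
    counts: Counter = Counter()
    for v in values:
        k = math.floor((v - boundary) / binwidth)  # index of the bin holding v
        left = boundary + k * binwidth
        # Rounding keeps floating-point noise out of the bin labels.
        counts[(round(left, 10), round(left + binwidth, 10))] += 1
    return dict(sorted(counts.items()))
```

Calling it with a smaller binwidth on the same values shows how the choice of bin width changes the pattern you see, which is the point made in the next paragraph.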

You can set the width of the intervals in a histogram with the binwidth argument, which is measured in the units of the x variable. You should always explore a variety of binwidths when working with histograms, as different binwidths can reveal different patterns. For example, here is how the graph above looks when we zoom into just the diamonds with a size of less than three carats and choose a smaller binwidth.

library(dplyr)

smaller <- diamonds %>%
  filter(carat < 3)

ggplot(data = smaller, mapping = aes(x = carat)) +

geom_histogram(binwidth = 0.1)

Unusual values

Outliers are observations that are unusual: data points that don’t seem to fit the pattern. Sometimes outliers are data entry errors; other times they suggest important new science. When you have a lot of data, outliers are sometimes difficult to see in a histogram. For example, take the distribution of the y variable from the diamonds dataset. The only evidence of outliers is the unusually wide limits on the x-axis.

ggplot(diamonds) + geom_histogram(mapping = aes(x = y), binwidth = 0.5)
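When outliers are hard to spot in a histogram, a numeric rule can flag candidates for a closer look. One common rule of thumb, not used in the text above, is Tukey's fences: values more than 1.5 × IQR below the first quartile or above the third quartile, the same cut-off boxplots use to draw individual points. The Python sketch below uses the type-7 quartiles from the statistics module; the sample widths are hypothetical, and every flagged value still needs checking, since it may be a data entry error or a genuine observation.

```python
import statistics
from typing import List, Sequence, Tuple


def tukey_fences(x: Sequence[float], k: float = 1.5) -> Tuple[float, float]:
    """Return the (lower, upper) fences Q1 - k*IQR and Q3 + k*IQR."""
    q1, _q2, q3 = statistics.quantiles(x, n=4, method="inclusive")
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr


def flag_outliers(x: Sequence[float], k: float = 1.5) -> List[float]:
    """Values outside the fences, in their original order."""
    lower, upper = tukey_fences(x, k)
    return [v for v in x if v < lower or v > upper]
```

For the hypothetical widths [5.8, 0.0, 6.0, 5.7, 58.9, 6.1, 5.9], the fences are roughly 5.3 and 6.5, so 0.0 and 58.9 are flagged.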

The implementation can be explored further in the video lecture.